1977

Joint-ISTA-Net: A model-based deep learning network for multi-contrast CS-MRI reconstruction

1 Center for Biomedical Imaging Research, Department of Biomedical Engineering, School of Medicine, Tsinghua University, Beijing, China; 2 MR Clinical Science, Philips Healthcare, Suzhou, China

Synopsis

Compressed sensing (CS) is often applied to accelerate the acquisition of multi-contrast MR images, but at high undersampling rates CS-MRI suffers from non-negligible reconstruction error. Here we propose Joint-ISTA-Net, an unrolled iterative deep-learning model that further exploits the group sparsity of multi-contrast images to reduce reconstruction error and aliasing at high acceleration factors. Our method adds a joint shrinkage-thresholding model to ISTA-Net to better reconstruct multi-contrast image pairs. Experiments on the public IXI dataset show the effectiveness of the proposed strategy.

Introduction

Because multi-contrast MR images provide rich diagnostic information, a typical MRI protocol includes several sequences that image the same anatomy with different contrasts, which is time-consuming. To accelerate MRI acquisition, compressed sensing (CS), which acquires undersampled k-space data and reconstructs images by exploiting image sparsity, is commonly applied[1]. Recently, deep learning has been introduced to CS-MRI reconstruction, and model-driven networks such as ISTA-Net[2] and ADMM-Net[3] have shown great success, giving better reconstructions of highly undersampled k-space data than traditional methods.

Moreover, multi-contrast MR images of the same anatomy share similar structures, so their sparse representations tend to be jointly sparse. Reconstructing undersampled multi-contrast images by exploiting this group sparsity has been shown to outperform reconstructing each contrast individually[4,5,6]. Inspired by this, we extend ISTA-Net by introducing a joint shrinkage-thresholding model that exploits group sparsity, and propose Joint-ISTA-Net.

Theory

Traditionally, compressed sensing reconstruction is formulated as an optimization problem, usually written in the unconstrained (regularized) form

$$ \underset m{\text{min}}\left\|Am-y\right\|_2^2+{\lambda\left\|\psi m\right\|}_1 $$

where $$$A$$$ denotes the encoding matrix, $$$y$$$ the acquired undersampled k-space data, $$$\psi$$$ a sparsifying transform such as the wavelet transform or total variation, $$$\lambda$$$ the regularization weight, and $$$m$$$ the image to be reconstructed. In ISTA-Net, a general nonlinear transform $$$G$$$ with learnable parameters replaces the fixed sparse transform, changing the model into

$$ \underset m{\text{min}}\left\|Am-y\right\|_2^2+{\lambda\left\|G\left(m\right)\right\|}_1 $$
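For intuition, the classical problem with a fixed transform is solved by ISTA, which alternates a gradient step on the data term with soft thresholding; ISTA-Net unrolls iterations of this form, with learned transforms around the thresholding. The following is a minimal NumPy sketch, assuming $$$\psi$$$ is the identity (sparsity in the image itself) and a small explicit real matrix $$$A$$$ in place of an undersampled Fourier operator. The function names `soft_threshold`, `ista` and `objective` are illustrative, not from the original work.

```python
import numpy as np


def soft_threshold(x, theta):
    """Elementwise soft thresholding sign(x) * max(|x| - theta, 0); also valid for complex x."""
    mag = np.abs(x)
    return x * (np.maximum(mag - theta, 0.0) / np.maximum(mag, 1e-12))


def ista(A, y, lam, n_iter=500):
    """Classical ISTA for min_m ||A m - y||_2^2 + lam * ||m||_1 (psi assumed to be the identity)."""
    # rho = 1 / sigma_max(A)^2 absorbs the factor 2 of the gradient; this is step 1/L
    # for the Lipschitz constant L = 2 * sigma_max(A)^2, so the objective never increases.
    rho = 1.0 / np.linalg.norm(A, 2) ** 2
    m = np.zeros(A.shape[1], dtype=np.result_type(A, y))
    for _ in range(n_iter):
        r = m - rho * (A.conj().T @ (A @ m - y))  # gradient step, cf. r = m - rho A^T (A m - y)
        m = soft_threshold(r, lam * rho / 2.0)    # proximal step for lam * ||m||_1
    return m


def objective(A, y, m, lam):
    """Value of ||A m - y||_2^2 + lam * ||m||_1."""
    return float(np.linalg.norm(A @ m - y) ** 2 + lam * np.sum(np.abs(m)))


# Toy usage: a 5-sparse vector observed through 40 random measurements.
rng = np.random.default_rng(0)
A = rng.standard_normal((40, 100)) / np.sqrt(40)
m_true = np.zeros(100)
m_true[[3, 17, 42, 71, 90]] = [1.0, -2.0, 1.5, 0.5, -1.0]
y = A @ m_true
m_hat = ista(A, y, lam=0.01)
```

In Joint-ISTA-Net the fixed step size and threshold above become learnable per iteration, and the identity transform becomes the learned pair $$$G$$$, $$$\widetilde G$$$.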

Here we introduce the group sparsity concept of traditional multi-contrast CS[5,6] into the model above. For multi-contrast images $$$m^{(i)}$$$, where $$$i$$$ indexes the contrast, the group sparsity penalty with sparse transform $$$\psi$$$ is

$$G_{SP}\left(m\right)=\lambda{\left\|\sqrt{\sum_i\left(\psi m^{(i)}\right)^2}\right\|}_1$$
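A group-sparsity penalty of this kind takes, at each position, the ℓ2 norm of the transform coefficients across contrasts and then sums these magnitudes (ℓ1) over positions, the same structure written out for $$$G$$$ in the joint term further below. The sketch compares it with penalizing each contrast separately, assuming a hypothetical 1-D finite-difference operator as a stand-in for $$$\psi$$$ and toy two-contrast signals. Because $$$\sqrt{a^2+b^2}\le|a|+|b|$$$, edges located at the same position in both contrasts cost less under the joint penalty, while misaligned edges gain nothing; this is why group sparsity favors shared structure.

```python
import numpy as np


def finite_difference(m):
    """Hypothetical stand-in for psi: first-order differences along the last axis."""
    return np.diff(m, axis=-1)


def separate_l1(coeffs):
    """sum_i ||psi m^(i)||_1: each contrast penalised on its own (contrasts on axis 0)."""
    return float(np.sum(np.abs(coeffs)))


def group_l21(coeffs):
    """|| sqrt(sum_i (psi m^(i))^2) ||_1: l2 norm across contrasts, then l1 sum over positions."""
    return float(np.sum(np.sqrt(np.sum(np.abs(coeffs) ** 2, axis=0))))


# Two 1-D "contrasts" whose intensity edge sits at the same position ...
shared = np.stack([finite_difference(np.array([0.0, 0.0, 1.0, 1.0])),
                   finite_difference(np.array([0.0, 0.0, 2.0, 2.0]))])
# ... and two whose edges sit at different positions.
misaligned = np.stack([finite_difference(np.array([0.0, 0.0, 1.0, 1.0])),
                       finite_difference(np.array([0.0, 2.0, 2.0, 2.0]))])

print(separate_l1(shared), group_l21(shared))          # 3.0 and sqrt(5), about 2.236
print(separate_l1(misaligned), group_l21(misaligned))  # 3.0 3.0
```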

Replacing the sparse transform $$$\psi$$$ with the nonlinear transform $$$G$$$, the reconstruction model becomes

$$ \underset m{\text{min}}\left\|Am-y\right\|_2^2+{\lambda\left\|G\left(m\right)\right\|}_1+G_{SP}\left(m\right) $$

which, written out explicitly, is

$$ \underset m{\text{min}}\left\|Am-y\right\|_2^2+{\lambda\left\|G\left(m\right)\right\|}_1+\lambda{\left\|\sqrt{\sum_i\left(G\left(m^{(i)}\right)\right)^2}\right\|}_1 $$

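Following ISTA-Net, this problem is solved by unrolling iterations of the iterative shrinkage-thresholding algorithm (ISTA). For contrast $$$i$$$, iteration $$$n$$$ consists of a gradient step on the data-consistency term followed by a shrinkage step that combines individual and joint soft thresholding: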

$$ r^{\left(n+1,i\right)}=m^{(n,i)}-\rho^{(n,i)}A^T\left(Am^{(n,i)}-y\right) $$

$$ m^{(n+1,i)}=\widetilde G\left(\left(1-\mu^{(n,i)}\right)\mathrm{soft}\left(G\left(r^{(n+1,i)}\right),\theta^{(n,i)}\right)+\mu^{(n,i)}\,\mathrm{soft}\left(\sqrt{\sum_j\left(G\left(r^{(n+1,j)}\right)\right)^2},\theta_J^{(n)}\right)\right) $$

where $$$n$$$ is the iteration index, $$$i$$$ indexes the contrast, and $$$\mathrm{soft}(\cdot,\theta)$$$ denotes soft thresholding with threshold $$$\theta$$$. The forward transform $$$G$$$, the backward transform $$$\widetilde G$$$, the step sizes $$$\rho^{(n,i)}$$$, the soft thresholds $$$\theta^{(n,i)}$$$, the joint soft threshold $$$\theta_J^{(n)}$$$ and the weights $$$\mu^{(n,i)}$$$ are all learned during training.
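One iteration of the update above can be sketched in NumPy with fixed, hand-picked parameters instead of learned ones. Several assumptions are made here: $$$G$$$ and $$$\widetilde G$$$ default to the identity (in Joint-ISTA-Net they are learned networks), each contrast may have its own encoding matrix so that different acceleration factors are possible, and the joint term is read as group soft thresholding, i.e. the pixelwise magnitude across contrasts is shrunk by $$$\theta_J$$$ and every contrast is rescaled by the same factor, so the output still has one value per contrast. All names (`joint_ista_step`, `joint_soft`, etc.) are hypothetical.

```python
import numpy as np


def identity(x):
    return x


def soft(x, theta):
    """Elementwise soft thresholding; theta may broadcast (e.g. one value per contrast)."""
    mag = np.abs(x)
    return x * (np.maximum(mag - theta, 0.0) / np.maximum(mag, 1e-12))


def joint_soft(coeffs, theta_j):
    """Group soft thresholding across contrasts (axis 0).

    Assumption: soft(sqrt(sum_j G(r^(j))^2), theta_J) is interpreted as shrinking the
    pixelwise l2 magnitude shared by all contrasts and rescaling each contrast by it.
    """
    mag = np.sqrt(np.sum(np.abs(coeffs) ** 2, axis=0, keepdims=True))
    return coeffs * (np.maximum(mag - theta_j, 0.0) / np.maximum(mag, 1e-12))


def joint_ista_step(m, A, y, rho, theta, theta_j, mu, G=identity, G_tilde=identity):
    """One unrolled iteration for C contrasts.

    m: (C, N) images, A: (C, M, N) per-contrast encoding matrices, y: (C, M) k-space data,
    rho, theta, mu: (C,) per-contrast parameters, theta_j: scalar joint threshold.
    """
    residual = np.einsum("cmn,cn->cm", A, m) - y                          # A m - y per contrast
    r = m - rho[:, None] * np.einsum("cmn,cm->cn", A.conj(), residual)   # gradient step
    g = G(r)
    individual = soft(g, theta[:, None])   # per-contrast shrinkage
    joint = joint_soft(g, theta_j)         # shrinkage coupled across contrasts
    return G_tilde((1.0 - mu[:, None]) * individual + mu[:, None] * joint)


# Toy usage: two fully sampled contrasts (A = identity), starting from zero images.
A = np.stack([np.eye(2), np.eye(2)])
y = np.array([[3.0, 0.0], [4.0, 0.5]])
m_next = joint_ista_step(np.zeros((2, 2)), A, y, rho=np.ones(2), theta=np.ones(2),
                         theta_j=1.0, mu=np.full(2, 0.5))
```

With $$$\mu=0$$$ the step reduces to per-contrast ISTA-Net-style shrinkage; increasing $$$\mu$$$ shifts weight toward the coupled term, which keeps coefficients that are large in any contrast at that position and suppresses those that are small in all of them.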

Method

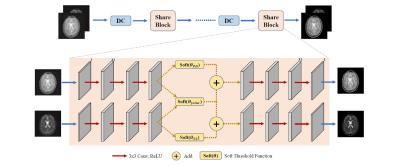

A Joint-ISTA-Net is designed to learn the parameters above; its structure is shown in Fig. 1. The network is trained on the public IXI dataset[7], with T2- and PD-weighted images used jointly as the multi-contrast dataset for training and testing. For training, 1878 pairs of fully sampled 2D multi-contrast brain slices from 15 subjects are undersampled with a 2D variable-density Poisson disk sampling mask. The trained network is tested on 650 pairs of 2D multi-contrast slices from 5 subjects, and peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) are used to evaluate reconstruction quality.

For comparison, we also implement ISTA-Net trained on each contrast separately, and two deep learning multi-contrast CS-MRI reconstruction methods: the Deep Information Sharing Network (DISN)[8] and X-Net, a U-Net-based multi-contrast reconstruction model[9]. In addition, the traditional multi-contrast CS-MRI reconstruction method FCSA-MT[5] is tested. ISTA-Net and Joint-ISTA-Net are trained with a combination of multi-scale structural similarity (MSSIM) and $$$L_1$$$ as the loss function:

$$LOSS=\alpha\left(1-MSSIM(t,x)\right)+(1-\alpha){\left\|t-x\right\|}_1$$

where $$$t$$$ denotes the target image, $$$x$$$ the reconstructed image, and $$$\alpha=0.84$$$. The other networks are trained with the loss functions proposed in their original references[8,9].
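The weighting between the two loss terms can be illustrated with a short NumPy sketch. It makes two simplifying assumptions: the similarity term enters as 1 − SSIM so that lower is better, and a single-scale SSIM computed from global image statistics (no sliding window, no multiple scales) stands in for MS-SSIM. The $$$L_1$$$ term is averaged over pixels rather than summed. Function names are hypothetical.

```python
import numpy as np


def global_ssim(t, x, data_range=1.0):
    """Single-scale SSIM from global image statistics (no sliding window).

    A simplified stand-in for MS-SSIM, which evaluates windowed SSIM at several scales.
    """
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_t, mu_x = t.mean(), x.mean()
    var_t = np.mean((t - mu_t) ** 2)
    var_x = np.mean((x - mu_x) ** 2)
    cov = np.mean((t - mu_t) * (x - mu_x))
    return ((2 * mu_t * mu_x + c1) * (2 * cov + c2)) / (
        (mu_t**2 + mu_x**2 + c1) * (var_t + var_x + c2))


def mixed_loss(t, x, alpha=0.84, data_range=1.0):
    """alpha * (1 - SSIM) + (1 - alpha) * mean |t - x|."""
    ssim_term = 1.0 - global_ssim(t, x, data_range)
    l1_term = np.mean(np.abs(t - x))
    return alpha * ssim_term + (1.0 - alpha) * l1_term


# Toy usage: a random "target" and a noisy "reconstruction" in [0, 1].
rng = np.random.default_rng(0)
target = rng.random((64, 64))
noisy = np.clip(target + 0.05 * rng.standard_normal((64, 64)), 0.0, 1.0)
loss_value = mixed_loss(target, noisy)
```

The SSIM-type term is sensitive to local structure and contrast, while the $$$L_1$$$ term anchors absolute intensities; with $$$\alpha=0.84$$$ most of the weight is on the structural term.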

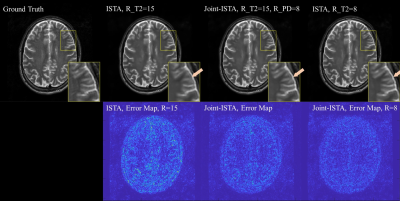

Furthermore, an additional experiment takes T2-weighted images at a high acceleration factor (R=15) and PD-weighted images at a low acceleration factor (R=8) as input, to test whether, with Joint-ISTA-Net, a contrast acquired at a low acceleration factor can improve the reconstruction of another contrast acquired at a high acceleration factor. We expect that, with the help of the other contrast, highly undersampled images can be reconstructed with quality similar to images acquired at a low acceleration factor, which would improve efficiency in clinical practice.
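Retrospective undersampling at different acceleration factors per contrast can be sketched as follows. Note that this is not a Poisson-disk generator: as a simpler substitute it draws random k-space points with a density that decays polynomially away from the centre, keeps a fully sampled central block, and samples close to $$$1/R$$$ of all points. The function name, the `power` and `calib` parameters, and the 256×256 matrix size are assumptions for illustration.

```python
import numpy as np


def variable_density_mask(shape, R, power=3.0, calib=16, seed=0):
    """Random 2-D k-space mask (centred, i.e. for fftshift-ed k-space) keeping ~1/R of points.

    Density decays polynomially with distance from the k-space centre and a calib x calib
    central block is always sampled. This is not a Poisson-disk pattern.
    """
    rng = np.random.default_rng(seed)
    ny, nx = shape
    ky, kx = np.meshgrid(np.linspace(-1.0, 1.0, ny), np.linspace(-1.0, 1.0, nx), indexing="ij")
    density = np.clip(1.0 - np.sqrt(kx**2 + ky**2) / np.sqrt(2.0), 0.0, None) ** power

    mask = np.zeros(shape, dtype=bool)
    cy, cx, h = ny // 2, nx // 2, calib // 2
    mask[cy - h:cy + h, cx - h:cx + h] = True  # fully sampled centre

    n_extra = int(round(ny * nx / R)) - int(mask.sum())
    if n_extra < 0:
        raise ValueError("calibration region alone exceeds the 1/R sampling budget")
    p = np.where(mask, 0.0, density).ravel()
    p /= p.sum()
    idx = rng.choice(p.size, size=n_extra, replace=False, p=p)  # draw remaining points by density
    mask.flat[idx] = True
    return mask


mask_t2 = variable_density_mask((256, 256), R=15, seed=1)  # highly undersampled T2w
mask_pd = variable_density_mask((256, 256), R=8, seed=2)   # moderately undersampled PD
```

A mask built this way would be applied to centred k-space, for example `np.fft.fftshift(np.fft.fft2(image)) * mask_t2`.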

Results

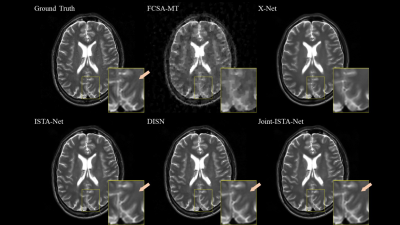

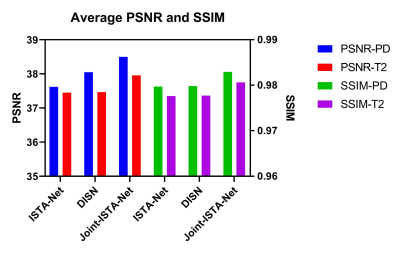

Figure 2 shows the results, with zoomed-in regions, of FCSA-MT, X-Net, DISN, ISTA-Net and Joint-ISTA-Net on the test dataset. Both training and test data are undersampled with the same Poisson disk sampling mask at acceleration factor R=10. Among these methods, Joint-ISTA-Net shows smaller reconstruction errors and sharper edges. Figure 3 shows the average PSNR and SSIM of DISN, ISTA-Net and Joint-ISTA-Net, demonstrating that the proposed Joint-ISTA-Net outperforms the other two methods.

To evaluate joint reconstruction of a slightly undersampled image with a highly undersampled one, a T2-weighted image at R=15 is reconstructed with Joint-ISTA-Net together with a PD-weighted image of the same anatomy at R=8. Figure 4 shows that the joint reconstruction has better quality than the single-contrast ISTA-Net reconstruction and differs little from the slightly undersampled result (T2w, R=8).
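The PSNR values summarized above can be computed as $$$10\log_{10}(\text{peak}^2/\text{MSE})$$$. The following minimal NumPy sketch assumes magnitude images and takes the peak as the dynamic range of the target image; the abstract does not state its exact normalization, so this choice is an assumption. Library routines such as `skimage.metrics.peak_signal_noise_ratio` and `skimage.metrics.structural_similarity` in scikit-image are common alternatives for PSNR and SSIM.

```python
import numpy as np


def psnr(target, recon, data_range=None):
    """Peak signal-to-noise ratio in dB between two magnitude images."""
    target = np.asarray(target, dtype=float)
    recon = np.asarray(recon, dtype=float)
    if data_range is None:
        # Assumption: the peak is the dynamic range of the target image.
        data_range = target.max() - target.min()
    mse = np.mean((target - recon) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range**2 / mse))


# Toy usage: a uniform error of 0.1 on an image with unit dynamic range gives 20 dB.
t = np.zeros((4, 4))
t[0, 0] = 1.0
value = psnr(t, t + 0.1)
```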

Discussion and Conclusion

In this work, we develop Joint-ISTA-Net, a deep learning CS-MRI reconstruction model that exploits the group sparsity of multi-contrast MR images to improve reconstruction. Experiments show that it achieves higher PSNR and SSIM than DISN and single-contrast ISTA-Net. We also show that Joint-ISTA-Net can improve the reconstruction of a contrast acquired at a high acceleration factor by using a different contrast acquired at a relatively low acceleration factor. Thus, protocols that acquire one contrast at a low acceleration factor and the other contrasts at high acceleration factors could be used to shorten acquisition time.
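The potential time saving of such a protocol can be estimated with simple arithmetic. The sketch below assumes that scan time is proportional to the number of acquired k-space samples and that both contrasts have the same fully sampled acquisition time; neither assumption is stated in the abstract. Under these assumptions, acquiring T2w at R=15 and PD at R=8 needs 23/30 of the data required when both contrasts are acquired at R=8, i.e. roughly 23% less.

```python
from fractions import Fraction


def sampled_fraction(accelerations):
    """Total k-space fraction acquired when each contrast is sampled at 1/R of its points."""
    return sum(Fraction(1, r) for r in accelerations)


mixed = sampled_fraction([15, 8])   # T2w at R=15 plus PD at R=8
uniform = sampled_fraction([8, 8])  # both contrasts at R=8
relative = mixed / uniform
print(relative)                     # 23/30
```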

References

1. Lustig, Michael, David Donoho, and John M. Pauly. "Sparse MRI: The application of compressed sensing for rapid MR imaging." Magnetic Resonance in Medicine 58.6 (2007): 1182-1195.

2. Zhang, Jian, and Bernard Ghanem. "ISTA-Net: Interpretable optimization-inspired deep network for image compressive sensing." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018.

3. Sun, Jian, Huibin Li, and Zongben Xu. "Deep ADMM-Net for compressive sensing MRI." Advances in Neural Information Processing Systems. 2016.

4. Ji, Shihao, Ya Xue, and Lawrence Carin. "Bayesian compressive sensing." IEEE Transactions on Signal Processing 56.6 (2008): 2346-2356.

5. Huang, Junzhou, Chen Chen, and Leon Axel. "Fast multi-contrast MRI reconstruction." Magnetic Resonance Imaging 32.10 (2014): 1344-1352.

6. Kopanoglu, Emre, et al. "Simultaneous use of individual and joint regularization terms in compressive sensing: Joint reconstruction of multi-channel multi-contrast MRI acquisitions." NMR in Biomedicine 33.4 (2020): e4247.

7. IXI Dataset. http://brain-development.org/ixi-dataset/

8. Sun, Liyan, et al. "A deep information sharing network for multi-contrast compressed sensing MRI reconstruction." IEEE Transactions on Image Processing 28.12 (2019): 6141-6153.

9. Do, Won-Joon, et al. "Reconstruction of multicontrast MR images through deep learning." Medical Physics 47.3 (2020): 983-997.

Figures