1959

Deep image reconstruction for MRI using unregistered measurement pairs without ground truth

1 Department of Computer Science and Engineering, Washington University in St. Louis, St. Louis, MO, United States, 2 Mallinckrodt Institute of Radiology, Washington University in St. Louis, St. Louis, MO, United States, 3 Department of Electrical and Systems Engineering, Washington University in St. Louis, St. Louis, MO, United States

Synopsis

One of the key limitations in conventional deep learning-based MR image reconstruction is the need for registered pairs of training images, where fully sampled ground truth images are required as the target. We address this limitation by proposing a novel registration-augmented image reconstruction method that trains a CNN by directly mapping pairs of unregistered and undersampled MR measurements. The proposed method is validated on a single-coil MRI data set by training a model directly on pairs of undersampled measurements from images that have undergone nonrigid deformations.

INTRODUCTION

Reconstructing a high-quality image from undersampled k-space measurements is an important task in MRI research [1]. Deep learning (DL) has gained popularity in addressing this problem. A widely used strategy trains a convolutional neural network (CNN) to learn a mapping from zero-filled images to the corresponding ground truth [2,3]. Despite its success, this strategy requires a large number of fully sampled training samples, which are often difficult to collect. To remove the dependence on ground truth during training, a recent method called Noise2Noise (N2N) [4] trains a CNN by mapping pairs of registered noisy and undersampled measurements of the same subject.

However, because subject motion during acquisition causes structural deformations, obtaining such registered pairs is difficult in practice. To address this issue, we propose a novel unsupervised deep registration-augmented reconstruction method (U-Dream). The novelty of our work is two-fold: (a) inspired by N2N, U-Dream learns directly from undersampled and noisy measurements without the need for high-quality ground truth images; (b) U-Dream simultaneously addresses the problems of registration and reconstruction by integrating two separate CNN modules that are trained jointly using pairs of unregistered and undersampled measurements.

METHODS

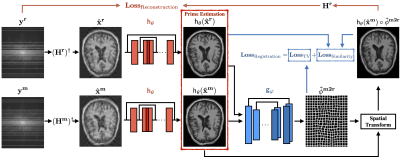

As illustrated in Figure 1, the proposed framework consists of two separate CNN modules: a reconstruction module and a registration module. The reconstruction module is trained to reconstruct clean images given two uncalibrated (unregistered) zero-filled images as input. Inspired by a recent DL solution to the image registration problem [5], the registration module is trained to generate a motion field characterizing a directional mapping from the coordinates of one image to those of the other. We then implement a differentiable warping operator as a Spatial Transformer Network to warp one of the two reconstructions and obtain a calibrated image with respect to the other reconstruction. The loss function of the registration module penalizes the mismatch between the warped reconstruction and its reference, and also imposes a total variation prior on the predicted motion field. By transforming the warped reconstruction back to k-space, the reconstruction module is trained to minimize the difference between the calibrated image and the raw k-space measurement of its reference.

All experiments were conducted on fully sampled T1-weighted MR images from an open-access data set (OASIS-3 [6]). We performed retrospective Cartesian undersampling to simulate a practical single-coil acquisition scenario. We set the sampling rate to 25% of the original k-space data and added white Gaussian noise corresponding to an input SNR of 40 dB. Synthetic registration fields with various spatial frequencies and amplitudes were also generated [7] to represent nonrigid deformations between different measurements of the same subject. The three pre-defined generation parameters were the number of points randomly selected in an initial vector field (2000), the range of random values assigned to those points (-10 to 10), and the standard deviation of the smoothing Gaussian kernel for the vector field (10).
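
The data simulation described above can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions, not the code used in the experiments: all function names are hypothetical, the fully sampled low-frequency band (`center_fraction`), the SNR definition (ratio of signal to noise norms over the sampled k-space entries), the periodic FFT-based Gaussian smoothing, and the bilinear warp with clamped edges are choices made here for illustration.

```python
import numpy as np


def cartesian_mask(shape, rate=0.25, center_fraction=0.08, rng=None):
    """Line-wise Cartesian mask (centered k-space) keeping `rate` of the columns.
    The fully sampled central band is an assumption of this sketch."""
    rng = np.random.default_rng() if rng is None else rng
    n_cols = shape[1]
    n_keep = int(round(rate * n_cols))
    n_center = min(n_keep, int(round(center_fraction * n_cols)))
    start = (n_cols - n_center) // 2
    keep = np.zeros(n_cols, dtype=bool)
    keep[start:start + n_center] = True
    remaining = np.flatnonzero(~keep)
    keep[rng.choice(remaining, size=n_keep - n_center, replace=False)] = True
    return np.broadcast_to(keep, shape).copy()


def simulate_measurement(image, mask, snr_db=40.0, rng=None):
    """Return (noisy undersampled k-space, zero-filled image)."""
    rng = np.random.default_rng() if rng is None else rng
    clean = np.fft.fftshift(np.fft.fft2(image, norm="ortho")) * mask
    noise = (rng.standard_normal(image.shape)
             + 1j * rng.standard_normal(image.shape)) * mask
    # Scale the noise so that 20*log10(||clean|| / ||noise||) equals snr_db.
    noise *= np.linalg.norm(clean) / (np.linalg.norm(noise) * 10 ** (snr_db / 20))
    noisy = clean + noise
    zero_filled = np.fft.ifft2(np.fft.ifftshift(noisy), norm="ortho")
    return noisy, zero_filled


def gaussian_smooth(field, sigma):
    """Periodic Gaussian smoothing via the FFT (sigma in pixels)."""
    fy = np.fft.fftfreq(field.shape[0])[:, None]
    fx = np.fft.fftfreq(field.shape[1])[None, :]
    transfer = np.exp(-2.0 * (np.pi * sigma) ** 2 * (fy ** 2 + fx ** 2))
    return np.real(np.fft.ifft2(np.fft.fft2(field) * transfer))


def random_deformation(shape, n_points=2000, amplitude=10.0, sigma=10.0, rng=None):
    """Displacement field of shape (2, H, W): random values at random points, then smoothed."""
    rng = np.random.default_rng() if rng is None else rng
    n_pixels = shape[0] * shape[1]
    n_points = min(n_points, n_pixels)
    field = np.zeros((2,) + tuple(shape))
    for axis in range(2):
        sparse = np.zeros(n_pixels)
        idx = rng.choice(n_pixels, size=n_points, replace=False)
        sparse[idx] = rng.uniform(-amplitude, amplitude, size=n_points)
        field[axis] = gaussian_smooth(sparse.reshape(shape), sigma)
    return field


def warp(image, field):
    """Bilinear warp: out[y, x] = image[y + field[0], x + field[1]], edges clamped."""
    h, w = image.shape
    yy, xx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    y = np.clip(yy + field[0], 0, h - 1)
    x = np.clip(xx + field[1], 0, w - 1)
    y0, x0 = np.floor(y).astype(int), np.floor(x).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    top = (1 - wx) * image[y0, x0] + wx * image[y0, x1]
    bottom = (1 - wx) * image[y1, x0] + wx * image[y1, x1]
    return (1 - wy) * top + wy * bottom


def make_unregistered_pair(image, rng=None):
    """Two (k-space, zero-filled image, mask) triples: one from `image`, one from a deformed copy."""
    rng = np.random.default_rng() if rng is None else rng
    moved = warp(image, random_deformation(image.shape, rng=rng))
    pair = []
    for x in (image, moved):
        mask = cartesian_mask(image.shape, rng=rng)
        pair.append(simulate_measurement(x, mask, rng=rng) + (mask,))
    return pair
```

Calling `make_unregistered_pair(image)` on a 2D array yields one training pair in the sense used here: two undersampled, noisy measurements that differ by a nonrigid deformation, with independently drawn sampling masks.
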
U-Dream was evaluated against zero-filled reconstruction (ZF), total variation (TV) [8], unregistered Noise2Noise, and Noise2Noise using pre-calibrated image pairs registered by Symmetric Normalization [9] (SyN + N2N).
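
The two training objectives described in this section can be written compactly as follows. This is one plausible formalization, not necessarily the exact losses used: the symbols are introduced here for illustration, with $y_1, y_2$ the two measurements, $H_i$ the undersampled Fourier operator of measurement $i$, $f_\theta$ the reconstruction module (assumed here to be applied to each zero-filled image), $g_\psi$ the registration module, $\phi$ the predicted motion field, and $\lambda$ a regularization weight. The squared $\ell_2$ norm is likewise an assumption.

```latex
\begin{align}
\hat{x}_i &= f_\theta\big(H_i^{\mathsf{H}} y_i\big), \quad i \in \{1, 2\}, \\
\phi &= g_\psi(\hat{x}_1, \hat{x}_2), \\
\mathcal{L}_{\mathrm{reg}}(\psi) &= \big\| \hat{x}_1 \circ \phi - \hat{x}_2 \big\|_2^2 + \lambda\, \mathrm{TV}(\phi), \\
\mathcal{L}_{\mathrm{rec}}(\theta) &= \big\| H_2 (\hat{x}_1 \circ \phi) - y_2 \big\|_2^2 .
\end{align}
```

Here $\hat{x}_1 \circ \phi$ denotes the warped reconstruction. The registration loss compares it with the reference reconstruction in the image domain, while the reconstruction loss compares it with the reference measurement in k-space, so neither term requires a fully sampled ground truth image.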

RESULTS

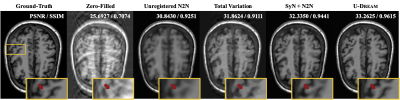

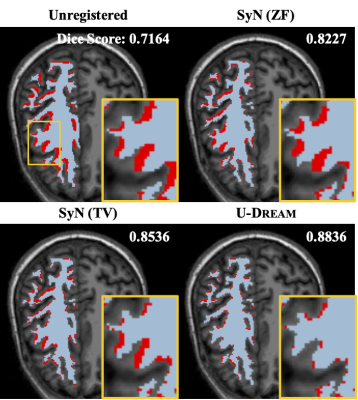

Among the results illustrated in Figure 2, zero-filled images contain ghosting and blurring artifacts. All other methods yield a significant improvement over ZF. While TV shows a considerable reduction in the aliasing artifacts, it leads to a loss of detail due to the well-known "staircasing effect." The unregistered N2N method achieves a reasonable result even without any registration in training, but its output also contains a noticeable amount of blur, especially along the edges. While SyN + N2N performs significantly better than the unregistered N2N and TV, it still suffers from oversmoothing in the region highlighted by the red arrow. U-Dream outperforms all of these baseline methods in terms of sharpness, contrast, and artifact removal, which we attribute to its ability to jointly address registration and reconstruction.

As illustrated in Figure 3, we also evaluated U-Dream in terms of image registration by feeding it corrupted image pairs. We compared U-Dream with SyN applied to pairs of zero-filled and TV reconstructions (SyN(ZF) and SyN(TV), respectively). We observed that the proposed method outperforms these benchmarks.

DISCUSSION

Given only unregistered measurement pairs, U-Dream provides a feasible pathway to train a CNN for reconstructing high-quality images from undersampled measurements. One limitation of U-Dream is that it requires well-tuned hyperparameters (e.g., learning rate) to ensure that the loss functions of the CNNs converge stably. Moreover, experiments on in-vivo acquisitions are needed to further verify the effectiveness of U-Dream in real applications.

CONCLUSION

The proposed method addresses an important problem in training a deep CNN. It performs MRI reconstruction from unregistered measurements without any ground truth by jointly performing reconstruction and registration. We validated the method on undersampled MR measurement pairs corresponding to image pairs related by a nonrigid deformation. We observed that the proposed method leads to a significant improvement over several baseline algorithms.

Acknowledgements

No acknowledgement found.

References

1. Lustig M, Donoho D, Pauly JM. Sparse MRI: The application of compressed sensing for rapid MR imaging. Magn. Reson. Med. 2007;58(6):1182-1195.

2. Sun J, Li H, Xu Z, et al. Deep ADMM-Net for compressive sensing MRI. In: Advances in Neural Information Processing Systems; 2016:10-18.

3. Han Y, Sunwoo L, Ye J. K-Space Deep Learning for Accelerated MRI. IEEE Trans. Med. Imaging. 2020;39(2):377-386.

4. Lehtinen J, Munkberg J, Hasselgren J, et al. Noise2Noise: Learning image restoration without clean data. In: International Conference on Machine Learning. Vol 7; 2018:4620-4631.

5. Balakrishnan G, Zhao A, Sabuncu MR, Guttag J, Dalca A V. VoxelMorph: A Learning Framework for Deformable Medical Image Registration. IEEE Trans. Med. Imaging. 2019;38(8):1788-1800.

6. LaMontagne PJ, Benzinger TLS, Morris JC, et al. OASIS-3: longitudinal neuroimaging, clinical, and cognitive dataset for normal aging and Alzheimer disease. medRxiv 2019.12.13.19014902. Published online 2019.

7. Sokooti H, de Vos B, Berendsen F, Lelieveldt BPF, Išgum I, Staring M. Nonrigid Image Registration Using Multi-scale 3D Convolutional Neural Networks. In: International Conference on Medical Image Computing and Computer Assisted Intervention. Vol 10433; 2017:232-239.

8. Beck A, Teboulle M. Fast gradient-based algorithms for constrained total variation image denoising and deblurring problems. IEEE Trans. Image Process. 2009;18(11):2419-2434.

9. Avants BB, Epstein CL, Grossman M, Gee JC. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Med. Image Anal. 2008;12(1):26-41.
