1767

A 3D-UNET for Gibbs artifact removal from quantitative susceptibility maps

1Imaging Genetics Center, Mark and Mary Stevens Neuroimaging and Informatics Institute, Keck School of Medicine of USC, Los Angeles, CA, United States

Synopsis

Magnetic resonance image quality is susceptible to several artifacts, including Gibbs ringing. Deep learning approaches have addressed these artifacts on T1-weighted (T1w) scans, but quantitative susceptibility maps (QSMs), derived from susceptibility-weighted imaging, are often more prone to Gibbs artifacts than T1w images and require their own model. Removing such artifacts from QSMs will improve the ability to non-invasively map iron deposits, calcification, inflammation, and vasculature in the brain. In this work, we develop a 3D U-Net based approach to remove Gibbs ringing from QSMs.

Introduction:

Quantitative susceptibility maps (QSMs), derived from the phase and magnitude images of MRI-based susceptibility-weighted imaging (SWI), characterize pathological neuroanatomical features such as microbleeds and aberrant levels of iron, calcium, and myelin. Artifacts such as motion and Gibbs ringing [1] can obscure tissue boundaries. Gibbs ringing arises when an image is reconstructed from a finite k-space with an inverse 2D Fourier transform. High spatial frequencies, which lie at the edges of k-space and result from strong gradients, are excluded, while low spatial frequencies, which lie at the center of k-space and result from weak gradients, are retained. Because MR imaging can sample only a finite region of k-space, excluding the high-frequency signal leads to a truncation effect and, commonly, Gibbs overshoot. These artifacts usually appear as ringing near high-contrast tissue boundaries in the image. Several methods reduce this effect in the reconstructed image. One recent method, implemented in MRtrix3 (mrdegibbs), uses local subvoxel shifts [2]. Deep learning-based methods show promising results, but they currently exist only for T1-weighted images. Here we introduce a U-Net based 3-dimensional deep learning network to remove Gibbs artifacts from SWI-derived QSM.

Methods:

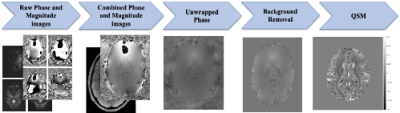

SWI were obtained from the UK Biobank (UKB) resource [3], collected on 3T Siemens Skyra scanners (32-channel receiver head coil; 3D axial acquisition, double-echo GRE, TE = 9.42 and 20 ms, 0.8x0.8x3 mm^3, FOV = 256x288x48 mm^3). Magnitude and phase images from the 32 coils were combined using the Bernstein method [4]. Individual brain masks were generated from the magnitude images using HD-BET [5]. Within each mask, the phase image was spatially unwrapped using a fast phase unwrapping algorithm [6]. A local field map was generated through background removal using the Laplacian boundary value method [7]. Finally, QSMs were reconstructed using Morphology Enabled Dipole Inversion (MEDI) [8]. Figure 1 shows this preprocessing pipeline.

We visually quality controlled 11290 SWI scans and discarded all scans with visible Gibbs ringing for model building purposes. From images with minimal or no artifacts, we generated artificial Gibbs artifacts by removing high-frequency elements from Fourier space, which causes inaccurate sampling of sharp edges after the inverse Fourier transform is applied. We truncated ~50-90% of the high-frequency components in k-space [9]. A QSM slice contains approximately 7300 high-frequency elements after FFT. To simulate Gibbs ringing, we removed 55%, 70%, 80%, or 90% of these elements from the masks. We used a combination of truncations on three mask sizes. High-frequency elements at the edges of k-space correspond to sharp intensity differences at the boundaries of the mask, so removing them causes ringing in image space. Artifacts were added to every axial slice. For each volume, 12 new volumes containing simulated artifacts were generated with varied ringing intensities and widths.
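The truncation step described above can be illustrated with a minimal NumPy sketch. It is not the pipeline used in this work: the function name `simulate_gibbs` and the `keep_fraction` parameter are hypothetical, and the centered rectangular window is an assumed mask shape, whereas the abstract specifies percentages of high-frequency elements removed on three mask sizes.

```python
import numpy as np


def simulate_gibbs(image: np.ndarray, keep_fraction: float) -> np.ndarray:
    """Zero high-frequency k-space samples of each axial slice.

    `image` is a 2D slice or a 3D volume whose first two axes are in-plane.
    `keep_fraction` (hypothetical parameter) is the fraction of the sampled
    frequency range retained along each in-plane axis.
    """
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    ny, nx = image.shape[:2]
    # Normalized frequency magnitudes; values near 0.5 are the k-space edges.
    ky = np.abs(np.fft.fftfreq(ny))[:, None]
    kx = np.abs(np.fft.fftfreq(nx))[None, :]
    # Assumed mask: keep a centered rectangle of low frequencies.
    keep = (ky <= 0.5 * keep_fraction) & (kx <= 0.5 * keep_fraction)
    # Broadcast the in-plane mask over any trailing (slice) axis.
    keep = keep.reshape(ny, nx, *([1] * (image.ndim - 2)))
    kspace = np.fft.fft2(image, axes=(0, 1))
    # The mask is symmetric in frequency, so the result is real up to rounding.
    return np.fft.ifft2(kspace * keep, axes=(0, 1)).real


# Toy example: a sharp-edged square develops overshoot near its boundary.
phantom = np.zeros((64, 64))
phantom[16:48, 16:48] = 1.0
ringed = simulate_gibbs(phantom, keep_fraction=0.3)
```

In this toy example the truncated image exceeds the original maximum of 1.0 near the square's edges, which is the overshoot that appears as ringing. How the removal percentages reported here map onto such a parameter is not specified in this abstract.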

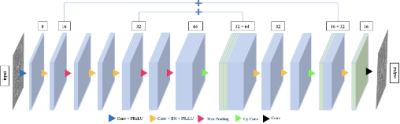

We implemented a 3D U-Net model [10], trained on 93% of the subject scans with the following parameters: Adam optimizer [11], batch size = 64, learning rate = 10^-5. The volumes were split into training (80%) and test (20%) sets. Validation was performed on a left-out sample, the remaining 7% of the data. Our network architecture (Figure 2) consists of two pairs of encoder and decoder blocks. Each encoder has two convolution layers, each with a PReLU activation function, a batch normalization layer, and a max pooling layer. The pre-processed and quality-controlled QSMs were cropped to 180x240x48 using “CenterCropOrPad” from TorchIO [12] and then input to the encoder. Each decoder contains two deconvolution layers, followed by batch normalization and a max pooling layer, with the final up-convolution layer.
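For orientation, a two-level 3D U-Net of this general kind might be sketched in PyTorch as follows. This is an illustration under stated assumptions, not the network in Figure 2: the class name `SmallUNet3D`, the channel widths, the kernel sizes, the skip connections, the bottleneck block, and the MSE training loss are all assumptions, and the decoder follows the conventional up-convolution layout rather than the exact layer order described above. Only the Adam optimizer and the learning rate of 10^-5 come from the text.

```python
import torch
from torch import nn


def conv_block(in_ch: int, out_ch: int) -> nn.Sequential:
    """Two 3D convolutions, each followed by PReLU and batch normalization."""
    return nn.Sequential(
        nn.Conv3d(in_ch, out_ch, kernel_size=3, padding=1),
        nn.PReLU(),
        nn.BatchNorm3d(out_ch),
        nn.Conv3d(out_ch, out_ch, kernel_size=3, padding=1),
        nn.PReLU(),
        nn.BatchNorm3d(out_ch),
    )


class SmallUNet3D(nn.Module):
    """Hypothetical two-level 3D U-Net; channel widths are assumptions."""

    def __init__(self, base_ch: int = 8):
        super().__init__()
        self.enc1 = conv_block(1, base_ch)
        self.enc2 = conv_block(base_ch, 2 * base_ch)
        self.pool = nn.MaxPool3d(2)
        self.bottleneck = conv_block(2 * base_ch, 4 * base_ch)
        self.up2 = nn.ConvTranspose3d(4 * base_ch, 2 * base_ch, 2, stride=2)
        self.dec2 = conv_block(4 * base_ch, 2 * base_ch)
        self.up1 = nn.ConvTranspose3d(2 * base_ch, base_ch, 2, stride=2)
        self.dec1 = conv_block(2 * base_ch, base_ch)
        self.out = nn.Conv3d(base_ch, 1, kernel_size=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Spatial sizes must be divisible by 4 (180x240x48 satisfies this).
        e1 = self.enc1(x)
        e2 = self.enc2(self.pool(e1))
        b = self.bottleneck(self.pool(e2))
        d2 = self.dec2(torch.cat([self.up2(b), e2], dim=1))
        d1 = self.dec1(torch.cat([self.up1(d2), e1], dim=1))
        return self.out(d1)


def train_step(model, optimizer, corrupted, clean) -> float:
    """One optimization step; the MSE loss is an assumption."""
    model.train()
    optimizer.zero_grad()
    loss = nn.functional.mse_loss(model(corrupted), clean)
    loss.backward()
    optimizer.step()
    return loss.item()


model = SmallUNet3D()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-5)
# Tiny random tensors stand in for (corrupted, clean) QSM volume pairs.
corrupted = torch.randn(2, 1, 16, 16, 8)
clean = torch.randn(2, 1, 16, 16, 8)
loss_value = train_step(model, optimizer, corrupted, clean)
```

Padding of 1 with 3x3x3 kernels keeps the spatial size fixed within each block, so the output has the same shape as the input and can be compared voxel-wise with the artifact-free volume.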

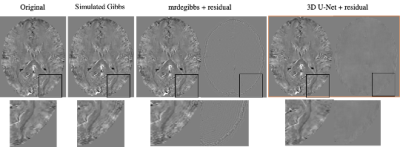

We compared the outputs of our U-Net model to the Gibbs-free input (ground truth) and calculated the mean squared error (MSE) across the full image for all Gibbs levels of our validation set. We also ran mrdegibbs and compared those outputs to the ground truth. The MSEs of the two de-Gibbs methods were compared using a paired t-test.
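The evaluation above amounts to a per-volume MSE followed by a paired comparison, which can be sketched as below. The helper names `mse` and `paired_t` are hypothetical, and the numbers in the example are made-up placeholders, not results from this study.

```python
import math

import numpy as np


def mse(pred, target) -> float:
    """Mean squared error over all voxels of one volume."""
    diff = np.asarray(pred, float) - np.asarray(target, float)
    return float(np.mean(diff ** 2))


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples."""
    d = np.asarray(a, float) - np.asarray(b, float)
    n = d.size
    if n < 2:
        raise ValueError("need at least two pairs")
    # Mean difference divided by its standard error.
    t = d.mean() / (d.std(ddof=1) / math.sqrt(n))
    return float(t), n - 1


# Placeholder per-volume MSE values for two methods on the same volumes.
mse_method_a = [1.0, 2.0, 3.0]
mse_method_b = [0.0, 0.0, 0.0]
t_stat, dof = paired_t(mse_method_a, mse_method_b)
```

The test is paired because both methods are applied to the same corrupted volumes, so each volume contributes one difference in MSE. A p-value follows from the t distribution with `dof` degrees of freedom; `scipy.stats.ttest_rel` computes the statistic and p-value together.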

Results:

Of 11290 SWI datasets, 8903 had some artifacts (2229 major) and were excluded; 1966 had visually undetectable artifacts. These 1966 were augmented and used to generate ringing as described. Of these, 1846 were used for model generation and 120 for validation. The average MSE was 1.30 x 10^-5 for our U-Net model and 3.25 x 10^-5 for mrdegibbs. The difference was statistically significant (p < 6.1 x 10^-17), showing that our model reduced the Gibbs artifact significantly more than mrdegibbs. A visual representation of one image is shown in Figure 3.

Discussion:

We provide, for the first time, a tool specifically designed to remove Gibbs ringing artifacts from QSM, which may outperform models intended for broad use across MR contrasts. Other methods may over-correct and remove anatomical features such as microbleeds and small vasculature, which are important features in QSM.

Conclusion:

Our model can be run quickly across Gibbs-artifact corrupted QSMs and will allow for high-powered population studies of brain vasculature and tissue microstructure with SWI.

Acknowledgements

This research was conducted using the UK Biobank Resource under Application Number ‘11559’. This research was supported by the National Institutes of Health (NIH): R01 AG059874, P41 EB015922. NJ is MPI of a research related grant from Biogen Inc for image processing.

References

[1] Gallagher, Thomas A., Alexander J. Nemeth, and Lotfi Hacein-Bey. "An introduction to the Fourier transform: relationship to MRI." American journal of roentgenology 190.5 (2008): 1396-1405.

[2] Kellner, E., B. Dhital, V. G. Kiselev, and M. Reisert. "Gibbs-ringing artifact removal based on local subvoxel-shifts." Magnetic resonance in medicine 76 (2016): 1574-1581.

[3] Miller, Karla L., et al. "Multimodal population brain imaging in the UK Biobank prospective epidemiological study." Nature neuroscience 19.11 (2016): 1523-1536.

[4] Bernstein, Matt A., et al. "Reconstructions of phase contrast, phased array multicoil data." Magnetic resonance in medicine 32.3 (1994): 330-334.

[5] Isensee, Fabian, et al. "Automated brain extraction of multisequence MRI using artificial neural networks." Human brain mapping 40.17 (2019): 4952-4964.

[6] Schofield, Marvin A., and Yimei Zhu. "Fast phase unwrapping algorithm for interferometric applications." Optics letters 28.14 (2003): 1194-1196.

[7] Zhou, Dong, et al. "Background field removal by solving the Laplacian boundary value problem." NMR in Biomedicine 27.3 (2014): 312-319.

[8] Liu, Zhe, et al. "MEDI+ 0: morphology enabled dipole inversion with automatic uniform cerebrospinal fluid zero reference for quantitative susceptibility mapping." Magnetic resonance in medicine 79.5 (2018): 2795-2803.

[9] Zhang, Qianqian, et al. "MRI Gibbs‐ringing artifact reduction by means of machine learning using convolutional neural networks." Magnetic resonance in medicine 82.6 (2019): 2133-2145.

[10] Çiçek, Özgün, et al. "3D U-Net: learning dense volumetric segmentation from sparse annotation." International conference on medical image computing and computer-assisted intervention. Springer, Cham, 2016.

[11] Kingma, Diederik P., and Jimmy Ba. "Adam: A method for stochastic optimization." arXiv preprint arXiv:1412.6980 (2014).

[12] Pérez-García, Fernando, Rachel Sparks, and Sebastien Ourselin. "TorchIO: a Python library for efficient loading, preprocessing, augmentation and patch-based sampling of medical images in deep learning." arXiv preprint arXiv:2003.04696 (2020).
