1763

Deep Learning Based Joint MR Image Reconstruction and Under-sampling Pattern Optimization

¹Radiation Oncology, University of Michigan, Ann Arbor, MI, United States

Synopsis

Accelerating MRI acquisition by under-sampling k-space measurements, and learning an image reconstruction model that preserves high image quality, is necessary to expand the clinical use of MRI. In this work, we explore joint optimization of under-sampling patterns and image reconstruction neural networks for aggressive under-sampling of images that require very long acquisition times (e.g., FLAIR T2-weighted images). We propose Attention Residual Non-Local Networks (ARNL-Net), trained with an uncertainty-based L1 loss function, for producing high-quality images. Initial experiments demonstrate the feasibility of this method: the reconstructions show higher fidelity to the fully sampled images than those obtained with random under-sampling schemes.

Introduction

Accelerating Magnetic Resonance Imaging (MRI) acquisition can dramatically improve scanning efficiency and consequently expand the clinical use of MRI in diagnosis and image-guided interventions. Data-driven deep-learning models have been used to reconstruct high-quality, high-resolution MR images from subsampled k-space data. Optimizing the k-space subsampling pattern, instead of sampling randomly, could further improve both acquisition efficiency and the quality of the reconstructed images. In this study, we jointly optimize the subsampling pattern and an image reconstruction neural network for Fluid Attenuated Inversion Recovery T2-weighted (FLAIR-T2) images of the brain. With the same reconstruction network, joint optimization yields substantially better image quality, and supports higher under-sampling rates, than Cartesian random or variable density under-sampling patterns.

Methods

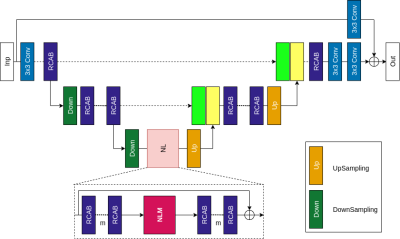

For the reconstruction model (Figure 1), we designed the Attention Residual Non-Local Network (ARNL-Net), which consists of residual channel attention blocks (RCAB), down-sampling/up-sampling stages, and non-local attention modules. As in U-Net [1], the shallow features obtained during the down-sampling stages are concatenated with the deep features obtained during up-sampling. The non-local attention module (NLM) computes the self-similarity of the extracted pixel features, allowing the network to account for long-range correlations between relevant features and thereby improve reconstruction quality. The ARNL-Net is followed by a two-step data consistency module that enforces fidelity to the acquired k-space data. The subsampling pattern is learned retrospectively from fully sampled images and is optimized jointly with the ARNL-Net using LOUPE [2]. Full-resolution FLAIR-T2 images were used to optimize the subsampling probability mask, and the network reconstructs high-resolution FLAIR-T2 images from the resulting subsampled k-space data.

Dataset and Implementation: The experiments were conducted on a heterogeneous in-house axial brain dataset from 130 patients with primary brain tumors (grade II-IV gliomas), acquired with a standard brain tumor MRI protocol. FLAIR-T2 images were acquired in 2D with an in-plane resolution of 1×1 mm², a slice thickness of 3 mm, and a 30% slice gap. All 2D FLAIR-T2 images were reformatted to a matrix size of 256×256, and k-space data were emulated from the reformatted images for each patient. The dataset was split 9:1:3 into training, validation, and test sets, respectively. The ARNL-Net was trained end-to-end with an uncertainty-based L1 loss function. Reconstructions were evaluated with the structural similarity index (SSIM), peak signal-to-noise ratio (PSNR), and high-frequency error norm (HFEN), using the fully sampled FLAIR-T2 images as ground truth.
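A minimal NumPy sketch of the retrospective pipeline described above, under stated assumptions: k-space is emulated with a centered 2D FFT of each slice; a LOUPE-style layer maps learnable logits to sampling probabilities, rescales them so their mean equals a target sampling rate (0.1 for 10-fold acceleration), and draws a relaxed, differentiable Bernoulli mask; and a data-consistency step re-inserts the measured samples into the network output. The abstract does not detail its two-step data consistency module, so `data_consistency` is a hypothetical one-step stand-in, and the function names and slope values are illustrative. Only the forward pass is shown; in training, the logits and the ARNL-Net weights would be updated together by back-propagating the loss through an autodiff framework.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def rescale_probabilities(p, target_rate):
    """Rescale p so that its mean equals target_rate while staying in [0, 1]."""
    p_bar = p.mean()
    if p_bar >= target_rate:
        return p * (target_rate / p_bar)
    # Mean too low: shrink the distance to 1 instead of scaling p up past 1.
    beta = (1.0 - target_rate) / (1.0 - p_bar)
    return 1.0 - beta * (1.0 - p)


def loupe_mask(logits, target_rate, rng, slope=5.0, sample_slope=200.0):
    """LOUPE-style relaxed Bernoulli mask (forward pass only).

    Hypothetical helper; the slope values are illustrative assumptions,
    not taken from the abstract.
    """
    p = rescale_probabilities(sigmoid(slope * logits), target_rate)
    u = rng.uniform(size=p.shape)
    # A steep sigmoid approximates the step 1[u < p] but stays differentiable.
    return sigmoid(sample_slope * (p - u))


def to_kspace(image):
    # Retrospective emulation: centered 2D FFT of a reconstructed image.
    return np.fft.fftshift(np.fft.fft2(image))


def to_image(kspace):
    return np.fft.ifft2(np.fft.ifftshift(kspace))


def data_consistency(pred_image, kspace_us, mask):
    """Hypothetical one-step data consistency.

    kspace_us is assumed to equal mask * (full k-space), so the result keeps
    measured samples where mask == 1, network k-space where mask == 0,
    and a blend of the two for soft mask values.
    """
    k_pred = to_kspace(pred_image)
    return to_image(kspace_us + (1.0 - mask) * k_pred)


# Example: a ~10-fold line mask for a 2D Cartesian acquisition, assuming one
# learnable logit per phase-encoding column of a 256x256 slice.
rng = np.random.default_rng(0)
image = rng.random((256, 256))              # stand-in for a FLAIR-T2 slice
logits = rng.normal(size=256)
line_mask = loupe_mask(logits, target_rate=0.1, rng=rng)
mask = np.broadcast_to(line_mask[None, :], image.shape)
kspace_us = to_kspace(image) * mask
zero_filled = np.abs(to_image(kspace_us))   # input to the reconstruction network
# zero_filled stands in for the ARNL-Net output in this sketch.
refined = data_consistency(zero_filled, kspace_us, mask)
```

The rescaling step is what lets the learned pattern meet a fixed acceleration rate: whatever probabilities the logits produce, their mean is forced to the target before sampling.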

Results

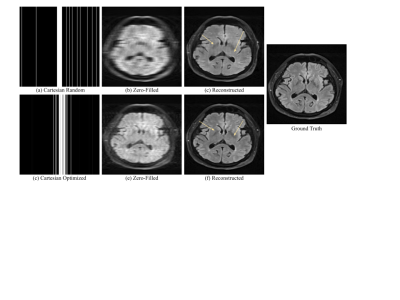

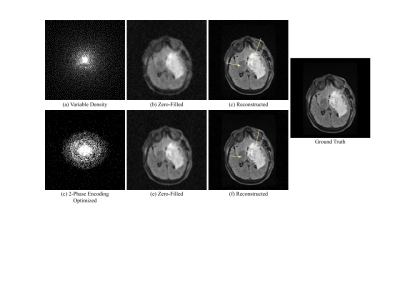

Compared with random under-sampling patterns such as Cartesian random or variable density sampling, the jointly optimized model produced less blurry reconstructions and preserved finer anatomical details. We evaluated results at a 10-fold acceleration rate to demonstrate reliable reconstruction under aggressive under-sampling. For 2D acquisition, the joint optimization model achieved SSIM = 0.954, PSNR = 34.04 dB, and HFEN = 0.42, an improvement over the reconstruction model using the Cartesian random under-sampling pattern (SSIM = 0.925, PSNR = 31.56 dB, HFEN = 0.59) (Figure 2). For an acquisition with two phase-encoding directions (Figure 3), the joint optimization model achieved SSIM = 0.984, PSNR = 39.63 dB, and HFEN = 0.09, outperforming the reconstruction model using the variable density under-sampling pattern (SSIM = 0.974, PSNR = 38.42 dB, HFEN = 0.24). Note the better-preserved tumor and anatomical details (arrows) in the reconstructions of the jointly optimized model in Figures 2 and 3.

Conclusion
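The PSNR and HFEN values reported above can be computed along the lines of the NumPy sketch below. The abstract does not state its exact metric conventions, so these are assumptions: PSNR uses the reference image's maximum as the peak value, and HFEN is the relative L2 norm of the difference between Laplacian-of-Gaussian (LoG) filtered images, using a 15×15 kernel with σ = 1.5 (a setting common in the MRI reconstruction literature), constructed the same way as MATLAB's `fspecial('log')`. The helper names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def psnr(rec, ref):
    """PSNR in dB, assuming the reference maximum is the peak value."""
    mse = np.mean((rec - ref) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(ref.max() ** 2 / mse)


def log_kernel(size=15, sigma=1.5):
    """Zero-sum Laplacian-of-Gaussian kernel (fspecial('log')-style construction)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    r2 = x ** 2 + y ** 2
    g = np.exp(-r2 / (2.0 * sigma ** 2))
    g /= g.sum()
    k = g * (r2 - 2.0 * sigma ** 2) / sigma ** 4
    return k - k.mean()  # force the kernel to sum to zero


def filter2d(image, kernel):
    """Same-size 2D filtering with reflect padding (kernel is symmetric)."""
    pad = kernel.shape[0] // 2
    padded = np.pad(image, pad, mode="reflect")
    windows = sliding_window_view(padded, kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)


def hfen(rec, ref, size=15, sigma=1.5):
    """Relative L2 error between LoG-filtered reconstruction and reference."""
    k = log_kernel(size, sigma)
    ref_log = filter2d(ref, k)
    return np.linalg.norm(filter2d(rec, k) - ref_log) / np.linalg.norm(ref_log)
```

SSIM is omitted for brevity; scikit-image provides it as `skimage.metrics.structural_similarity(rec, ref, data_range=...)`. Because the LoG kernel sums to zero, HFEN ignores a constant intensity offset and responds only to errors in edges and fine structure, whereas PSNR penalizes every pixel-wise deviation.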

In this work, we jointly optimized the under-sampling pattern and the reconstruction network in a data-driven fashion, improving image quality at a high acceleration rate. Overall, adapting the under-sampling pattern to the data, together with a well-designed reconstruction network and loss function, allowed aggressive under-sampling while preserving high reconstruction quality.

Acknowledgements

This work was supported in part by NIH R01 EB16079.

References

[1] O. Ronneberger, P. Fischer, and T. Brox, "U-Net: Convolutional Networks for Biomedical Image Segmentation," in Medical Image Computing and Computer-Assisted Intervention (MICCAI), 2015.

[2] C. D. Bahadir, A. Q. Wang, A. V. Dalca and M. R. Sabuncu, "Deep-Learning-Based Optimization of the Under-Sampling Pattern in MRI," in IEEE Transactions on Computational Imaging, vol. 6, pp. 1139-1152, 2020.

Figures