1753

MAGnitude Image to Complex (MAGIC)-Net: reconstructing multi-coil complex images with pre-trained network using synthesized raw data

Fanwen Wang1, Hui Zhang1, Jiawei Han1, Fei Dai1, Yuxiang Dai1, Weibo Chen2, Chengyan Wang3, and He Wang1,3

1Institute of Science and Technology for Brain-Inspired Intelligence, Fudan University, Shanghai, China, 2Philips Healthcare, Shanghai, China, 3Human Phenome Institute, Fudan University, Shanghai, China

Synopsis

This study proposes a novel MAGnitude Image to Complex (MAGIC) Network that enables deep learning reconstruction with a limited amount of acquired training data. Collecting complex multi-coil data is inconvenient because it falls outside routine clinical examinations, whereas large numbers of magnitude images are available in hospitals. By computing deformations between magnitude images and a source complex dataset and applying them to the complex data, MAGIC-Net synthesizes deformed training data and thereby makes deep learning reconstruction feasible. Results show that, starting from the same originally acquired data, MAGIC-Net outperforms conventional CG-SENSE in PSNR for all undersampling trajectories on high-resolution images with b = 0 and b = 1000 s/mm².

Introduction

Deep learning has been widely used for MRI reconstruction. Trained with fully sampled data as labels, many deep learning networks1,2 have succeeded in recovering artifact-free images from accelerated MRI acquisitions, with much better reconstruction performance than model-based parallel imaging methods. However, the effectiveness of deep learning is limited by the size and diversity of the training data3. Collecting complex multi-coil MRI data falls outside routine hospital procedures and requires substantial storage, whereas many magnitude images are already available in clinical databases. Hence, we propose MAGnitude Image to Complex (MAGIC)-Net, which synthesizes a large amount of complex training data from existing magnitude images to facilitate deep learning reconstruction.

Methods

Data Acquisition

A total of 768 magnitude images from 48 patients were exported from the database of a medical center. Four healthy volunteers were recruited, with 16 slices scanned per volunteer, and were divided into a source group (1 subject, 16 slices) and a test group (3 subjects, 48 slices). All data were acquired on a 3.0 T MRI scanner (Ingenia CX, Philips Healthcare, Best, the Netherlands) equipped with a 32-channel head coil. Multi-shot diffusion-weighted images (DWI) with b-values of 0 and 1000 s/mm² were acquired using an interleaved four-shot EPI acquisition. The imaging parameters were: TE, 75 ms; TR, 2800 ms; matrix size, 228 × 228; slice thickness, 4 mm; partial Fourier factor, 0.702; voxel size, 1.0 × 1.0 mm². The acquired data were reconstructed using MUSE4.

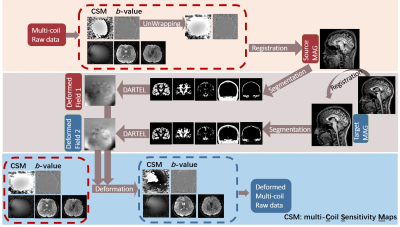

Pipeline of MAGIC-Net

First, we unwrapped the phase of the source multi-coil sensitivity maps (CSMs) based on the transport of intensity equation (TIE)5. Second, we calculated the geometric deformation between the source subject and each subject with magnitude images. Third, we applied each deformation map to the complex data to synthesize data with different anatomies. The deformation was implemented using the SPM DARTEL6 toolbox.
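The unwrap-then-warp idea can be illustrated with the NumPy sketch below. It is a hypothetical illustration, not the authors' code, and all function names are invented for this example. The unwrapping solves a Poisson equation with Neumann boundaries, using the TIE-style estimate Im(conj(z)·∇²z) of the phase Laplacian (exact only when neighbouring pixels differ by a small phase), followed by a congruence step that snaps the result to the wrapped data plus multiples of 2π; the referenced MATLAB implementation5 may differ in details. The displacement field `(dy, dx)`, in pixels, is assumed to be given (for example exported from a registration such as DARTEL), and plain bilinear interpolation stands in for DARTEL's own resampling. Warping the magnitude and the unwrapped phase separately avoids interpolating across 2π jumps, which is one plausible reason directly deformed CSMs appear distorted.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II matrix; row k is the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * k * (2 * i + 1) / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c


def neumann_laplacian(f):
    """5-point Laplacian with edge-replicating (Neumann) boundaries."""
    p = np.pad(f, 1, mode="edge")
    return (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
            - 4.0 * p[1:-1, 1:-1])


def unwrap_phase_tie(wrapped):
    """Hypothetical TIE-style unwrapping: Poisson solve plus congruence step."""
    z = np.exp(1j * wrapped)
    # Laplacian of the unwrapped phase estimated from the complex signal.
    rhs = np.imag(np.conj(z) * neumann_laplacian(z))
    m, n = wrapped.shape
    cm, cn = dct_matrix(m), dct_matrix(n)
    # The Neumann Laplacian is diagonal in the DCT-II basis with these eigenvalues.
    eig = ((2 * np.cos(np.pi * np.arange(m) / m) - 2)[:, None]
           + (2 * np.cos(np.pi * np.arange(n) / n) - 2)[None, :])
    eig[0, 0] = 1.0                      # avoid 0/0 for the constant mode
    coeffs = (cm @ rhs @ cn.T) / eig
    coeffs[0, 0] = 0.0                   # Poisson solution is defined up to a constant
    est = cm.T @ coeffs @ cn
    # Align the free constant with the data, then snap to wrapped + 2*pi*k.
    est = est + np.angle(np.mean(np.exp(1j * (wrapped - est))))
    return wrapped + 2 * np.pi * np.round((est - wrapped) / (2 * np.pi))


def bilinear_warp(img, dy, dx):
    """Resample img at (row + dy, col + dx) with edge-clamped bilinear interpolation."""
    m, n = img.shape
    rows, cols = np.mgrid[0:m, 0:n].astype(float)
    y = np.clip(rows + dy, 0, m - 1)
    x = np.clip(cols + dx, 0, n - 1)
    y0, x0 = np.floor(y).astype(int), np.floor(x).astype(int)
    y1, x1 = np.minimum(y0 + 1, m - 1), np.minimum(x0 + 1, n - 1)
    wy, wx = y - y0, x - x0
    return ((1 - wy) * (1 - wx) * img[y0, x0] + (1 - wy) * wx * img[y0, x1]
            + wy * (1 - wx) * img[y1, x0] + wy * wx * img[y1, x1])


def warp_csm(csm, dy, dx):
    """Deform one complex coil map by warping |csm| and its unwrapped phase separately."""
    phase = unwrap_phase_tie(np.angle(csm))
    mag = bilinear_warp(np.abs(csm), dy, dx)
    return mag * np.exp(1j * bilinear_warp(phase, dy, dx))
```

For full-size maps, `scipy.fft.dctn` and `scipy.fft.idctn` with `type=2, norm="ortho"` would replace the explicit DCT matrices, which cost O(N³) and are only practical for small images.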

Experimental Setup

The deformations were calculated between the magnitude images of 48 subjects and the complex data of the single source subject, so up to 48 subjects (768 slices) of data could be synthesized. To evaluate the effect of the number of deformed samples, we further used sets of 6, 12, 32 and 48 subjects (96, 192, 284 and 768 slices) for comparison. The state-of-the-art network MoDL7 was trained to evaluate the performance of MAGIC-Net for deep learning. MoDL cascades data-consistency blocks with a ResNet-based denoiser and has been reported to be relatively insensitive to training-set size beyond 100 images. Interleaved undersampling trajectories with rates of 2, 4 and 6 were applied to simulate the sparse multi-coil measurements.
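The measurement simulation and the model-based solve can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not the authors' code, MoDL's implementation, or the CG-SENSE implementation used for comparison: "interleaved" undersampling is modelled here as keeping every R-th phase-encoding line (rows) from a fixed offset, FFTs are centred and orthonormal, coil maps are normalized so that Σ_c |S_c|² = 1, and all function names are hypothetical. With `lam = 0` the conjugate-gradient solve is a plain CG-SENSE reconstruction of the normal equations AᴴAx = Aᴴy; with `lam > 0` and a denoiser output `z` it is the MoDL-style data-consistency step (AᴴA + λI)x = Aᴴy + λz.

```python
import numpy as np


def interleaved_mask(ny, nx, rate, offset=0):
    """Keep every `rate`-th phase-encoding line (row), starting at `offset`."""
    mask = np.zeros((ny, nx), dtype=bool)
    mask[offset::rate, :] = True
    return mask


def fft2c(x):
    """Centred, orthonormal 2-D FFT over the last two axes."""
    ax = (-2, -1)
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(x, axes=ax), norm="ortho"), axes=ax)


def ifft2c(k):
    """Centred, orthonormal 2-D inverse FFT over the last two axes."""
    ax = (-2, -1)
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(k, axes=ax), norm="ortho"), axes=ax)


def forward(x, csm, mask):
    """A x: weight by each coil map, Fourier transform, keep sampled k-space lines."""
    return mask * fft2c(csm * x)


def adjoint(y, csm, mask):
    """A^H y: zero-filled inverse FFT, then coil-combine with conj(coil maps)."""
    return np.sum(np.conj(csm) * ifft2c(mask * y), axis=0)


def cg_data_consistency(y, csm, mask, lam=0.0, z=None, n_iter=200, tol=1e-12):
    """Solve (A^H A + lam I) x = A^H y + lam z by conjugate gradients."""
    def normal(v):
        return adjoint(forward(v, csm, mask), csm, mask) + lam * v

    b = adjoint(y, csm, mask)
    if z is not None:
        b = b + lam * z
    x = np.zeros_like(b)
    r = b.copy()                     # residual b - normal(x) for x = 0
    p = r.copy()
    rs = np.vdot(r, r).real
    for _ in range(n_iter):
        if np.sqrt(rs) < tol:
            break
        ap = normal(p)
        alpha = rs / np.vdot(p, ap).real
        x = x + alpha * p
        r = r - alpha * ap
        rs_new = np.vdot(r, r).real
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x
```

In an unrolled network such as MoDL, `z` would be the learned denoiser's output at each iteration, so the same solver serves both the CG-SENSE baseline (`lam = 0`) and the network's data-consistency layers.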

Results

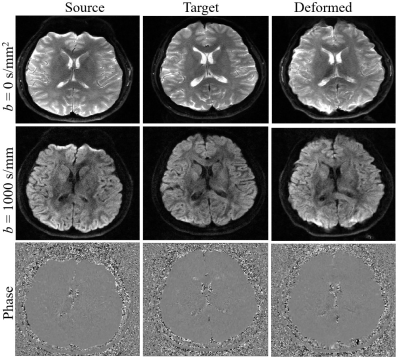

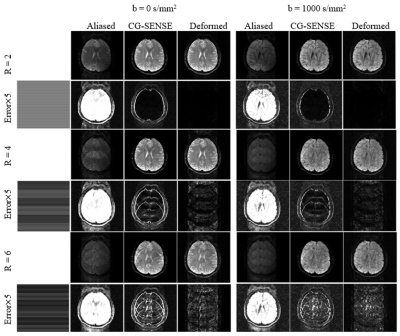

To verify the feasibility of the deformation, we deformed the source subject to match one test subject. The source, target and deformed DWI at b = 0 and b = 1000 s/mm² are compared in Fig. 2. The source, target and deformed CSMs obtained with the unwrapping procedure are also shown. Note that the deformed subject shown in the figures was not included in training. Compared with the directly deformed CSMs, the CSMs deformed with unwrapped phases are smoother and less distorted, and hence preserve spatial information better (Fig. 3). We then calculated the PSNR of images reconstructed by MAGIC-Net and by model-based CG-SENSE8,9 under different undersampling trajectories (Fig. 4). As the number of synthesized training slices increased from 96 to 768, MAGIC-Net outperformed CG-SENSE in PSNR for high-resolution b = 1000 s/mm² images (49.17 vs. 32.65, 38.66 vs. 30.42 and 33.01 vs. 28.32 at undersampling rates of 2, 4 and 6, respectively). Furthermore, for all undersampling trajectories at both b = 0 and b = 1000 s/mm², MAGIC-Net showed lower error and higher PSNR than CG-SENSE without requiring additional acquired complex training data (Fig. 5).

Conclusion
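The abstract does not specify how PSNR was computed. A common definition, assumed here, takes the peak as the maximum magnitude of the fully sampled reference and the error over magnitude images, PSNR = 20·log10(max|x_ref| / RMSE). The helper below is a hypothetical sketch of that definition only; whether the study used magnitude or complex error, or restricted the calculation to a brain mask, is not stated.

```python
import numpy as np


def psnr(reference, reconstruction):
    """PSNR in dB of a (possibly complex) reconstruction against a reference.

    Assumption: peak = max magnitude of the reference; error on magnitude images.
    """
    ref = np.abs(reference)
    rec = np.abs(reconstruction)
    mse = np.mean((ref - rec) ** 2)
    return 20.0 * np.log10(ref.max() / np.sqrt(mse))
```

For example, a reconstruction whose magnitude is uniformly 10% above a constant reference has RMSE equal to one tenth of the peak, giving 20 dB.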

We proposed MAGIC-Net to enable deep learning reconstruction with a limited amount of acquired training data. Results show that, by synthesizing diverse training data, MAGIC-Net enables deep learning reconstruction that outperforms conventional CG-SENSE in PSNR for different undersampling trajectories. The pre-trained reconstruction network can be replaced by other deep learning networks, and the approach could be extended to other imaging sequences without acquiring a large amount of raw training data.

Acknowledgements

The work was supported in part by the National Natural Science Foundation of China (No. 81971583), National Key R&D Program of China (No. 2018YFC1312900), Shanghai Natural Science Foundation (No. 20ZR1406400), Shanghai Municipal Science and Technology Major Project (No. 2017SHZDZX01, No. 2018SHZDZX01) and ZJLab.

References

- Hammernik K, Klatzer T, Kobler E, et al. Learning a variational network for reconstruction of accelerated MRI data. Magn Reson Med. 2018;79(6):3055-3071.

- Zhang P, Wang F, Xu W, Li Y. Multi-channel generative adversarial network for parallel magnetic resonance image reconstruction in k-space. Paper presented at: International Conference on Medical Image Computing and Computer-Assisted Intervention; 2018.

- Ding J, Li X, Gudivada VN. Augmentation and evaluation of training data for deep learning. Paper presented at: 2017 IEEE International Conference on Big Data (Big Data); 2017.

- Chen NK, Guidon A, Chang HC, Song AW. A robust multi-shot scan strategy for high-resolution diffusion weighted MRI enabled by multiplexed sensitivity-encoding (MUSE). NeuroImage. 2013;72:41-47.

- Zhao Z. Robust 2D phase unwrapping algorithm. MATLAB Central File Exchange; 2020. https://www.mathworks.com/matlabcentral/fileexchange/68493-robust-2d-phase-unwrapping-algorithm. Retrieved December 14, 2020.

- Ashburner J. A fast diffeomorphic image registration algorithm. NeuroImage. 2007;38(1):95-113.

- Aggarwal HK, Mani MP, Jacob M. MoDL: Model-based deep learning architecture for inverse problems. IEEE Trans Med Imaging. 2018;38(2):394-405.

- Pruessmann KP, Weiger M, Scheidegger MB, Boesiger P. SENSE: sensitivity encoding for fast MRI. Magn Reson Med. 1999;42(5):952-962.

- Uecker M, Lai P, Murphy MJ, et al. ESPIRiT—an eigenvalue approach to autocalibrating parallel MRI: where SENSE meets GRAPPA. Magn Reson Med. 2014;71(3):990-1001.

Figures

Fig. 1: The pipeline of MAGIC-Net.

Fig. 2: Deformation of DWI at different b-values using MAGIC-Net.

Fig. 3: The magnitude and phase of the source, target and deformed coil sensitivity maps by MAGIC-Net. The unwrapped coil sensitivity maps have better smoothness.

Fig. 4: Reconstructions by MAGIC-Net and CG-SENSE for b = 0 s/mm² and b = 1000 s/mm² under different sampling trajectories.

Fig. 5: PSNR of reconstructed images using MAGIC-Net and CG-SENSE.