1363

Computer vision object tracking for MRI motion estimation

High Field MR Center, Center for Medical Physics and Biomedical Engineering, Medical University of Vienna, Vienna, Austria

Synopsis

Computer vision (CV) libraries such as OpenCV provide versatile algorithms for object tracking. We demonstrate the feasibility of out-of-the-box object trackers in different dynamic MR imaging scenarios. In contrast to specialized detection and registration algorithms, the generic implementation of object trackers enables targeting of different and challenging organs during respiratory motion, including the heart, liver and kidney. Beyond post-processing, the fast algorithms and implementation allowed application in our online, prospective motion compensation pipeline. By leveraging these open-source libraries, MR applications can benefit from both the current powerful library and the continuous developments by the CV community.

Introduction

Despite the immense advances in MR technology in the last decades, motion during MR data acquisition is still a major challenge in a wide range of applications. In the thorax and abdomen in particular, tissue motion patterns are complex and often do not correlate well with external sensor data.

Our approach was to leverage the significant effort and rapid development in computer vision (CV). We investigated CV object tracking capabilities for MR images, first offline and subsequently online during image reconstruction.

Methods

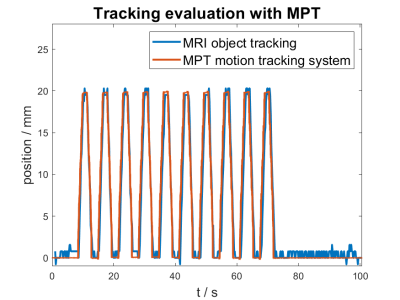

We compiled version 3.4 of the open-source CV library OpenCV [1] for our 3T and 7T MR scanners (Siemens MAGNETOM 7T, Prisma, Prisma Fit) and integrated its object tracking functionality into our existing pipeline for online real-time motion compensation (MoCo) for MR spectroscopy [2,3]. The only user interaction required is the selection of the initial bounding box and the tracker algorithm, both integrated into the scanner UI. For each image, the bounding box (position, width, height) is translated into scanner coordinates and compared with its starting value, which yields the projection of the real displacement onto the 2D image plane. Multiple projections (column and row displacements for each 2D image) are finally combined using singular value decomposition. The resulting 3D position data are fed back to the target sequence for positional slice or voxel updates.

In a phantom measurement, we validated OpenCV tracking against the MPT motion tracking system (Metria Innovation) [4], which was mounted to our in-house built torso phantom [5]. A pneumatic stepper motor allowed for accurate and discrete positional changes during scanning [5].
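The combination of 2D projections into a 3D displacement can be sketched as a least-squares problem solved with a singular value decomposition. This is only an illustration under stated assumptions, not the authors' implementation: the function names, the `(x, y, width, height)` box layout, and the idea of describing each image plane by two in-plane unit vectors in scanner coordinates are all hypothetical choices made here.

```python
import numpy as np


def bbox_center_mm(bbox, pixel_spacing_mm):
    """Center of an (x, y, width, height) box in pixels, converted to mm."""
    x, y, w, h = bbox
    sx, sy = pixel_spacing_mm
    return np.array([(x + w / 2.0) * sx, (y + h / 2.0) * sy])


def combine_projections(in_plane_axes, displacements_mm, rcond=1e-6):
    """Least-squares 3D displacement from several 2D in-plane displacements.

    in_plane_axes: list of (u, v) pairs of unit 3-vectors (scanner coordinates),
                   one pair per image orientation (hypothetical input format)
    displacements_mm: list of (du, dv) displacements measured along u and v
    """
    # Each in-plane axis contributes one equation: axis . d = measured shift.
    A = np.vstack([axis for pair in in_plane_axes for axis in pair]).astype(float)
    m = np.concatenate([np.asarray(d, dtype=float) for d in displacements_mm])

    # Pseudo-inverse via SVD; tiny singular values are discarded.
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    keep = s > rcond * s.max()
    s_inv = np.zeros_like(s)
    s_inv[keep] = 1.0 / s[keep]
    return Vt.T @ (s_inv * (U.T @ m))


# Example: sagittal, coronal and transversal planes aligned with the scanner axes.
axes = [
    ([0, 1, 0], [0, 0, 1]),  # sagittal: y and z
    ([1, 0, 0], [0, 0, 1]),  # coronal: x and z
    ([1, 0, 0], [0, 1, 0]),  # transversal: x and y
]
measured = [(-2.0, 5.0), (1.0, 5.0), (1.0, -2.0)]
displacement_3d = combine_projections(axes, measured)
```

With three orthogonal planes every spatial component is measured twice, so the least-squares solution averages the redundant estimates.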

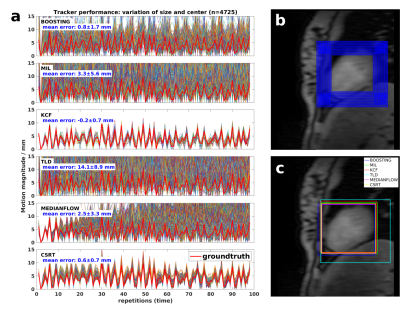

To evaluate tracker precision on in vivo data (sagittal 3T FLASH image series, 168 × 200 mm², 48 × 192 matrix, TR/TE 109/1.1 ms), tracking was performed repeatedly offline on reconstructed magnitude images with varying initial bounding boxes. The seven tracking algorithms compared are: Boosting, CSRT (Correlation Filter with Channel and Spatial Reliability), KCF (Kernelized Correlation Filter), MedianFlow, MIL (Multiple Instance Learning), MOSSE (Minimum Output Sum of Squared Error), and TLD (Tracking Learning Detection) [1]. Tracker accuracy was determined by comparison with an image ground truth, which was defined manually with the aid of a semi-automatic segmentation.
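The text does not state which metrics were used for precision and accuracy. One plausible reading, sketched below purely as an assumption, takes precision as the spread of the tracked position across repeated runs (different initial bounding boxes) and accuracy as the root-mean-square error of the run-averaged trajectory against the ground truth. The function names are hypothetical.

```python
from math import sqrt
from statistics import mean, pstdev


def precision_mm(runs):
    """Mean over frames of the standard deviation across repeated runs.

    runs: list of trajectories, one per initial bounding box; each trajectory
          is a list of 1D positions in mm with the same number of frames.
    """
    per_frame = zip(*runs)  # one tuple of positions per frame
    return mean(pstdev(frame) for frame in per_frame)


def accuracy_rmse_mm(runs, ground_truth):
    """RMS error of the run-averaged trajectory against the ground truth."""
    averaged = [mean(frame) for frame in zip(*runs)]
    return sqrt(mean((a - g) ** 2 for a, g in zip(averaged, ground_truth)))


# Two repeated runs over three frames, plus a ground-truth trajectory.
example_runs = [[0.0, 1.0, 2.0], [0.0, 3.0, 2.0]]
example_truth = [0.0, 2.0, 5.0]
spread = precision_mm(example_runs)
error = accuracy_rmse_mm(example_runs, example_truth)
```

A low spread with a high error would indicate a tracker that is reproducible but biased, which is why the two quantities are reported separately.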

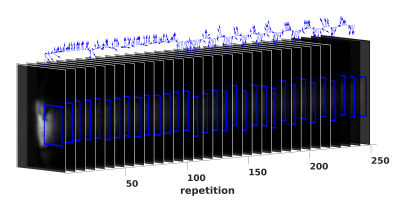

To target prospective motion estimation, low-resolution FLASH images (200 × 200 mm², 26 × 128 matrix, TR/TE 2.5/1.3 ms) were acquired in three orientations, resulting in acquisition times of 200-300 ms per repetition.

Results

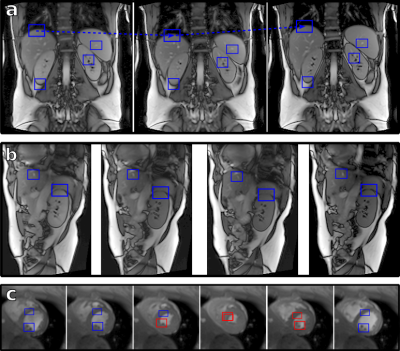

We successfully integrated OpenCV's object tracking capabilities into our existing MoCo framework and validated its performance experimentally. The online procedure involves minimal user interaction, no additional setup and fast position updates. Comparison with the external motion sensor showed excellent agreement (figure 1).

On in vivo images, accuracy and precision differed between the available trackers. Results of the KCF [6] and CSRT [7] algorithms were the most reliable (figure 2). In figure 3 we demonstrate tracking of various locations in the abdomen on high-resolution MRI, where the bounding box accurately followed the motion of the liver, diaphragm and kidney.
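For readers unfamiliar with the tracking API, the basic loop (initialize once with a bounding box, then update per frame) might look roughly like the following sketch using OpenCV's Python bindings. It is not the scanner integration described here; the helper names are hypothetical. The lookup tries `cv2.Tracker<name>_create` and then `cv2.legacy.Tracker<name>_create`, since newer OpenCV releases moved several of the trackers into the `legacy` module, and either requires a build that includes the tracking module.

```python
def make_tracker(name, cv=None):
    """Create an OpenCV tracker by short name, e.g. "KCF" or "CSRT".

    The cv argument exists so that a module object can be injected;
    by default cv2 is imported here.
    """
    if cv is None:
        import cv2 as cv  # needs an OpenCV build with the tracking module
    factory_name = "Tracker%s_create" % name
    for module in (cv, getattr(cv, "legacy", None)):
        factory = getattr(module, factory_name, None)
        if factory is not None:
            return factory()
    raise ValueError("tracker %r is not available in this OpenCV build" % name)


def track_displacements(frames, initial_bbox, tracker):
    """Per-frame (dx, dy) shift in pixels of the box center.

    The shift is relative to the initial box on the first frame. Frames for
    which the tracker reports failure yield None. initial_bbox is an
    (x, y, width, height) tuple; integer values are the safest choice.
    """
    frames = iter(frames)
    tracker.init(next(frames), initial_bbox)
    x0, y0, w0, h0 = initial_bbox
    cx0, cy0 = x0 + w0 / 2.0, y0 + h0 / 2.0
    shifts = []
    for frame in frames:
        ok, (x, y, w, h) = tracker.update(frame)
        shifts.append((x + w / 2.0 - cx0, y + h / 2.0 - cy0) if ok else None)
    return shifts
```

A typical call would be `track_displacements(images, (x, y, w, h), make_tracker("KCF"))`, where `images` is a sequence of 8-bit arrays.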

Tracking performs well even on low-resolution navigator images under a combination of challenging circumstances (figure 4): (1) untriggered acquisitions exhibit heavily changing image contrast and image features; (2) heavy breathing causes large displacements and partial occlusion; and (3) the surface coil has a small and heterogeneous sensitivity profile compared with body coils.

On a regular PC (8 cores, 4.2 GHz, 32 GB RAM), the processing time per image permitted frame rates of at least 30 fps, more than sufficient for online position updates.
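A rough timing budget illustrates why this frame rate is sufficient. The estimate below assumes that each of the 26 lines of the navigator matrix takes one TR of 2.5 ms and ignores any preparation or reconstruction overhead; these are simplifying assumptions made here, not statements from the measurement protocol.

```python
def navigator_time_ms(phase_lines, tr_ms, orientations):
    """Acquisition time for one repetition of all navigator orientations."""
    return phase_lines * tr_ms * orientations


def tracking_time_ms(n_images, frames_per_second):
    """Worst-case tracking time for n_images at a given processing rate."""
    return 1000.0 * n_images / frames_per_second


acquisition_ms = navigator_time_ms(26, 2.5, 3)  # three orientations
processing_ms = tracking_time_ms(3, 30)         # one image per orientation
keeps_up = processing_ms < acquisition_ms
```

Under these assumptions the three navigators take about 195 ms to acquire, in line with the reported 200-300 ms per repetition, while tracking all three images at 30 fps takes about 100 ms.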

Discussion

By using generic object tracking algorithms, image-based motion compensation is not limited to specific organs, hardware configurations or navigator resolutions.

While the setup of the image navigators is unavoidable, user interaction is reduced to drawing a bounding box on a reference image. The best performing trackers cope well with heterogeneous coil sensitivities, changing image contrasts during a time series and large target organ displacements. This makes them well suited for prospective motion estimation. Additionally, the acquisition of navigation information allows for retrospective compensation options.

In its current implementation, the tracking operates on 8-bit grayscale images, which reduces intensity depth. By extending to 8-bit RGB, the two additional color channels could be used for further contrasts or to exploit phase information. Testing of this potential performance improvement is currently in progress.
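How complex MR data could be packed into an 8-bit multi-channel image can be sketched as follows. This is a hypothetical layout chosen for illustration (magnitude in the first channel, phase in the second, third channel left empty), not the scheme under test by the authors; the function names are assumptions.

```python
import numpy as np


def to_uint8(values, lo, hi):
    """Linearly map values from [lo, hi] to 0..255, then round and clip."""
    scaled = (np.asarray(values, dtype=float) - lo) / (hi - lo) * 255.0
    return np.clip(np.rint(scaled), 0, 255).astype(np.uint8)


def pack_magnitude_phase(complex_image):
    """Return an (H, W, 3) uint8 image from a 2D complex array.

    Channel 0: magnitude scaled to the image maximum.
    Channel 1: phase mapped from [-pi, pi] to 0..255.
    Channel 2: zeros, free for an additional contrast.
    """
    complex_image = np.asarray(complex_image)
    magnitude = np.abs(complex_image)
    peak = magnitude.max() if magnitude.max() > 0 else 1.0
    packed = np.zeros(complex_image.shape + (3,), dtype=np.uint8)
    packed[..., 0] = to_uint8(magnitude, 0.0, peak)
    packed[..., 1] = to_uint8(np.angle(complex_image), -np.pi, np.pi)
    return packed


example = pack_magnitude_phase(np.array([[2j, -2 + 0j]]))
```

Note that a direct phase mapping keeps the wrap-around discontinuity at ±π, which a tracker may interpret as an image edge; whether this helps or hurts tracking would need to be tested.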

By tracking multiple objects in a single image, crude deformation maps can be estimated or tracking quality can be improved. In such cases, sufficient processing speed can be achieved by parallelizing the multiple tracking tasks.
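Parallel updating of several independent trackers could be sketched with a thread pool as below. The function name is hypothetical, and the sketch only assumes that each tracker object offers an `update(frame)` method returning `(ok, bbox)`. Threads only give a speed-up if the underlying update call releases Python's global interpreter lock, which is an assumption to verify for the bindings in use.

```python
from concurrent.futures import ThreadPoolExecutor


def update_all(trackers, frame, max_workers=None):
    """Call tracker.update(frame) for every tracker concurrently.

    Results are returned in the same order as the trackers.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda tracker: tracker.update(frame), trackers))


class ShiftedBoxTracker:
    """Stand-in tracker for demonstration; it is not an OpenCV class."""

    def __init__(self, offset):
        self.offset = offset

    def update(self, frame):
        # Pretend the tracked box moved by a fixed, tracker-specific offset.
        return True, (self.offset + frame, 0, 1, 1)


results = update_all([ShiftedBoxTracker(k) for k in (1, 2, 3)], 10)
```

Comparing the per-box displacements returned for different regions of the same image then gives a coarse picture of non-rigid motion.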

Training and testing of the neural network based tracker in OpenCV (GOTURN) is promising and will be evaluated in the future.

Conclusion

We successfully integrated OpenCV into the image reconstruction software of the MR scanners. This gives access to multiple established object tracking algorithms, as implemented in OpenCV. They work well even on low-featured MR navigator images and hence provide a generic alternative to specialized detection methods.

By leveraging an open-source tool, MRI applications are expected to benefit from the continuous developments in the field of computer vision. This may be a useful alternative to existing motion tracking and detection methods. In the future it may find applications in angiography, breast, abdomen or pediatric imaging, as well as in oncological applications like MR Linac.

Acknowledgements

This project was supported by the Austrian Science Fund (FWF) project P28867-B30.

References

1. OpenCV: Open Source Computer Vision (available from GitHub: https://github.com/opencv/opencv), documentation of Tracking API, https://docs.opencv.org/3.4/d0/d0a/classcv_1_1Tracker.html

2. Hess, A. T., Tisdall, M. D., Andronesi, O. C., Meintjes, E. M., & van der Kouwe, A. J. W. (2011). Real-time motion and B0 corrected single voxel spectroscopy using volumetric navigators. Magnetic Resonance in Medicine, 66(2), 314–323. https://doi.org/10.1002/mrm.22805

3. Wampl, S., Körner, T., Roat, S., Wolzt, M., Moser, E., Trattnig, S., Schmid, A. I. (2020). Cardiac 31P MR Spectroscopy With Interleaved 1H Image Navigation for Prospective Respiratory Motion Compensation – Initial Results. Proc. Intl. Soc. Mag. Reson. Med. 28, 0487.

4. Maclaren, J., Armstrong, B. S. R., Barrows, R. T., Danishad, K. A., Ernst, T., Foster, C. L., Zaitsev, M. (2012). Measurement and Correction of Microscopic Head Motion during Magnetic Resonance Imaging of the Brain. PLoS ONE, 7(11), 3–11. https://doi.org/10.1371/journal.pone.0048088

5. Körner, T., et al., submitted to ISMRM 2021: Development of an anthropomorphic torso and left ventricle phantom for respiratory motion simulation

6. Henriques, J. F., Caseiro, R., Martins, P., & Batista, J. (2015). High-Speed Tracking with Kernelized Correlation Filters. IEEE Transactions on Pattern Analysis and Machine Intelligence, 37(3), 583–596. https://doi.org/10.1109/TPAMI.2014.2345390

7. Lukežič, A., Vojíř, T., Čehovin Zajc, L., Matas, J., & Kristan, M. (2018). Discriminative Correlation Filter Tracker with Channel and Spatial Reliability. International Journal of Computer Vision, 126(7), 671–688. https://doi.org/10.1007/s11263-017-1061-3

Figures