1353

Retrospective motion correction for Fast Spin Echo based on conditional GAN with entropy loss

1Department of Chemical and Biological Physics, Weizmann Institute of Science, Rehovot, Israel, 2School of Information Engineering, Wuhan University of Technology, Wuhan, China, 3State Key Laboratory of Magnetic Resonance and Atomic and Molecular Physics, Wuhan Center for Magnetic Resonance, Wuhan Institute of Physics and Mathematics, Innovation Academy for Precision Measurement Science and Technology, Chinese Academy of Sciences, Wuhan, China

Synopsis

We propose a new end-to-end motion correction method for the Fast Spin Echo (FSE) sequence, based on a conditional generative adversarial network (cGAN) and the minimum entropy of MR images. The network contains an encoder-decoder generator that produces the motion-corrected images and a PatchGAN discriminator that classifies an image as either real (motion-free) or fake (motion-corrected). In addition, image entropy is included as one term of the cGAN loss: because entropy increases monotonically with motion amplitude, it is a good criterion for motion. The results show that the proposed method effectively reduces artifacts and recovers high-quality motion-corrected images from motion-affected images in both pre-clinical and clinical datasets.

INTRODUCTION

Most FSE protocols run in multi-shot mode to obtain high-resolution images, with the k-space data acquired over several shots at different times [1]. As a result, the image may be severely degraded by subject motion between consecutive shots, especially for pediatric or stroke patients in clinical studies and awake rodents in pre-clinical studies. Traditional motion correction methods can be divided into retrospective and prospective approaches; most of them need to predict a motion model, require the artifact area to be outlined manually, or depend on extra hardware [2,3]. Recently, more researchers have begun to use deep learning for MRI motion correction [4,5,6] because it offers a potential avenue for dramatically reducing computation time and improving the convergence of retrospective motion correction methods. Several groups have proposed using GANs for motion correction because they can generate data without explicitly modeling the probability density function and are robust to over-fitting [7]. This work aims to design a new end-to-end motion correction method based on cGAN and minimum image entropy for the multi-shot FSE sequence, train the network with simulated random rigid motion data, and apply it to both clinical and pre-clinical studies.

METHODS

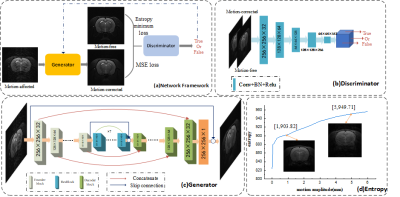

The network architecture, based on the cGAN and the minimum entropy of the MR image, is shown in Figure 1(a). It contains an encoder-decoder generator and a PatchGAN discriminator. The generator takes motion-affected images as input and outputs motion-corrected images. It contains five encoder blocks, seven residual blocks (ResBlocks), and five decoder blocks. Concatenations are applied between feature maps of the same scale in the encoder and decoder, which allows the network to propagate context information to higher-resolution layers. Finally, we introduce a global skip connection so that the network learns the residual artifact image, which makes training faster and helps the model generalize better [8]. The discriminator divides the input images (motion-free and motion-corrected) into patches, classifies each patch as either real or fake with a sigmoid activation, and finally averages the scores of all patches.

The network's loss function is composed of an adversarial loss (WGAN-GP) [9], a mean absolute error (MSE) loss, and a minimum entropy loss. WGAN-GP adopts the Wasserstein distance rather than the JS divergence of the original GAN, which avoids the vanishing gradient problem. The minimum entropy loss is based on the entropy focusing motion correction method [10]. As the motion amplitude increases, the entropy increases as well, as shown in Figure 1(d).
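The way the three loss terms combine can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the entropy follows the entropy focus criterion of Atkinson et al. [10], the pixel-wise term is taken here as mean absolute error (the abbreviation "MSE" in the text leaves open whether squared error was meant), and the function names and the weights `lambda_pix` and `lambda_ent` are hypothetical. The gradient penalty of WGAN-GP acts on the discriminator update and is omitted.

```python
import numpy as np

def image_entropy(img, eps=1e-12):
    """Entropy focus criterion: E = -sum_j (B_j / B_max) * ln(B_j / B_max)."""
    mag = np.abs(img).astype(np.float64).ravel()
    # B_max is the brightness one pixel would have if it held all image energy.
    b_max = np.sqrt(np.sum(mag ** 2))
    p = mag / (b_max + eps)
    return float(-np.sum(p * np.log(p + eps)))

def generator_loss(critic_score_fake, corrected, reference,
                   lambda_pix=100.0, lambda_ent=1.0):
    """Adversarial + pixel-wise + entropy terms (weights are assumed values)."""
    adv = -np.mean(critic_score_fake)               # Wasserstein generator term
    pix = np.mean(np.abs(corrected - reference))    # assumed L1 pixel loss
    ent = image_entropy(corrected)                  # penalizes ghosting and blur
    return float(adv + lambda_pix * pix + lambda_ent * ent)
```

A sharp image concentrates its energy in few pixels and scores a low entropy, while ghosting spreads energy over more pixels and raises it, which is why the term can act as a motion criterion. In a real training loop the same expression would be written with a differentiable framework so that gradients reach the generator.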

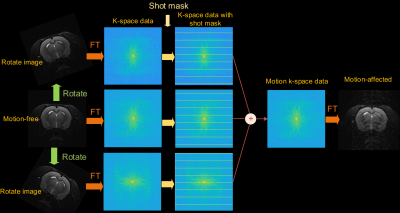

Paired motion-free and motion-affected datasets are often hard or impossible to acquire. We therefore propose a multi-pattern (Markov, periodic, completely random) motion simulation method to generate the training dataset. The motion simulation process is depicted in Figure 2(a).
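A simplified sketch of such a simulation is given below. It is an assumption-laden illustration rather than the procedure of Figure 2(a): only in-plane translation is modeled (rotation is left out), shots are assumed to fill interleaved phase-encode lines, and the function names, the random-walk step size, and the three-cycle periodic pattern are all hypothetical choices.

```python
import numpy as np

def motion_trajectory(n_shots, pattern, max_shift=2.0, rng=None):
    """Per-shot (dy, dx) translations in pixels for one of three patterns."""
    rng = np.random.default_rng() if rng is None else rng
    if pattern == "random":        # independent draw for every shot
        traj = rng.uniform(-max_shift, max_shift, size=(n_shots, 2))
    elif pattern == "periodic":    # sinusoidal oscillation with random phase
        t = np.arange(n_shots)[:, None]
        phase = rng.uniform(0, 2 * np.pi, size=2)
        traj = max_shift * np.sin(2 * np.pi * 3 * t / n_shots + phase)
    elif pattern == "markov":      # random walk: each pose depends on the last
        steps = rng.normal(0.0, max_shift / 4, size=(n_shots, 2))
        traj = np.clip(np.cumsum(steps, axis=0), -max_shift, max_shift)
    else:
        raise ValueError(f"unknown pattern: {pattern}")
    return traj

def simulate_multishot_motion(image, traj):
    """Corrupt an image by giving each interleaved shot its own translation."""
    n_shots = len(traj)
    ny, nx = image.shape
    ky = np.fft.fftfreq(ny)[:, None]    # spatial frequency, cycles per pixel
    kx = np.fft.fftfreq(nx)[None, :]
    k_clean = np.fft.fft2(image)
    k_corrupt = np.zeros_like(k_clean)
    for s, (dy, dx) in enumerate(traj):
        # Fourier shift theorem: a translation is a linear phase ramp in k-space.
        ramp = np.exp(-2j * np.pi * (ky * dy + kx * dx))
        lines = np.arange(s, ny, n_shots)   # lines acquired during shot s
        k_corrupt[lines, :] = (k_clean * ramp)[lines, :]
    return np.abs(np.fft.ifft2(k_corrupt))
```

Calling `simulate_multishot_motion(img, motion_trajectory(32, "markov"))` on a motion-free image `img` yields one motion-affected training input, with `img` itself serving as the paired target. A zero trajectory returns the original image, which is a convenient sanity check.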

The network was trained on a small motion range, and predictions were performed on a wider motion range, in both simulated and in vivo pre-clinical data, to test the generalization capability of our method. We also evaluated our method on a clinical dataset. The pre-clinical data are rat brain images collected with fast spin-echo (FSE) sequences on a Bruker 7.0 Tesla scanner in our laboratory. The clinical dataset is the public HCP brain dataset. Three quantitative metrics were used to evaluate the quality of the network's outputs: peak signal-to-noise ratio (PSNR), structural similarity index (SSIM), and mean-square error (MSE).
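For reference, two of these metrics follow directly from their standard definitions, as in the sketch below. The function names and the choice of the reference image's intensity range as the PSNR peak are assumptions; the abstract does not state which normalization was used.

```python
import numpy as np

def mse(ref, test):
    """Mean-square error between a reference and a test image."""
    ref = np.asarray(ref, dtype=np.float64)
    test = np.asarray(test, dtype=np.float64)
    return float(np.mean((ref - test) ** 2))

def psnr(ref, test, data_range=None):
    """Peak signal-to-noise ratio in dB: 10 * log10(range^2 / MSE)."""
    ref = np.asarray(ref, dtype=np.float64)
    if data_range is None:
        # Assumed convention: peak value taken from the reference image range.
        data_range = ref.max() - ref.min()
    err = mse(ref, test)
    if err == 0:
        return float("inf")   # identical images
    return float(10.0 * np.log10(data_range ** 2 / err))
```

SSIM involves local windowed statistics and is usually taken from a library; scikit-image, for example, provides `skimage.metrics.structural_similarity`.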

RESULTS

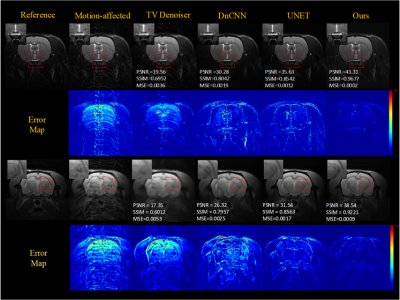

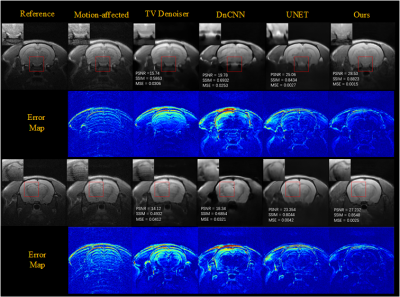

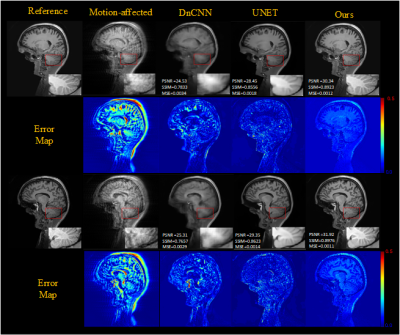

To evaluate the effect of motion level, motion artifacts of different levels were introduced into motion-free images with 32 shots and a completely random motion pattern. Figure 3 shows the results obtained on test data for two levels of rotational motion (Δθ = {1°, 2°}) and two levels of translational motion (ΔL = {1 mm, 2 mm}). It illustrates that our method removes artifacts efficiently and yields better images than the other methods (TV denoiser, DnCNN, UNET) across motion levels. Figure 4 shows the motion correction results of the generalization test on in vivo data. We performed two scans, one with motion and one without, the latter providing the reference images. Our method also performs better than the other methods on in vivo data.

Results of motion correction on the clinical dataset are shown in Figure 5. As the zoomed views of the brain stem show, our method produces higher-resolution results and removes more artifacts than the other methods.

DISCUSSION & CONCLUSION

We proposed a new end-to-end motion correction method based on cGAN and minimum image entropy for the multi-shot FSE sequence. The network is trained with simulated random rigid motion data and applied to both clinical and pre-clinical studies. Our method outperforms UNET, DnCNN, and the TV denoiser in qualitative and quantitative comparisons across the different motion levels of the pre-clinical and clinical datasets.

Acknowledgements

We gratefully acknowledge the financial support of the National Major Scientific Research Equipment Development Project of China (81627901), the National Key R&D Program of China (Grant 2018YFC0115000, 2016YFC1304702), the National Natural Science Foundation of China (11575287, 11705274), and the Chinese Academy of Sciences (YZ201677).

References

1. Usman M, Latif S, Asim M, Lee B. Retrospective Motion Correction in Multishot MRI using Generative Adversarial Network. Sci Rep. Published online 2020:1-11. doi:10.1038/s41598-020-61705-9

2. Ruppert K, Hill DLG, Batchelor PG, Holden M. reconstruction Motion correction in MRI of the brain. doi:10.1088/0031-9155/61/5/R32

3. Salem KA. MRI Hot Topics Motion Correction for MR Imaging s medical.

4. Pawar K, Chen Z, Shah NJ, Egan GF. Suppressing motion artefacts in MRI using an Inception-ResNet network with motion simulation augmentation. 2019;(October):1-14. doi:10.1002/nbm.4225

5. Haskell MW, Splitthoff DN, Cauley SF, Hossbach J, Wald LL. Network Accelerated Motion Estimation and Reduction (NAMER): Convolutional neural network guided retrospective motion correction using a separable motion model. 2019;(February):1452-1461. doi:10.1002/mrm.27771

6. Sommer K, Saalbach A, Brosch T, Hall C, Cross NM, Andre JB. Correction of motion artifacts using a multiscale fully convolutional neural network. Am J Neuroradiol. 2020;41(3):416-423. doi:10.3174/ajnr.A6436

7. Luo J, Huang J. Generative adversarial network: An overview. Yi Qi Yi Biao Xue Bao/Chinese J Sci Instrum. Published online 2019. doi:10.19650/j.cnki.cjsi.J1804413

8. Kupyn O, Budzan V, Mykhailych M, Mishkin D. DeblurGAN: Blind Motion Deblurring Using Conditional Adversarial Networks.

9. Gulrajani I, Ahmed F, Arjovsky M, Dumoulin V, Courville A. Improved training of Wasserstein GANs. In: Advances in Neural Information Processing Systems; 2017.

10. Atkinson D, Hill DLG, Stoyle PNR, Summers PE, Keevil SF. Automatic correction of motion artifacts in magnetic resonance images using an entropy focus criterion. IEEE Trans Med Imaging. Published online 1997. doi:10.1109/42.650886

Figures