1352

DeepResp: Deep Neural Network for respiration-induced artifact correction in 2D multi-slice GRE

1Department of Electrical and Computer Engineering, Seoul National University, Seoul, Korea, Republic of, 2Division of Biomedical Engineering, Hankuk University of Foreign Studies, Gyeonggi-do, Korea, Republic of

Synopsis

Respiration-induced B0 fluctuation can generate artifacts by inducing phase errors. In this study, a new deep-learning method, DeepResp, is proposed to correct for these artifacts in multi-slice GRE images without any modification of the sequence or hardware. DeepResp is designed to extract the phase errors from a corrupted image using deep neural networks. The extracted phase errors are then applied to the k-space data to generate an artifact-corrected image. When tested, DeepResp successfully reduced the artifacts in in-vivo images, improving the normalized root-mean-square error (deep breathing: from 13.9 ± 4.6% to 5.8 ± 1.4%; natural breathing: from 5.2 ± 3.3% to 4.0 ± 2.5%).

Introduction

A number of approaches, such as navigator echoes [1] and external tracking devices [2], have been proposed to correct for respiration-induced B0 fluctuation artifacts. However, these methods require modification of software or hardware. Recently, deep learning has been widely applied to artifact correction, but no study has proposed correcting this type of artifact. In our previous abstract, we suggested a method [3] that estimated sinusoidal phase errors from simulated single-slice images, demonstrating a reduction of the artifacts. In this study, we propose a new deep learning solution, DeepResp, to correct for (non-sinusoidal) respiration-induced artifacts in in-vivo multi-slice GRE images. The method is tested using multi-echo data from 3T and 7T scanners.

Methods

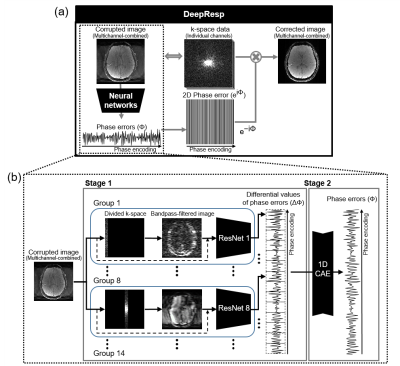

[DeepResp]

DeepResp was designed to extract the respiration-induced phase errors from a multichannel-combined complex image using deep neural networks (Fig. 1). The network-generated phase errors were conjugated and applied to the k-space of each channel, correcting for the errors. Finally, the multichannel k-space data were reconstructed to an artifact-corrected image.
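The correction step described above can be illustrated with a minimal NumPy sketch. It assumes one phase error per phase-encoding line (constant along the readout direction), a k-space array laid out as (channel, phase-encoding, readout), and a root-sum-of-squares channel combination; the function names, the array layout, and the combination method are illustrative assumptions and are not specified in this abstract.

```python
import numpy as np

def apply_phase_correction(kspace, phase_errors):
    """Multiply each phase-encoding line by the conjugate of its phase error.

    kspace:       complex array, shape (n_channels, n_pe, n_readout) (assumed layout)
    phase_errors: real array, shape (n_pe,), in radians
    """
    # Conjugate of exp(+1j * phase) is exp(-1j * phase).
    correction = np.exp(-1j * phase_errors)
    return kspace * correction[None, :, None]

def reconstruct(kspace):
    """Centered 2D inverse FFT per channel, then root-sum-of-squares (assumption)."""
    axes = (-2, -1)
    images = np.fft.fftshift(
        np.fft.ifft2(np.fft.ifftshift(kspace, axes=axes), axes=axes), axes=axes
    )
    return np.sqrt(np.sum(np.abs(images) ** 2, axis=0))
```

In this sketch the same phase vector is applied to every channel, which matches the description that the errors are estimated once from the channel-combined image and then applied to the k-space of each channel.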

[Deep neural networks]

DeepResp had two stages of neural networks (Fig. 1b). In the first stage, groups of networks generated “differential values” of the phase errors, i.e., the phase differences between neighboring k-space lines, as the output. Each group contained a modified ResNet50 [4], which utilized both the original input image and a bandpass-filtered image consisting of the phase-encoding (PE) lines that carry information about the output differential values. The second stage was designed to accumulate the differential values into the respiration-induced phase errors using a 1D convolutional autoencoder.
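To make the relationship between the two stages concrete, the sketch below shows the noise-free link between phase errors and their differential values. It is not the 1D convolutional autoencoder used in DeepResp: a plain cumulative sum is swapped in here only to illustrate what quantity the second stage recovers. The function names and the `first_value` parameter are hypothetical.

```python
import numpy as np

def to_differential(phase_errors):
    """Phase difference between neighboring k-space lines."""
    return np.diff(phase_errors)

def accumulate(differential, first_value=0.0):
    """Ideal (noise-free) accumulation by cumulative sum.

    The constant offset is not contained in the differences, so it must be
    supplied separately (first_value is an illustrative assumption).
    """
    return first_value + np.concatenate(([0.0], np.cumsum(differential)))
```

With noisy network outputs, a direct cumulative sum would also accumulate the estimation errors along the phase-encoding direction, which is one plausible motivation for a learned accumulation stage.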

[Training Dataset]

To train the networks, complex-valued GRE images of 18 subjects from Yoon et al. [5] and Jung et al. [6] were utilized, for a total of 1,655 2D complex images. The respiration data were measured using a temperature sensor (390 seconds). A median filter and a bandpass filter (passband: 0.1 Hz to 1 Hz) were applied to reduce noise. Using the GRE images and the respiration data, respiration-corrupted images were simulated for the training dataset. The respiration data were sampled with a TR of 1.2 sec, and the sampled data were scaled to have a peak amplitude between 0.03 rad and 0.63 rad. The data were then reformatted into a 2D phase error matrix, which was multiplied with the k-space of a randomly chosen 2D complex image. Using this procedure, 1 million pairs of respiration-corrupted images and phase errors were simulated.
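The simulation procedure can be sketched roughly as follows. Several details are assumptions made for illustration and are not stated in this abstract: the phase-encoding lines are taken to be acquired sequentially with one line per TR, the phase error matrix is applied as exp(i·phase) and is constant along the readout direction, and the respiration trace is resampled by linear interpolation. The function and parameter names are hypothetical.

```python
import numpy as np

def simulate_corruption(image, respiration, sample_rate_hz,
                        tr_s=1.2, peak_rad=0.3, start_s=0.0):
    """Simulate a respiration-corrupted image from a clean complex 2D image.

    image:       complex array, shape (n_pe, n_readout) (axis 0 = PE, assumed)
    respiration: filtered respiration trace (1D real array)
    peak_rad:    target peak phase amplitude; the abstract reports 0.03 to 0.63 rad
    """
    n_pe, n_ro = image.shape
    # Sample the respiration trace once per TR (one PE line per TR, assumed).
    t_resp = np.arange(respiration.size) / sample_rate_hz
    t_lines = start_s + tr_s * np.arange(n_pe)
    phase = np.interp(t_lines, t_resp, respiration)
    # Scale so the peak absolute phase equals peak_rad.
    phase = phase * (peak_rad / np.max(np.abs(phase)))
    # Reformat to a 2D phase error matrix, constant along the readout axis.
    phase_matrix = np.tile(phase[:, None], (1, n_ro))
    # Centered FFT to k-space, apply the phase errors, transform back.
    kspace = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(image)))
    corrupted_k = kspace * np.exp(1j * phase_matrix)
    corrupted = np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(corrupted_k)))
    return corrupted, phase
```

Drawing `peak_rad` and `start_s` at random for each call would give one way to generate many distinct image and phase-error pairs from a limited set of images and respiration recordings.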

[Evaluation]

The evaluation of DeepResp was performed using newly acquired in-vivo data from 10 subjects, who were scanned using a multi-slice GRE sequence [7] at 3T. The sequence contained a navigator echo, generating reference phase errors. The subjects were instructed to breathe naturally for the first scan and then to breathe deeply for the second scan to test the two different breathing conditions. The scan parameters were as follows: TR = 1.2 sec, TEs from 6.9 ms to 41.5 ms (7 echoes) for the images and 55.0 ms for the navigator, flip angle = 70°, FOV = 224 × 224 mm², in-plane resolution = 1 × 1 mm², slice thickness = 2 mm, and a total of 178 slices. As an additional evaluation, DeepResp was applied to 7T data (2 subjects) [8] to demonstrate applicability at ultra-high field, which induces larger respiration-induced artifacts. After the correction, the respiration-corrected images were evaluated by normalized root-mean-square error (NRMSE) and structural similarity (SSIM), with the navigator-corrected images as references.
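As an illustration of the NRMSE metric, the sketch below compares a corrected magnitude image against a reference. The abstract does not state which normalization was used; normalizing by the L2 norm of the reference image and comparing magnitudes are assumptions here, and the function name is hypothetical.

```python
import numpy as np

def nrmse_percent(image, reference):
    """NRMSE in percent, normalized by the L2 norm of the reference (assumed)."""
    image = np.abs(image)          # compare magnitude images (assumption)
    reference = np.abs(reference)
    error = np.linalg.norm(image - reference)
    return 100.0 * error / np.linalg.norm(reference)
```

In the evaluation described above, `reference` would correspond to the navigator-corrected image and `image` to either the uncorrected or the DeepResp-corrected image.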

Results

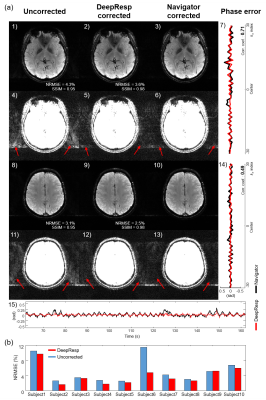

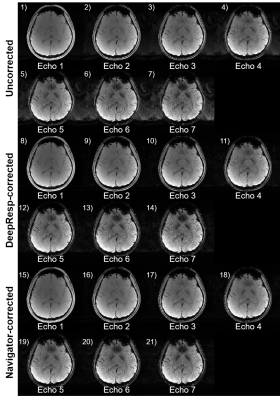

Figures 2 and 3 show the correction results and the estimated phase errors from DeepResp and the navigator in the two breathing conditions (the last echo images of the 3T data). In the deep breathing condition (Fig. 2), the uncorrected images revealed artifacts that were successfully removed after the corrections. In the natural breathing condition (Fig. 3), artifacts outside of the brain were substantially reduced after the corrections. The mean quantitative metrics of all subjects were substantially improved after the correction using DeepResp. NRMSE was reduced from 13.9 ± 4.6% to 5.8 ± 1.4% (deep breathing) and from 5.2 ± 3.3% to 4.0 ± 2.5% (natural breathing). SSIM was improved from 0.86 ± 0.03 to 0.95 ± 0.01 (deep breathing) and from 0.94 ± 0.04 to 0.97 ± 0.02 (natural breathing). The mean correlation coefficient of the phase was 0.83 ± 0.13 (deep breathing) and 0.55 ± 0.24 (natural breathing).

The correction results of all echoes are illustrated in Figure 4 for the deep breathing condition, showing that the artifact reduction generalizes across echoes.

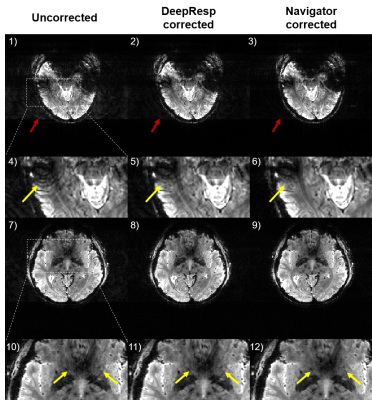

Finally, the correction results of the 7T data are displayed in Figure 5, demonstrating the effectiveness of the method at ultra-high field (NRMSE: from 7.0 ± 2.5% to 5.3 ± 2.2%; SSIM: from 0.93 ± 0.02 to 0.96 ± 0.02).

The inference time for the neural networks was only 0.57 ± 0.01 sec for the whole brain (18 slices) when using a single GPU.

Discussion and Conclusion

In this work, a new deep-learning-powered artifact correction method that compensates for the B0 fluctuation from respiration was proposed. The method extracted the respiration-induced phase errors from a multi-slice GRE image with no additional information. The results revealed significantly reduced respiration-induced artifacts in in-vivo images. Compared with end-to-end deep learning methods, our network is designed for a well-characterized respiration function and, therefore, the result is interpretable.

Acknowledgements

This work was supported by the National Research Foundation of Korea (NRF-2017M3C7A1047864, NRF-2018R1A2B3008445, NRF-2018R1A4A1025891, and NRF-2020R1A2C4001623) and the Institute of Engineering Research at Seoul National University.

References

[1] Ehman, R.L., Felmlee, J.P., 1989. Adaptive technique for high-definition MR imaging of moving structures. Radiology 173, 255-263.

[2] Ehman, R.L., McNamara, M., Pallack, M., Hricak, H., Higgins, C., 1984. Magnetic resonance imaging with respiratory gating: techniques and advantages. American Journal of Roentgenology 143, 1175-1182.

[3] An, H., Shin, H.-G., Jung, W., Lee, J., 2020. DeepRespi: Retrospective correction for respiration-induced B0 fluctuation artifacts using deep learning. Proceedings of the 28th Annual Meeting of the ISMRM 0673.

[4] He, K., Zhang, X., Ren, S., Sun, J., 2016. Deep residual learning for image recognition. CVPR, 770-778.

[5] Yoon, J., Gong, E., Chatnuntawech, I., Bilgic, B., Lee, Jingu, Jung, W., Ko, J., Jung, H., Setsompop, K., Zaharchuk, G., Kim, E.Y., Pauly, J., Lee, Jongho, 2018. Quantitative susceptibility mapping using deep neural network: QSMnet. NeuroImage 179, 199–206.

[6] Jung, W., Yoon, J., Ji, S., Choi, J.Y., Kim, J.M., Nam, Y., Kim, E.Y., Lee, J., 2020. Exploring linearity of deep neural network trained QSM: QSMnet+. NeuroImage 211, 116619.

[7] Nam, Y., Kim, D.-H., Lee, J., 2015. Physiological noise compensation in gradient-echo myelin water imaging. NeuroImage 120, 345-349.

[8] Shin, H.-G., Oh, S.-H., Fukunaga, M., Nam, Y., Lee, D., Jung, W., Jo, M., Ji, S., Choi, J.Y., Lee, J., 2019. Advances in gradient echo myelin water imaging at 3T and 7T. NeuroImage 188, 835-844.
