1341

Simultaneous Reconstruction of High-resolution Multi b-value DWI with Single-shot Acquisition

Fanwen Wang1, Hui Zhang1, Fei Dai1, Weibo Chen2, Chengyan Wang3, and He Wang1,3

1Institute of Science and Technology for Brain-Inspired Intelligence, Fudan University, Shanghai, China, 2Philips Healthcare, Shanghai, China, 3Human Phenome Institute, Fudan University, Shanghai, China

Synopsis

Single-shot echo planar imaging (EPI) is the standard method for diffusion MRI. Multi-shot EPI (MS-EPI) offers higher spatial resolution but suffers from longer scan times and more severe shot-to-shot phase variations. This study proposes a method that reconstructs four-shot high-resolution DWIs from one-shot data for multiple b-values simultaneously, enabling physiological features to be transferred across b-values. Trained on healthy volunteers only, the network recovered aliased one-shot images, which are sampled below the Nyquist rate, in both healthy volunteers and tumor patients, indicating that it generalizes to clinical cases.

Introduction

With great potential for probing tissue cellularity and detecting acute stroke non-invasively, DWI is widely used in clinical assessment. Compared with single-shot DWI, multi-shot DWI offers less distortion, sub-millimeter resolution and shorter echo times, but it is hampered by phase errors and by longer scanning and reconstruction times. We therefore propose a method that jointly recovers high-resolution multi-b-value DWIs from single-shot data and yields high-quality apparent diffusion coefficient (ADC) maps that generalize to clinical cases.

Methods

Datasets

The study recruited 32 healthy volunteers, with 16 slices acquired per subject. Subjects were randomly assigned to training (subjects/slices = 22/352), validation (5/80) and testing (5/80) sets. Tumor patients with lymphatic metastasis were recruited additionally for testing. All data were acquired on a 3.0 T MRI scanner (Ingenia CX, Philips Healthcare, Best, the Netherlands) equipped with a 32-channel head coil. MS-DWI was obtained using a four-shot interleaved EPI sequence without a navigator, with the following parameters: TE, 75 ms; TR, 2800 ms; matrix size, 228×228; slice thickness, 4 mm; partial Fourier factor, 0.702; voxel size, 1.0×1.0 mm². Both SS-DWI and MS-DWI were acquired with four b-values of 0, 500, 750 and 1000 s/mm², applied in the anterior-posterior direction. SS-DWI used the same EPI sequence as MS-DWI, but with SENSE at an acceleration factor of 2.
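To illustrate why a single shot of a four-shot interleaved acquisition produces aliased images, the following minimal sketch keeps every fourth phase-encode line of a toy k-space. It is an illustration under stated assumptions, not the acquisition or preprocessing used in the study: the helper names (`shot_mask`, `fft2c`, `ifft2c`), the 64×64 toy object and the simple line-interleaving pattern are hypothetical, and partial Fourier and multi-coil effects are ignored.

```python
import numpy as np

def fft2c(img):
    """Centered, orthonormal 2D FFT (image -> k-space)."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(img), norm="ortho"))

def ifft2c(ksp):
    """Centered, orthonormal 2D inverse FFT (k-space -> image)."""
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(ksp), norm="ortho"))

def shot_mask(n_ky, n_kx, n_shots=4, shot=0):
    """Boolean mask selecting the phase-encode lines of one interleave.

    Assumption: shot s covers lines s, s + n_shots, s + 2 * n_shots, ...
    """
    mask = np.zeros((n_ky, n_kx), dtype=bool)
    mask[shot::n_shots, :] = True
    return mask

# Toy object: a rectangle whose height equals one quarter of the field of view.
n = 64
img = np.zeros((n, n))
img[24:40, 20:44] = 1.0

ksp = fft2c(img)                 # fully sampled (four-shot) k-space
mask = shot_mask(n, n, n_shots=4, shot=0)
aliased = ifft2c(ksp * mask)     # zero-filled reconstruction of one shot

# Keeping one line in four folds the object into four replicas spaced by
# a quarter of the field of view, each with one quarter of the amplitude.
print("sampled fraction:", mask.mean())
print("max |aliased|:", np.abs(aliased).max())
```

In this toy case the four replicas tile the phase-encode direction without overlapping; in a real brain image they overlap, which is the aliasing the network has to remove.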

MUSE Reconstruction

Ground-truth images for network training were generated with MUSE [1], which estimates the motion-induced phase variations among the shots and then jointly calculates the magnitude signals of aliased voxels from all segments.

Network Setup

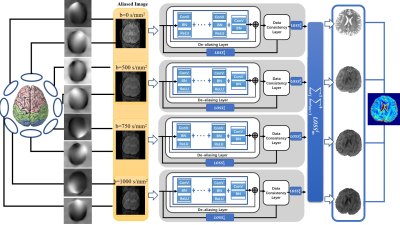

We extended a cascaded CNN (Figure 1) into a multi-channel setup: $$\{u_j\}_{j=1}^{m}=\arg\min_{u_1,\dots,u_m}\sum_{j=1}^{m}\left(\|Eu_j-f_j\|_2^{2}+\lambda_j\|u_j-D(u_j;\theta_j)\|_2^{2}\right)$$ where $$$D(u_j;\theta_j)$$$ is a residual network for the jth channel with parameters $$$\theta_j$$$, $$$u_j$$$ is the predicted image for the jth b-value, $$$f_j$$$ is the corresponding undersampled k-space data, $$$E$$$ is the encoding operator, $$$\lambda_j$$$ weights the de-aliasing term against data fidelity, and $$$m=4$$$ is the number of b-values.

The proposed model is an end-to-end multi-channel network with a data consistency layer and a de-aliasing layer [2,3]. Single-shot images with b-values of 0, 500, 750 and 1000 s/mm² and the corresponding coil sensitivity maps are fed into the network. The real and imaginary parts of the images are then reconstructed by two separate networks, and the final outputs are joined to form a coil-combined complex image. Each de-aliasing layer contains five convolutional layers, each with 64 filters of size 3×3 and a stride of 1. The first four convolutional layers are followed by batch normalization and ReLU; the last layer has no ReLU. The losses of the four channels are summed and back-propagated jointly to obtain the best weighting between data fidelity and de-aliasing for each channel.

The model was implemented in TensorFlow using four GPUs (32 GB memory each) of an NVIDIA Tesla DGX system. The filter weights of each layer were initialized with Xavier initialization [4] and updated using the ADAM optimizer [5] with a fixed learning rate of 10⁻³. Training ran for 1000 epochs and took about 50 hours.
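The role of a data consistency layer can be sketched with a simplified single-coil example. For a fixed de-aliased image, the objective above has a closed-form minimizer in k-space: unsampled locations keep the network prediction, and sampled locations take a weighted average of the prediction and the measured data. The code below is a minimal NumPy sketch under stated assumptions, not the authors' TensorFlow implementation: it uses a plain Fourier transform with a sampling mask in place of the multi-coil encoding operator, and the names `data_consistency`, `fft2c`, `ifft2c` and `lam` are hypothetical.

```python
import numpy as np

def fft2c(img):
    """Centered, orthonormal 2D FFT (image -> k-space)."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(img), norm="ortho"))

def ifft2c(ksp):
    """Centered, orthonormal 2D inverse FFT (k-space -> image)."""
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(ksp), norm="ortho"))

def data_consistency(u_cnn, f, mask, lam=0.0):
    """Single-coil data consistency step (simplifying assumption).

    u_cnn : complex image produced by the de-aliasing network
    f     : measured k-space, zero where not sampled
    mask  : boolean array, True where k-space was sampled
    lam   : weight of the de-aliasing term; lam = 0 replaces sampled
            locations with the measured data, larger lam trusts the CNN more
    """
    k_cnn = fft2c(u_cnn)
    # Minimizer of |k - f|^2 + lam * |k - k_cnn|^2 at sampled locations.
    k_sampled = (f + lam * k_cnn) / (1.0 + lam)
    k_dc = np.where(mask, k_sampled, k_cnn)
    return ifft2c(k_dc)

# Toy demonstration with synthetic data (not study data).
rng = np.random.default_rng(0)
x = rng.standard_normal((32, 32)) + 1j * rng.standard_normal((32, 32))
mask = np.zeros((32, 32), dtype=bool)
mask[::4, :] = True                              # one line in four is measured
f = fft2c(x) * mask                              # measured k-space
u = x + 0.1 * rng.standard_normal((32, 32))      # stand-in for a CNN output
out = data_consistency(u, f, mask, lam=0.0)
```

With `lam=0.0` the output agrees exactly with the measurements wherever data were acquired, while the network alone determines the missing lines.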

Evaluation Metrics

The proposed network was compared with ESPIRiT [6] and a single-channel ResNet. Reconstruction performance was measured by the peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM). ADC maps were obtained by fitting a pixel-wise mono-exponential function to the DWIs acquired at the different b-values.
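The mono-exponential model is $$$S(b)=S_0e^{-b\cdot ADC}$$$, so taking the logarithm turns the pixel-wise fit into a linear least-squares problem. The sketch below shows one common way to do this, together with a simple PSNR, in NumPy. It is an assumption-laden illustration rather than the fitting code used in the study: the log-linear solver, the function names `fit_adc` and `psnr`, the clipping constant `eps`, and the choice of the reference maximum as the PSNR peak are all hypothetical choices.

```python
import numpy as np

def fit_adc(dwi, bvals, eps=1e-8):
    """Pixel-wise log-linear fit of S(b) = S0 * exp(-b * ADC).

    dwi   : magnitude images, shape (n_b, H, W)
    bvals : b-values in s/mm^2, shape (n_b,)
    Returns (adc, s0), each of shape (H, W); adc is in mm^2/s.
    """
    b = np.asarray(bvals, dtype=float)
    # Clip to avoid log(0) in background pixels.
    log_s = np.log(np.maximum(dwi, eps)).reshape(len(b), -1)
    # ln S = ln S0 - b * ADC, solved for all pixels at once.
    design = np.stack([np.ones_like(b), -b], axis=1)
    coef, *_ = np.linalg.lstsq(design, log_s, rcond=None)
    ln_s0, adc = coef
    shape = dwi.shape[1:]
    return adc.reshape(shape), np.exp(ln_s0).reshape(shape)

def psnr(ref, rec):
    """PSNR in dB on magnitudes, using the reference maximum as the peak."""
    ref, rec = np.abs(ref), np.abs(rec)
    mse = np.mean((ref - rec) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(ref.max() ** 2 / mse)

# Synthetic check with the four b-values used in the study.
bvals = np.array([0.0, 500.0, 750.0, 1000.0])
true_adc = np.full((8, 8), 0.8e-3)     # illustrative value in mm^2/s
true_s0 = np.full((8, 8), 1000.0)
dwi = true_s0 * np.exp(-bvals[:, None, None] * true_adc)
adc, s0 = fit_adc(dwi, bvals)
```

On noise-free synthetic data the fit returns the ADC and S0 that generated the signal; with real data, noise at high b-values biases a log-linear fit, so a weighted or nonlinear fit may be preferable.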

Results

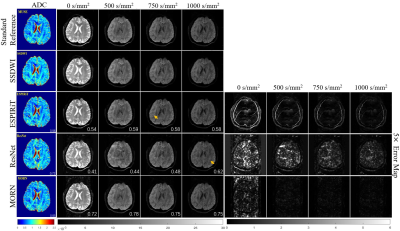

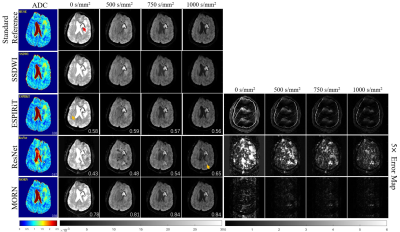

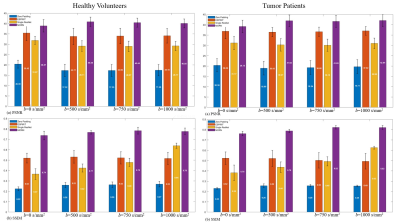

Compared with SS-DWI, the proposed method achieved much higher spatial resolution within the single-shot scanning time (Figure 2 and Figure 4). Aliasing artifacts were clearly visible in the error map of the ESPIRiT reconstruction (SSIM = 0.58 for b = 1000 s/mm²). The single-channel ResNet converged faster during training but showed severe over-smoothing, with lower SSIM and PSNR than the proposed method at every b-value (SSIM = 0.62 for b = 1000 s/mm²). By removing the aliasing artifacts, the proposed method achieved accurate reconstruction and outperformed ESPIRiT and the single-channel ResNet on all quality metrics at all b-values (SSIM = 0.75 for b = 1000 s/mm²).

Tumor patients with lymphatic metastasis were further evaluated to test model generalization (Figure 3, Figure 4 and Figure 5). No pathological images were used for training. Compared with SS-DWI at the same scanning time, ESPIRiT provided better reconstruction, with a higher SSIM of 0.56 at b = 1000 s/mm², but it left artifacts, indicated by an orange arrow. Aliasing of the tumor was also obvious in the ResNet result, as indicated by an orange arrow. The proposed method demonstrated superior reconstruction and better-defined brain tumor textures at all b-values.

ADC maps were then computed for the tumor case with each method (Figure 3 and Figure 5). The single-channel ResNet over-smoothed the cerebrospinal fluid region, and ESPIRiT still showed structural artifacts, indicated by arrows. The proposed method recovered the brain texture with the highest SSIM of 0.94.

Conclusion

We proposed a multi-channel CNN that reconstructs four-shot high-resolution DWIs from single-shot data for multiple b-values simultaneously. By cascading data consistency layers with ResNet-based de-aliasing layers, the model recovered high-resolution, artifact-free images using only one-fourth of the scanning time. Although the model was trained only on healthy volunteers, it recovered the pathological details of tumor patients, demonstrating good generalization.

Acknowledgements

The work was supported in part by the National Natural Science Foundation of China (No. 81971583), National Key R&D Program of China (No. 2018YFC1312900), Shanghai Natural Science Foundation (No. 20ZR1406400), Shanghai Municipal Science and Technology Major Project (No. 2017SHZDZX01, No. 2018SHZDZX01) and ZJLab.

References

1. Chen NK, Guidon A, Chang HC, Song AW. A robust multi-shot scan strategy for high-resolution diffusion weighted MRI enabled by multiplexed sensitivity-encoding (MUSE). NeuroImage. 2013;72:41-47.

2. Aggarwal HK, Mani MP, Jacob M. MoDL: Model-based deep learning architecture for inverse problems. IEEE Trans Med Imaging. 2018;38(2):394-405.

3. Schlemper J, Caballero J, Hajnal JV, Price AN, Rueckert D. A deep cascade of convolutional neural networks for dynamic MR image reconstruction. IEEE Trans Med Imaging. 2017;37(2):491-503.

4. Glorot X, Bengio Y. Understanding the difficulty of training deep feedforward neural networks. Paper presented at: Int. Conf. Artif. Intell. Stat.; 2010.

5. Kingma DP, Ba J. Adam: A method for stochastic optimization. arXiv:1412.6980. 2014. https://arxiv.org/abs/1412.6980.

6. Uecker M, Lai P, Murphy MJ, et al. ESPIRiT: an eigenvalue approach to autocalibrating parallel MRI: where SENSE meets GRAPPA. Magn Reson Med. 2014;71(3):990-1001.

Figures

Figure 1: The proposed model for multi-coil high-resolution DWI reconstruction. The network combines the losses from four channels for back-propagation. Each channel consists of a de-aliasing layer and a data consistency layer to recover from aliasing artifacts.

Figure 2: Comparison of reconstructed images for multiple b-values in healthy volunteers. The calculated ADC maps are shown leftmost. The b-values are listed above each column. SSIMs are listed at the bottom right of each image. The rightmost four columns show the corresponding error maps, amplified ×5 for better visualization. Aliasing artifacts are indicated by arrows.

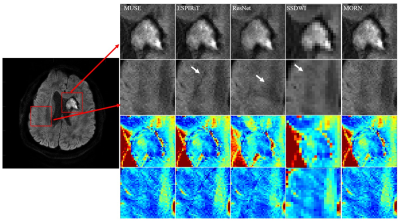

Figure 3: Comparison of reconstructed images for multiple b-values in tumor patients. The calculated ADC maps are shown leftmost. The b-values are listed above each column. SSIMs are listed at the bottom right of each image. The rightmost four columns show the corresponding error maps, amplified ×5 for better visualization. The tumor lesion is indicated by a red arrow, and aliasing artifacts are indicated by orange arrows.

Figure 4: Quantitative metrics of reconstructed images for multiple b-values in healthy volunteers and tumor patients. The test datasets were evaluated with zero padding, ESPIRiT, the single-channel ResNet and the proposed method.

Figure 5: Zoomed high b-value reconstructions and the corresponding regions in the ADC maps. Artifacts in ESPIRiT, ResNet and SS-DWI are indicated by arrows.