1277

Interleaved Black- and Bright-Blood Acquisition for Automatic Brain Metastasis Detection using Deep Learning Convolutional Neural Network

¹Philips Japan, Tokyo, Japan; ²Departments of Clinical Radiology, Graduate School of Medical Sciences, Kyushu University, Fukuoka, Japan; ³Philips GmbH Innovative Technologies, Aachen, Germany; ⁴Philips Healthcare, Tokyo, Japan

Synopsis

Volume isotropic simultaneous interleaved bright- and black-blood examination (VISIBLE) is an established approach for brain metastasis screening. We investigated whether using VISIBLE images as input to a deep learning model improves automatic metastasis detection compared with single-contrast images. The results suggest that VISIBLE is useful for automatic metastasis detection.

Introduction

Brain metastases (BM) are among the most common neurological complications of cancer, occurring in up to one-third of patients with cancer [1]. Accurate diagnosis is crucial because the therapeutic strategy depends on the size and number of BM. There is therefore increasing interest in automatic BM detection using deep learning [2-6]. A general challenge in automatic detection is to achieve both high sensitivity and high specificity, that is, to detect small lesions with clinically reliable sensitivity while keeping the number of false positives (#FPs) low. This is critical in BM detection because the number of lesions is directly related to the treatment strategy [1].

Volume isotropic simultaneous interleaved bright- and black-blood examination (VISIBLE) is an established approach for BM screening [7]. Reading tests by radiologists have shown that VISIBLE provides high sensitivity, fewer #FPs and shorter reading time compared with single-contrast black-blood (BLACK) or bright-blood (BRIGHT) images. We therefore expected that using VISIBLE images as input would also improve the sensitivity and specificity of deep learning (DL) based automatic detection.

The aim of this study was to investigate whether automatic metastasis detection with a deep convolutional neural network (CNN) trained on VISIBLE images has advantages over a CNN trained on BLACK-only or BRIGHT-only images. Detection performance was also evaluated as a function of lesion size.

Materials and Methods

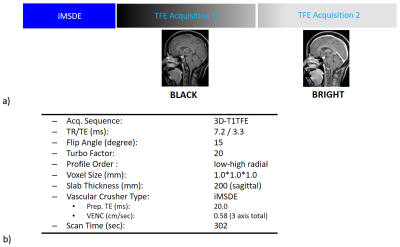

VISIBLE Strategy

The VISIBLE scheme is illustrated in Figure 1a. BLACK and BRIGHT images are acquired in an interleaved manner. To suppress blood signal, improved motion-sensitized driven-equilibrium (iMSDE) preparation is applied immediately before the readout, which consists of two consecutive acquisitions. Blood signal is suppressed in the first acquisition because its data are acquired immediately after iMSDE. Conversely, blood signal is higher in the second acquisition because of T1 recovery. Radiologists first read BLACK for lesion detection, since confounding vessel signal is mostly suppressed there, and then use BRIGHT to check whether a hyperintensity detected in BLACK is a true lesion or residual blood signal. This strategy has been shown to improve diagnostic performance in terms of sensitivity, #FPs and radiologist reading time [7].
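Why the two readouts differ in blood signal can be illustrated with a simple saturation-recovery model. This is a minimal sketch, assuming that iMSDE leaves flowing blood with approximately zero longitudinal magnetization, and ignoring inflow of fresh blood and the T1 weighting of the TFE readout itself. The blood T1 of 1650 ms and the two readout delays are illustrative assumptions, not the sequence parameters of Figure 1b.

```python
import math

# Assumed value for illustration: arterial blood T1 at 3.0 T, in the range
# commonly reported in the literature. Not taken from this study.
BLOOD_T1_MS = 1650.0


def blood_mz_fraction(t_ms: float, t1_ms: float = BLOOD_T1_MS, mz0: float = 0.0) -> float:
    """Return Mz/M0 of blood at t_ms after a preparation leaving Mz/M0 = mz0.

    Saturation recovery: Mz(t)/M0 = 1 - (1 - mz0) * exp(-t / T1).
    With mz0 = 0 this models blood assumed to be fully saturated by iMSDE.
    """
    return 1.0 - (1.0 - mz0) * math.exp(-t_ms / t1_ms)


if __name__ == "__main__":
    # Hypothetical delays after iMSDE for the first (BLACK) and second (BRIGHT) readouts.
    for label, delay_ms in [("BLACK readout", 50.0), ("BRIGHT readout", 800.0)]:
        print(f"{label}: blood Mz/M0 ~ {blood_mz_fraction(delay_ms):.2f}")
```

Under these assumptions blood magnetization is still near zero at the first readout and has partially recovered by the second, which matches the qualitative behaviour described above; the actual contrast also depends on inflow and readout parameters not modelled here.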

Magnetic Resonance (MR) Experiments

The VISIBLE scheme was implemented on a 3.0T Ingenia scanner (Philips, Best, The Netherlands) using a 3D gradient-echo sequence (Turbo Field Echo, TFE). Detailed sequence parameters are summarized in Figure 1b. Data from VISIBLE examinations of 71 patients with BM were used retrospectively in this study.

DL Model Architecture

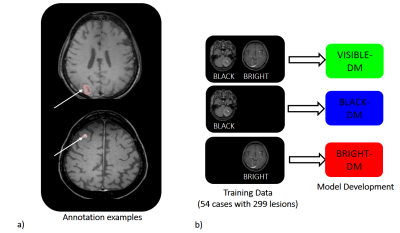

Ground-truth segmentations of all lesions used for DL training and testing were annotated by two radiologists in consensus using IntelliSpace-Discovery Version 3 (Philips Healthcare, Best, The Netherlands); annotation examples are shown in Figure 2a. A 3D-CNN architecture based on DeepMedic (DM), originally developed for brain lesion segmentation [8], was trained for automatic BM detection and segmentation on data from 54 patients with 299 metastases. Three models were trained with different input data, as illustrated in Figure 2b: black and bright images together (VISIBLE-DM), black images only (BLACK-DM) and bright images only (BRIGHT-DM). The three models were tested on data from the remaining 17 patients, with a total of 48 metastases, and compared in terms of sensitivity, #FPs per case (#FPs/case) and Dice similarity coefficient (DICE).
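The difference between the three model inputs can be sketched as the construction of a channels-first input array. This is a minimal sketch, assuming that VISIBLE-DM receives BLACK and BRIGHT as two separate input channels and that each volume is z-score normalized; the function names `zscore` and `build_input` are hypothetical, and the actual preprocessing used in this study is not specified here. Because both contrasts come from one interleaved acquisition, the sketch assumes they are already voxel-aligned and omits registration.

```python
import numpy as np


def zscore(volume: np.ndarray) -> np.ndarray:
    """Normalize a 3D volume to zero mean and unit variance (assumed preprocessing)."""
    std = volume.std()
    return (volume - volume.mean()) / (std if std > 0 else 1.0)


def build_input(black: np.ndarray, bright: np.ndarray, mode: str) -> np.ndarray:
    """Return a (C, Z, Y, X) array for one of the three hypothetical model variants.

    "VISIBLE" -> 2 channels (BLACK, BRIGHT); "BLACK" or "BRIGHT" -> 1 channel.
    """
    if black.shape != bright.shape:
        raise ValueError("BLACK and BRIGHT volumes must share the same voxel grid")
    channels = {
        "VISIBLE": [black, bright],
        "BLACK": [black],
        "BRIGHT": [bright],
    }[mode]
    # Stack the normalized contrasts along a new leading channel axis.
    return np.stack([zscore(c.astype(np.float32)) for c in channels], axis=0)


rng = np.random.default_rng(0)
black = rng.random((32, 32, 32))
bright = rng.random((32, 32, 32))
print(build_input(black, bright, "VISIBLE").shape)  # (2, 32, 32, 32)
```

Keeping the network architecture fixed and changing only the number of input channels is what allows the three models to be compared on input contrast alone.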

Results

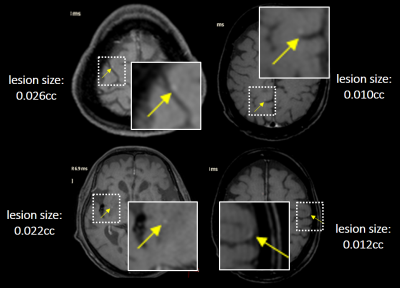

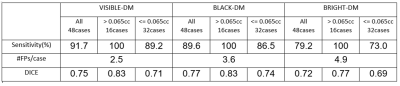

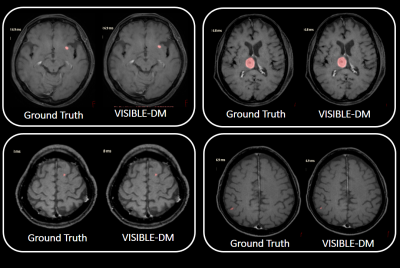

Figure 3 shows representative BM detections by VISIBLE-DM in four cases together with the ground truth. VISIBLE-DM missed four lesions in the test set, all smaller than 0.03 cc; these are shown in Figure 4.

The comparison among the three models is shown in Figure 5. VISIBLE-DM achieved a higher sensitivity (91.7%) than BLACK-DM (89.6%) and BRIGHT-DM (79.2%), and fewer #FPs/case (2.5) than BLACK-DM (3.6) and BRIGHT-DM (4.9). The DICE score was 0.75 for VISIBLE-DM, 0.77 for BLACK-DM and 0.72 for BRIGHT-DM.
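The evaluation metrics can be made concrete with a small sketch. The abstract does not state how predicted and ground-truth lesions were matched, so the rule below (any voxel overlap counts as a detection) is a hypothetical choice, as are the function names; lesions are represented as sets of voxel indices. #FPs/case would then be the total false-positive count divided by the 17 test cases. The sensitivity arithmetic uses the 48 test metastases and the 4 lesions missed by VISIBLE-DM; the BLACK-DM and BRIGHT-DM detection counts (43 and 38) are back-calculated from the reported percentages.

```python
from typing import List, Set, Tuple

# A voxel index (z, y, x); each lesion is a set of voxels.
Voxel = Tuple[int, int, int]


def dice(pred: Set[Voxel], truth: Set[Voxel]) -> float:
    """Dice similarity coefficient: 2|A ∩ B| / (|A| + |B|)."""
    denom = len(pred) + len(truth)
    return 2.0 * len(pred & truth) / denom if denom else 1.0


def lesion_wise_counts(
    pred_lesions: List[Set[Voxel]], true_lesions: List[Set[Voxel]]
) -> Tuple[int, int]:
    """Return (detected true lesions, false-positive predicted components).

    Hypothetical matching rule: a true lesion is detected if any predicted
    component overlaps it by at least one voxel; a predicted component that
    overlaps no true lesion counts as one false positive.
    """
    detected = sum(1 for t in true_lesions if any(p & t for p in pred_lesions))
    false_pos = sum(1 for p in pred_lesions if not any(p & t for t in true_lesions))
    return detected, false_pos


def sensitivity_percent(detected: int, total: int) -> float:
    return 100.0 * detected / total


# 48 test metastases; 44 detected by VISIBLE-DM (4 missed).
# 43 and 38 are back-calculated from the reported percentages.
for name, hits in [("VISIBLE-DM", 44), ("BLACK-DM", 43), ("BRIGHT-DM", 38)]:
    print(f"{name}: {sensitivity_percent(hits, 48):.1f}%")
# prints 91.7%, 89.6% and 79.2% respectively
```

Note that lesion-level sensitivity and voxel-level DICE measure different things, which is why a model can rank first on one and not on the other.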

Lesions were further divided into two groups using a volume threshold of 0.065 cc, which corresponds to a diameter of 5 mm for a spherical lesion. Sixteen lesions were larger than 0.065 cc, and all three models detected all of them (100% sensitivity). In the small-lesion group, sensitivity was 89.2% for VISIBLE-DM, 86.5% for BLACK-DM and 73.0% for BRIGHT-DM. In contrast, BLACK-DM had the highest DICE score both for all lesions and for the small-lesion group.
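The 0.065 cc threshold and its 5 mm equivalent follow from the volume of a sphere, V = πd³/6, so d = (6V/π)^(1/3) with 1 cc = 1000 mm³. The sketch below checks this conversion and applies the same formula to the 0.03 cc bound on the missed lesions. The `split_by_size` helper is hypothetical, and assigning lesions exactly at the threshold to the small group is an assumption, since the abstract only defines the large group as "higher than 0.065 cc".

```python
import math


def equivalent_sphere_diameter_mm(volume_cc: float) -> float:
    """Diameter (mm) of a sphere with the given volume: d = (6V / pi)^(1/3)."""
    volume_mm3 = volume_cc * 1000.0  # 1 cc = 1 cm^3 = 1000 mm^3
    return (6.0 * volume_mm3 / math.pi) ** (1.0 / 3.0)


def split_by_size(volumes_cc, threshold_cc=0.065):
    """Split lesion volumes into (small, large); ties go to 'small' (assumption)."""
    small = [v for v in volumes_cc if v <= threshold_cc]
    large = [v for v in volumes_cc if v > threshold_cc]
    return small, large


print(f"{equivalent_sphere_diameter_mm(0.065):.1f} mm")  # 5.0 mm
print(f"{equivalent_sphere_diameter_mm(0.03):.1f} mm")   # 3.9 mm
```

So the lesions missed by VISIBLE-DM (below 0.03 cc) correspond to spheres under roughly 3.9 mm in diameter.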

Conclusion

Using VISIBLE as the DL model input reduced #FPs/case compared with BLACK-DM and BRIGHT-DM while maintaining high sensitivity, consistent with the results of the reading test by radiologists [7]. VISIBLE-DM also achieved the highest sensitivity of the three models for small lesions. These results suggest that VISIBLE images are advantageous over BLACK-only or BRIGHT-only images for developing 3D-CNN models for automatic brain metastasis detection.

References

[1] O'Beirn M, et al. The expanding role of radiosurgery for brain metastases. Medicines (Basel). 2018;5(3):90.

[2] Bousabarah K, et al. Deep convolutional neural networks for automated segmentation of brain metastases trained on clinical data. Radiat Oncol. 2020;15(1):87.

[3] Grøvik E, et al. Deep learning enables automatic detection and segmentation of brain metastases on multisequence MRI. J Magn Reson Imaging. 2020;51(1):175-182.

[4] Jun Y, et al. Deep-learned 3D black-blood imaging using automatic labelling technique and 3D convolutional neural networks for detecting metastatic brain tumors. Sci Rep. 2018;8(1):9450.

[5] Charron O, et al. Automatic detection and segmentation of brain metastases on multimodal MR images with a deep convolutional neural network. Comput Biol Med. 2018;95:43-54.

[6] Liu Y, et al. A deep convolutional neural network-based automatic delineation strategy for multiple brain metastases stereotactic radiosurgery. PLoS One. 2017;12(10):e0185844.

[7] Kikuchi K, et al. 3D MR sequence capable of simultaneous image acquisitions with and without blood vessel suppression: utility in diagnosing brain metastases. Eur Radiol. 2015;25:901-910.

[8] Kamnitsas K, et al. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med Image Anal. 2017;36:61-78.

Figures

Figure 3: Four representative segmentation cases by VISIBLE-DM. Segmented regions are shown in pink; the ground truth created by the radiologists is shown for comparison.