1174

Single ProjectIon DrivEn Real-time (SPIDER) Multi-contrast MR Imaging Using Pre-learned Spatial Subspace

¹Biomedical Imaging Research Institute, Cedars-Sinai Medical Center, Los Angeles, CA, United States, ²Department of Bioengineering, UCLA, Los Angeles, CA, United States, ³Siemens Medical Solutions USA, Inc., Los Angeles, CA, United States, ⁴Departments of Radiology and Radiation Oncology, University of Southern California, Los Angeles, CA, United States

Synopsis

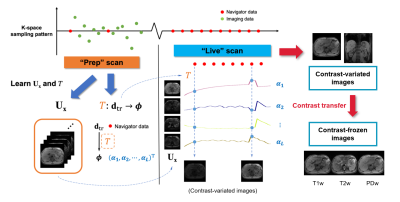

We propose SPIDER, a new technique for real-time multi-contrast 3D imaging. A “Prep” scan is first performed to learn the static information; a “Live” scan is then performed to acquire only a single k-space projection for dynamic information. With the information learned in the “Prep” scan, 3D multi-contrast images can be generated with a simple matrix multiplication, which yields a latency of 50ms or less.

Introduction

MR-guided radiation therapy (MRgRT) has gained growing interest since the introduction of the MR-Linac¹. Real-time imaging based on 2D acquisitions is the current standard method for tracking the moving treatment target and controlling the timing of radiation beams during MRgRT. Because motion commonly occurs in 3D space, real-time volumetric imaging is more desirable for treatment precision. However, this remains challenging due to compromised spatiotemporal resolution and considerable latency.

The recently proposed MR Multitasking technique² can generate real-time 3D MR images with high spatiotemporal resolution and flexible contrast weighting, without the assistance of external gating or triggering³,⁴. All of these characteristics are desirable in an ideal monitoring technique on the MR-Linac. However, these images need to be reconstructed retrospectively rather than on the fly. In this work, a new technique called Single ProjectIon DrivEn Real-time (SPIDER) multi-contrast MR imaging is proposed to fill this gap.

Theory

Framework: The SPIDER technique builds on the MR Multitasking framework (Fig.1). A “Prep” scan is first performed to learn and store static information; a “Live” scan is then performed to dynamically acquire a single repeated k-space projection, which is adequate to generate on-the-fly 3D images, given the spatial information learned in the “Prep” scan.

Image generation using pre-learned spatial subspace⁵:

Our 4D image $$$I(\textbf{x},t)$$$ can be represented as a matrix $$$\bf{A}$$$ whose columns are the 3D images at successive time points, and this matrix can be modeled as low-rank⁶, i.e. $$$\bf{A=U_x\Phi}$$$, where $$$\bf{U_x}$$$ and $$$\bf\Phi$$$ represent spatial basis functions and temporal weighting functions, respectively. At a specific time point $$$t=t_s$$$, the real-time image $$$\textbf{a}_{t_s}$$$ (the $$$t_s$$$th column of $$$\bf{A}$$$) is a linear combination of the spatial basis functions, weighted by a vector $$${\bf{\phi}}_{t_s}$$$. In practice, $$$\bf{A}$$$ as a whole can be reconstructed in a two-step approach, by first recovering $$$\bf\Phi$$$ from “navigator data” $$$\textbf{D}_\text{tr}$$$, and then reconstructing $$$\bf{U_x}$$$ by fitting the known $$$\bf\Phi$$$ to the “imaging data” $$$\textbf{D}_\text{im}$$$. Because $$$\bf\Phi$$$ is often extracted from the right singular vectors of $$$\textbf{D}_\text{tr}$$$, there exists a linear transformation given by $$$\textbf{T}:\textbf{TD}_\text{tr}\rightarrow{\bf\Phi}$$$. Applied column by column, the same transformation maps a single navigator readout $$$\textbf{d}_{\text{tr},t_s}$$$ to its weight vector $$${\bf{\phi}}_{t_s}$$$. Therefore the entire 3D image at $$$t=t_s$$$ can be generated from the navigator data $$$\textbf{d}_{\text{tr},t_s}$$$ with a simple matrix multiplication:

$$\textbf{a}_{t_s}={\bf{U_x}}{\bf{\phi}}_{t_s}={\bf{U_x}}\textbf{T}\textbf{d}_{\text{tr},t_s}\qquad\text{(1)}$$
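As an illustration of Eq.[1], the following is a minimal NumPy sketch on synthetic data, not the authors' implementation. It assumes $$$\bf\Phi$$$ is taken as the leading right singular vectors of $$$\textbf{D}_\text{tr}$$$, in which case one valid choice is $$$\textbf{T}=\textbf{S}_r^{-1}\textbf{U}_r^H$$$ from the truncated SVD $$$\textbf{D}_\text{tr}\approx\textbf{U}_r\textbf{S}_r\textbf{V}_r^H$$$. All sizes, the random stand-in for $$$\bf{U_x}$$$, and the variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy sizes (far smaller than a real 3D volume).
n_vox, n_nav, n_t, rank = 500, 64, 200, 8

def crandn(*shape):
    """Complex Gaussian stand-in for MR data."""
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

# Synthetic rank-limited navigator matrix D_tr (n_nav x n_t), standing in for the "Prep" scan.
D_tr = crandn(n_nav, rank) @ crandn(rank, n_t)

# Temporal basis Phi: leading right singular vectors of D_tr (assumption stated above).
U, s, Vh = np.linalg.svd(D_tr, full_matrices=False)
Phi = Vh[:rank]                                # (rank x n_t)

# With D_tr = U S V^H, Phi = S_r^{-1} U_r^H D_tr, hence T = S_r^{-1} U_r^H.
T = U[:, :rank].conj().T / s[:rank, None]      # (rank x n_nav)

# Random stand-in for the spatial basis U_x (in practice fitted to the imaging data).
U_x = crandn(n_vox, rank)

# Everything learned in "Prep" collapses into one matrix, computed once.
M = U_x @ T                                    # (n_vox x n_nav)

# "Live" step: one navigator readout in, one full volume out (Eq. 1).
t_s = 17
d_tr_ts = D_tr[:, t_s]   # a Prep column reused here only so the result can be checked
a_ts = M @ d_tr_ts       # flattened 3D image at time t_s
```

Precomputing `M` once means the per-frame cost is a single matrix-vector product, which is what makes a low latency plausible in this kind of scheme.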

Contrast regeneration: Towards multi-contrast real-time imaging

By projecting $$$\bf\Phi$$$ onto Bloch-simulation-derived subspaces, real-time images can be displayed with a stable contrast while maintaining the true motion state. First, an auxiliary temporal basis $$${\bf\Phi}_{\text{Bloch}}$$$ is calculated from the SVD of a Bloch-simulated training dictionary. It contains information about contrast change only. Then, the temporal subspace $$$\bf\Phi$$$ that was learned from the “Prep” scan is projected onto $$${\bf\Phi}_{\text{Bloch}}$$$. This gives $$${\bf\Phi}_{\text{cc}}$$$, the “contrast change” component of $$$\bf\Phi$$$:

$${\bf\Phi}_{\text{cc}}={\bf\Phi}{\bf\Phi}_{\text{Bloch}}^{+}{\bf\Phi}_{\text{Bloch}}\qquad\text{(2)}$$
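The projection in Eq.[2] can be sketched in NumPy as follows. This is a toy example under loud assumptions: the "dictionary" is a set of simple exponential recovery curves standing in for a real Bloch simulation, and the three rows of $$$\bf\Phi$$$ are constructed by hand (one pure contrast row, one motion-like oscillation, one mixture). The time axis, T1 range, and subspace size are hypothetical.

```python
import numpy as np

n_t, K = 300, 3
t = np.linspace(0.0, 3.0, n_t)          # hypothetical time axis in seconds

# Stand-in for a Bloch-simulated dictionary: recovery curves over a range of T1 values.
T1 = np.linspace(0.2, 2.5, 50)
dictionary = 1.0 - np.exp(-t[None, :] / T1[:, None])   # (50 x n_t)

# Auxiliary temporal basis Phi_Bloch: leading right singular vectors of the dictionary.
_, _, Vh = np.linalg.svd(dictionary, full_matrices=False)
Phi_Bloch = Vh[:K]                                       # (K x n_t)

# Hand-built toy temporal subspace Phi (rows are temporal functions).
contrast_row = Phi_Bloch[0]                # lies entirely in the contrast subspace
motion_row = np.sin(2 * np.pi * 2.0 * t)   # oscillation mimicking periodic motion
Phi = np.vstack([contrast_row, motion_row, contrast_row + motion_row])

# Eq. 2: project each row of Phi onto the row space of Phi_Bloch.
P = np.linalg.pinv(Phi_Bloch) @ Phi_Bloch  # (n_t x n_t) projector
Phi_cc = Phi @ P                           # "contrast change" component of Phi
```

In this toy case the pure contrast row passes through the projection unchanged, while only the part of the oscillation that happens to overlap the contrast subspace survives.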

Finally, the image contrast at any time $$$t=t_c$$$ can be synthesized in the “Live” scan by replacing $$${\bf\phi}_{t_s}$$$ with $$${\bf{\tilde{\phi}}}_{t_s}(t_c)$$$:

$${\bf{\tilde{\phi}}}_{t_s}(t_c)={\bf\phi}_{t_s}+\Delta{\bf\phi}_{\text{cc},t_s}(t_c)\qquad\text{(3)}$$

where $$$\Delta{\bf\phi}_{\text{cc},t_s}(t_c)={\bf\phi}_{\text{cc},t_c}-{\bf\phi}_{\text{cc},t_s}$$$ denotes the contrast adjustment term, and $$$t_c$$$ refers to the time point with the contrast of interest, e.g., T1w, T2w.

Therefore, Eq.[1] can be adapted as follows for contrast-frozen, motion-maintained real-time imaging:

$$\textbf{a}_{t_s}(t_c)={\bf{U_x}}{\bf{\tilde{\phi}}}_{t_s}(t_c)={\bf{U_x}}(\textbf{T}\textbf{d}_{\text{tr},t_s}+\Delta{\bf\phi}_{\text{cc},t_s}(t_c))\qquad\text{(4)}$$

Hereinafter, images $$$\textbf{a}_{t_s}$$$ generated with Eq.[1] and Eq.[4] are referred to as contrast-variated and contrast-frozen images, respectively.
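Eqs.[3] and [4] amount to adding a precomputed offset to the weight vector before the final multiplication. The sketch below illustrates this with random stand-ins for $$$\bf{U_x}$$$, $$$\textbf{T}$$$ and the navigator data; the function name `contrast_frozen_image` and all sizes are hypothetical, and it assumes the columns of $$${\bf\Phi}_{\text{cc}}$$$ at both $$$t_s$$$ and $$$t_c$$$ are available.

```python
import numpy as np

def contrast_frozen_image(U_x, T, d_tr_ts, Phi_cc, t_s, t_c):
    """Eq. 4: a_ts(t_c) = U_x (T d_tr,ts + phi_cc,tc - phi_cc,ts)."""
    phi_ts = T @ d_tr_ts                          # weights at the live time point (Eq. 1)
    delta_cc = Phi_cc[:, t_c] - Phi_cc[:, t_s]    # contrast adjustment term (Eq. 3)
    return U_x @ (phi_ts + delta_cc)

rng = np.random.default_rng(1)
n_vox, n_nav, n_t, rank = 400, 32, 120, 6         # hypothetical toy sizes

U_x = rng.standard_normal((n_vox, rank))          # stand-in spatial basis
T = rng.standard_normal((rank, n_nav))            # stand-in navigator-to-weights map
D_tr = rng.standard_normal((n_nav, n_t))          # stand-in navigator data
Phi = T @ D_tr                                    # temporal weights implied by T

# Limiting case used as a sanity check: if ALL temporal variation were contrast
# change (Phi_cc == Phi), freezing the contrast at t_c reproduces the image at t_c.
Phi_cc = Phi.copy()
t_s, t_c = 40, 5
frozen = contrast_frozen_image(U_x, T, D_tr[:, t_s], Phi_cc, t_s, t_c)
variated = U_x @ (T @ D_tr[:, t_s])               # contrast-variated image, Eq. 1
```

Choosing `t_c == t_s` makes the adjustment term vanish, so the contrast-frozen image reduces to the contrast-variated one.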

Methods

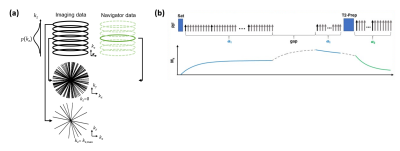

The proposed method was tested with an abdominal T1/T2 MR Multitasking sequence⁷ (Fig.2). Specific imaging parameters were: axial orientation, matrix size=160x160x52, FOV=275x275x240mm³, voxel size=1.7x1.7x6mm³, TR/TE=6.0/3.1ms, FA=5° (following SR-prep) and 10° (following T2-prep), water-excitation for fat suppression. The total imaging time was approximately 8min.

Experiments were performed in four healthy subjects (n=4) on a 3.0T clinical scanner (Biograph mMR, Siemens Healthineers) equipped with an 18-channel phased-array body coil. For each subject, two identical Multitasking scans were performed successively, serving as the “Prep” scan and the “Live” scan, respectively. Data from the “Live” scan were reconstructed both with the proposed SPIDER method (discarding all data but the navigator lines) and with the regular Multitasking algorithm (using its own imaging and navigator data), with the latter serving as a reference for validating the SPIDER images. Real-time images were compared, and delineation of the motion state was evaluated. To probe the conditions under which SPIDER can no longer adequately depict motion, we performed a case study in which a subject was instructed to take a deep breath in the middle of the “Live” scan.

Results

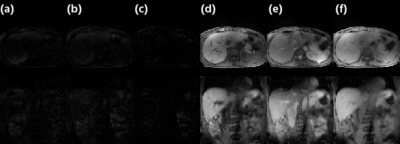

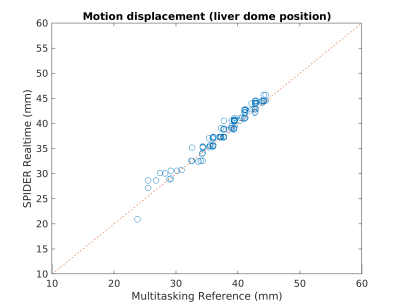

The average elapsed time to perform the computation described in Eq.[4] was ~40ms per entire 3D volume. Since the navigator data (central k-space projection) was acquired in 6ms, the total was approximately 46ms, i.e. a latency of 50ms or less. Fig.3 compares the Multitasking reconstruction (reference) and the proposed SPIDER reconstruction. There was no visually appreciable difference between contrast-variated real-time images generated by SPIDER and by regular Multitasking reconstruction. Contrast-frozen T1w, T2w and PDw images showed the corresponding expected contrasts. The motion displacements of the liver dome measured from reference images and SPIDER real-time images were strongly correlated (R²=0.97; Fig.4). Fig.5 illustrates how abrupt motion caused by a sudden deep breath was detected with SPIDER.

Discussion and conclusion

SPIDER provides a way to generate multi-contrast 3D images with high spatial resolution (1.7mm in-plane), high temporal resolution (up to one single imaging line: 6ms) and low latency (<50ms). In radiation therapy, the “Prep” scan can naturally serve as an on-board pre-treatment planning scan, and the “Live” scan can serve as a viable real-time monitoring approach with tumor-tailored image contrast. As a whole, the SPIDER framework can potentially become a standalone package for MRgRT.

Acknowledgements

This work was supported by NIH R21CA234637, R01EB029088, and R01EB028146.

References

1. Raaymakers BW, Lagendijk JJ, Overweg J, Kok JG, Raaijmakers AJ, Kerkhof EM, et al. Integrating a 1.5 T MRI scanner with a 6 MV accelerator: proof of concept. Phys Med Biol. 2009;54(12):N229-37.

2. Christodoulou AG, Shaw JL, Nguyen C, Yang Q, Xie Y, Wang N, Li D. Magnetic resonance multitasking for motion-resolved quantitative cardiovascular imaging. Nat Biomed Eng. 2018;2(4):215.

3. Wang N, Gaddam S, Wang L, Xie Y, Fan Z, Yang W, et al. Six-dimensional quantitative DCE MR Multitasking of the entire abdomen: Method and application to pancreatic ductal adenocarcinoma. Magn Reson Med. 2020;84(2):928-48.

4. Shaw JL, Yang Q, Zhou Z, Deng Z, Nguyen C, Li D, Christodoulou AG. Free-breathing, non-ECG, continuous myocardial T1 mapping with cardiovascular magnetic resonance multitasking. Magn Reson Med. 2019;81(4):2450-63.

5. Han P, Han F, Li D, Christodoulou AG, Fan Z. On-the-fly 3D images generation from a single k-space readout using a pre-learned spatial subspace: Towards MR-guided therapy and intervention. In Proceedings of the 28th Annual Meeting of ISMRM 2020 (p. 4129).

6. Liang ZP. Spatiotemporal imaging with partially separable functions. In 2007 4th IEEE International Symposium on Biomedical Imaging: From Nano to Macro 2007 Apr 12 (pp. 988-991). IEEE.

7. Deng Z, Christodoulou AG, Wang N, Yang W, Wang L, Lao Y, Han F, Bi X, Sun B, Pandol S, Tuli R, Li D, Fan Z. Free-breathing Volumetric Body Imaging for Combined Qualitative and Quantitative Tissue Assessment using MR Multitasking. In Proceedings of the 27th Annual Meeting of ISMRM 2019 (p. 0698).

Figures