0813

CycleSeg: MR-to-CT Synthesis and Segmentation Network for Prostate Radiotherapy Treatment Planning

1Magnetic Resonance Imaging Laboratory, Department of Bio and Brain Engineering, Korea Advanced Institute of Science and Technology, Daejeon, Korea, Republic of
2Department of Radiation Oncology, Samsung Medical Center, Seoul, Korea, Republic of

Synopsis

MR-only radiotherapy planning can reduce the radiation exposure caused by repeated CT scanning. Most research focuses on generating synthetic CT images from MR images, but not on contouring the organs of interest in those images, which requires manual labor and expertise. In this study, we propose CycleSeg, a CycleGAN-based network that accomplishes both tasks to streamline the process and reduce human effort. Experiments showed that CycleSeg can generate realistic synthetic CT images along with accurate organ segmentation in the pelvis of patients with prostate cancer.

Introduction

Prostate cancer is one of the most commonly diagnosed cancers; however, with early screening and timely treatment, the survival rate of patients has improved significantly. Radiotherapy treatment planning is conventionally performed with CT scanning, but repeated CT scans increase radiation exposure. This has motivated many studies on MR-only radiotherapy planning, which attempts to derive the electron density information of CT from MR images. Recent studies1,2 have shown that deep learning can generate synthetic CT (sCT) images whose dosimetry is comparable to that of real CT acquisitions. Contouring, or delineation, of the prostate and surrounding tissues to inspect the radiation dose distribution is also an important step, and it demands considerable time and manual labor. In this study, we propose CycleSeg, a network that performs sCT generation and organ segmentation from an input T2-weighted MR image to streamline the treatment process.

Method

Data acquisition and processing:

We used 115 sets of simulation CT scans of patients with prostate cancer who underwent radiotherapy, with or without surgery, between January 2016 and July 2018 at our medical center. The simulation CT scans were acquired with a GE Healthcare Discovery CT590 RT. The field of view (FOV) was 50 cm, the slice thickness between consecutive axial CT images was 2.5 mm, and the matrix size and in-plane resolution were 512×512 and 0.98×0.98 mm². Contours of the organs of interest (prostate, left and right femoral heads, bladder, rectum, and penile bulb) were manually annotated on the CT data.

MRI scans were conducted immediately after the simulation CT scans with a Philips 3.0 T Ingenia MR. T2-weighted MR images were obtained with FOV = 400×400×200 mm³, matrix size = 960×960, TR/TE = 6152 ms/100 ms.

The data were manually inspected to remove cases with unusual artifacts, resulting in 96 pairs of MRI/CT volumes. To prepare the data for training the networks, we first resampled both the MRI and CT volumes to a common 1.3×1.3×2.5 mm³ spacing and registered each MRI volume to its CT volume with an affine transformation in SimpleITK3-5. We cropped the central 384×384 region in the axial plane to remove the background. For intensity normalization, we clipped the Hounsfield units (HU) of the CT data to the [-200, 1300] range, which was then linearly mapped to [-1, 1]. For the MRI data, we normalized the intensity by subtracting the mean and dividing by 2.5 times the standard deviation of the voxels inside the body, and then clipped the normalized values to the [-1, 1] range.
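The cropping and intensity normalization steps described above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' code: the function names, the (slice, row, column) axis order, and the `body_mask` argument are assumptions, since the text does not say how the body region was identified. Resampling and registration are omitted.

```python
import numpy as np

CT_HU_MIN, CT_HU_MAX = -200.0, 1300.0  # clipping window stated in the text


def center_crop(volume, size=384):
    """Crop the central size x size region of each axial slice (last two axes)."""
    h, w = volume.shape[-2:]
    top, left = (h - size) // 2, (w - size) // 2
    return volume[..., top:top + size, left:left + size]


def normalize_ct(hu):
    """Clip HU to [-200, 1300] and map the result linearly to [-1, 1]."""
    clipped = np.clip(hu, CT_HU_MIN, CT_HU_MAX)
    return 2.0 * (clipped - CT_HU_MIN) / (CT_HU_MAX - CT_HU_MIN) - 1.0


def normalize_mri(mri, body_mask, scale=2.5):
    """Subtract the in-body mean, divide by scale * in-body std, clip to [-1, 1].

    body_mask is a boolean array (hypothetical input) marking voxels inside the body.
    """
    voxels = mri[body_mask]
    normalized = (mri - voxels.mean()) / (scale * voxels.std())
    return np.clip(normalized, -1.0, 1.0)
```

With this mapping, 550 HU (the midpoint of the clipping window) maps to 0, and anything at or below -200 HU, including air, maps to -1.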

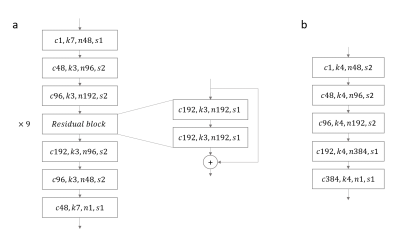

Network architecture and training:

CycleSeg consists of a CycleGAN6 and two U-Nets7, whose structures are shown in Figures 1 and 2. Similar to Zhang et al.8, CycleSeg enforces shape consistency to improve the segmentation and synthesis results. Since only the CT segmentation labels are available, we propose to use pseudo-labels created from the MRI images by the UNet-MRI to train the UNet-CT on sCT images. The following loss is minimized during the training process:

$$L(x_{MRI},x_{CT}) = L_{LSGAN} + \lambda_{cycle} L_{cycle} + \lambda_{idt} L_{idt} + \lambda_{ref} L_{ref} + \lambda_{seg} L_{seg} \qquad (1)$$

$$$L_{LSGAN}$$$: least-squares GAN loss9

$$$L_{cycle}$$$: cycle-consistency loss between the reconstructed images ($$$x'_{MRI}, x'_{CT}$$$) and the original images ($$$x_{MRI}, x_{CT}$$$)

$$$L_{idt}$$$: identity loss, which penalizes a generator for altering an image that already belongs to its target domain (e.g., $$$G_{MRI}$$$ should not modify $$$x_{CT}$$$)

$$$L_{ref}$$$: pixel-wise loss between anatomically close pairs of synthetic CT/MRI and real CT/MRI

$$$L_{seg}$$$: cross-entropy loss with the real CT label and the pseudo-label from $$$U_{MRI}(x_{MRI})$$$

$$$\lambda_{cycle},\lambda_{idt},\lambda_{ref},\lambda_{seg}$$$: hyperparameters that control the contribution of each loss term. These are set to 10, 5, 10, and 1, respectively.
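As a rough illustration of how the terms of Eq. (1) combine, the sketch below evaluates each term on NumPy arrays and forms the weighted sum with the reported weights. Several details are assumptions rather than statements from the text: the use of an L1 distance for the cycle, identity, and reference terms, the hard (argmax) form of the pseudo-label, and all function names.

```python
import numpy as np

# Weights reported in the text for Eq. (1).
LAMBDAS = {"cycle": 10.0, "idt": 5.0, "ref": 10.0, "seg": 1.0}


def lsgan_loss(disc_output, is_real):
    """Least-squares GAN loss: mean squared distance to the target (1 real, 0 fake)."""
    target = 1.0 if is_real else 0.0
    return float(np.mean((disc_output - target) ** 2))


def l1_loss(a, b):
    """Mean absolute difference (assumed form of the cycle, identity and reference terms)."""
    return float(np.mean(np.abs(a - b)))


def cross_entropy(logits, labels):
    """Mean cross-entropy for logits of shape (C, H, W) and integer labels of shape (H, W)."""
    shifted = logits - logits.max(axis=0, keepdims=True)  # for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))
    picked = np.take_along_axis(log_probs, labels[None], axis=0)
    return float(-picked.mean())


def pseudo_label(mri_logits):
    """Hard pseudo-label (assumption): per-pixel argmax of the MRI U-Net output."""
    return mri_logits.argmax(axis=0)


def total_loss(terms, lambdas=LAMBDAS):
    """Eq. (1): the LSGAN term plus the weighted cycle, identity, reference and seg terms."""
    return terms["lsgan"] + sum(lambdas[k] * terms[k] for k in lambdas)
```

Under this reading, the segmentation term for a synthetic CT slice would be `cross_entropy(ct_unet_logits, pseudo_label(mri_unet_logits))`, where both logit arrays are hypothetical network outputs for the same MRI slice and its sCT.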

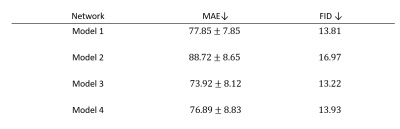

Four models were trained with different losses to verify the effectiveness of the approach.

Model 1: CycleGAN with Eq. (1) loss (proposed "CycleSeg")

Model 2: CycleGAN, trained without $$$L_{ref}$$$ and $$$L_{seg}$$$

Model 3: CycleGAN with $$$L_{ref}$$$

Model 4: CycleGAN with $$$L_{ref}$$$ and segmentation reference loss $$$L_{ref, seg}$$$ (using CT segmentation label of CT slice anatomically close to MRI slice as sCT segmentation label).
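The four configurations can be summarized as a small lookup of active loss terms. This is only one reading of the list above: it assumes that every model keeps the standard CycleGAN terms (LSGAN, cycle, identity), and the key names are hypothetical. The weight of $$$L_{ref, seg}$$$ is not given in the text, so no weight is assigned to it here.

```python
# Standard CycleGAN terms, assumed to be shared by all four models.
BASE_TERMS = ("lsgan", "cycle", "idt")

# Loss terms named in the text for each model.
MODEL_LOSS_TERMS = {
    "model_1": BASE_TERMS + ("ref", "seg"),      # proposed CycleSeg, Eq. (1)
    "model_2": BASE_TERMS,                       # trained without L_ref and L_seg
    "model_3": BASE_TERMS + ("ref",),            # adds the reference loss
    "model_4": BASE_TERMS + ("ref", "ref_seg"),  # adds the segmentation reference loss
}


def extra_terms(model):
    """Return the terms a model adds on top of the shared CycleGAN terms."""
    return tuple(t for t in MODEL_LOSS_TERMS[model] if t not in BASE_TERMS)


for name in sorted(MODEL_LOSS_TERMS):
    print(name, extra_terms(name) or "(none)")
```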

We calculated the mean absolute error (MAE) in HU between closely matched slices of real and synthetic CT. We also used the Fréchet inception distance (FID)10, as it is more indicative of visual quality.
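Both metrics can be sketched in NumPy. The code below is illustrative only. It assumes the images are stored in the normalized [-1, 1] range and converted back to HU before the MAE is taken, and that the optional `mask` restricts the average to a region such as the body (the text does not say whether a mask was used). The Fréchet distance function takes feature vectors as input; in the FID these come from an Inception network, which is not reproduced here.

```python
import numpy as np


def to_hu(normalized, hu_min=-200.0, hu_max=1300.0):
    """Invert the linear [-1, 1] mapping back to Hounsfield units."""
    return (normalized + 1.0) / 2.0 * (hu_max - hu_min) + hu_min


def mae_hu(real_ct, synthetic_ct, mask=None):
    """Mean absolute error in HU, optionally restricted to a boolean mask."""
    diff = np.abs(to_hu(real_ct) - to_hu(synthetic_ct))
    return float(diff[mask].mean() if mask is not None else diff.mean())


def frechet_distance(feats_real, feats_fake):
    """Frechet distance between Gaussians fitted to two (N, D) feature sets."""
    mu_r, mu_f = feats_real.mean(axis=0), feats_fake.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_f = np.cov(feats_fake, rowvar=False)
    # Tr((cov_r cov_f)^(1/2)) is the sum of the square roots of the
    # eigenvalues of the product, which are real and non-negative in theory.
    eigvals = np.linalg.eigvals(cov_r @ cov_f)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    mean_term = np.sum((mu_r - mu_f) ** 2)
    return float(mean_term + np.trace(cov_r) + np.trace(cov_f) - 2.0 * trace_sqrt)
```

Because the CT values were clipped to [-200, 1300] HU before normalization, an MAE computed this way cannot reflect errors outside that window.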

Results

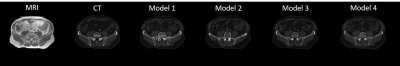

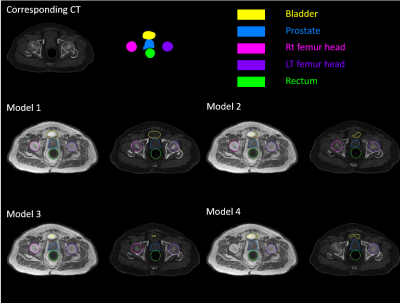

Table 1 shows the quantitative results of the four networks on the test set. Adding the reference loss improves the MAE and FID, whereas including the segmentation network does not appear to improve the synthesis result. Figure 3 shows a qualitative comparison of a representative sCT from the four networks. The reference loss improves the appearance of the bones, while the segmentation loss does not seem to provide any visible benefit. Figure 4 shows a qualitative comparison of segmentation performance on a representative slice and illustrates the effectiveness of Model 1, which uses Eq. (1) as its loss function. Model 1 produced good segmentation results for all organs when compared with the segmentation of the matched CT slice, whereas the other three models failed to segment the bladder. Notably, Model 4, which was trained with the segmentation reference loss, appeared to perform worse than Model 1 with its pseudo-label loss, possibly because of registration mismatch in Model 4's reference labels.

Conclusion

By adding reference and segmentation losses, CycleSeg (Model 1) provides both high-quality sCT synthesis and organ segmentation (contouring) in the prostate area, which can benefit MR-only prostate cancer radiotherapy planning.

Acknowledgements

No acknowledgement found.

References

1. Liu Y, Lei Y, Wang Y, Shafai-Erfani G et al. “Evaluation of a deep learning-based pelvic synthetic CT generation technique for MRI-based prostate proton treatment planning”. Phys. Med. Biol. 64 205022, 2019

2. Liu Y, Lei Y, Wang T, Kayode O et al. “MRI-based treatment planning for liver stereotactic body radiotherapy: validation of a deep learning-based synthetic CT generation method”. Br J Radiol. 2019 Aug;92(1100):20190067

3. R. Beare, B. C. Lowekamp, Z. Yaniv, "Image Segmentation, Registration and Characterization in R with SimpleITK", J Stat Softw, 86(8), 2018.

4. Z. Yaniv, B. C. Lowekamp, H. J. Johnson, R. Beare, "SimpleITK Image-Analysis Notebooks: a Collaborative Environment for Education and Reproducible Research", J Digit Imaging., 31(3): 290-303, 2018.

5. B. C. Lowekamp, D. T. Chen, L. Ibáñez, D. Blezek, "The Design of SimpleITK", Front. Neuroinform., 7:45, 2013.

6. Zhu J-Y, Park TS, Isola P, Efros A. "Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks", in IEEE International Conference on Computer Vision (ICCV), 2017.

7. Ronneberger O, Fischer P, Brox T. “U-Net: Convolutional Networks for Biomedical Image Segmentation”, in Medical Image Computing and Computer-Assisted Intervention (MICCAI), Cham, Springer International Publishing, 2015.

8. Zhang Z, Yang L, Zheng Y. "Translating and Segmenting Multimodal Medical Volumes with Cycle- and Shape-Consistency Generative Adversarial Network." In Conference on Computer Vision and Pattern Recognition (CVPR), 2018

9. Mao X, Li Q, Xie H, Lau R, Wang Z, Smolley S-P. “Least Squares Generative Adversarial Networks”. In IEEE International Conference on Computer Vision (ICCV), 2017

10. Heusel M, Ramsauer H, Unterthiner T, Nessler B et al. “GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium”, in Advances in Neural Information Processing Systems (NIPS), 2017

Figures