0811

Data augmentation using features from activation maps improved performance for deep learning based automated knee prescription

¹GE Healthcare, Bangalore, India, ²GE Global Research, Niskayuna, NY, United States

Synopsis

Data augmentation techniques are routinely used in computer vision to simulate variations in input data and avoid overfitting. Here we propose a novel method to generate simulated images using features derived from the activation maps of a deep neural network, which could mimic image variations due to MRI acquisition and hardware. Gradient-weighted Class Activation Mapping (Grad-CAM) was used to identify regions important to the classification output and to generate images with these regions obfuscated, mimicking adversarial scenarios relevant to imaging variations. Training with images from the proposed data augmentation framework improved the accuracy and robustness of knee MRI image classification.

Introduction

While it is generally accepted that larger datasets improve the performance of deep learning (DL) models, it is especially difficult to build large medical image datasets because of the rarity of diseases and the variations in image data caused by differences in MRI acquisition and hardware. One way to search the space of possible augmentations is adversarial training, which builds on adversarial attacks: injecting minimal noise or small perturbations into the input so that the model misclassifies it[1]. Adversarial training has been shown to be an effective technique for data augmentation and has improved performance at weak spots in the learned decision boundary[2]. Activation mapping techniques can be used to visualize the pertinent features emphasized by the trained DL network[3]. Gradient-weighted Class Activation Mapping (Grad-CAM) generates heatmaps that explain how relevant individual pixels or regions of the input image are to the decision. Here we propose a novel data augmentation framework, based on features derived from the convolutional neural network (CNN), to simulate adversarial-like examples that could mimic variations in MR images due to reconstruction methods, coil sensitivity patterns and contrast changes due to tissue variations, and ultimately improve model performance.

Methods

Knee MRI data were acquired from multiple sites. A total of 513 knee exams from volunteers as well as patients were included in the study. All studies were approved by the respective IRBs. Localizer data were acquired on multiple MRI scanners (GE 3T Discovery MR 750w, GE Signa HDxt 1.5T, GE 1.5T Optima 450w, GE Signa Premier 3.0T, GE Signa Architect 3.0T and GE Signa Pioneer 3.0T) and with different coil configurations (16/18-channel TR Knee coil, 16ch TDI AA coil, GEM Flex coil, 30 channel Air AA coil, 20 and 21 channel Multipurpose Air coil etc.). A trained radiologist annotated the axial, sagittal and coronal knee images as relevant or irrelevant. We trained a deep learning network to classify anatomically relevant slices of knee MRI images for automated scan plane prescription[4,5]. A CNN based on the LeNet architecture, trained with cross-entropy loss, was adopted to classify MRI images into relevant and irrelevant categories (Model A). Grad-CAM maps were used to identify the image regions important for predicting the classification output. For data augmentation, masks generated from inverted, weighted (reduced intensity) Grad-CAM and Grad-CAM++ maps were used to obfuscate, or generate intensity variations in, the regions of interest important to the classification. To demonstrate the effectiveness of activation map based augmentations, we considered two experimental setups: (1) Model A: model trained on the pool of normal training data; (2) Model B: model trained on the pool of normal and activation map based augmented data. The test data consisted of three cohorts: (1) normal data without any artifacts, (2) data with metal implant artifacts and (3) data with activation map based corruptions derived from Model A activation maps.

Results
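As a rough illustration of the augmentation step described in the Methods, the sketch below computes a Grad-CAM style heat map from one layer's feature maps and gradients, then attenuates the image where that map is high. It is a minimal sketch and not the authors' implementation: the function names, the `strength` weighting, the mask form `1 - strength * cam` and the nearest-neighbour resize are all assumptions made for illustration, and the activations and gradients are assumed to have been exported from the trained network beforehand.

```python
import numpy as np


def grad_cam_map(activations, gradients):
    """Grad-CAM style heat map for one convolutional layer.

    activations, gradients: shape (K, h, w), the K feature maps of the
    chosen layer and the gradient of the class score with respect to them
    (assumed to be exported from the trained network).
    """
    activations = np.asarray(activations, dtype=float)
    gradients = np.asarray(gradients, dtype=float)
    weights = gradients.mean(axis=(1, 2))             # one weight per channel
    cam = np.tensordot(weights, activations, axes=1)  # weighted sum, shape (h, w)
    cam = np.maximum(cam, 0.0)                        # ReLU keeps positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam            # scale to [0, 1]


def resize_nearest(cam, shape):
    """Nearest-neighbour resize of a 2-D map (a simplification chosen here)."""
    rows = np.arange(shape[0]) * cam.shape[0] // shape[0]
    cols = np.arange(shape[1]) * cam.shape[1] // shape[1]
    return cam[np.ix_(rows, cols)]


def augment_with_inverted_cam(image, cam, strength=0.7):
    """Reduce image intensity where the heat map is high.

    strength is a hypothetical weighting in [0, 1]: 1.0 fully suppresses
    the most salient pixel, 0.0 returns the image unchanged.
    """
    image = np.asarray(image, dtype=float)
    cam = np.asarray(cam, dtype=float)
    mask = 1.0 - strength * resize_nearest(cam, image.shape)  # inverted, weighted map
    return image * mask


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.uniform(0.2, 1.0, size=(8, 8))         # stand-in for an MRI slice
    activations = rng.uniform(0.0, 1.0, size=(4, 4, 4))
    gradients = rng.normal(size=(4, 4, 4))
    cam = grad_cam_map(activations, gradients)
    augmented = augment_with_inverted_cam(image, cam, strength=0.7)
    print(augmented.shape)
```

In a setup like the one described, the augmented images would be added to the normal training pool when training Model B. A smooth interpolation of the heat map would likely be preferable to the nearest-neighbour resize used here for brevity.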

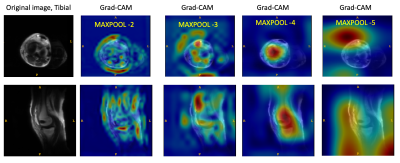

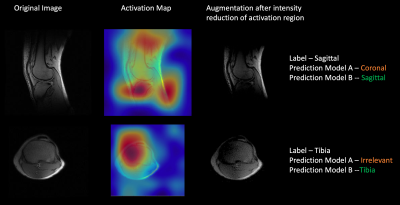

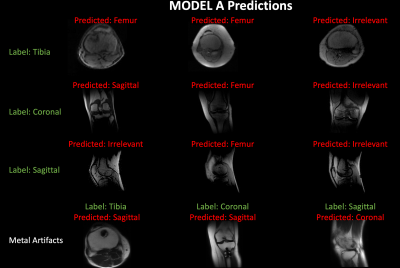

Figure 1 shows examples of Grad-CAM activation (heat) maps at multiple max-pool layers of the DL network, indicating that the visualized features from layer 4 were most relevant to the classification. Regions pertinent to classification, as highlighted in the activation maps, were degraded to generate the augmented images. Examples of augmentation using inverted Grad-CAM activation maps are shown in Figure 2. Testing the augmented images with Model A resulted in misclassification (Figure 2), indicating weak spots in the classification performance of the trained deep learning network. Retraining with activation map based augmentations (Model B) improved classification accuracy for test cohort 1 from 83.1% with Model A to 86.6% with Model B. Models trained on normal images only (Model A) show a drop in performance in the presence of imaging artifacts due to metal implants. The proposed augmentation method improved classification accuracy in test cohort 2 from 70.6% with Model A to 80.3% with Model B. For test cohort 3, accuracy improved from 67.5% with Model A to 85.5% with Model B. Figure 3 shows examples of images that were misclassified by the model trained without the proposed augmentation scheme and were subsequently classified correctly by the model trained with activation map based augmentations (Model B).

Conclusion and Discussion

The performance of deep learning models can be easily altered by adding relatively small perturbations to the input vector. In MRI images, such perturbations can be caused by slight changes to acquisition parameters or by changes in hardware, including magnetic field strength, coil etc. Here we used activation maps, which indicate the regions important to the performance of the deep learning network, to generate augmentations that guard against these perturbations and improve model robustness. Prediction on the generated images resulted in incorrect classification, demonstrating model behavior in adversarial scenarios. Training with images from the proposed data augmentation framework improved the classification accuracy for knee MRI images and enhanced robustness against image changes due to metal implants and coil intensity variations, which are common in clinical practice.

Acknowledgements

No acknowledgement found.

References

1. Su, J., Vargas, D. V. & Sakurai, K. One pixel attack for fooling deep neural networks. IEEE Trans. Evol. Comput. 23, 828–841 (2019).

2. Goodfellow, I. J., Shlens, J. & Szegedy, C. Explaining and harnessing adversarial examples. arXiv:1412.6572 [cs, stat] (2015).

3. Selvaraju, R. R. et al. Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization. Int. J. Comput. Vis. 128, 336–359 (2020).

4. Shanbhag, D. et al. A generalized deep learning framework for multi-landmark intelligent slice placement using standard tri-planar 2D localizers. in Proceedings of ISMRM (2019).

5. Bhushan, C. et al. Consistency in human and machine-learning based scan-planes for clinical knee MRI planning. in Proceedings of ISMRM (2020).

Figures