0810

Generative Adversarial Network for T2-Weighted Fat Saturation MR Image Synthesis Using Bloch Equation-based Autoencoder Regularization

1Electrical and Electronic Engineering, Yonsei University, Seoul, Korea, Republic of, 2Yonsei University, Seoul, Korea, Republic of, 3Gangnam Severance Hospital, Seoul, Korea, Republic of, 4Department of Radiology, University of California-San Diego, San Diego, CA, United States, 5Department of Radiology, VA San Diego Healthcare System, San Diego, CA, United States, 6Yonsei University College of Medicine, Seoul, Korea, Republic of

Synopsis

We propose a Bloch equation-based autoencoder regularization Generative Adversarial Network (BlochGAN) that generates T2-weighted fat saturation (T2 FS) images from T1-weighted (T1-w) and T2-weighted (T2-w) images for spine diagnosis. Our method can reduce the cost of acquiring multi-contrast images by reducing the number of contrasts that must be scanned. BlochGAN generates the target contrast images using a GAN trained with autoencoder regularization based on the Bloch equation, the basic principle of MR physics that describes the physical basis of each contrast. Our results demonstrate that BlochGAN achieves quantitatively and qualitatively superior performance compared with conventional methods.

Purpose

Magnetic resonance imaging (MRI) provides a variety of tissue contrasts, each of which conveys different information about the subject. T1-weighted spin echo (T1-w) and T2-weighted spin echo (T2-w) images are routinely acquired to investigate spinal infection [1]. Fat-saturated (FS) imaging is generally used to evaluate bone edema and ligament inflammation [1]. Acquiring FS images in addition to T1-w and T2-w images therefore helps to make an accurate diagnosis. However, because MRI scan times are long, acquiring FS images in addition to T1-w and T2-w images can place a burden on patients. MR image synthesis offers a low-cost way to overcome this problem. In this study, we propose a Bloch equation-based autoencoder regularization GAN (BlochGAN), which generates T2 FS images from T1-w and T2-w images without additional scanning.

Method

We propose BlochGAN, which generates spine T2 FS images from multi-contrast (T1-w and T2-w) spine MR images. Based on the Bloch equation, BlochGAN can efficiently learn the relationships between the MR images of different contrasts provided during training and can properly generate target contrast images. To our knowledge, no previous study has applied the Bloch equation to medical image synthesis with generative adversarial network (GAN) or convolutional neural network (CNN) models.

BlochGAN is composed of four sub-networks: an encoder, a decoder, a generator, and a discriminator. The encoder extracts important features from the input images for the generator and the decoder. The generator creates a target contrast image from the features extracted by the encoder. The discriminator determines whether a target image is real or generated. The decoder generates MR parameter maps from the encoder features and restores the input images from these maps using the Bloch equation functions, in the manner of an autoencoder. Because the relationships between the input and target contrast images are determined by the MR parameter maps and the Bloch equations of each sequence, the decoder helps the encoder during training to learn features that are useful for generating the target contrast images. The detailed architecture of BlochGAN is shown in Fig. 1.

Our experiments used 4-fold cross-validation and were conducted with the approval of the institutional review board. We used two datasets, dataset 1 and dataset 2. Dataset 1 was obtained from a total of 240 subjects, 40 subjects for each age group from their 20s to their 70s. It was acquired from 2015 to 2016, regardless of disease. Dataset 2 was obtained from a total of 70 subjects suspected of bone metastasis or red marrow hyperplasia. It was acquired from 2012 to 2019, regardless of age and gender. Both datasets were acquired on clinical 1.5 T MRI scanners at the same hospital.
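As a rough illustration of the Bloch-equation step in the decoder described above, the sketch below uses the standard steady-state spin-echo signal model, S = PD · (1 − exp(−TR/T1)) · exp(−TE/T2), to re-synthesize a contrast from parameter values and to score the reconstruction. This is not the authors' implementation: the abstract does not state the exact signal equations or loss used, so the function names, the mean-absolute-error term, the tissue values, and the sequence timings are all assumptions chosen for illustration. A real network would apply the same formula voxel-wise to whole parameter maps.

```python
import math


def spin_echo_signal(pd, t1, t2, tr, te):
    """Standard spin-echo signal for one voxel (times in ms)."""
    return pd * (1.0 - math.exp(-tr / t1)) * math.exp(-te / t2)


def mean_abs_error(predicted, measured):
    """Assumed reconstruction term: mean absolute difference per voxel."""
    return sum(abs(p - m) for p, m in zip(predicted, measured)) / len(predicted)


# Hypothetical tissue parameters (ms), illustrative only.
tissues = {
    "fat": {"pd": 1.0, "t1": 260.0, "t2": 80.0},
    "csf": {"pd": 1.0, "t1": 4000.0, "t2": 2000.0},
}

# Hypothetical sequence timings (ms) for T1-w and T2-w spin echo.
T1W = {"tr": 500.0, "te": 10.0}
T2W = {"tr": 3000.0, "te": 100.0}

# Signal of each tissue under each contrast.
signals = {
    name: {
        "t1w": spin_echo_signal(tr=T1W["tr"], te=T1W["te"], **p),
        "t2w": spin_echo_signal(tr=T2W["tr"], te=T2W["te"], **p),
    }
    for name, p in tissues.items()
}

# "Acquired" T2-w values, simulated here from the true parameters.
acquired_t2w = [signals[name]["t2w"] for name in tissues]

# Re-synthesis from parameter estimates whose T2 is deliberately halved.
wrong_t2w = [
    spin_echo_signal(p["pd"], p["t1"], 0.5 * p["t2"], T2W["tr"], T2W["te"])
    for p in tissues.values()
]

loss_exact = mean_abs_error(acquired_t2w, acquired_t2w)  # exact maps
loss_wrong = mean_abs_error(wrong_t2w, acquired_t2w)     # biased maps
```

With these illustrative values, fat is brighter than CSF under the T1-w timings and CSF is brighter than fat under the T2-w timings, and the reconstruction error is zero only when the parameter estimates reproduce the acquired signal. This is the sense in which a physics-based reconstruction term can constrain the features that the encoder learns.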
Two CNN-based methods, Multimodal Imaging [2] and CC-DNN [3], and two GAN-based methods, Pix2pix [5] and pGAN [4], were compared with BlochGAN.

Result and Discussion

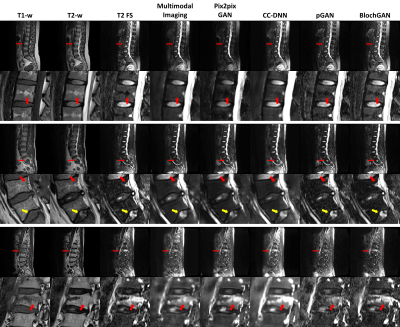

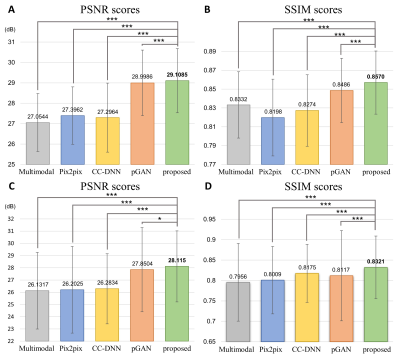

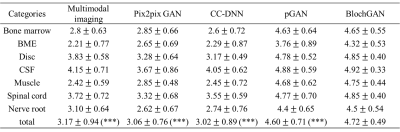
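For reference, the peak signal-to-noise ratio (PSNR) used in the quantitative evaluation in this section can be computed as in the minimal sketch below. The function name, the flat-list image representation, and the `data_range` parameter are assumptions made for illustration; the abstract does not describe the authors' exact normalization or evaluation code.

```python
import math


def psnr(reference, synthetic, data_range=1.0):
    """PSNR in dB between two equally sized images given as flat sequences.

    data_range is the assumed maximum possible intensity span (1.0 for
    images normalized to [0, 1]).
    """
    if len(reference) != len(synthetic):
        raise ValueError("images must have the same number of pixels")
    mse = sum((r - s) ** 2 for r, s in zip(reference, synthetic)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(data_range ** 2 / mse)


# Toy example: a uniform error of 0.1 on a [0, 1] intensity scale.
reference_image = [0.0, 0.0, 0.0, 0.0]
synthetic_image = [0.1, 0.1, 0.1, 0.1]
score = psnr(reference_image, synthetic_image)
```

A higher PSNR means the synthetic image is closer to the reference in the mean-squared-error sense. SSIM, the second reported metric, additionally compares local luminance, contrast, and structure.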

Fig. 2 compares the synthetic T2 FS images generated by the different MR image synthesis methods for dataset 1. The second, fourth, and sixth rows of Fig. 2 are enlarged views of the areas indicated by the red arrows in the first, third, and fifth rows, respectively; the red arrows in the second, fourth, and sixth rows indicate a degenerated area, a blood vessel, and bone marrow edema, respectively. The yellow arrow indicates the nucleus pulposus of the intervertebral disc. The T2 FS images generated by BlochGAN are more similar to the reference images in contrast and detailed structure than the images generated by the conventional methods.

Fig. 3 shows the quantitative evaluation results of the compared MR image synthesis methods. A and B are for dataset 1, and C and D are for dataset 2. A and C show peak signal-to-noise ratio (PSNR) scores, and B and D show structural similarity (SSIM) scores. BlochGAN achieved statistically significantly higher PSNR and SSIM scores than the conventional methods.

Fig. 4 shows the expert evaluation results. The expert evaluation was conducted by two musculoskeletal radiologists on 30 subjects (15 randomly chosen from each dataset), and their interobserver agreement was excellent (intraclass correlation coefficient, 0.7912). BlochGAN achieved the highest scores and the lowest standard deviation among the compared methods in all categories, and the differences between BlochGAN and all the other methods were significant. In particular, BlochGAN showed the highest degree of agreement for bone marrow edema, whose depiction is the main clinical purpose of acquiring fat-suppressed T2-weighted images. BlochGAN was the only method among those compared that scored 4 points or more in all categories.

Conclusion

We demonstrated the feasibility of our proposed network, BlochGAN, which generates T2 FS spine MR images from T1-w and T2-w images without additional scanning. To our knowledge, this is the first attempt to train a medical image synthesis network using the Bloch equation. Our network achieved quantitatively and qualitatively higher performance than the conventional image synthesis methods. Our method can potentially be used to shorten the scanning time required to acquire multi-contrast MR images.

Acknowledgements

Dosik Hwang and Sungjun Kim are co-corresponding authors.

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science and ICT (2019R1A2B5B01070488), the Bio & Medical Technology Development Program of the National Research Foundation (NRF) funded by the Ministry of Science and ICT (2018M3A9H6081483), and the Korea Medical Device Development Fund grant funded by the Korea government (the Ministry of Science and ICT, the Ministry of Trade, Industry and Energy, the Ministry of Health & Welfare, the Ministry of Food and Drug Safety) (Project Number: 202011C04-03). Dr. Bae received grant support (Grant Number R01 AR066622) from the National Institute of Arthritis and Musculoskeletal and Skin Diseases of the National Institutes of Health.

References

1. Helms, C. A. et al. Musculoskeletal MRI E-Book. (Elsevier Health Sciences, 2008)

2. Chartsias, A. et al. Multimodal MR synthesis via modality-invariant latent representation. IEEE Transactions on Medical Imaging, 37, 803-814 (2017)

3. Kim, S. et al. Deep-learned short tau inversion recovery imaging using multi-contrast MR images. Magnetic Resonance in Medicine (2020)

4. Dar, S. U. H. et al. Image synthesis in multi-contrast MRI with conditional generative adversarial networks. IEEE Transactions on Medical Imaging, 38, 2375-2388 (2019)

5. Isola, P. et al. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1125-1134 (2017)

Figures

Expert evaluations of synthetic T2 fat saturation images generated by Multimodal Imaging, Pix2pix GAN, CC-DNN, pGAN, and our proposed Bloch equation-based autoencoder regularization generative adversarial network (BlochGAN) for 30 subjects randomly selected from datasets 1 and 2. (Abbreviations: BME, bone marrow edema; CSF, cerebrospinal fluid. Notes: p-values between the proposed method and other methods were calculated by Wilcoxon signed rank tests. *, p<0.05; **, p<0.01; ***, p<0.001)