0664

Deep learning based prediction of H3K27M mutation in midline gliomas on multimodal MRI

Priyanka Tupe Waghmare1, Piyush Malpure2, Manali Jadhav2, Abhilasha Indoria3, Richa Singh Chauhan4, Subhas Konar5, Vani Santosh3, Jitender Saini6, and Madhura Ingalhalikar7

1E&TC, Symbiosis Institute of Technology, Pune, India, 2Symbiosis Center for Medical Image Analysis, Pune, India, 3National Institute of Mental Health and Neurosciences, Bangalore, India, 4National Institute of Mental Health & Neurosciences, Pune, India, 5National Institute of Mental Health & Neurosciences, Bangalore, India, 6Department of Neuroimaging & Interventional Radiology, National Institute of Mental Health & Neurosciences, Bangalore, India, 7Symbiosis Center for Medical Image Analysis and Symbiosis Institute of Technology, Pune, India

Synopsis

In midline gliomas, patients with the H3K27M mutation have a poor prognosis and shorter median survival. Moreover, because these tumors are located deep in the brain, biopsy can be challenging and carries a substantial risk of morbidity. We propose a non-invasive, deep learning-based technique that detects the H3K27M mutation from pre-operative multi-modal MRI. In a cohort of 51 patients, the model achieved a testing accuracy of 69.76%. Furthermore, class activation maps illustrate the regions that support the classification. Overall, our preliminary results provide evidence that multi-modal MRI can support identification of the H3K27M mutation and, with further validation in larger studies, could be translated into the clinical workflow.

Introduction

The 2016 World Health Organization (WHO) classification of CNS tumors includes a new subtype, diffuse midline glioma with H3K27M mutation [1]. These tumors have a poor prognosis and shorter median survival [2]. Moreover, because they are located in deep structures such as the thalamus and brainstem, biopsy can be challenging and carries a substantial risk of morbidity. Early determination of the mutation status is crucial for treatment planning and may lead to better therapeutic efficacy and outcomes. However, H3K27M status is difficult to identify visually on multi-modal MRI. To this end, we propose to employ convolutional neural networks (CNNs) on multi-modal MRI to identify the H3K27M mutation. We also compute class activation maps to explain the CNN's predictions.

Methods

Fifty-one subjects with midline gliomas (mutant: n=28, age 33.39±16.70 years, M/F=16/12; wildtype: n=23, age 25.29±13.88 years, M/F=13/10) were included in this study, which was approved by the institutional ethics committee. All subjects had undergone surgical resection and standard post-surgical care and were identified retrospectively from their medical records. Immunohistochemical staining was performed to detect the histone H3 K27M mutant protein; twenty-eight subjects were positive for the mutation.

Subjects were scanned on Philips and Siemens scanners with (1) contrast-enhanced T1-weighted imaging (T1ce): 1.5T TR/TE=1800-2200/2.6-2.9 ms, 3T TR/TE=1900-2200/2.3-2.4 ms, resolution 1x1 mm; (2) FLAIR: 1.5T TR/TE=7500-9000/8.2-9.7 ms, 3T TR/TE=9000/8.1-9.4 ms, in-plane resolution 5x5 mm; and (3) T2-weighted imaging: 1.5T TR/TE=4900-6700/89-99 ms, 3T TR/TE=5200-6700/80-99 ms, resolution 5x5 mm.

Pre-processing included brain extraction, inhomogeneity correction and intensity normalization, followed by intra-subject affine registration using ANTS [3]. Tumors were segmented with the U-Net [4]-based model proposed by Isensee et al. and then corrected manually. The dataset was divided into a training cohort (22 mutant, 18 wildtype) and a testing cohort (6 mutant, 5 wildtype). After pre-processing, the FLAIR, T1ce and T2 images were stacked together as a multi-channel input.

Because the dataset was small, image augmentation was used to increase the amount of training data: vertical and horizontal flips, rotation, random vertical and horizontal shifts, random zoom and random shearing. The CNNs were applied to a bounding box around the tumor in each 2D axial slice containing tumor. We implemented a novel 23-layer architecture comprising 8 convolutional, 5 dropout, 8 max-pooling and 2 dense layers. The learning rate was initialized at 0.0003 and decreased as training progressed, based on the observed test error. Several standard CNN architectures were also trained and tested for comparison.
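The input preparation described above (intensity normalization, stacking FLAIR, T1ce and T2 as channels, and cropping a box around the tumor on each axial slice that contains it) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the volumes are assumed to be already brain-extracted, bias-corrected and co-registered to one voxel grid (the ANTS step), with axial slices along the last axis; z-score normalization inside the brain mask stands in for the unspecified normalization method; and a single in-plane box spanning the tumor on all slices, padded by a hypothetical `margin`, is used so every crop has the same shape. All function names are hypothetical, and resizing crops to the network's input size is omitted.

```python
import numpy as np


def zscore_normalize(volume, brain_mask):
    """Z-score intensities inside the brain mask; voxels outside are set to 0.

    Assumption: z-scoring is used here only as an example normalization.
    """
    out = np.zeros(volume.shape, dtype=np.float32)
    vals = volume[brain_mask].astype(np.float32)
    std = vals.std()
    out[brain_mask] = (vals - vals.mean()) / (std if std > 0 else 1.0)
    return out


def stack_modalities(flair, t1ce, t2, brain_mask):
    """Stack co-registered FLAIR, T1ce and T2 as channels: shape (X, Y, Z, 3)."""
    return np.stack(
        [zscore_normalize(v, brain_mask) for v in (flair, t1ce, t2)], axis=-1
    )


def tumor_box_slices(stacked, tumor_mask, margin=4):
    """Crop every axial slice (last spatial axis) that contains tumor voxels.

    One in-plane box covering the tumor on all slices, padded by the
    hypothetical `margin` (in voxels) and clipped to the image, is used so
    all crops share one shape. Returns shape (n_tumor_slices, h, w, 3).
    """
    xs, ys, zs = np.nonzero(tumor_mask)
    x0 = max(xs.min() - margin, 0)
    x1 = min(xs.max() + margin + 1, tumor_mask.shape[0])
    y0 = max(ys.min() - margin, 0)
    y1 = min(ys.max() + margin + 1, tumor_mask.shape[1])
    tumor_slices = np.unique(zs)  # axial indices with at least one tumor voxel
    crops = stacked[x0:x1, y0:y1, tumor_slices, :]  # shape (h, w, n, 3)
    return crops.transpose(2, 0, 1, 3)  # slices first, as 2D CNN samples


# Usage on synthetic data (random intensities, box-shaped "tumor").
rng = np.random.default_rng(0)
shape = (64, 64, 20)
flair, t1ce, t2 = (rng.normal(100.0, 20.0, shape) for _ in range(3))
brain = np.ones(shape, dtype=bool)
tumor = np.zeros(shape, dtype=bool)
tumor[20:30, 25:40, 8:12] = True

stacked = stack_modalities(flair, t1ce, t2, brain)
x = tumor_box_slices(stacked, tumor)
print(x.shape)  # (4, 18, 23, 3)
```

Using one box for all slices keeps the crops directly batchable; a per-slice box would follow the tumor more tightly but would require resizing each crop to a common input size.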
We trained VGG16, Xception, ResNet50 and DenseNet121 and tuned each to obtain the best accuracy. For the best-performing classifier, we computed class activation maps (CAMs) from the last convolutional layer using the method of Zhou et al. [5].

Results

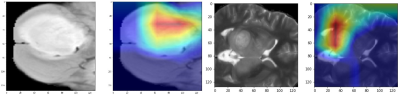

A schematic representation of the method is given in Figure 1. Our model outperformed the standard architectures; all comparative results are given in Figure 2. Shallower architectures such as VGG16 performed poorly on unseen data, while the performance of deeper models such as DenseNet121 degraded due to overfitting. Among the standard architectures, ResNet50 performed best, with a training accuracy of 64.42% and an F1 score of 0.59. Figure 3 illustrates the heat maps that show the most discriminative regions for each subject; red areas are weighted most highly by the CNN.

Discussion

Our study illustrates the feasibility of employing deep neural networks to identify the H3K27M mutation in midline gliomas. Once trained, deep models can classify new subjects rapidly, whereas radiomics-based models are computationally expensive and take a long time to compute texture features. Moreover, the class activation maps add interpretability. Identifying H3K27M status non-invasively from the first MRI scan is crucial for subsequent tailored treatment planning and therapeutic intervention aimed at improving outcomes. Although our dataset is small, the study is the first of its kind and provides evidence that deep models can be used in midline glioma analysis. Further validation on larger datasets, as well as prospective validation, is needed.

Acknowledgements

No acknowledgement found.

References

1. Louis, D. N., Perry, A., Reifenberger, G., Von Deimling, A., Figarella-Branger, D., Cavenee, W. K., ... & Ellison, D. W. (2016). The 2016 World Health Organization classification of tumors of the central nervous system: a summary. Acta Neuropathologica, 131(6), 803-820.

2. Enomoto, T., Aoki, M., Hamasaki, M., Abe, H., Nonaka, M., Inoue, T., & Nabeshima, K. (2019). Midline glioma in adults: clinicopathological, genetic, and epigenetic analysis. Neurologia Medico-Chirurgica, oa-2019.

3. Avants, B. B., Tustison, N., & Song, G. (2009). Advanced normalization tools (ANTS). Insight J, 2(365), 1-35.

4. Ronneberger, O., Fischer, P., & Brox, T. (2015, October). U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention (pp. 234-241). Springer, Cham.

5. Zhou, B., Khosla, A., Lapedriza, A., Oliva, A., & Torralba, A. (2016). Learning deep features for discriminative localization. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 2921-2929).