0601

Deep learning super-resolution for sub-50-micron MRI of genetically engineered mouse embryos

1Biomedical Imaging Research Institute, Cedars-Sinai Medical Center, Los Angeles, CA, United States, 2Department of Bioengineering, UCLA, Los Angeles, CA, United States, 3Department of Developmental Biology, University of Pittsburgh, Pittsburgh, PA, United States

Synopsis

Genetically engineered mouse models (GEMMs) are indispensable for modeling human diseases. High-resolution MRI with spatial resolution finer than 100 μm has made great progress in phenotyping mouse embryos. However, reaching such resolution takes more than 10 hours of acquisition time, so reducing scan time is an important need. Here we propose a deep-learning-based approach for 3x3 super-resolution (SR) of mouse embryo images, trained using raw k-space data. Our method can reduce the scan time by a factor of 9 while preserving diagnostic details, and it shows better quantitative results than previous SR methods.

Introduction

Genetically engineered mouse models (GEMMs) are indispensable for modeling human diseases and developing therapeutic strategies, because mice and humans share related physiology, anatomy, and genes. High-resolution MRI with spatial resolution finer than 100 μm has made tremendous progress in phenotyping formalin-fixed mouse embryos [1]. However, reaching such resolution takes more than 10 hours of acquisition time, so reducing scan time is an important need. There are several approaches to accelerating imaging, such as compressed sensing [4]; however, super-resolution neural networks are a particularly attractive option with wide applicability, as they can accept scanner-generated low-resolution images as inputs without requiring custom sequences and reconstructions.

Because large high-resolution (HR) raw datasets are generally unavailable, most previous MR single image super-resolution (SISR) studies [2,3] have applied magnitude degradation (MD) to simulate low-resolution (LR) training images from available datasets of HR magnitude images (Figure 1). However, this is a physically unrealistic resolution degradation model: the source HR magnitude images lack phase information, so the simulated LR images are not fully representative of the true LR images that the scanner would prospectively produce, which potentially limits practical application.

Here we propose a deep learning based super-resolution approach for 3x3 super-resolution of mouse embryo images; our approach is trained with raw k-space data, which allowed us to apply physically realistic complex degradation (CD) to obtain our LR training images.

Methods

Data acquisition

High-resolution ex vivo MRI of mouse embryos was carried out on a Bruker BioSpec 70/30 USR spectrometer (Bruker BioSpin MRI, Billerica, MA, USA) operating at 7T field strength with a 3D RARE pulse sequence. Each scan took 15 hours (FOV = 40 x 14 x 9 mm³), with a spatial resolution of 39 x 46 x 46 μm³. A total of 57 litters were imaged, typically with 6-7 embryos per litter, for a total of over 300 embryos.

Image preprocessing

Resolution was degraded in the two phase-encoding directions. We performed 3x3 physically realistic CD by truncating the raw k-space; for comparison to previous MD approaches, we also generated 3x3 MD images by reconstructing the magnitude HR image and truncating its simulated k-space (Figure 1).
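The difference between the two degradation models can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' preprocessing code: the function names (`fft2c`, `ifft2c`, `truncate_center`, `complex_degradation`, `magnitude_degradation`) are hypothetical, the input is taken to be a single 2D complex k-space slice spanning the two phase-encoding directions, and the intensity rescaling after truncation is an illustrative choice.

```python
import numpy as np

def fft2c(img):
    """Centered 2D FFT: image -> k-space (DC at index n // 2)."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(img)))

def ifft2c(ksp):
    """Centered 2D inverse FFT: k-space -> image."""
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(ksp)))

def truncate_center(ksp, factor=3):
    """Keep the central 1/factor of k-space along both axes."""
    ny, nx = ksp.shape
    ky, kx = ny // factor, nx // factor
    # Crop so that the DC sample stays at the center of the smaller grid.
    y0, x0 = ny // 2 - ky // 2, nx // 2 - kx // 2
    # Rescale so the mean intensity survives the smaller inverse FFT
    # (assumption: an illustrative normalization, not taken from the abstract).
    scale = (ky * kx) / (ny * nx)
    return ksp[y0:y0 + ky, x0:x0 + kx] * scale

def complex_degradation(raw_ksp, factor=3):
    """CD: truncate the raw complex k-space, then take the magnitude."""
    return np.abs(ifft2c(truncate_center(raw_ksp, factor)))

def magnitude_degradation(raw_ksp, factor=3):
    """MD: discard the phase first, then truncate the simulated k-space."""
    hr_magnitude = np.abs(ifft2c(raw_ksp))
    return np.abs(ifft2c(truncate_center(fft2c(hr_magnitude), factor)))
```

For an image with zero phase the two functions return the same LR image; they diverge as soon as the underlying complex image carries spatially varying phase, which is the case the CD model is meant to capture.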

Network structure and training

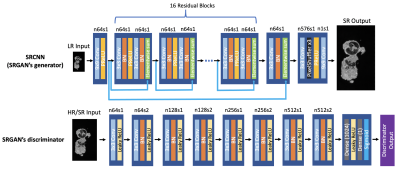

We trained two sets of networks (SRCNN and SRGAN [5]) to compare the network types with each other and to determine the importance of CD to each network type. The SRGAN structure is shown in Figure 2; the SRCNN is identical to the SRGAN's generator. We used 2D slices spanning the two phase-encoding directions for training, decoupling the problem along the readout dimension. Of the 57 scans, 45 were used for training, 6 for validation, and 6 for testing, corresponding to 23040, 3072, and 3072 slices, respectively. Patch training was performed with a patch size of 96x96. The loss function for the SRCNN was the mean absolute error (MAE) between SR and HR images; for the SRGAN, the GAN loss was added. For each resolution degradation method, the SRCNN was trained with the Adam optimizer for 200000 steps, and the SRGAN was then trained for another 40000 steps using the SRCNN's results as initial weights.
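Patch sampling and the MAE loss described above might look like the following NumPy sketch. Several details are assumptions rather than facts from the abstract: the 96x96 patch size is taken to refer to the HR patch (so the matching LR patch is 32x32 for a 3x3 factor), the LR slice is assumed to be exactly one third the size of the HR slice along each axis, and the helper names `sample_patch_pair` and `mae` are hypothetical.

```python
import numpy as np

def sample_patch_pair(lr, hr, hr_patch=96, factor=3, rng=None):
    """Draw one spatially aligned (LR, HR) patch pair from a 2D slice pair.

    Assumes hr.shape == (factor * lr.shape[0], factor * lr.shape[1]).
    """
    rng = np.random.default_rng() if rng is None else rng
    lr_patch = hr_patch // factor  # 32 for a 96-pixel HR patch and factor 3
    y = int(rng.integers(0, lr.shape[0] - lr_patch + 1))
    x = int(rng.integers(0, lr.shape[1] - lr_patch + 1))
    lr_crop = lr[y:y + lr_patch, x:x + lr_patch]
    # The HR crop covers the same field of view at 3x the sampling density.
    hr_crop = hr[y * factor:(y + lr_patch) * factor,
                 x * factor:(x + lr_patch) * factor]
    return lr_crop, hr_crop

def mae(sr, hr):
    """Mean absolute error between a super-resolved image and its HR target."""
    return float(np.mean(np.abs(sr - hr)))
```

Sampling the crop position on the LR grid and scaling it by the factor keeps the two patches aligned to whole LR pixels, which avoids sub-pixel misregistration between input and target.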

Testing process

The 6 testing cases (3072 slices) were preprocessed with 3x3 CD and fed into both networks. The SR output images for both networks and both resolution degradation methods, as well as the bicubic interpolation of the LR images, were compared with the HR ground truth images using SSIM and NRMSE.
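The two metrics can be illustrated with a short NumPy sketch. The abstract does not state which NRMSE normalization or SSIM settings were used, so the choices here are assumptions: `nrmse` normalizes by the Euclidean norm of the reference, and `global_ssim` is a simplified single-window SSIM computed over the whole image, whereas standard SSIM averages the same expression over local sliding windows. Both function names are hypothetical.

```python
import numpy as np

def nrmse(sr, hr):
    """RMSE normalized by the Euclidean norm of the reference image."""
    return float(np.linalg.norm(sr - hr) / np.linalg.norm(hr))

def global_ssim(sr, hr, data_range=None):
    """Single-window SSIM over the whole image (simplified, no sliding window)."""
    if data_range is None:
        data_range = float(hr.max() - hr.min())
    # Stabilizing constants with the conventional K1 = 0.01 and K2 = 0.03.
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = sr.mean(), hr.mean()
    var_x, var_y = sr.var(), hr.var()
    cov_xy = ((sr - mu_x) * (hr - mu_y)).mean()
    luminance = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    structure = (2 * cov_xy + c2) / (var_x + var_y + c2)
    return float(luminance * structure)
```

A perfect reconstruction gives an NRMSE of 0 and an SSIM of 1; lower NRMSE and higher SSIM indicate closer agreement with the HR ground truth.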

Results

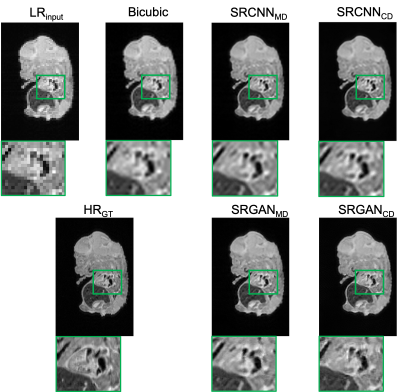

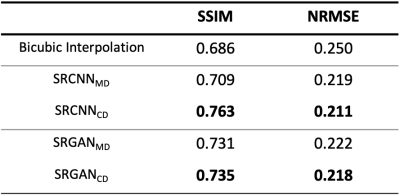

Figure 3 shows an example testing slice with the LR image, the bicubic interpolation image, each network output image, and the HR ground truth. “SRCNNMD” and “SRGANMD” indicate the SR networks trained similarly to previous MD approaches. “SRCNNCD” and “SRGANCD” indicate the SR networks trained with the proposed CD method. Both SRGAN results appear sharper than the SRCNN results.

The quantitative image comparisons with the ground truth across all testing slices are shown in Figure 4. All network outputs are better than the bicubic interpolation results, and both CD networks have better scores than both MD networks. The SRCNN trained with the proposed CD method has the best quantitative scores.

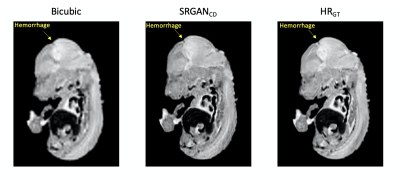

To demonstrate the impact of super-resolution on diagnosis, Figure 5 shows an example in which a hemorrhage in the mouse brain is unclear in the bicubic interpolation image but clearly visible in the SRGANCD image.

Discussion

Although SRCNNCD had the best quantitative scores, the SRGANCD outputs have sharper edges and are perceptually more similar to the ground truth. Figure 4 also shows that the improvement of CD over MD was greater for the SRCNN than for the SRGAN; this may suggest that the GAN is more robust to imperfect training data, whereas a CNN without a GAN may need more physically realistic training data.

This study suggests that for MR super-resolution, training should include raw data wherever possible. When raw data are not available, transfer learning or domain adaptation incorporating datasets such as ours may be useful.

Conclusions

Our study suggests that deep-learning-based MRI super-resolution benefits from training on raw data. Because diagnostic details were preserved in the SR outputs, it is possible to reduce high-resolution mouse embryo MRI scan time by a factor of 9, from 15 hrs to 1.7 hrs. A prospective validation of this scan time reduction will be the subject of future study.
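The factor-of-9 figure follows from simple arithmetic, sketched below under the assumption that scan time scales linearly with the number of phase-encoding steps, so that a 3x reduction along each of the two phase-encoding directions divides the scan time by 9. The function name `accelerated_scan_hours` is hypothetical.

```python
def accelerated_scan_hours(hr_hours, factor_per_axis=3, n_phase_axes=2):
    """Return (LR scan time in hours, acceleration factor).

    Assumes scan time is proportional to the number of phase-encoding
    steps, with all other sequence parameters held fixed.
    """
    acceleration = factor_per_axis ** n_phase_axes
    return hr_hours / acceleration, acceleration

lr_hours, acceleration = accelerated_scan_hours(15.0)
print(f"{acceleration}x acceleration: 15 h -> {lr_hours:.1f} h")
```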

References

1. Wu, Yijen L., and Cecilia W. Lo. "Diverse application of MRI for mouse phenotyping." Birth Defects Research 109.10 (2017): 758-770. doi:10.1002/bdr2.1051

2. Lyu, Qing, Hongming Shan, and Ge Wang. "MRI super-resolution with ensemble learning and complementary priors." IEEE Transactions on Computational Imaging 6 (2020): 615-624.

3. Chen, Yuhua, et al. "Brain MRI super resolution using 3D deep densely connected neural networks." 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018). IEEE, 2018.

4. Lustig, Michael, et al. "Compressed sensing MRI." IEEE signal processing magazine 25.2 (2008): 72-82.

5. Ledig, Christian, et al. "Photo-realistic single image super-resolution using a generative adversarial network." Proceedings of the IEEE conference on computer vision and pattern recognition. 2017.

Figures