0599

CT-to-MR image synthesis: A generative adversarial network-based method for detecting hypoattenuating lesions in acute ischemic stroke

¹Huaxi MR Research Center (HMRRC), Department of Radiology, West China Hospital of Sichuan University, Chengdu, China; ²Department of Computer and Engineering, University of Electronic Science and Technology of China, Chengdu, China; ³Department of Bioengineering, University of Pennsylvania, Philadelphia, PA, United States

Synopsis

We aimed to develop a CT-to-MR image synthesis method to assist readers in detecting hypoattenuating brain lesions in acute ischemic stroke (AIS). Emergency head CT images and follow-up MR images of 193 patients with suspected stroke were collected. A generative adversarial network (GAN) model was developed for CT-to-MR image synthesis. Compared with CT, synthetic MRI improved sensitivity by 116% in patient detection and by 300% in lesion detection, and an additional 75% of patients and 15% of lesions missed on CT were detected on synthetic MRI. Our method could be a rapid tool to improve readers' detection of hypoattenuating lesions in AIS.

Introduction

Brain assessment in acute ischemic stroke requires both speed and sensitivity. We aimed to develop a rapid CT-to-MR image synthesis method to assist readers in detecting hypoattenuating brain lesions in acute ischemic stroke.

Methods

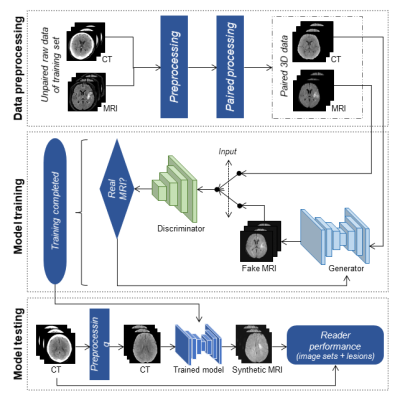

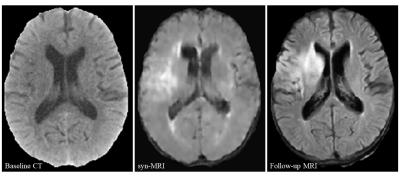

Emergency head CT images of 193 patients with a primary suspicion of stroke, together with follow-up fluid-attenuated inversion recovery (FLAIR) MR images, were retrospectively collected from September 2015 to November 2018. Paired CT and MR images from 140 randomly selected patients were used for training, and the images of the remaining patients were used for testing. After image preprocessing (format conversion, registration, skull stripping, and spatial normalization), CT and MR images of the training set were resampled to paired three-dimensional data (matrix, 256×256; voxel size, 0.45×0.45×7 mm³; slices, 12). Based on the pix2pix formulation of generative adversarial networks (GANs),1 a modified GAN model was developed to enforce lesion-wise consistency between CT images and synthetic MRI (syn-MRI) images. The model was trained adversarially, with a generator translating CT images into syn-MRI images and a discriminator distinguishing syn-MRI images from follow-up MRI images (Figure 1). For each patient, the final model took on average 52.23 seconds for preprocessing and 0.01 seconds for synthesis. In the testing set, hyperintense brain lesions were manually segmented on follow-up MRI as the reference standard.
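For reference, the baseline pix2pix objective (reference 1) combines a conditional adversarial loss with an L1 reconstruction term. Taking the CT image as the input x and the follow-up FLAIR image as the target y, it can be written as below. This is only the standard pix2pix formulation: the abstract does not specify the lesion-wise consistency term added in the modified model, nor the weight λ used.

$$
\begin{aligned}
\mathcal{L}_{\mathrm{cGAN}}(G,D) &= \mathbb{E}_{x,y}\left[\log D(x,y)\right] + \mathbb{E}_{x,z}\left[\log\left(1 - D\big(x, G(x,z)\big)\right)\right],\\
\mathcal{L}_{L1}(G) &= \mathbb{E}_{x,y,z}\left[\lVert y - G(x,z)\rVert_1\right],\\
G^{*} &= \arg\min_{G}\max_{D}\; \mathcal{L}_{\mathrm{cGAN}}(G,D) + \lambda\,\mathcal{L}_{L1}(G),
\end{aligned}
$$

where G(x, z) is the syn-MRI image, z is the generator noise (supplied through dropout in pix2pix), and D(x, ·) scores whether an MR image paired with CT image x is real follow-up MRI or synthetic.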

The performance of three readers (a technician, a radiology resident, and a radiologist) was evaluated with sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), accuracy, and F measure. Performance improvement was expressed as the percentage change with syn-MRI relative to CT. Detection time and self-confidence scores for patient detection were recorded with in-house software. Lesion detection performance, detection time, and self-confidence scores were compared between syn-MRI and CT using paired t-tests or Wilcoxon signed-rank tests.
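The reader metrics listed above follow the standard confusion-matrix definitions. The sketch below is a minimal illustration, not the authors' analysis code: `Counts`, `reader_metrics`, and `percent_change` are hypothetical names, and F measure is assumed to be the F1 score (harmonic mean of PPV and sensitivity). Plugging in the pooled patient-level counts from the Results (sensitivity 123/150 vs 57/150, specificity 3/9 vs 6/9) gives the reported +116% change in sensitivity and -50% change in specificity.

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class Counts:
    """Confusion-matrix counts for one modality (hypothetical helper)."""
    tp: int  # positives correctly labeled
    fp: int  # negatives labeled as positive
    tn: int  # negatives correctly labeled
    fn: int  # positives missed


def _ratio(num: float, den: float) -> float:
    # Return NaN instead of raising when a denominator is zero.
    return num / den if den else math.nan


def reader_metrics(c: Counts) -> dict[str, float]:
    """Sensitivity, specificity, PPV, NPV, accuracy, and F measure (assumed F1)."""
    sens = _ratio(c.tp, c.tp + c.fn)
    spec = _ratio(c.tn, c.tn + c.fp)
    ppv = _ratio(c.tp, c.tp + c.fp)
    npv = _ratio(c.tn, c.tn + c.fn)
    acc = _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn)
    f1 = _ratio(2 * ppv * sens, ppv + sens)  # harmonic mean of PPV and sensitivity
    return {"sensitivity": sens, "specificity": spec, "ppv": ppv,
            "npv": npv, "accuracy": acc, "f_measure": f1}


def percent_change(new: float, ref: float) -> float:
    """Percentage change of `new` (syn-MRI) relative to `ref` (CT)."""
    return 100.0 * (new - ref) / ref


if __name__ == "__main__":
    # Pooled patient-level counts as reported in the Results.
    syn = Counts(tp=123, fp=6, tn=3, fn=27)
    ct = Counts(tp=57, fp=3, tn=6, fn=93)
    m_syn, m_ct = reader_metrics(syn), reader_metrics(ct)
    for key in m_syn:
        print(f"{key:12s} syn-MRI {m_syn[key]:.3f}  CT {m_ct[key]:.3f}  "
              f"change {percent_change(m_syn[key], m_ct[key]):+.0f}%")
```

Returning NaN for empty denominators keeps per-reader tables computable when, for example, a reader labels no patients as negative.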

Results

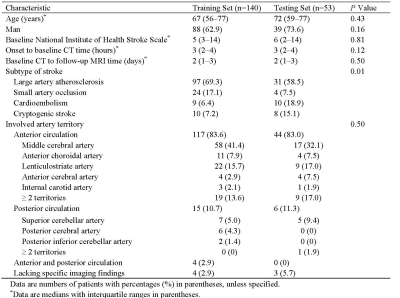

Patient characteristics are shown in Table 1. In the testing set, 540 hyperintense lesions were identified as the reference standard, 84% (453/540) of which were smaller than 10 cm². When readers labeled syn-MRI rather than CT, sensitivity improved by 116% in patient detection (syn-MRI: 82% [123/150; 95% CI: 75%, 88%] vs CT: 38% [57/150; 95% CI: 30%, 46%]) and by 300% in lesion detection (syn-MRI: 16% [262/1620; 95% CI: 14%, 18%] vs CT: 4% [68/1620; 95% CI: 3%, 5%]; R=0.32, P<0.001), although specificity in patient detection decreased by 50% (syn-MRI: 33% [3/9; 95% CI: 9%, 69%] vs CT: 67% [6/9; 95% CI: 31%, 91%]) (Figure 2). An additional 75% (70/93) of patients and 15% (77/517) of lesions missed on CT were detected on syn-MRI (Figure 3).

No significant differences were found between syn-MRI and CT in overall detection time (P=0.29) or self-confidence (P=0.14) when labeling patients. Individually, both the resident (R=-0.40, P<0.001) and the radiologist (R=-0.22, P=0.03) labeled faster with syn-MRI than with CT, while their self-confidence remained unchanged. The technician had prolonged detection time (R=-0.34, P<0.001) and mildly reduced self-confidence (R=-0.33, P=0.001) with syn-MRI.
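The abstract does not state how its 95% CIs were computed. As a hedged check, not a description of the authors' method, the Wilson score interval with continuity correction (Newcombe's method 4) reproduces the reported bounds for the four patient-level proportions after rounding to whole percentages; `wilson_cc` is a hypothetical helper name.

```python
import math


def wilson_cc(x: int, n: int, z: float = 1.959963984540054) -> tuple[float, float]:
    """Two-sided Wilson score interval with continuity correction
    (Newcombe 1998, method 4) for a binomial proportion x/n."""
    if n <= 0 or not 0 <= x <= n:
        raise ValueError("require n > 0 and 0 <= x <= n")
    p, q = x / n, 1 - x / n
    denom = 2 * (n + z * z)
    # The bounds are pinned to 0 or 1 when the observed proportion is 0 or 1.
    if x == 0:
        lower = 0.0
    else:
        lower = (2 * n * p + z * z - 1
                 - z * math.sqrt(z * z - 2 - 1 / n + 4 * p * (n * q + 1))) / denom
    if x == n:
        upper = 1.0
    else:
        upper = (2 * n * p + z * z + 1
                 + z * math.sqrt(z * z + 2 - 1 / n + 4 * p * (n * q - 1))) / denom
    return max(0.0, lower), min(1.0, upper)


if __name__ == "__main__":
    # Patient-level proportions as reported in the Results.
    cases = [("syn-MRI sensitivity", 123, 150), ("CT sensitivity", 57, 150),
             ("syn-MRI specificity", 3, 9), ("CT specificity", 6, 9)]
    for label, x, n in cases:
        lo, hi = wilson_cc(x, n)
        print(f"{label:20s} {x}/{n} = {x / n:.0%}  95% CI {lo:.0%}, {hi:.0%}")
```

The continuity correction widens the interval slightly, which matters most for the small specificity denominators (n=9).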

Discussion

We developed a rapid CT-to-MR image synthesis method using GANs for sensitive detection of hypoattenuating brain lesions in acute ischemic stroke. To our knowledge, cross-modality synthesis of stroke imaging has not previously been well established in the literature.

Many methods have been developed for detecting ischemic stroke on CT, mostly aiming to improve the visibility of hypoattenuating lesions, e.g., contrast enhancement,2-4 voxel-wise comparisons,5-7 texture analyses,8-10 support vector machines,11-13 neural networks,14,15 and hybrid approaches.16 Our GAN-based method differs from these in several respects. First, MRI-like images were synthesized from CT before reader interpretation, combining rapid CT scanning with MRI-like sensitivity in a single examination. Second, neither cross-hemisphere comparisons nor corrections for brain asymmetry were needed, reducing the risk of misdiagnosing symmetrical infarcts and of overdiagnosing other contralateral pathologies. Third, the algorithm did not require clinical data as prior information, making the analysis more convenient. Fourth, GAN-based methods have been reported to generate better images than typical convolutional neural network methods.17

Regarding expertise, the sensitivity improvement was larger for the technician than for the radiologist, suggesting a greater benefit for novice than for expert readers. Novices could include radiologists in remote regions or frontline staff such as paramedics in mobile stroke units, technicians, and emergency physicians. The shorter detection times for the resident and the radiologist also suggest possible practical value for specialist physicians. In emergency settings where non-radiologists act as readers, rapid recognition of suspected stroke on hospital arrival, or even at the scene, without waiting for a formal radiology report could trigger earlier notification of the in-hospital team and thus shorten the time from CT to decision making.18

Conclusion

CT-to-MR image synthesis using GANs could be a rapid and convenient method to improve the sensitivity of readers' detection of hypoattenuating lesions in acute ischemic stroke. It might serve as a screening tool for both inexperienced radiologists and non-radiologists in the emergency setting.

Acknowledgements

We thank Xiaodan Bao, BSc (Chengdu Second People's Hospital, China) for test participation and Xue Pei, BSc (West China Hospital of Sichuan University, China) for data collection.

References

1. Isola P, Zhu J, Zhou T, et al. Image-to-image translation with conditional adversarial networks. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017:5967-5976.

2. Bier G, Bongers MN, Ditt H, et al. Accuracy of non-enhanced CT in detecting early ischemic edema using frequency selective non-linear blending. PLoS One. 2016;11:e0147378.

3. Nurhayati OD, Windasari IP. Stroke identification system on the mobile based CT scan image. 2015 2nd International Conference on Information Technology, Computer, and Electrical Engineering (ICITACEE). 2015:113-116.

4. Przelaskowski A, Sklinda K, Bargiel P, et al. Improved early stroke detection: Wavelet-based perception enhancement of computerized tomography exams. Comput Biol Med. 2007;37:524-533.

5. Gillebert CR, Humphreys GW, Mantini D. Automated delineation of stroke lesions using brain CT images. NeuroImage Clin. 2014;4:540-548.

6. Srivatsan A, Christensen S, Lansberg MG. A relative noncontrast CT map to detect early ischemic changes in acute stroke. J Neuroimaging. 2019;29:182-186.

7. Takahashi N, Tsai DY, Lee Y, et al. Z-score mapping method for extracting hypoattenuation areas of hyperacute stroke in unenhanced CT. Acad Radiol. 2010;17:84-92.

8. Davis A, Gordillo N, Montseny E, et al. Automated detection of parenchymal changes of ischemic stroke in non-contrast computer tomography: A fuzzy approach. Biomed Signal Process Control. 2018;45:117-127.

9. Kanchana R, Menaka R. A novel approach for characterisation of ischaemic stroke lesion using histogram bin-based segmentation and gray level co-occurrence matrix features. Imag Sci J. 2017;65:124-136.

10. Peter R, Korfiatis P, Blezek D, et al. A quantitative symmetry-based analysis of hyperacute ischemic stroke lesions in noncontrast computed tomography. Med Phys. 2017;44:192-199.

11. Maya BS, Asha T. Automatic detection of brain strokes in CT images using soft computing techniques. In: Hemanth J, Balas V, eds. Biologically rationalized computing techniques for image processing applications. Lecture notes in computational vision and biomechanics. Cham: Springer. 2018;25:85-109.

12. Peixoto SA, Rebouças Filho PP. Neurologist-level classification of stroke using a structural co-occurrence matrix based on the frequency domain. Comput Electr Eng. 2018;71:398-407.

13. Reboucas Filho PP, Sarmento RM, Holanda GB, et al. New approach to detect and classify stroke in skull CT images via analysis of brain tissue densities. Comput Methods Programs Biomed. 2017;148:27-43.

14. Lisowska A, O’Neil A, Dilys V, et al. Context-aware convolutional neural networks for stroke sign detection in non-contrast CT scans. In: Valdés Hernández M, González-Castro V, eds. Medical image understanding and analysis. MIUA 2017. Communications in Computer and Information Science. Cham: Springer. 2017:494-505.

15. Tang FH, Ng DK, Chow DH. An image feature approach for computer-aided detection of ischemic stroke. Comput Biol Med. 2011;41:529-536.

16. Qiu W, Kuang H, Teleg E, et al. Machine learning for detecting early infarction in acute stroke with non-contrast-enhanced CT. Radiology. 2020;294:638-644.

17. Yu B, Wang Y, Wang L, et al. Medical image synthesis via deep learning. Adv Exp Med Biol. 2020;1213:23-44.

18. Wang H, Thevathasan A, Dowling R, et al. Streamlining workflow for endovascular mechanical thrombectomy: Lessons learned from a comprehensive stroke center. J Stroke Cerebrovasc Dis. 2017;26:1655-1662.

Figures