0536

Discriminative feature learning and adaptive fusion for the grading of hepatocellular carcinoma with contrast-enhanced MR

1 School of Medical Information Engineering, Guangzhou University of Chinese Medicine, Guangzhou, China
2 Department of Radiology, Guangdong General Hospital, Guangzhou, China
3 Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China

Synopsis

Combining contextual information from multiple modalities is highly valuable for lesion characterization. However, two challenges remain for multimodal lesion characterization: features overlap between different tumor grades, and the contributions of the individual modalities differ widely. In this work, we propose a discriminative feature learning and adaptive fusion method within a deep learning framework to improve the performance of multimodal fusion for lesion characterization. Experimental results on the grading of clinical hepatocellular carcinoma (HCC) demonstrate that the proposed method outperforms previously reported fusion methods, including concatenation, correlated and individual feature learning, and the deeply supervised net.

Introduction

Contrast-enhanced MR has shown promise for the diagnosis and characterization of hepatocellular carcinoma (HCC) in clinical practice [1]. However, determining the histological grade (differentiation) of HCC on contrast-enhanced images is often very challenging, especially in patients with cirrhosis, because of the structural distortion of the liver parenchyma and because the large heterogeneity of tumors gives different HCC grades overlapping image appearances [2]. To address this, we propose a discriminative feature learning and adaptive fusion method within a deep learning framework for lesion characterization in contrast-enhanced MR. Specifically, we introduce a discriminative intra-class loss function that reduces the distance between features of the same class and increases the distance between features of different classes of neoplasm. Furthermore, we design an adaptive weighting strategy, based on the loss values of the different modalities, that increases the contribution of modalities with relatively low loss values and reduces the impact of modalities with large loss values in the final loss function.

Methods

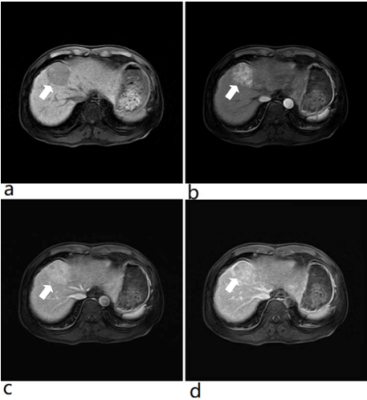

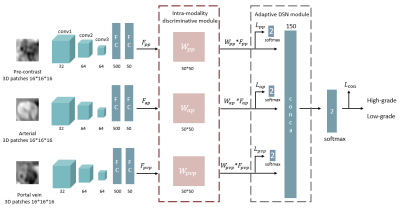

From October 2012 to December 2018, a total of 112 patients with 117 histologically confirmed HCCs were retrospectively included in the study. Gd-DTPA-enhanced MR imaging was performed with a 3.0T MR scanner (Signa Excite HD 3.0T, GE Healthcare, Milwaukee, WI, USA) and an eight-channel phased-array coil using a breath-hold axial LAVA+C (liver acquisition with volume acceleration, LAVA) protocol (Figure 1). The pathological diagnosis of HCC was based on surgical specimens, and the histological grade of each HCC was retrieved from archived clinical histology reports: 54 were low-grade and 63 were high-grade.

The proposed framework is shown in Figure 2. First, image patches from three phases of contrast-enhanced MR (pre-contrast, arterial and portal vein phase) are fed separately into the CNN, and deep features (Fpp, Fap and Fpvp) are taken from its second fully connected layer. Then, we introduce an intra-modality discriminative module [3] to reduce the distance between features of the same category and increase the distance between features of different categories. Three projection matrices Wpp, Wap and Wpvp are learned to project the deep features Fpp, Fap and Fpvp into new feature representations (Fpp*Wpp, Fap*Wap, Fpvp*Wpvp), so that within each modality the correlation between samples of the same category is maximized and the correlation between samples of different categories is minimized. Subsequently, we design an adaptive deeply supervised net (DSN) [4] module for multimodal fusion, in which each of the learned features (Fpp*Wpp, Fap*Wap, Fpvp*Wpvp) is fed to its own softmax layer for classification and, simultaneously, the three are concatenated and fed to a further softmax layer. Lpp, Lap, Lpvp and Lcon are the cross-entropy loss functions corresponding to the three phases and the concatenated features, respectively. The final prediction is obtained from the model trained by minimizing the intra-modality discriminative term together with the adaptive deeply supervised loss built from Lpp, Lap, Lpvp and Lcon.
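
The training objective described above can be sketched in NumPy. This is a minimal, non-authoritative sketch under stated assumptions: the abstract does not give the exact form of the discriminative term or of the adaptive weights, so both are assumed here. The discriminative term is taken as the mean cosine similarity of different-class pairs minus that of same-class pairs after projection (a simplified stand-in, not the exact formulation of [3]); the adaptive weights are assumed to be a softmax over the negated phase losses, so phases with lower loss receive larger weights; and Lcon is assumed to enter with a fixed weight of 1. The function names, `lam` and `temperature` are hypothetical. Only the loss value is computed; in practice the CNN and projection matrices would be optimized with automatic differentiation, for example in TensorFlow as used in this work.

```python
import numpy as np

PHASES = ("pp", "ap", "pvp")  # pre-contrast, arterial, portal vein phase


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    z = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(z)
    return e / np.sum(e, axis=axis, keepdims=True)


def cross_entropy(logits, labels):
    """Mean cross-entropy for integer labels (e.g. 0 = low grade, 1 = high grade)."""
    probs = softmax(logits)
    picked = probs[np.arange(len(labels)), labels]
    return float(-np.mean(np.log(picked + 1e-12)))


def discriminative_term(features, labels, W):
    """ASSUMED form: mean inter-class minus mean intra-class cosine similarity
    of the projected features features @ W (lower is better)."""
    z = features @ W
    z = z / (np.linalg.norm(z, axis=1, keepdims=True) + 1e-12)
    sim = z @ z.T
    same = labels[:, None] == labels[None, :]
    off_diag = ~np.eye(len(labels), dtype=bool)
    intra = sim[same & off_diag].mean()  # same-class pairs, excluding self-pairs
    inter = sim[~same].mean()            # different-class pairs
    return float(inter - intra)


def adaptive_weights(phase_losses, temperature=1.0):
    """ASSUMED weighting: softmax over negated losses, so lower loss -> larger weight."""
    losses = np.asarray(phase_losses, dtype=float)
    return softmax(-losses / temperature)


def total_loss(phase_logits, concat_logits, phase_feats, phase_W, labels, lam=0.1):
    """Adaptive deeply supervised loss plus the weighted discriminative terms."""
    phase_losses = [cross_entropy(phase_logits[p], labels) for p in PHASES]
    w = adaptive_weights(phase_losses)
    l_con = cross_entropy(concat_logits, labels)  # concatenated-feature branch, weight 1 (assumed)
    l_dsn = l_con + float(np.dot(w, phase_losses))
    l_disc = sum(discriminative_term(phase_feats[p], labels, phase_W[p]) for p in PHASES)
    return l_dsn + lam * l_disc, w


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d, k = 8, 16, 4  # samples, deep-feature size, projected size (toy values)
    labels = np.array([0, 0, 0, 0, 1, 1, 1, 1])
    feats = {p: rng.normal(size=(n, d)) for p in PHASES}
    Ws = {p: rng.normal(size=(d, k)) for p in PHASES}
    projected = {p: feats[p] @ Ws[p] for p in PHASES}
    # One toy linear softmax head per phase, plus one on the concatenated features.
    logits = {p: projected[p] @ rng.normal(size=(k, 2)) for p in PHASES}
    concat = np.concatenate([projected[p] for p in PHASES], axis=1)
    concat_logits = concat @ rng.normal(size=(3 * k, 2))
    loss, weights = total_loss(logits, concat_logits, feats, Ws, labels)
    print("total loss:", loss, "phase weights:", weights)
```

Running the script prints a loss value and three phase weights that sum to 1, with the largest weight on the phase whose cross-entropy is lowest. A softmax over negated losses is only one way to realize "more weight for lower loss"; normalized inverse losses would be an equally plausible reading of the description.
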
The proposed multimodal feature fusion method was implemented in TensorFlow. The dataset was randomly divided into a training set (77 HCCs) and a test set (40 HCCs). Training and testing were repeated five times to reduce measurement error, and accuracy, sensitivity, specificity and area under the ROC curve (AUC) were averaged over the five runs.

Results

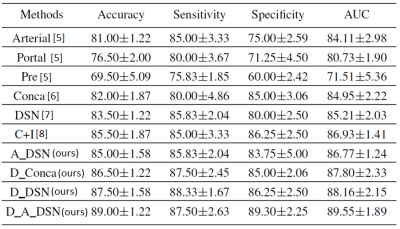

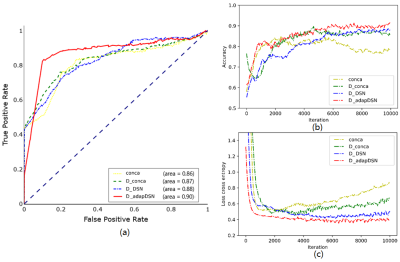

Table 1 shows the characterization performance of 3D CNN multi-phase fusion with different methods. Arterial CNN, Portal vein CNN and Pre CNN in Table 1 denote the typical 3D CNN [5] applied to each of the three single phases. All typical fusion methods obtained performance gains over the single phases, and DSN yields better results than concatenation [6]. Importantly, DSN with the proposed adaptive weighting adjustment module (A_DSN) performs better than DSN [7]. Meanwhile, both concatenation and DSN combined with the proposed discriminative intra-modality module (D_conca and D_DSN) show significantly improved performance. In particular, our proposed method with both modules (D_A_DSN) yields the best characterization performance, clearly outperforming the previously reported fusion method of correlated and individual feature analysis (C+I) [8]. Finally, receiver operating characteristic (ROC) curves, accuracy curves and loss curves for the different methods are plotted in Figure 3(a), 3(b) and 3(c), respectively.

Discussion

All typical fusion methods obtained performance gains over the single phases, indicating that these fusion methods are effective. DSN yields better results than concatenation, confirming the benefit of deep supervision in DSN for fusion. DSN with the proposed adaptive weighting adjustment module (A_DSN) performs better than DSN, indicating the effectiveness of the proposed module, which adaptively adjusts the weights of the different modalities during multimodal learning. Furthermore, both concatenation and DSN combined with the proposed discriminative intra-modality module (D_conca and D_DSN) show significantly improved performance, suggesting that the large intra-class variation of neoplasms degrades lesion characterization and that reducing this variation clearly improves characterization performance.

Conclusion

Our study indicates that reducing intra-class variation and adaptively adjusting the weights of different modalities during multimodal learning each significantly improve characterization performance. Adopting both modules yields a further improvement, clearly outperforming previously reported fusion methods. We believe that the proposed method will be beneficial for lesion characterization with multimodal images.

Acknowledgements

This research is sponsored by a grant from the National Natural Science Foundation of China (81771920).

References

[1] Choi JY, Lee JM, Sirlin CB. CT and MR Imaging Diagnosis and Staging of Hepatocellular Carcinoma: Part II. Extracellular Agents, Hepatobiliary Agents, and Ancillary Imaging Features. Radiology 2014;273(1):30-50.

[2] Xue R, Li R, Guo H, et al. Variable intra-tumor genomic heterogeneity of multiple lesions in patients with hepatocellular carcinoma. Gastroenterology 2016;150:998-1008.

[3] Zhu H, Weibel JB, Lu S. Discriminative Multi-modal Feature Fusion for RGBD Indoor Scene Recognition. IEEE Conference on Computer Vision and Pattern Recognition 2016:2969-2976.

[4] Lee CY, Xie S, Gallagher P, et al. Deeply-Supervised Nets. Eprint arXiv 2014:562-570.

[5] Hamm CA, Wang CJ, Savic LJ, et al. Deep learning for liver tumor diagnosis part I: development of a convolutional neural network classifier for multi-phasic MRI. Eur Radiol 2019;29:3338-3347.

[6] Dou T, Zhang L, Zhou W. 3D Deep feature fusion in contrast-enhanced MR for malignancy characterization of hepatocellular carcinoma. IEEE 15th International Symposium on Biomedical Imaging 2018:29-33.

[7] Zhou W, Wang G, Xie G, Zhang L. Grading of hepatocellular carcinoma based on diffusion weighted images with multiple b-values using convolutional neural networks. Med Phys 2019;46:3951-3960.

[8] Wang Z, Lin R, Lu J, Feng J, Zhou J. Correlated and individual multi-modal deep learning for RGB-D object recognition. arXiv:1604.01655v2 [cs.CV] 2016.
