0533

Automated Image Prescription for Liver MRI using Deep Learning

1Radiology, University of Wisconsin-Madison, Madison, WI, United States, 2Medical Physics, University of Wisconsin-Madison, Madison, WI, United States, 3Electrical and Computer Engineering, University of Wisconsin-Madison, Madison, WI, United States, 4Medicine, University of Wisconsin-Madison, Madison, WI, United States, 5Emergency Medicine, University of Wisconsin-Madison, Madison, WI, United States, 6Biomedical Engineering, University of Wisconsin-Madison, Madison, WI, United States

Synopsis

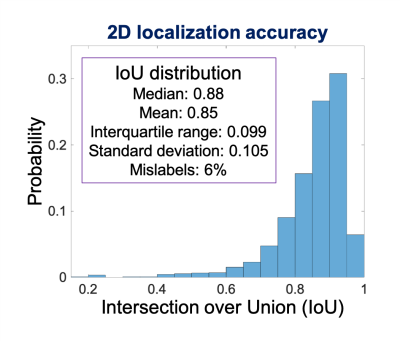

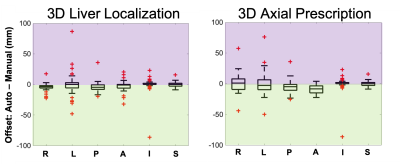

To enable fully free-breathing, single button-push liver exams, an automated AI-based method for image prescription of liver MRI was developed and evaluated. A total of seven classes of rectangular bounding boxes covering the liver, torso, and arms for each localizer orientation were manually and automatically labeled to enable 3D prescription in any orientation. The intersection over union (IoU) between manual and automated 2D liver detection had a median > 0.88 and interquartile range < 0.11 for all classes. The shift in the resultant 3D axial prescription was less than 9 mm in S/I dimension for 91% of the test dataset.

Introduction

Recent technical developments in free-breathing MRI of the liver1–3 may open the door for automated, fully free-breathing, single button-push liver exams, with substantial advantages in efficiency and patient comfort. However, single button-push exams require automated image prescription, i.e., the prescription of subsequent series from an initial localizer acquisition. Automated image prescription has the potential to improve workflow, efficiency, and prescription accuracy and consistency4,5.

Recent developments have demonstrated that artificial intelligence (AI) methods can enable automated image prescription in other applications, including spine, heart, and brain6–8. However, AI-based image prescription for liver MRI remains an unmet need. Therefore, the purpose of this work was to develop automated AI-based image prescription of liver MRI series based on a localizer acquisition.

Methods

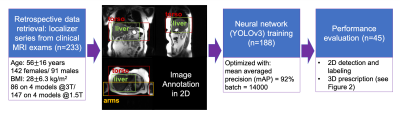

Data: In this IRB-approved study, data from 7822 patient exams were retrieved retrospectively, with a waiver of informed consent. From these data, 233 exams were used for initial training and evaluation. From each exam, we used the three-plane localizer series, which includes multiple abdominal images (~30 total) in the axial, coronal, and sagittal orientations. Training involved localizers (122 at 1.5T, 66 at 3T) from 188 patients (age 56.7±16.5 years, 113 females/75 males, BMI 27.8±6.4 kg/m²), with a wide variety of pathologies.

Image Annotation: Labeling was performed by four board-certified abdominal radiologists and one MR physicist knowledgeable in abdominal anatomy. In order to enable liver image prescription in any orientation, seven classes of labels were annotated using bounding boxes that span the liver, torso, and arms (Figure 1).

Neural network: We used a convolutional neural network (CNN) for detection and classification to estimate the coordinates of the bounding boxes described above. Specifically, we applied a recently proposed CNN architecture called YOLOv3, with well-validated applications in real-time object detection9,10. The network input is a single image from the three-plane localizer acquisition. The network detects each of the seven classes of labeled objects described above and outputs the corresponding bounding boxes. Testing included localizers (25 at 1.5T, 20 at 3T) from 45 patients (age 51.8±15.0 years, 29 females/16 males, BMI 28.7±6.2 kg/m²), with a wide variety of pathologies.

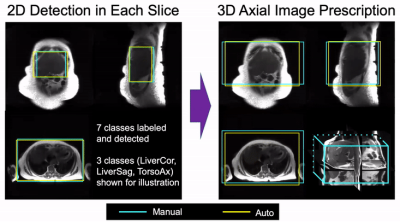

Image Prescription: Based on the detected bounding boxes for each slice in the localizer acquisition, image prescription for whole-liver acquisition in each orientation was calculated as the minimum 3D bounding box needed to cover all the labeled 2D bounding boxes in the required volume (Figure 2). For instance, for axial image prescription, the corresponding 3D bounding box covers the liver in the S/I dimension, and the torso in the A/P and R/L dimensions.
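The axial case described above can be sketched in a few lines. This is a minimal illustration, not the implementation used in this work: it assumes each detected 2D box has already been mapped from pixel indices to patient coordinates in mm, and the `Box2D` type, the simplified label names "liver" and "torso", and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple

Span = Tuple[float, float]  # (min, max) along one patient axis, in mm


@dataclass(frozen=True)
class Box2D:
    """One detected box in patient coordinates (hypothetical container).

    The axis perpendicular to the slice is left as None, because a 2D box
    only constrains the two in-plane axes of its localizer image.
    """
    label: str               # simplified, assumed label names: "liver", "torso"
    rl: Optional[Span]       # right/left extent
    ap: Optional[Span]       # anterior/posterior extent
    si: Optional[Span]       # superior/inferior extent


def extent(boxes: Iterable[Box2D], label: str, axis: str) -> Span:
    """Smallest interval along `axis` that covers every box with `label`."""
    spans = [getattr(b, axis) for b in boxes
             if b.label == label and getattr(b, axis) is not None]
    if not spans:
        raise ValueError(f"no '{label}' box constrains axis '{axis}'")
    return min(lo for lo, _ in spans), max(hi for _, hi in spans)


def axial_prescription(boxes: Iterable[Box2D]) -> Dict[str, Span]:
    """Minimum 3D box for axial imaging: liver in S/I, torso in A/P and R/L."""
    boxes = list(boxes)
    return {
        "si": extent(boxes, "liver", "si"),
        "ap": extent(boxes, "torso", "ap"),
        "rl": extent(boxes, "torso", "rl"),
    }


# Made-up detections: liver on a coronal and a sagittal slice,
# torso on two axial slices.
example = [
    Box2D("liver", rl=(-100.0, 60.0), ap=None, si=(-80.0, 70.0)),
    Box2D("liver", rl=None, ap=(-90.0, 50.0), si=(-95.0, 60.0)),
    Box2D("torso", rl=(-180.0, 175.0), ap=(-110.0, 120.0), si=None),
    Box2D("torso", rl=(-170.0, 185.0), ap=(-105.0, 115.0), si=None),
]
print(axial_prescription(example))
```

Taking the minimum and maximum per axis guarantees that every contributing 2D box lies inside the resulting volume, which is what "minimum 3D bounding box" requires.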

Evaluation: To evaluate the performance of automated 2D object detection for each of the seven classes of labels, we calculated the intersection over union (IoU), i.e., the area of the intersection between the manual and automated bounding boxes, divided by the area of their union. To evaluate the performance of the subsequent automated 3D prescription, we calculated the mismatch with the manual prescription for each of the six edges of the bounding box (right, left, anterior, posterior, superior, inferior).
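Both metrics are simple to state in code. The sketch below assumes axis-aligned boxes given as `(x_min, y_min, x_max, y_max)` and 3D prescriptions given as a mapping from edge name to position in mm. The function names, the edge keys, and the sign convention (automated minus manual) are assumptions made for illustration.

```python
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)

EDGES = ("right", "left", "anterior", "posterior", "superior", "inferior")


def area(box: Box) -> float:
    """Area of an axis-aligned box; degenerate boxes have zero area."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def iou(a: Box, b: Box) -> float:
    """Intersection area divided by union area for two axis-aligned boxes."""
    overlap_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    overlap_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = overlap_w * overlap_h
    union = area(a) + area(b) - intersection
    return intersection / union if union > 0 else 0.0


def edge_mismatch(manual: Dict[str, float],
                  automated: Dict[str, float]) -> Dict[str, float]:
    """Per-edge difference (automated minus manual, assumed convention), in mm."""
    return {edge: automated[edge] - manual[edge] for edge in EDGES}


# Two 10 x 10 boxes offset by 5 along x: intersection 50, union 150.
print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
```

An IoU of 1 means the manual and automated boxes coincide, and 0 means they do not overlap at all.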

Results

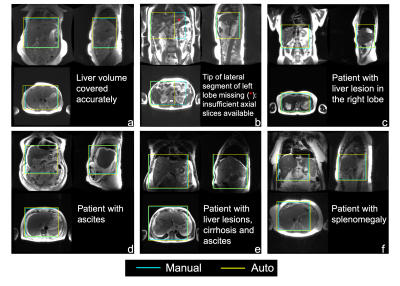

Training required ~15 hours to reach a mean average precision (mAP) of 92% at an IoU threshold of 0.5. For testing, object detection for one entire three-plane localizer (~30 images) required ~0.3 seconds.

Figure 3 shows representative examples of 3D detection of the liver (both manual and automated) in patients with various pathologies. As observed in these examples, the proposed automated method leads to excellent qualitative agreement with manual annotations. Figure 4 shows the histogram and statistical distribution of IoU between manual and automated labeling for all seven classes of bounding boxes across all localizer image orientations. The individual IoU histograms for each of the seven classes are qualitatively similar, with all medians > 0.88 and all interquartile ranges < 0.11. Figure 5 demonstrates the accuracy of the subsequent 3D image prescription, including 3D detection of the liver, and 3D prescription for axial imaging. The shift in the resultant 3D axial prescription was less than 9 mm in the S/I dimension for 91% of the test dataset.
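Summary statistics of the kind reported here (median and interquartile range of IoU, and the fraction of cases whose shift stays under a tolerance) could be computed as in the sketch below. The function names are hypothetical, the quartile method (linear interpolation, `method="inclusive"`) is an assumption because the abstract does not state one, and the sample values are made up rather than taken from the study.

```python
import statistics
from typing import Sequence, Tuple


def median_and_iqr(values: Sequence[float]) -> Tuple[float, float]:
    """Median and interquartile range (Q3 - Q1) of a sample."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return statistics.median(values), q3 - q1


def fraction_within(shifts_mm: Sequence[float], tolerance_mm: float) -> float:
    """Fraction of cases whose absolute shift is below the tolerance."""
    return sum(abs(s) < tolerance_mm for s in shifts_mm) / len(shifts_mm)


# Made-up values for illustration only.
ious = [0.80, 0.85, 0.90, 0.95, 1.00]
shifts = [1.0, -3.0, 8.5, 12.0, -10.0]

print(median_and_iqr(ious))
print(fraction_within(shifts, tolerance_mm=9.0))
```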

Discussion

This work demonstrates the feasibility and accuracy across patients and pathologies of AI-based automated liver image prescription, and may advance the development of fully automated, single-button-push liver MRI.

These promising results are aligned with recent developments in automated prescription for kidney and for spine using a YOLO network8,11, as well as for neuroimaging6.

This study has several limitations. A limited number of exams were used for training and evaluation. However, the promising performance observed from limited data is encouraging. In addition, the proposed method has not yet been implemented on the MRI system itself. The proposed automated prescription occasionally missed portions of the desired prescription volume. Nevertheless, characterization of the distribution of missed volume may enable determination of adequate “safety margins” in each dimension.
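One way such a safety margin might be derived is as an empirical quantile of the missed extent along each dimension. The sketch below is an illustration under that assumption, not a method evaluated in this work: the function name, the coverage target, and the sample values are all hypothetical.

```python
from typing import Sequence


def margin_for_coverage(missed_mm: Sequence[float], coverage: float) -> float:
    """Smallest observed margin that covers at least `coverage` of the cases.

    `missed_mm` holds, per case, how far the desired volume extended beyond
    the automated prescription along one edge (0 if nothing was missed).
    """
    if not missed_mm:
        raise ValueError("need at least one case")
    if not 0.0 < coverage <= 1.0:
        raise ValueError("coverage must be in (0, 1]")
    ordered = sorted(missed_mm)
    n = len(ordered)
    for k, value in enumerate(ordered, start=1):
        # The k smallest cases are all covered by a margin equal to `value`.
        if k / n >= coverage:
            return value
    return ordered[-1]


# Made-up missed extents (mm) along one edge for ten cases.
missed = [0.0, 0.0, 2.0, 3.0, 5.0, 7.0, 9.0, 12.0, 4.0, 1.0]
print(margin_for_coverage(missed, coverage=0.9))
```

A larger coverage target yields a larger margin, so the choice trades prescription tightness against the risk of truncating the liver.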

Conclusion

We have demonstrated the feasibility of AI-based automated prescription for liver MRI, and evaluated this approach in a wide variety of clinical datasets. This method has the potential to advance the development of fully free-breathing, single button-push MRI of the liver.

Acknowledgements

We would like to thank Daryn Belden, Wendy Delaney, and Dr. John Garrett from UW Radiology for their assistance with data retrieval. We also wish to acknowledge GE Healthcare and Bracco, who provide research support to the University of Wisconsin. Dr. Reeder is a Romnes Faculty Fellow, and has received an award provided by the University of Wisconsin-Madison Office of the Vice Chancellor for Research and Graduate Education with funding from the Wisconsin Alumni Research Foundation.

References

1. Chandarana H, Feng L, Block TK, et al. Free-Breathing Contrast-Enhanced Multiphase MRI of the Liver Using a Combination of Compressed Sensing, Parallel Imaging, and Golden-Angle Radial Sampling. Invest Radiol. 2013;48(1). doi:10.1097/RLI.0b013e318271869c

2. Choi JS, Kim M-J, Chung YE, et al. Comparison of breathhold, navigator-triggered, and free-breathing diffusion-weighted MRI for focal hepatic lesions. J Magn Reson Imaging. 2013;38(1):109-118. doi:10.1002/jmri.23949

3. Zhao R, Zhang Y, Wang X, et al. Motion-robust, high-SNR liver fat quantification using a 2D sequential acquisition with a variable flip angle approach. Magn Reson Med. 2020;84(4):2004-2017. doi:10.1002/mrm.28263

4. Itti L, Chang L, Ernst T. Automatic scan prescription for brain MRI. Magn Reson Med. 2001;45(3):486-494. doi:10.1002/1522-2594(200103)45:3<486::AID-MRM1064>3.0.CO;2-#

5. Lecouvet FE, Claus J, Schmitz P, Denolin V, Bos C, Berg BCV. Clinical evaluation of automated scan prescription of knee MR images. J Magn Reson Imaging. 2009;29(1):141-145. doi:10.1002/jmri.21633

6. GE Healthcare’s AIRx™ Tool Accelerates Magnetic Resonance Imaging. Intel. Accessed December 15, 2020. https://www.intel.com/content/www/us/en/artificial-intelligence/solutions/gehc-airx.html

7. Barral JK, Overall WR, Nystrom MM, et al. A novel platform for comprehensive CMR examination in a clinically feasible scan time. J Cardiovasc Magn Reson. 2014;16(Suppl 1):W10. doi:10.1186/1532-429X-16-S1-W10

8. De Goyeneche A, Peterson E, He JJ, Addy NO, Santos J. One-Click Spine MRI. 3rd Med Imaging Meets NeurIPS Workshop Conf Neural Inf Process Syst NeurIPS 2019 Vanc Can.

9. Redmon J, Divvala S, Girshick R, Farhadi A. You Only Look Once: Unified, Real-Time Object Detection. ArXiv150602640 Cs. Published online May 9, 2016. Accessed December 15, 2020. http://arxiv.org/abs/1506.02640

10. Pang S, Ding T, Qiao S, et al. A novel YOLOv3-arch model for identifying cholelithiasis and classifying gallstones on CT images. PloS One. 2019;14(6):e0217647. doi:10.1371/journal.pone.0217647

11. Lemay A. Kidney Recognition in CT Using YOLOv3. ArXiv191001268 Cs Eess. Published online October 2, 2019. Accessed December 15, 2020. http://arxiv.org/abs/1910.01268
