0529

Deep learning based radial de-streaking for free breathing time resolved volumetric DCE MRI

¹GE Healthcare, Atlanta, GA, United States; ²GE Healthcare, Houston, TX, United States; ³GE Healthcare, Madison, WI, United States; ⁴GE Healthcare, Hino, Japan

Synopsis

Radial imaging is becoming increasingly popular because it supports highly accelerated imaging. However, it is prone to streak artifacts, often caused by undersampling, that can severely degrade image quality. The problem is particularly acute in time resolved imaging, where the need for high spatio-temporal resolution usually forces heavy undersampling and therefore produces strong streaking. In this work, we propose a method that uses separate spatial and temporal deep learning networks to reduce streak artifacts. We demonstrate its utility on free breathing time resolved volumetric DCE MRI acquired with the stack-of-stars trajectory.

Introduction

Streak artifacts are a common source of image quality degradation in radial imaging. While usually associated with inadequate angular sampling, they can also arise from other factors such as gradient non-linearity and motion.1 Although benign at low undersampling rates, the artifacts can become severe at higher acceleration factors. This is particularly concerning for dynamic MRI (time resolved imaging, multi-contrast imaging, etc.), where the need for high spatio-temporal resolution demands highly accelerated imaging.2 The stack-of-stars (SoS) sequence uses hybrid Cartesian-radial sampling and, combined with motion compensation, can be used for free breathing volumetric body DCE MRI.3 The need to resolve 3D data over time (high acceleration) on a large FOV (gradient non-linearity) during free breathing (motion) makes SoS highly susceptible to streak artifacts.

Compressed sensing and low-rank methods have been used to mitigate streak artifacts.2 However, their high computational cost has made them difficult to translate into clinical use. Recently, deep learning (DL) methods based on convolutional neural networks (CNNs) have shown performance comparable to these earlier methods at a much lower computational cost.4 While CNNs are usually applied to 2D images, 3D and 4D variants have been developed to exploit correlations in higher dimensional imaging data, albeit at increased computational cost.5,6,7 In contrast, we propose a method that uses separate spatial and temporal deep learning to mitigate streak artifacts.
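To make the link between angular undersampling and streaking concrete, the sketch below builds stack-of-stars sample coordinates (radial spokes in kx-ky, Cartesian partitions in kz) and computes the spoke count required by the usual angular Nyquist criterion for full-diameter spokes, N_spokes ≥ (π/2)·N_read. It is a minimal illustration only: it assumes uniformly spaced spoke angles and a hypothetical readout length of 256 samples, neither of which is specified in this abstract, and the function names are hypothetical.

```python
import math

import numpy as np


def nyquist_spokes(n_read: int) -> int:
    """Spokes needed for angular Nyquist sampling with full-diameter spokes
    of n_read samples each: N_spokes >= (pi / 2) * n_read."""
    return math.ceil(math.pi / 2 * n_read)


def stack_of_stars(n_read: int, n_spokes: int, n_kz: int):
    """Return (kx, ky, kz) sample coordinates, each of shape (n_kz, n_spokes, n_read).

    kx/ky lie on radial spokes with uniformly spaced angles in [0, pi) (an assumption;
    golden angle ordering is also common), kz is a Cartesian partition index.
    Coordinates are in units of 1/FOV."""
    angles = np.arange(n_spokes) * np.pi / n_spokes      # spoke angles in [0, pi)
    r = np.arange(n_read) - n_read / 2                     # readout positions -N/2 .. N/2-1
    kx = np.cos(angles)[:, None] * r[None, :]              # (n_spokes, n_read)
    ky = np.sin(angles)[:, None] * r[None, :]
    kz = np.arange(n_kz) - n_kz / 2                        # Cartesian partitions
    shape = (n_kz, n_spokes, n_read)
    # Every kz partition reuses the same in-plane star pattern.
    return (np.broadcast_to(kx, shape),
            np.broadcast_to(ky, shape),
            np.broadcast_to(kz[:, None, None], shape))


kx, ky, kz = stack_of_stars(n_read=256, n_spokes=100, n_kz=32)
print(kx.shape)                   # (32, 100, 256)
print(nyquist_spokes(256) / 100)  # angular undersampling factor for 100 spokes
```

With a 256-sample readout the criterion calls for 403 spokes, so about 100 views per frame corresponds to roughly 4x angular undersampling in every kz partition.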

Separate spatial and temporal DL for streak reduction

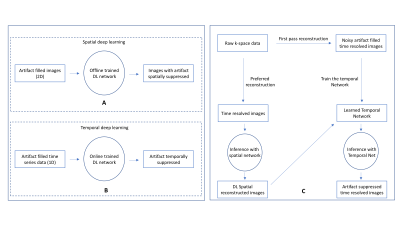

The proposed method uses separate networks to process the spatial and temporal data. The spatial DL network (Figure 1 A) is trained offline in a supervised manner on a database of about 10,000 labeled image pairs.8 It is designed to suppress noise and streaks while recovering fine detail, and it is robust to variations in undersampling rate and noise level.

The high degree of correlation along the contrast/time dimension is exploited by a temporal network.9 The temporal network is trained on the fly in an unsupervised manner and is customized to the specific scan: it learns the underlying temporal structure directly from the corrupted training samples themselves. Once trained, inference is performed independently at each spatial location (Figure 1 B), so it can be parallelized across the spatial dimensions, which keeps the inference cost very low.
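The per-scan, unsupervised temporal stage and its spatially independent inference can be sketched as follows. This is not the network of reference 9. As a hypothetical stand-in it uses a bias-free linear autoencoder, whose optimal weights under squared loss span the dominant principal subspace of the voxel time curves, so the "training" is done in closed form with an SVD of the scan's own (corrupted) data. Inference then projects each voxel's time curve independently, which the sketch exploits by splitting the voxels into chunks processed concurrently. The names `fit_temporal_basis` and `apply_temporal_parallel` and the `rank` and `n_chunks` parameters are illustrative assumptions.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def fit_temporal_basis(series: np.ndarray, rank: int) -> np.ndarray:
    """Learn a temporal basis from the scan itself, without labels.

    series: (n_phases, ...) image series, possibly streak-corrupted.
    Returns an (n_phases, rank) orthonormal basis spanning the dominant time curves,
    i.e. the subspace an optimal bias-free linear autoencoder would encode into.
    """
    curves = series.reshape(series.shape[0], -1)       # one column per voxel
    u, _, _ = np.linalg.svd(curves, full_matrices=False)
    return u[:, :rank]


def _encode_decode(basis: np.ndarray, curves: np.ndarray) -> np.ndarray:
    """Project time curves of shape (n_phases, n_voxels) onto the learned basis."""
    return basis @ (basis.conj().T @ curves)


def apply_temporal_parallel(series: np.ndarray, basis: np.ndarray,
                            n_chunks: int = 4) -> np.ndarray:
    """Run temporal inference voxel-wise; voxel chunks are processed concurrently."""
    curves = series.reshape(series.shape[0], -1)
    chunks = np.array_split(curves, n_chunks, axis=1)  # split along the voxel axis
    with ThreadPoolExecutor() as pool:
        outs = list(pool.map(lambda c: _encode_decode(basis, c), chunks))
    return np.concatenate(outs, axis=1).reshape(series.shape)
```

Because each chunk touches only its own voxels, the output does not depend on how the voxels are partitioned, which is what makes this stage embarrassingly parallel. In such a stand-in, a rank that is too small would also suppress genuine enhancement dynamics, so it would need to be chosen per application.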

The offline trained spatial network and the online trained temporal network together form the full DL processing pipeline. The conventionally reconstructed images are first passed through the spatial network, which removes most of the noise and artifacts. The processed data are then passed to the temporal network, which further suppresses the artifacts. A flowchart of the proposed method is shown in Figure 1 C.
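The ordering of the two stages can be expressed as a short driver function. In this sketch the stage implementations are hypothetical placeholders: a 3×3 box filter stands in for the offline-trained spatial network, and a rank-2 temporal projection fitted to the current scan stands in for the online-trained temporal network. It also assumes that the temporal stage is fitted to the spatially processed series, since the abstract does not state which data the temporal network is trained on.

```python
from typing import Callable

import numpy as np

Image2D = np.ndarray
Series = np.ndarray  # shape (n_phases, n_slices, ny, nx)


def box3x3(img: Image2D) -> Image2D:
    """Hypothetical stand-in for the offline-trained spatial network: 3x3 mean filter."""
    ny, nx = img.shape
    p = np.pad(img, 1, mode="edge")
    return sum(p[i:i + ny, j:j + nx] for i in range(3) for j in range(3)) / 9.0


def lowrank_temporal_fit(series: Series, rank: int = 2) -> Callable[[Series], Series]:
    """Hypothetical stand-in for the online-trained temporal network.

    'Training' fits a rank-limited temporal basis to this scan's own data;
    the returned callable projects every voxel's time curve onto that basis."""
    n = series.shape[0]
    u = np.linalg.svd(series.reshape(n, -1), full_matrices=False)[0][:, :rank]

    def temporal_net(x: Series) -> Series:
        curves = x.reshape(x.shape[0], -1)
        return (u @ (u.conj().T @ curves)).reshape(x.shape)

    return temporal_net


def two_stage_destreak(series: Series,
                       spatial_net: Callable[[Image2D], Image2D],
                       temporal_fit: Callable[[Series], Callable[[Series], Series]]) -> Series:
    """Spatial DL first (per 2D image), then temporal DL trained on the fly for this scan."""
    # Stage 1: apply the spatial model to every (phase, slice) image independently.
    spatial_out = np.empty_like(series)
    for p in range(series.shape[0]):
        for s in range(series.shape[1]):
            spatial_out[p, s] = spatial_net(series[p, s])
    # Stage 2: fit the temporal model to this scan (assumed: on the spatially
    # processed data), then run inference on the same data.
    temporal_net = temporal_fit(spatial_out)
    return temporal_net(spatial_out)
```

Swapping in real networks would only require callables with the same shapes: one that maps a 2D image to a 2D image, and one that takes the whole series and returns a fitted temporal model.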

Methods and Results

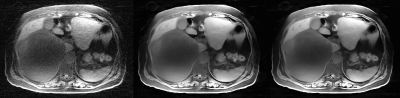

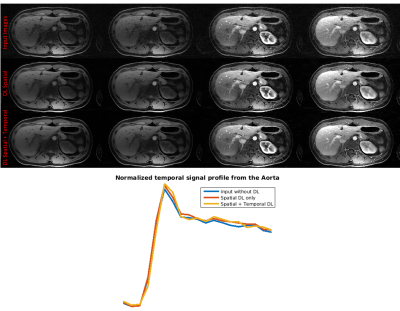

The proposed method was tested on contrast enhanced data collected from two subjects (with appropriate IRB approval and informed consent) on a 3T scanner using the DISCO-STAR sequence.3 The sequence uses the SoS trajectory (with golden angle view ordering) and intermittent fat suppression. The radial data from this acquisition were pre-processed, and 20 contrast phases were generated at about 100 radial views per phase, yielding a temporal resolution of about 8 seconds per phase. The time series images were generated using a motion compensated parallel imaging reconstruction,3 and the resulting datasets were then processed with the spatial DL approach followed by the temporal DL approach.

To better demonstrate the benefits of spatial and temporal processing, we present results with spatial DL alone and with the combination of spatial and temporal DL. The input images (no filtering) serve as a baseline for the artifact level. Figure 2 shows the results from a single slice at four different contrast phases. Figure 3 shows the results from several slices at a specific contrast phase. The images are windowed, and arrows highlight the artifacts to aid visualization. Figure 4 shows the same results as Figure 2 in the form of a movie loop. Note that the flickering streak artifact is almost completely eliminated when temporal filtering is added. Figure 5 shows the results from a different slice along with a normalized temporal signal profile from the aorta. Although no gold standard reference is available, the close agreement with the input profile (without DL) suggests that temporal fidelity is well preserved after DL processing.
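Golden angle view ordering is what allows continuously acquired radial views to be regrouped retrospectively into 20 phases of about 100 views each. The sketch below assigns consecutive views to phases using the golden angle for 180° spokes, 180°·(√5−1)/2 ≈ 111.25°, and reports the largest angular gap in each phase relative to uniform spacing. It assumes the views are binned in acquisition order with no overlap between phases, and it ignores the kz partition loop and the fat suppression interruptions; the function names are hypothetical.

```python
import numpy as np

# Golden angle for spokes covering 180 degrees (~111.246 deg).
GOLDEN_ANGLE_DEG = 180.0 * (np.sqrt(5.0) - 1.0) / 2.0


def bin_golden_angle_views(n_phases: int, views_per_phase: int) -> np.ndarray:
    """Spoke angles in degrees, in [0, 180), grouped as (n_phases, views_per_phase).

    Consecutive acquired views are assigned to the same contrast phase."""
    idx = np.arange(n_phases * views_per_phase)
    angles = np.mod(idx * GOLDEN_ANGLE_DEG, 180.0)
    return angles.reshape(n_phases, views_per_phase)


def max_gap_ratio(angles_deg: np.ndarray) -> float:
    """Largest angular gap between sorted spokes, relative to uniform spacing."""
    a = np.sort(angles_deg)
    gaps = np.diff(np.concatenate([a, [a[0] + 180.0]]))  # include the wrap-around gap
    return float(gaps.max() / (180.0 / a.size))


phases = bin_golden_angle_views(n_phases=20, views_per_phase=100)
ratios = [max_gap_ratio(p) for p in phases]
```

For 100 consecutive golden angle views, the largest gap is about 1.32 times the uniform spacing in every phase, because each phase is simply a rotated copy of the same angle set. Each phase therefore covers k-space nearly uniformly, yet with far fewer spokes than angular Nyquist sampling would require for realistic readout lengths, which is why streaks remain visible in the input images.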

Conclusion

The SoS application discussed in this work routinely generates on the order of a few thousand images per scan. Scaling 2D deep learning methods to such large datasets can by itself be challenging. 3D/4D deep learning methods that leverage correlations in this high dimensional data can deliver better performance, but they are likely to face severe computational constraints even on high-end computing platforms. In this work, we present a two-stage approach that decouples the contrast/time dimension from the spatial dimensions. Because the temporal processing is embarrassingly parallel, the overall computational cost is effectively just that of the spatial processing. We demonstrate improved streak reduction in SoS with spatial + temporal DL compared with spatial DL alone. Other high dimensional applications are also likely to benefit from a similar processing strategy.

Acknowledgements

The authors gratefully acknowledge the in-vivo data provided by the University of Yamanashi.

References

1. Mandava et al., Radial streak artifact reduction using phased array beamforming. Magn Reson Med, 2019; 81: 3915-3923

2. Feng et al., RACER-GRASP: Respiratory-weighted, aortic contrast enhancement-guided and coil-unstreaking golden angle radial sparse MRI. Magn Reson Med, 2018; 80: 77-89

3. Zhang et al., Fast motion robust abdominal stack of stars imaging using coil compression and soft gating. In Proceedings of the 25th Annual Meeting of ISMRM, Honolulu, HI, 2017. Abstract No. 1284

4. Han et al., Deep learning with domain adaptation for accelerated projection-reconstruction MR. Magn Reson Med, 2018; 80: 1189-1205

5. Hauptmann et al., Real time cardiovascular MR with spatiotemporal artifact suppression using deep learning - proof of concept in congenital heart disease. Magn Reson Med, 2019; 81: 1143-1156

6. Qin et al., Convolutional Recurrent Neural Networks for Dynamic MR Image Reconstruction. IEEE Trans Med Imaging, 2018; 38: 280-290

7. Küstner et al., CINENet: Deep learning-based 3D Cardiac CINE Reconstruction with multi-coil complex 4D Spatio-Temporal Convolutions. In Proceedings of the 28th Annual Meeting of ISMRM, 2020. Abstract No. 1001

8. Lebel et al., Performance characterization of a novel deep-learning based MR image reconstruction pipeline. 2020 Aug 14 [accessed 2020 Dec 15]. https://arxiv.org/abs/2008.06559v1

9. Mandava et al., Unsupervised radial streak artifact reduction in time resolved MRI. In Proceedings of the 28th Annual Meeting of ISMRM, 2020. Abstract No. 0812
