0397

How do we know we measure tissue parameters, not the prior?1Center for Biomedical Imaging and Center for Advanced Imaging Innovation and Research (CAI$^2$R), Department of Radiology, New York University School of Medicine, New York, NY, United States

Synopsis

In the machine-learning (ML) era, we are transitioning from max-likelihood parameter estimation to learning the mapping from measurements to model parameters. While such maps look smooth, there is danger of them becoming too smooth: At low SNR, ML estimates become the mean of the training set. Here we derive fit quality (MSE) as function of SNR, and show that MSE for various ML methods (regression, neural-nets, random forest) approaches a universal curve interpolating between Cramér-Rao bound at high SNR, and variance of the prior at low SNR. Theory is validated numerically and on white matter Standard Model in vivo.

Introduction

Maximizing spatial resolution and minimizing scan time forces us to deal with noisy and limited data. Thus, efficiently extracting microstructural tissue features of interest from the measurements is crucial. From relaxometry to microstructure MRI, the standard parameter estimation paradigm consists of minimizing an objective function with respect to the parameters of interest given noisy measurements. For its asymptotic benefits, maximum likelihood is a widespread estimator. However, for smooth nonlinear models like the ones we typically see in diffusion MRI1, parameter estimation is challenging. As the saying goes, `the diffusion signal is remarkably unremarkable'2, thus, a typical set of noisy measurements easily becomes overparametrized by the models we use to describe them. This may lead to imprecise or degenerate parameter estimates3. To solve this, constraints4, extra assumptions5 have been proposed but may lead to bias.Recently, data-driven approaches from supervised machine learning (ML) have gained popularity due to their ability to quickly solve nonlinear regressions. ML approach is to parametrize the mapping from measurements + noise (realistic model output) to model parameters. Neural networks6, random forest7, or polynomial regression8 have been shown to provide useful results in different MRI applications. However, the influence of the training data on the estimates has been an overlooked ML aspect.

Here, by locally linearizing the model, we analytically derive the optimal mean-squared error (MSE) quality metric of ML parameter estimation as function of SNR. We show that this MSE interpolates between classic max-likelihood quality metric (Cramér-Rao-bound) and the variance of the training set. Remarkably, any `black box' ML regressor approaches this analytical curve. This enables regressor-independent evaluation of data quality and sensitivity to model parameters.

Theory

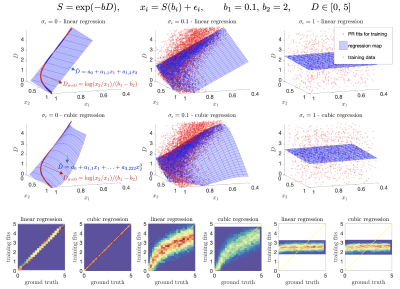

Noise as a regularizer. Supervised ML methods rely on the selection of a prior distribution to generate training data for `learning' the optimal mapping from input features/measurements to parameters. To capture the best mapping for noisy data, noise should be added to the training. However, this has a regularization effect. Figure 1 shows that at different noise levels, the manifold defined by the all the measurements in the training becomes smoothed. Thus, the optimal regression loses complexity. As noise blurs high-order features, the best approximation becomes heavily biased towards the prior distribution that defined such manifold. For extremely low SNR, the optimal regression becomes the expected value of the prior.Optimal MSE(SNR). Consider a polynomial regression on $$$N$$$ measurements to estimate $$$\theta$$$:

$$\begin{align*}x_{i}&=f_{i}(\theta)+\epsilon_{i},\quad\text{with}\,\quad\,i=1\ldots\,N,\qquad\epsilon\sim \mathcal{N}(0,\sigma),\,\,\epsilon_i\text{ iid}\\\hat{\theta}&=a_0+\sum\limits_{i=1}^N\,a_{1,i}\,x_{i},+\,\text{higher}\,\text{orders},\end{align*}$$

where $$$\hat{\theta}$$$ is our estimator and $$$f(\theta)$$$ our model. In the training, the coefficients of the regression are selected such that they minimize the MSE over noise realizations and training set. For the linear regression, MSE over the training-set

$$\begin{equation*}\mathrm{MSE}_{tr}=\int_{\Theta}d\theta\,\,p\left(\theta\right)\left\langle\left(\hat{\theta}-\theta\right)^{2}\right\rangle= a_{1, i}\,a_{1, j}\left( \overline{ f_i(\theta)\,f_j(\theta)}+\sigma^2\delta_{ij} \right)+a_0^2+\overline{\theta^{2}}+2 \,a_{0}\,a_{1, i}\,\overline{ f_i(\theta)}-2a_{1, i}\,\overline{\theta\,f_i(\theta)}-2 a_{0}\,\overline{\theta},\end{equation*}$$

where angular brackets denote averaging over noise ($$$\langle\epsilon_i\rangle=0,\,\langle\epsilon_i\epsilon_j\rangle=\sigma^2\delta_{ij}$$$), and bar averages over prior $$$p(\theta)$$$. Solving for the regression coefficients minimizing $$$\mathrm{MSE}_{tr}$$$ gives:

$$\begin{equation*}\mathrm{MSE}_{tr}=\operatorname{var}(\theta)-B_{ij}C_{ij},\quad\text{where}\,\,\boldsymbol{B}=(\boldsymbol{\Sigma}+\sigma^2\boldsymbol{I})^{-1},\,\Sigma_{ij}=\overline{f_i(\theta) f_j(\theta)}-\overline{f_i(\theta)}\,\overline{f_j(\theta)},\,C_{ij}=\left(\overline{\theta\,f_i(\theta)}-\overline{\theta}\,\overline{f_i(\theta)}\right)\left(\overline{\theta\,f_j(\theta)}-\overline{\theta}\,\overline{f_j(\theta)}\right).\end{equation*}$$

If we linearly expand $$$f(\theta)\approx\,f(\theta^*)+f'(\theta)\,(\theta-\theta^*)$$$, we can rewrite the above equation as:

$$\begin{equation*}\mathrm{MSE}_{tr}=\frac{\operatorname{var}(\theta)}{1+x_p},\text{where}\,\,x_p=\frac{\operatorname{var}(\theta)}{\operatorname{CRB}(\theta)},\end{equation*}$$

where in this example $$$\operatorname{CRB}(\theta)=I(\theta)^{-1}=(\tfrac{1}{\sigma^2}\frac{d f_i(\theta)}{d \theta}\,\frac{d\,f_i(\theta)}{d \theta})^{-1}$$$. An intuitive meaning for $$$\sqrt{x_p}$$$ is intrinsic SNR.

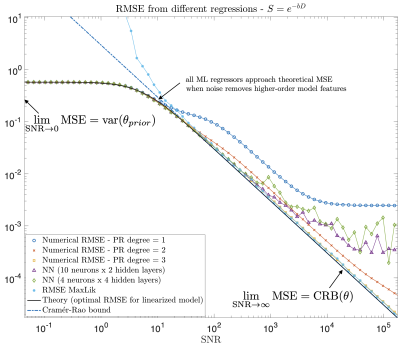

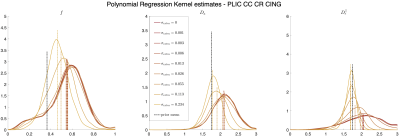

Two regimes. From above, interesting insights can be obtained if we take two limits. For $$$\lim_{x_p\rightarrow\infty}$$$ (high SNR), $$$\mathrm{MSE}_{tr}$$$ tends to the Cramér-Rao bound of $$$\theta$$$, a bound on the lowest achievable variance by an unbiased estimator9. Then, taking $$$\lim_{x_p\rightarrow\,0}$$$ (low SNR), we see that $$$\mathrm{MSE}_{tr}$$$ does not increase proportionally to $$$\sigma$$$ but instead reaches a plateau at a value of the variance in the training data. Any regression will at some point behave like the linear, see Fig. 2 for an exponential model.

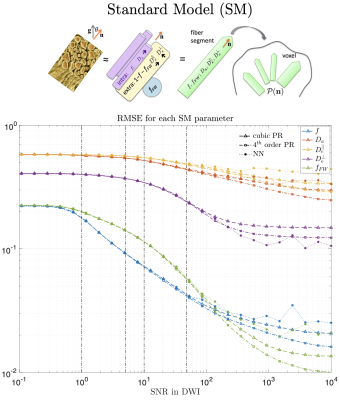

We are usually in-between these two extremes, where data-driven approaches provide lower MSE even if the prior does not match the test distribution. In the case of the Standard Model (SM) of diffusion in white matter10, a cubic polynomial is usually enough to fit all parameters within a realistic SNR (see Fig. 3 and Experiments for protocol). Since this model is highly nonlinear, a big increase in SNR means more sophisticated regressions will be optimal.

Experiments

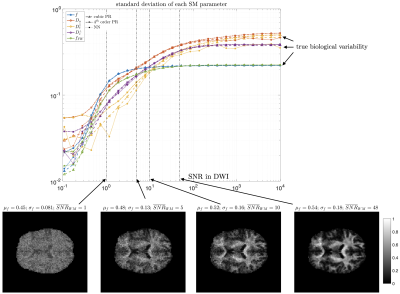

MRI data. A 23yo female volunteer was imaged in a whole-body 3T system (Siemens PRISMA) using a 20-channel head coil. Maxwell-compensated free-gradient diffusion waveforms were acquired11 using a spin echo EPI readout. The protocol had $$$\{6,20,15,40,35,40\}$$$ non-collinear gradient directions at $$$(b,b_\Delta)=[(0,1),(1000,1),(1500,0),(2000,1),(5000,0.8),(5500,1)]\,s/mm^2$$$, totalling 156 DWI. These images were acquired with $$$2\times2\times2\,mm^3$$$ voxels over 72 slices, $$$T_R=5.3s,\,T_E=92ms,\,BW=1600Hz/Px,\,R=2,\,PF=6/8,\,t_\text{acq}=15\,\text{minutes}$$$.Results. Figure 5 shows histograms of SM estimates for a white matter region of interest (ROI). As we increase the noise in the data, estimates reduce their variance and get shifted towards the mean of the prior. Figure 4 shows that parametric maps that look realistic can be too homogeneous when the SNR is low.

Discussion and Conclusion

For typical noise levels in quantitative MRI the prior distribution may push parameter estimates towards its mean. This is modulated by the SNR, as we showed experimentally and theoretically. At low SNR values, any data-driven regression will behave identically, with their MSE approaching a universal curve independently of the complexity of the regression. Understanding where on this curve is the ML estimation at a given SNR is essential for knowing if we measure tissue parameters, or merely the prior.Acknowledgements

This work was performed under the rubric of the Center for Advanced Imaging Innovation and Research (CAI2R, https://www.cai2r.net), a NIBIB Biomedical Technology Resource Center (NIH P41-EB017183). This work has been supported by NIH under NINDS award R01 NS088040 and NIBIB awards R01 EB027075.References

[1] D. S. Novikov, E. Fieremans, S. N. Jespersen, and V. G. Kiselev, “Quantifying brain microstructure with diffusionMRI: Theory and parameter estimation,”NMRinBiomedicine, p. e3998, 2018.

[2] D. A. Yablonskiy and A. L. Sukstanskii, “Theoretical models of the diffusion weighted mr signal,”NMRinBiomedicine, vol. 23, no. 7, pp. 661–681, 2010.

[3] I. O. Jelescu, J. Veraart, V. Adisetiyo, S. S. Milla, D. S. Novikov, and E. Fieremans, “One diffusion acquisitionand different white matter models: How does microstructure change in human early development based on WMTIand NODDI,”NeuroImage, vol. 107, pp. 242–256, 2015.

[4] H. Zhang, T. Schneider, C. A. Wheeler-Kingshott, and D. C. Alexander, “NODDI: Practical in vivo neuriteorientation dispersion and density imaging of the human brain,”NeuroImage, vol. 61, pp. 1000–1016, 2012.

[5] Y. Assaf, T. Blumenfeld-Katzir, Y. Yovel, and P. J. Basser, “New modeling and experimental framework tocharacterize hindered and restricted water diffusion in brain white matter,”MagneticResonanceinMedicine,vol. 52, pp. 965–978, 2004.

[6] V. Golkov, A. Dosovitskiy, J. I. Sperl, M. I. Menzel, M. Czisch, P. S ̈amann, T. Brox, and D. Cremers, “q-spacedeep learning: Twelve-fold shorter and model-free diffusion mri scans,”IEEETransactionsonMedicalImaging,vol. 35, no. 5, pp. 1344–1351, 2016.

[7] M. Palombo, A. Ianus, M. Guerreri, D. Nunes, D. C. Alexander, N. Shemesh, and H. Zhang, “SANDI: Acompartment-based model for non-invasive apparent soma and neurite imaging by diffusion mri,”NeuroImage,vol. 215, p. 116835, 2020.

[8] M. Reisert, E. Kellner, B. Dhital, J. Hennig, and V. G. Kiselev, “Disentangling micro from mesostructure bydiffusion MRI: A Bayesian approach,”NeuroImage, vol. 147, pp. 964 – 975, 2017.[9] S. M. Kay,Fundamentalsofstatisticalsignalprocessing:estimationtheory. Englewood Cliffs, N.J : PTR Prentice-Hall, 1993.

[10] D. S. Novikov, J. Veraart, I. O. Jelescu, and E. Fieremans, “Rotationally-invariant mapping of scalar and orien-tational metrics of neuronal microstructure with diffusion MRI,”NeuroImage, vol. 174, pp. 518 – 538, 2018.

[11] F. Szczepankiewicz, C.-F. Westin, and M. Nilsson, “Maxwell-compensated design of asymmetric gradient wave-forms for tensor-valued diffusion encoding,”MagneticResonanceinMedicine, vol. 0, pp. 1–14, 2019.

Figures