0396

Image Registration of Perfusion MRI Using Deep Learning Networks

1State University of New York at Binghamton, Binghamton, NY, United States, 2Beth Israel Deaconess Medical Center & Harvard Medical School, Boston, MA, United States

Synopsis

Convolutional neural networks (CNNs) have demonstrated accuracy and speed in image registration of structural MRI. We designed a CNN-based affine registration network (ARN) to explore the feasibility of this approach for image registration of perfusion fMRI. The ARN learned the six affine parameters from both simulated and real perfusion fMRI data, and the transformed images were generated by applying the transformation matrix derived from these parameters. The ARN markedly outperformed the iteration-based SPM algorithm on both simulated and real data. The current ARN is being extended to deformable fMRI image registration.

Introduction

Medical image registration plays an important role in medical image analysis [1]. Image registration methods establish pixel correspondence between a fixed image and a moving image by solving an optimization problem that maximizes a similarity measure between the two images. Conventional registration algorithms are often computationally expensive because this optimization is typically solved iteratively [2]. Deep learning techniques, especially convolutional neural networks (CNNs), have demonstrated comparable accuracy in registering structural magnetic resonance images (sMRI) [3, 4]. Their accuracy for functional MRI (fMRI) cannot be directly inferred from these results because fMRI is typically acquired at low spatial resolution. Here, we assess the performance of CNN-based 3D affine image registration of perfusion fMRI using simulated and real perfusion fMRI data.

Methods

Real Imaging Data: Dynamic pseudo-continuous arterial spin labeling (PCASL) perfusion MRI images from 20 subjects were obtained from our previous study [5]. A time series of 39 3D PCASL perfusion images was acquired for each subject. Each 3D image had a 128x128x40 matrix with a resolution of 1.88x1.88x4 mm³. The first 3D image from each of the 20 subjects was used as the target image. For each target image, 36 images were randomly drawn from the remaining time points.

Simulated Data: Only the first 3D image from each of the 20 subjects was used in the simulation, serving as the target image. For each target image, 36 affine transformations were applied to generate moving images. Each transformation had six parameters drawn independently from uniform distributions: x, y, and z shifts in the range [-2, 2] voxels and x, y, and z rotations in the range [-5°, 5°].
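The simulation step can be sketched as follows. This is a minimal NumPy illustration, not the authors' code: the function names, the rotation bound passed in the usage lines, the use of degrees, and the rotation composition order (Rz·Ry·Rx) are all assumptions made for the example.

```python
import numpy as np

def sample_params(rng, max_shift, max_rot_deg):
    """Draw (tx, ty, tz, rx, ry, rz) uniformly: shifts in voxels, rotations in degrees."""
    shifts = rng.uniform(-max_shift, max_shift, size=3)
    rots = rng.uniform(-max_rot_deg, max_rot_deg, size=3)
    return np.concatenate([shifts, rots])

def params_to_matrix(params):
    """Build a 4x4 homogeneous matrix from three shifts and three rotations."""
    tx, ty, tz, rx, ry, rz = params
    ax, ay, az = np.deg2rad([rx, ry, rz])
    Rx = np.array([[1, 0, 0],
                   [0, np.cos(ax), -np.sin(ax)],
                   [0, np.sin(ax), np.cos(ax)]])
    Ry = np.array([[np.cos(ay), 0, np.sin(ay)],
                   [0, 1, 0],
                   [-np.sin(ay), 0, np.cos(ay)]])
    Rz = np.array([[np.cos(az), -np.sin(az), 0],
                   [np.sin(az), np.cos(az), 0],
                   [0, 0, 1]])
    M = np.eye(4)
    M[:3, :3] = Rz @ Ry @ Rx      # assumed composition order
    M[:3, 3] = [tx, ty, tz]       # translation in voxels
    return M

# 36 random transformations for one target image (bounds are illustrative).
rng = np.random.default_rng(0)
params = np.stack([sample_params(rng, max_shift=2.0, max_rot_deg=5.0)
                   for _ in range(36)])
matrices = np.stack([params_to_matrix(p) for p in params])
```

Because the sampled parameters are stored alongside the matrices, they can later serve as ground truth when comparing estimated parameters against the simulation.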

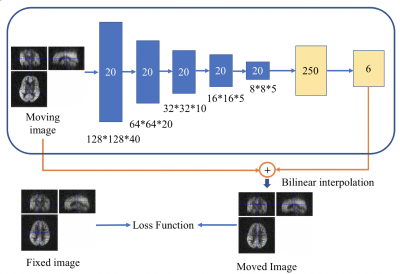

Network Architecture: We designed an affine registration network (ARN) consisting of an encoder CNN followed by a fully connected network (FCN), as shown in Fig. 1. The encoder's convolutional layers gradually reduced the image resolution to learn low-resolution features useful for image registration, and two fully connected layers mapped these features to the six affine registration parameters. The input layer took the moving image (128x128x40 matrix). The encoder comprised 5 convolution + ReLU + batch normalization layers, each with 20 channels, a kernel size of 4, and a stride of 2. The encoder was followed by one fully connected layer of size 250 and an output layer of size 6. The moved image was generated by applying the affine transformation defined by the 6 learned parameters to the moving image and resampling it with bilinear interpolation. The ARN was trained on 360 images, and 360 independent test images (not seen by the ARN during training) were used to evaluate its performance on unseen data.
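To make the layer sizes concrete, the short sketch below traces the feature-map shape through five stride-2 convolutions with kernel size 4 and counts the weights of the two fully connected layers. The padding of 1 voxel per side is an assumption (the abstract does not state it), and the helper names are hypothetical; a different padding would change the flattened feature size.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    """Output length along one axis for a strided convolution (floor formula)."""
    return (size + 2 * padding - kernel) // stride + 1

def encoder_shapes(shape=(128, 128, 40), n_layers=5):
    """Spatial shape after each encoder layer, starting with the input shape."""
    shapes = [shape]
    for _ in range(n_layers):
        shape = tuple(conv_out(s) for s in shape)
        shapes.append(shape)
    return shapes

shapes = encoder_shapes()
channels = 20

# Flattened feature count entering the first fully connected layer.
flat = channels * shapes[-1][0] * shapes[-1][1] * shapes[-1][2]

# Weights plus biases for the 250-unit layer and the 6-unit output layer.
fc_params = (flat * 250 + 250) + (250 * 6 + 6)

for i, s in enumerate(shapes):
    print(f"after layer {i}: {s}")
print("flattened features:", flat)
print("fully connected parameters:", fc_params)
```

Under the assumed padding, each layer halves the in-plane size, so the 128x128x40 input shrinks to a 4x4x1 map with 20 channels before the fully connected layers.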

Loss Function: The ARN was trained in an unsupervised manner by minimizing the loss between the moved images and the target images. The loss function combined three terms: mean squared error (MSE), pixel-wise L1 loss, and pixel-wise structural similarity index (SSIM) loss [6]. The relative weights of the three terms were determined empirically.
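A weighted combination of these three terms might look like the NumPy sketch below. It is an illustration under stated assumptions: the weights `w_mse`, `w_l1`, and `w_ssim` are placeholders (the empirically chosen values are not given), the SSIM term is written as 1 − SSIM, and SSIM is computed from whole-image statistics rather than the windowed, pixel-wise form, which is a simplification for brevity.

```python
import numpy as np

def mse_loss(moved, target):
    """Mean squared intensity difference."""
    return float(np.mean((moved - target) ** 2))

def l1_loss(moved, target):
    """Mean absolute intensity difference."""
    return float(np.mean(np.abs(moved - target)))

def ssim_global(moved, target, data_range=1.0):
    """SSIM from whole-image means, variances, and covariance (simplified)."""
    c1 = (0.01 * data_range) ** 2   # standard SSIM stabilizing constants
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = moved.mean(), target.mean()
    var_x, var_y = moved.var(), target.var()
    cov = np.mean((moved - mu_x) * (target - mu_y))
    num = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)

def combined_loss(moved, target, w_mse=1.0, w_l1=1.0, w_ssim=1.0):
    """Weighted sum of MSE, L1, and (1 - SSIM); weights are placeholders."""
    return (w_mse * mse_loss(moved, target)
            + w_l1 * l1_loss(moved, target)
            + w_ssim * (1.0 - ssim_global(moved, target)))

# Example: a volume compared with itself and with a shifted copy.
rng = np.random.default_rng(0)
target = rng.random((16, 16, 8))
shifted = np.roll(target, 3, axis=0)
loss_same = combined_loss(target, target)
loss_shifted = combined_loss(shifted, target)
```

Perfectly aligned images give a loss near zero, while misalignment raises all three terms, which is what lets the network be trained without ground-truth parameters.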

Comparison with SPM Affine Registration: Statistical Parametric Mapping (SPM12) is one of the most widely used neuroimage registration tools. The performance of our ARN was compared with that of SPM affine registration (i.e., realignment).

Results & Discussion

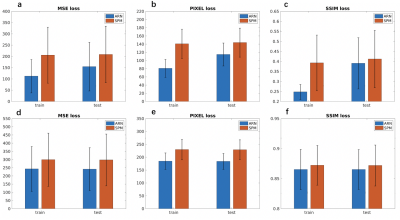

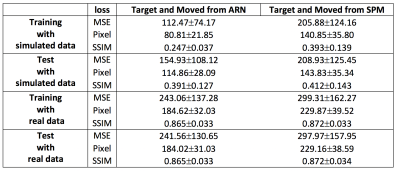

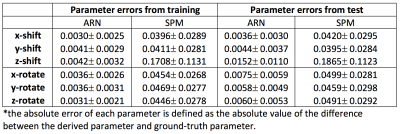

The MSE loss and L1 loss between the moved images and the target images were significantly smaller for our ARN than for SPM affine registration (p < 10⁻⁴), with mean reductions of 26% (MSE) and 20% (L1) on the simulated test data and 19% (MSE) and 20% (L1) on the real test data (Table 1 and Fig. 2). These results demonstrate that the affine registration derived from our ARN markedly outperforms the iteration-based SPM algorithm (the current state-of-the-art registration algorithm). Simulated data offer a unique opportunity to compare the 6 affine parameters estimated by the ARN and by SPM against the ground-truth parameters. The errors of the 6 parameters estimated by the ARN were significantly smaller than those from SPM (p < 10⁻⁴), with a mean error 9.5 times smaller in the test data (Table 3). This implies that our ARN can provide more accurate affine image registration. We also verified that the ARN can successfully learn larger shifts and rotations (shifts of 5 voxels and 20-degree rotations in the x, y, and z directions), which indicates its potential for better registration performance in clinical patients with larger motion. Further work is planned to extend the ARN framework to deformable fMRI image registration.

Conclusion

The ARN outperforms the current state-of-the-art algorithm in affine image registration. We expect its markedly improved performance to hold even in patient populations with large motion.

Acknowledgements

No acknowledgement found.

References

1. Viergever, M., et al., A survey of medical image registration – under review. Medical Image Analysis, 2016. 33: p. 140-144.

2. Sotiras, A., C. Davatzikos, and N. Paragios, Deformable Medical Image Registration: A Survey. IEEE Transactions on Medical Imaging, 2013. 32(7): p. 1153-1190.

3. Balakrishnan, G., et al., An Unsupervised Learning Model for Deformable Medical Image Registration. IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018.

4. Li, H. and Y. Fan, Non-Rigid Image Registration Using Self-supervised Fully Convolutional Networks Without Training Data. IEEE International Symposium on Biomedical Imaging, 2018.

5. Dai, W., et al., Continuous flow-driven inversion for arterial spin labeling using pulsed radio frequency and gradient fields. Magn Reson Med, 2008. 60(6): p. 1488-97.

6. Zhu, Y., Z. Lan, S. Newsam, and A.G. Hauptmann, Hidden two-stream convolutional networks for action recognition. ACCV, 2018.

Figures