0395

Fat-Saturated MR Image Synthesis with Acquisition Parameter-Conditioned Image-to-Image Generative Adversarial Network

1 Pattern Recognition Lab, Department of Computer Science, Friedrich-Alexander University Erlangen-Nürnberg, Erlangen, Germany
2 Department of Industrial Engineering and Health, Technical University of Applied Sciences Amberg-Weiden, Weiden, Germany
3 Siemens Healthcare, Erlangen, Germany

Synopsis

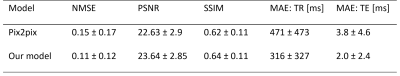

We trained an image-to-image GAN that incorporates the sequence parameterization, expressed by the acquisition parameters repetition time (TR) and echo time (TE), into the image synthesis. The model was trained to generate synthetic fat-saturated MR knee images from non-fat-saturated MR knee images conditioned on these acquisition parameters, which enables the synthesis of MR images with varying image contrast. Our approach yields a normalized mean squared error (NMSE) of 0.11 and a peak signal-to-noise ratio (PSNR) of 23.64 dB, surpassing a pix2pix [1] benchmark. It can potentially be used to synthesize missing or additional MR contrasts and to generate customized data for AI training.

Introduction

With the rise of generative adversarial networks (GANs) [2], image-to-image synthesis with neural networks has achieved excellent image quality. This development has also influenced MRI, where new methods for MR image synthesis include cross-modality synthesis (e.g., MRI → CT [3]) and intra-modality synthesis (e.g., 3T → 7T [4] or synthesis of missing contrasts [5]). However, recently published approaches only work on MR images with a fixed set of sequence parameterizations. This is insufficient for clinical practice, because sequence parameterizations vary significantly across sites and scanners, and even among scanners at the same site [6]. Consequently, an MR image-to-image translation approach must be able to incorporate varying acquisition parameters. The repetition time (TR) and echo time (TE) are important acquisition parameters that define the image contrast. We therefore developed an image-to-image GAN that generates synthetic MR images conditioned on these acquisition parameters, and trained it to synthesize fat-saturated MR images from non-fat-saturated MR images.

Material and Methods
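
The study-level split described in this section keeps all images of a study in the same subset, so that no patient's anatomy appears in both training and evaluation data. The following is a minimal Python sketch, assuming each image is represented only by its study UID; the function name `split_by_study` and the fixed random seed are hypothetical and not taken from the authors' code.

```python
import random
from collections import defaultdict


def split_by_study(image_study_uids, n_val=100, n_test=100, seed=0):
    """Split image indices into train/val/test sets with disjoint study UIDs.

    image_study_uids: list where entry i is the study UID of image i.
    Returns three lists of image indices (train, val, test).
    """
    # Group image indices by their study UID.
    images_per_study = defaultdict(list)
    for idx, uid in enumerate(image_study_uids):
        images_per_study[uid].append(idx)

    # Sort first so the shuffle is reproducible for a given seed,
    # then sample whole studies (not single images) for val and test.
    study_uids = sorted(images_per_study)
    rng = random.Random(seed)
    rng.shuffle(study_uids)
    val_uids = study_uids[:n_val]
    test_uids = study_uids[n_val:n_val + n_test]
    train_uids = study_uids[n_val + n_test:]

    def collect(uids):
        # Expand the selected studies back into image indices.
        return [idx for uid in uids for idx in images_per_study[uid]]

    return collect(train_uids), collect(val_uids), collect(test_uids)
```

With the numbers reported here, `n_val=n_test=100` would leave the remaining 4,615 of the 4,815 studies for training.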

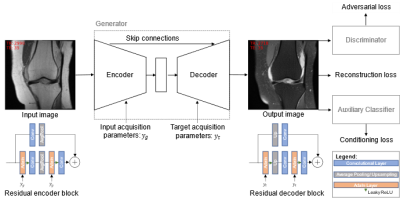
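
The AdaIN conditioning described in this section can be illustrated with a minimal NumPy sketch. AdaIN [10] normalizes each feature channel of an instance to zero mean and unit variance and then applies a channel-wise scale and shift. Here, as an assumption (the abstract does not say how the acquisition parameters are mapped), the scale and shift are predicted from a normalized (TR, TE) vector by a single affine layer; the function name, the TR/TE scaling, and the weight shapes are hypothetical.

```python
import numpy as np


def adain(features, cond, weight, bias, eps=1e-5):
    """Adaptive instance normalization conditioned on acquisition parameters.

    features: (C, H, W) feature maps of one image.
    cond:     (P,) conditioning vector, e.g. normalized [TR, TE].
    weight:   (2*C, P) and bias: (2*C,) of a hypothetical affine layer that
              maps the parameters to a per-channel scale and shift.
    """
    c = features.shape[0]
    # Instance normalization: zero mean, unit variance per channel.
    mean = features.mean(axis=(1, 2), keepdims=True)
    std = features.std(axis=(1, 2), keepdims=True)
    normalized = (features - mean) / (std + eps)

    # Predict per-channel scale (gamma) and shift (beta) from the parameters.
    style = weight @ cond + bias
    gamma = style[:c].reshape(c, 1, 1)
    beta = style[c:].reshape(c, 1, 1)
    return gamma * normalized + beta


# Example: a hypothetical 8-channel layer conditioned on (TR, TE).
rng = np.random.default_rng(0)
features = rng.normal(size=(8, 16, 16))
tr_ms, te_ms = 3000.0, 30.0                       # illustrative values only
cond = np.array([tr_ms / 5000.0, te_ms / 100.0])  # hypothetical scaling to ~[0, 1]
weight = rng.normal(scale=0.1, size=(16, 2))
bias = np.concatenate([np.ones(8), np.zeros(8)])  # near-identity scale, zero shift
out = adain(features, cond, weight, bias)
print(out.shape)  # (8, 16, 16)
```

In the setup described in this section, encoder layers would receive the input image's (TR, TE) as `cond` and decoder layers the target (TR, TE); in a trained model, the affine weights would be learned jointly with the generator.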

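The training objective described in this section can be written out as below. This is a sketch under assumptions: $D$ outputs a logit, $\sigma$ is the logistic sigmoid, $x$ is the non-fat-saturated input with acquisition parameters $p_x$, $y$ is the fat-saturated target with parameters $p_y$, and $\lambda_{\mathrm{rec}}$ and $\gamma$ are hypothetical weights that the abstract does not report. The auxiliary-classifier term is omitted because the abstract does not state how it enters the objective.

```latex
\begin{aligned}
\hat{y} &= G(x, p_x, p_y) \\
\mathcal{L}_G &= \mathbb{E}\left[-\log \sigma\big(D(\hat{y})\big)\right]
  + \lambda_{\mathrm{rec}}\, \mathbb{E}\left[\lVert y - \hat{y} \rVert_1\right] \\
\mathcal{L}_D &= \mathbb{E}\left[-\log \sigma\big(D(y)\big)\right]
  + \mathbb{E}\left[-\log\big(1 - \sigma(D(\hat{y}))\big)\right]
  + \frac{\gamma}{2}\, \mathbb{E}\left[\lVert \nabla_{y} D(y) \rVert_2^2\right]
\end{aligned}
```

The generator term $-\log \sigma(D(\hat{y}))$ is the non-saturating form: it keeps gradients large when the discriminator confidently rejects synthetic images, unlike minimizing $\log(1 - \sigma(D(\hat{y})))$ directly. The R1 term penalizes the discriminator's gradient on real images only.
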
For training, validation, and testing, we used the clinical MR images from the fastMRI dataset [7, 8], which contains MR knee images from different MR scanners with varying sequence parameterizations. We used the seven central slices of all image series acquired on 1.5 T Siemens scanners (Siemens Healthcare, Erlangen, Germany), resulting in a dataset of 48,004 MR images from 4,815 unique studies. We split the data by study UID so that the training, validation, and test sets contain disjoint studies. We used 100 randomly sampled studies each for validation and testing (1,495 and 1,579 images, respectively) and the remaining studies for training.

We trained an image-to-image GAN that incorporates the acquisition parameters of both the input and the target image. The generator has a U-Net [9] structure with residual blocks and is trained to generate fat-saturated MR images from non-fat-saturated MR images. The discriminator learns to distinguish between real and synthetic MR images. The GAN is extended by an auxiliary classifier (AC) that learns to determine the acquisition parameters solely from the MR image content, which enables us to evaluate how well the intended contrast settings were achieved. Borrowing from style transfer, adaptive instance normalization (AdaIN) [10] layers inject the acquisition parameters into the generator: the input acquisition parameters are injected into the encoder, and the target acquisition parameters into the decoder. We train the GAN with an L1 reconstruction loss, which enforces pixel-wise similarity between the ground-truth target and the synthesized image, and a non-saturating adversarial loss with R1 regularization, which encourages sharp and realistic MR images. An overview of the network architecture and training procedure is given in Figure 1.

Results

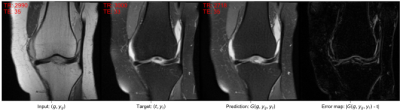

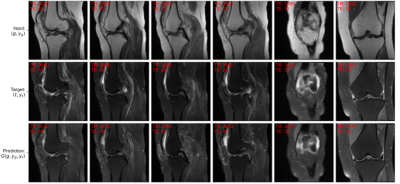
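
The image-similarity metrics and the AC error measure used in this section can be computed as in the NumPy sketch below. It assumes that NMSE is the squared error normalized by the squared norm of the ground truth, that PSNR uses the ground-truth maximum as the peak value, and that "MAE ± SD" is the mean and population standard deviation of the absolute errors; the abstract states none of these conventions, and SSIM is omitted for brevity.

```python
import numpy as np


def nmse(gt, pred):
    """Normalized mean squared error: ||gt - pred||^2 / ||gt||^2."""
    return float(np.sum((gt - pred) ** 2) / np.sum(gt ** 2))


def psnr(gt, pred, max_val=None):
    """Peak signal-to-noise ratio in dB; uses gt.max() as the peak by default."""
    if max_val is None:
        max_val = gt.max()
    mse = np.mean((gt - pred) ** 2)
    return float(10.0 * np.log10(max_val ** 2 / mse))


def mae_with_sd(true_values, predicted_values):
    """Mean absolute error and (population) standard deviation of the absolute errors."""
    abs_err = np.abs(np.asarray(true_values, dtype=float)
                     - np.asarray(predicted_values, dtype=float))
    return float(abs_err.mean()), float(abs_err.std())


# Toy example (not data from this study).
gt = np.array([[0.0, 1.0], [1.0, 0.0]])
pred = gt + 0.1
print(nmse(gt, pred))  # approximately 0.02
print(psnr(gt, pred))  # approximately 20 dB
```

For the AC, `mae_with_sd` would be applied separately to the TR and TE values it determines on the test images.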

The pix2pix model [1], a widely adopted benchmark, serves as a general solution for image-to-image translation between image categories (here: non-fat-saturated and fat-saturated MR images); we therefore compared our approach with pix2pix. We evaluated the models using the normalized mean squared error (NMSE), peak signal-to-noise ratio (PSNR), and structural similarity index (SSIM). Additionally, we evaluated the AC in terms of the mean absolute error (MAE) of the TR and TE values it determines from the MR image, and in terms of its accuracy in determining fat saturation. The results, reported in Table 1, show that our approach surpasses pix2pix and yields lower errors with respect to the acquisition parameters, indicating better contrast synthesis. On the test dataset, the AC reached an MAE (± standard deviation) of 290 ± 341 ms for TR and 1.8 ± 2.2 ms for TE. Fat saturation was correctly determined in 99.9% of the test cases. This performance makes the AC suitable as a reliable method for evaluating MR image contrast. Examples of synthesized fat-saturated images are presented in Figures 2 and 3.

Discussion and Conclusion
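
The pairing step described in this section can be sketched as follows: within one study, a non-fat-saturated and a fat-saturated image form a pair when their slice position and image orientation agree. The record fields, the rounding tolerance, and the function name are hypothetical; in practice, the values could be read from DICOM attributes such as SliceLocation or ImagePositionPatient and ImageOrientationPatient, but the abstract does not specify which attributes or tolerances were used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SliceRecord:
    # Hypothetical per-image metadata; field names are illustrative only.
    study_uid: str
    slice_position: float   # e.g. position along the slice normal, in mm
    orientation: tuple      # six direction cosines
    fat_saturated: bool
    image_id: str


def pair_images(records, decimals=1):
    """Pair non-fat-saturated with fat-saturated images of the same study
    that share slice position and orientation (rounded to `decimals`)."""
    def key(r):
        return (
            r.study_uid,
            round(r.slice_position, decimals),
            tuple(round(v, decimals) for v in r.orientation),
        )

    # Index fat-saturated images by key; a later image with the same key
    # overwrites an earlier one in this simple sketch.
    fat_sat_by_key = {}
    for r in records:
        if r.fat_saturated:
            fat_sat_by_key[key(r)] = r

    # Match each non-fat-saturated image to a fat-saturated one, if any.
    pairs = []
    for r in records:
        if not r.fat_saturated:
            match = fat_sat_by_key.get(key(r))
            if match is not None:
                pairs.append((r.image_id, match.image_id))
    return pairs
```

Matching on metadata alone cannot detect patient motion between the two acquisitions, so pairs built this way can still be misregistered, which is the limitation discussed in this section.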

This work demonstrates promising results for the generation of synthetic MR images conditioned on acquisition parameters; however, limitations exist. The dataset does not cover the complete valid space of acquisition parameters (it lacks, e.g., T1-weighted images), but we anticipate that the approach extends easily given a suitable training dataset. Image pairs were defined based on the DICOM attributes slice position and image orientation for each image within a single study. Some image pairs were not properly registered, likely due to patient movement between sequence acquisitions, which reduces the quality of the training dataset. As rigid registration did not improve the results, we anticipate that more advanced registration techniques will improve the quality of the training dataset and therefore the results. Our work can potentially be used to synthesize missing MR contrasts that could not be acquired due to scan time constraints, motion corruption, or premature scan termination (e.g., due to patient claustrophobia). Furthermore, it can serve as an advanced data augmentation technique by synthesizing different contrast weightings of an MR image, which is anticipated to make AI applications for MRI more robust to contrast changes. In future work, we aim to extend this approach to multi-image-to-image contrast translation and to 3D.

Acknowledgements

This work is supported by the Bavarian Academic Forum (BayWISS), Doctoral Consortium “Health Research”, funded by the Bavarian State Ministry of Science and the Arts.

References

[1] P. Isola, J.-Y. Zhu, T. Zhou, and A. A. Efros, “Image-to-image translation with conditional adversarial networks,” in Proc IEEE Comput Soc Conf Comput Vis Pattern Recognit, Honolulu, HI, 2017, pp. 5967–5976.

[2] I. J. Goodfellow et al., “Generative adversarial nets,” in Adv Neural Inf Process Syst, 2014, pp. 2672–2680.

[3] H. Emami, M. Dong, S. P. Nejad-Davarani, and C. K. Glide-Hurst, “Generating synthetic CTs from magnetic resonance images using generative adversarial networks,” Med Phys, vol. 45, 2018, doi: 10.1002/mp.13047.

[4] D. Nie et al., “Medical image synthesis with deep convolutional adversarial networks,” IEEE Trans Biomed Eng, vol. 65, no. 12, pp. 2720–2730, 2018, doi: 10.1109/TBME.2018.2814538.

[5] A. Sharma and G. Hamarneh, “Missing MRI pulse sequence synthesis using multi-modal generative adversarial network,” IEEE Trans Med Imaging, vol. 39, no. 4, pp. 1170–1183, 2020, doi: 10.1109/TMI.2019.2945521.

[6] J. Denck, W. Landschütz, K. Nairz, J. T. Heverhagen, A. Maier, and E. Rothgang, “Automated billing code retrieval from MRI scanner log data,” J Digit Imaging, vol. 32, no. 6, pp. 1103–1111, 2019, doi: 10.1007/s10278-019-00241-z.

[7] F. Knoll et al., “fastMRI: A publicly available raw k-space and DICOM dataset of knee images for accelerated MR image reconstruction using machine learning,” Radiol Artif Intell, vol. 2, no. 1, e190007, 2020, doi: 10.1148/ryai.2020190007.

[8] J. Zbontar et al., “fastMRI: An open dataset and benchmarks for accelerated MRI,” Nov. 2018. [Online]. Available: http://arxiv.org/pdf/1811.08839v2

[9] O. Ronneberger, P. Fischer, and T. Brox, “U-Net: Convolutional networks for biomedical image segmentation,” in Lecture Notes in Computer Science, Med Image Comput Comput Assist Interv (MICCAI), Cham: Springer International Publishing, 2015, pp. 234–241.

[10] X. Huang and S. Belongie, “Arbitrary style transfer in real-time with adaptive instance normalization,” in Proc IEEE Int Conf Comp Vis (ICCV), Venice, 2017, pp. 1510–1519.
