0390

HDnGAN: High-fidelity ultrafast volumetric brain MRI using a hybrid denoising generative adversarial network

1Department of Biomedical Engineering, Tsinghua University, Beijing, China, 2Athinoula A. Martinos Center for Biomedical Imaging, Massachusetts General Hospital, Boston, MA, United States, 3Department of Radiology, Siriraj Hospital, Mahidol University, Bangkok, Thailand, 4Department of Radiology, Massachusetts General Hospital, Boston, MA, United States, 5Siemens Medical Solutions, Boston, MA, United States, 6Department of Engineering Physics, Tsinghua University, Beijing, China, 7Department of Radiology, Stanford University, Stanford, CA, United States, 8Department of Electrical Engineering, Stanford University, Stanford, CA, United States

Synopsis

Highly accelerated high-resolution volumetric brain MRI is intrinsically noisy. We propose HDnGAN, a hybrid generative adversarial network (GAN) for denoising that pairs a 3D generator with a 2D discriminator, to improve the SNR of highly accelerated images while preserving realistic textures. The 3D generator improves image synthesis performance, and classifying 2D slices increases the number of samples available for training the discriminator. HDnGAN's efficacy is demonstrated on 3D standard and Wave-CAIPI T2-weighted FLAIR data acquired in 33 multiple sclerosis patients. The generated images are similar to standard FLAIR images and superior to Wave-CAIPI and BM4D-denoised images in both quantitative evaluation and assessment by neuroradiologists.

Introduction

High-resolution volumetric brain MRI is widely used in clinical and research applications to provide detailed anatomical information and delineate structural pathology. A major barrier to greater adoption of high-resolution 3D sequences in clinical practice is the long acquisition time, which may cause patient anxiety and motion artifacts that compromise diagnostic quality. State-of-the-art fast imaging techniques such as Wave-controlled aliasing in parallel imaging (Wave-CAIPI)1 can accelerate volumetric MRI with negligible g-factor and image artifact penalties. However, the signal-to-noise ratio (SNR) of highly accelerated images is intrinsically lower than that of standard images. Previous studies have demonstrated the promise of deep learning in image restoration tasks, including denoising2 and super-resolution3.

In this work, we propose using generative adversarial networks (GANs) to improve the SNR of highly accelerated images while recovering realistic textural details. We systematically characterized the effect of the adversarial loss on the sharpness of the resultant images. Unlike existing studies that used simulated data4,5, we demonstrated the efficacy of our method on empirical Wave-CAIPI and standard T2-weighted fluid-attenuated inversion recovery (FLAIR) data acquired from 33 multiple sclerosis patients.

Methods

Data Acquisition. This study was approved by the IRB and was HIPAA compliant. With written informed consent, data were acquired from 33 patients undergoing clinical evaluation for demyelinating disease (20 for training, 5 for validation, 8 for evaluation) on a whole-body 3-Tesla MAGNETOM Prisma MRI scanner (Siemens Healthcare, Erlangen, Germany) equipped with a 20-channel head coil. Standard FLAIR data were acquired using a 3D T2 SPACE FLAIR sequence with: repetition time=5000 ms, echo time=390 ms, inversion time=1800 ms, flip angle=120°, 176 sagittal slices, slice thickness=1 mm, field of view=256×256 mm², resolution=1 mm isotropic, bandwidth=650 Hz/pixel, GRAPPA factor=2, acquisition time=7.25 minutes. Wave-CAIPI FLAIR data were acquired with matched parameters except for: echo time=392 ms, bandwidth=750 Hz/pixel, acceleration factor=3×2, acquisition time=2.75 minutes.
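Why the accelerated scan is noisier can be made concrete with a back-of-the-envelope estimate from the protocol parameters. The sketch below assumes the textbook idealization that, for identical voxel size and contrast, SNR scales with the square root of the total sampling time (acquired phase-encode lines divided by per-pixel bandwidth) and is divided by the g-factor. It sets both g-factors to 1 and ignores the small echo-time difference, so it is an idealized estimate, not a measured value. The function name `relative_snr` is hypothetical.

```python
import math


def relative_snr(r_accel, r_ref, bw_accel_hz, bw_ref_hz, g_accel=1.0, g_ref=1.0):
    """Idealized SNR of an accelerated scan relative to a reference scan.

    Assumes identical voxel size and contrast, so SNR scales with the square
    root of the total sampling time and is divided by the g-factor. Total
    sampling time is proportional to (acquired phase-encode lines) divided by
    (per-pixel receiver bandwidth).
    """
    lines_ratio = r_ref / r_accel          # fraction of k-space lines kept vs. the reference
    dwell_ratio = bw_ref_hz / bw_accel_hz  # higher bandwidth -> shorter dwell time per sample
    return math.sqrt(lines_ratio * dwell_ratio) * (g_ref / g_accel)


# Protocol values from the text: GRAPPA R=2 at 650 Hz/pixel vs. Wave-CAIPI R=3x2 at 750 Hz/pixel.
ratio = relative_snr(r_accel=3 * 2, r_ref=2, bw_accel_hz=750, bw_ref_hz=650)
print(f"Idealized Wave-CAIPI / standard SNR (g = 1): {ratio:.2f}")
print(f"Scan-time reduction: {7.25 / 2.75:.2f}x")
```

Under these assumptions the ratio is about 0.54, i.e. the Wave-CAIPI images keep only a little over half the SNR of the standard images before any g-factor penalty, which is the gap a denoising network has to recover.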

GAN Architecture and Training.

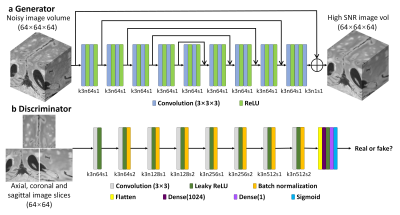

A hybrid GAN architecture for denoising, entitled “HDnGAN”, with a 3D generator and a 2D discriminator was proposed (Fig. 1). Specifically, a modified U-Net6 with the max pooling, up-sampling, and batch normalization layers removed was used as the generator (Fig. 1a). The number of kernels was kept constant at every layer (n=64). 3D convolutions were adopted to exploit data redundancy along the additional spatial dimension, which improves image synthesis performance and yields smooth transitions between 2D image slices.
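To make "a U-Net without pooling or up-sampling" concrete, here is a minimal NumPy forward-pass sketch; it is not the authors' Keras implementation. The depth, ReLU activations, He-style random initialization, concatenation skip connections, and final 1×1×1 output convolution are assumptions; the abstract specifies only that max pooling, up-sampling, and batch normalization were removed and that every layer used n=64 kernels. The helper names (`conv3d_same`, `build_generator`, `generator_forward`) are hypothetical.

```python
import numpy as np


def conv3d_same(x, w, b):
    """'Same'-padded 3D convolution (cross-correlation, as in deep-learning frameworks).

    x: (D, H, W, C_in), w: (k, k, k, C_in, C_out), b: (C_out,)
    """
    k = w.shape[0]
    p = k // 2
    xp = np.pad(x, ((p, p), (p, p), (p, p), (0, 0)))
    # View of every k^3 neighbourhood: shape (D, H, W, C_in, k, k, k).
    windows = np.lib.stride_tricks.sliding_window_view(xp, (k, k, k), axis=(0, 1, 2))
    return np.einsum("dhwcijk,ijkco->dhwo", windows, w) + b


def init_conv(rng, c_in, c_out, k=3):
    # Random He-style weights; in practice these would be learned.
    w = rng.standard_normal((k, k, k, c_in, c_out)) * np.sqrt(2.0 / (k**3 * c_in))
    return w, np.zeros(c_out)


def build_generator(rng, depth=3, n_kernels=64, c_in=1):
    # Encoder: a constant number of kernels at every layer.
    enc = [init_conv(rng, c_in if i == 0 else n_kernels, n_kernels) for i in range(depth)]
    # Decoder layers see [previous features, mirrored encoder features] concatenated.
    dec = [init_conv(rng, 2 * n_kernels, n_kernels) for _ in range(depth - 1)]
    out = init_conv(rng, n_kernels, 1, k=1)
    return enc, dec, out


def generator_forward(params, x):
    enc, dec, out = params
    skips, h = [], x
    for w, b in enc:
        h = np.maximum(conv3d_same(h, w, b), 0.0)
        skips.append(h)
    # No pooling or up-sampling: every layer keeps the full block size,
    # so skip features concatenate directly without cropping or resizing.
    for (w, b), skip in zip(dec, reversed(skips[:-1])):
        h = np.maximum(conv3d_same(np.concatenate([h, skip], axis=-1), w, b), 0.0)
    w, b = out
    return conv3d_same(h, w, b)


rng = np.random.default_rng(0)
x = rng.standard_normal((16, 16, 16, 1))  # small stand-in for a 64x64x64 block
params = build_generator(rng, depth=3, n_kernels=8)  # 8 kernels only to keep the demo fast
y = generator_forward(params, x)
print(y.shape)
```

Because no layer changes the spatial size, the output block has the same shape as the input block, and every voxel's prediction draws on its 3D neighbourhood rather than on a single 2D slice.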

The discriminator of SRGAN7 was adopted (Fig. 1b), with spectral normalization8 incorporated in each layer to stabilize training. The discriminator was designed to classify 2D image slices (axial, coronal, or sagittal) rather than 3D blocks, which increases the number of samples available for training the discriminator from a limited number of patients.
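The sample-count argument can be illustrated with a short NumPy sketch: one 64×64×64 generator block yields 3 × 64 = 192 2D slices for the discriminator, compared with a single sample if the discriminator scored whole 3D blocks. Whether all three orientations were used for every block, or sampled among them, is not stated; the helper `block_to_slices` is hypothetical, and which array axis corresponds to sagittal, coronal, or axial depends on how the volumes are stored.

```python
import numpy as np


def block_to_slices(block: np.ndarray) -> np.ndarray:
    """Split a cubic 3D block (S, S, S) into 2D slices along all three axes.

    Returns an array of shape (3 * S, S, S): the slices indexed by axis 0,
    then by axis 1, then by axis 2, stacked together.
    """
    if not (block.ndim == 3 and block.shape[0] == block.shape[1] == block.shape[2]):
        raise ValueError("expected a cubic 3D block so all slices share one shape")
    along_0 = block                     # block[i, :, :]
    along_1 = np.moveaxis(block, 1, 0)  # block[:, i, :]
    along_2 = np.moveaxis(block, 2, 0)  # block[:, :, i]
    return np.concatenate([along_0, along_1, along_2], axis=0)


block = np.random.default_rng(0).random((64, 64, 64))  # stand-in for a generated block
slices = block_to_slices(block)
print(slices.shape)  # one 3D sample becomes many 2D samples for the discriminator
```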

The objective was to minimize the loss function $$$L$$$, which consisted of a content loss $$$L_{content}$$$ from the generator and an adversarial loss $$$L_{adversarial}$$$ from the discriminator:

$$L=L_{content}+\lambda L_{adversarial}$$

where $$$\lambda$$$ is a hyperparameter that determines the contribution of $$$L_{adversarial} $$$ to $$$ L $$$.
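As a sketch of how λ weights the two terms, the snippet below evaluates the objective for the λ values explored in the Results. The specific form of each term is an assumption: mean absolute error for the content loss, and the −log D(G(x)) generator term used in SRGAN for the adversarial loss, averaged over the discriminator's probabilities for 2D slices of the generated block. The abstract only states that $$$L$$$ is their λ-weighted sum. Function names are hypothetical, and the inputs in the usage loop are synthetic.

```python
import numpy as np


def content_loss(generated, target):
    # Assumption: voxel-wise mean absolute error (the abstract does not name the content loss).
    return float(np.mean(np.abs(generated - target)))


def adversarial_loss(d_fake_probs, eps=1e-12):
    # Assumption: non-saturating generator loss -mean(log D(G(x))), where
    # d_fake_probs are the discriminator's "real" probabilities for generated slices.
    return float(-np.mean(np.log(np.clip(d_fake_probs, eps, 1.0))))


def total_loss(generated, target, d_fake_probs, lam):
    """L = L_content + lambda * L_adversarial."""
    return content_loss(generated, target) + lam * adversarial_loss(d_fake_probs)


rng = np.random.default_rng(0)
target = rng.random((64, 64, 64))                               # synthetic reference block
generated = target + 0.05 * rng.standard_normal(target.shape)   # synthetic generator output
d_fake = rng.uniform(0.1, 0.9, size=192)                        # synthetic D outputs for 192 slices
for lam in (0.0, 1e-5, 1e-4, 1e-3):
    print(f"lambda={lam:g}: L={total_loss(generated, target, d_fake, lam):.6f}")
```

With λ=0 the objective reduces to the content loss alone, which favours voxel-wise fidelity; increasing λ lets the discriminator's judgement of realism pull the generator toward sharper textures.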

The network was implemented using the Keras API (https://keras.io/) with a TensorFlow (https://www.tensorflow.org/) backend. Training was performed on 64×64×64-voxel blocks for the generator and 64×64-voxel slices for the discriminator, using the Adam optimizer on an NVIDIA GeForce RTX 2080 Ti GPU.
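One common way to train on 64×64×64 blocks from whole-brain volumes is random cropping of co-located blocks from co-registered input and target volumes. The sketch below assumes this; the abstract does not say how blocks were sampled, whether intensities were normalized, or how the Wave-CAIPI and standard FLAIR volumes were aligned. `sample_paired_blocks` is a hypothetical helper, and the trailing channel axis matches Keras's default channels-last layout.

```python
import numpy as np


def sample_paired_blocks(noisy, clean, n_blocks, size=64, rng=None):
    """Randomly crop co-located size^3 blocks from two co-registered 3D volumes.

    Returns (inputs, targets), each of shape (n_blocks, size, size, size, 1).
    """
    if noisy.shape != clean.shape:
        raise ValueError("input and target volumes must be co-registered (same shape)")
    if any(dim < size for dim in noisy.shape):
        raise ValueError("volume is smaller than the requested block size")
    rng = np.random.default_rng() if rng is None else rng
    xs, ys = [], []
    for _ in range(n_blocks):
        # Same corner in both volumes, so each input block stays paired with its target.
        corner = [int(rng.integers(0, dim - size + 1)) for dim in noisy.shape]
        window = tuple(slice(c, c + size) for c in corner)
        xs.append(noisy[window])
        ys.append(clean[window])
    # Add a trailing channel axis (channels-last).
    return np.stack(xs)[..., None], np.stack(ys)[..., None]


# Stand-in volumes with the acquisition matrix above (176 sagittal slices, 256x256).
wave_caipi = np.zeros((176, 256, 256), dtype=np.float32)
standard = np.ones((176, 256, 256), dtype=np.float32)
x_blocks, y_blocks = sample_paired_blocks(wave_caipi, standard, n_blocks=4,
                                          rng=np.random.default_rng(0))
print(x_blocks.shape, y_blocks.shape)
```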

Results Evaluation

For comparison, Wave-CAIPI images were denoised using the state-of-the-art BM4D denoising algorithm9,10 (https://www.cs.tut.fi/~foi/GCF-BM3D/). The mean absolute error (MAE), peak SNR (PSNR), structural similarity index (SSIM), and VGG perceptual loss7,11,12 were used to quantify image similarity relative to the standard FLAIR images.
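MAE and PSNR are simple enough to write directly. The sketch below assumes that the PSNR peak value is the reference image's intensity range and that the metrics are computed over the whole array without a brain mask; the abstract specifies neither. SSIM and the VGG perceptual loss require local windowing or a pretrained network and are omitted here; scikit-image's `skimage.metrics.structural_similarity` is one existing SSIM implementation.

```python
import numpy as np


def mae(output, reference):
    """Mean absolute error between an output image and the reference."""
    return float(np.mean(np.abs(output - reference)))


def psnr(output, reference, data_range=None):
    """Peak SNR in dB.

    Assumption: unless given, the peak value is the reference's intensity range.
    """
    if data_range is None:
        data_range = float(reference.max() - reference.min())
    mse = float(np.mean((output - reference) ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range**2 / mse))
```

Lower MAE and higher PSNR both reward voxel-wise agreement with the standard image, which is why a smooth, purely content-trained output can score well on them while looking less realistic.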

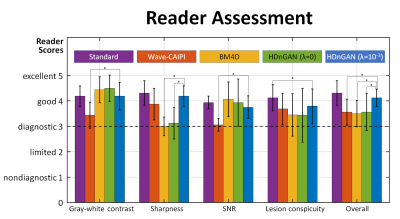

Images were evaluated by two neuroradiologists on five image quality metrics: gray-white matter contrast, sharpness, SNR, lesion conspicuity, and overall quality. Each metric was scored on a five-point scale: 1, nondiagnostic; 2, limited; 3, diagnostic; 4, good; 5, excellent. Student’s t-test was used to assess whether image quality scores differed significantly between methods.
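The abstract does not say whether the t-test was paired or unpaired. If each reader scored the same evaluation subjects under every method, a paired test is the natural choice; the standard-library sketch below computes the paired t statistic and its degrees of freedom under that assumption. The p-value then follows from the t distribution; `scipy.stats.ttest_rel` performs the whole paired test. The helper name `paired_t` is hypothetical.

```python
import math
import statistics


def paired_t(scores_a, scores_b):
    """Paired Student's t statistic and degrees of freedom for two score lists.

    scores_a[i] and scores_b[i] must be scores for the same subject and reader.
    """
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equal-length lists with at least two scores each")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd_d == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean_d / (sd_d / math.sqrt(len(diffs)))
    return t, len(diffs) - 1
```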

Results

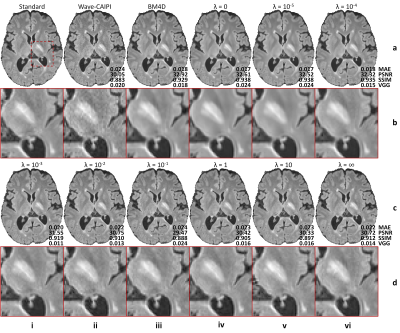

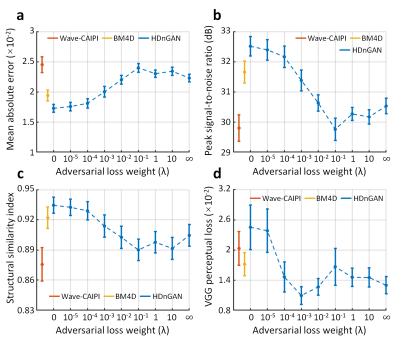

The contribution of the adversarial loss controlled the sharpness of the resultant images (Fig. 2). For HDnGAN with low λ values (0, 10⁻⁵, 10⁻⁴) and for BM4D, the resultant images were smoother and lacked high-frequency textures. As λ increased, more textural details became apparent. Figure 3 shows the quantitative similarity metrics (MAE, PSNR, SSIM, and VGG loss) for different λ values. HDnGAN with λ=0 achieved the best MAE, PSNR, and SSIM, whereas HDnGAN with λ=10⁻³ achieved the best VGG loss.

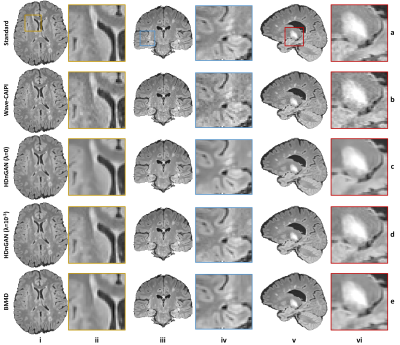

Figure 4 shows that HDnGAN with λ=10⁻³ generated high-quality image volumes with increased SNR, smooth transitions between slices along different directions, and rich, realistic textural details.

The reader study (Fig. 5) confirmed that HDnGAN (λ=10⁻³) significantly improved the gray-white matter contrast and SNR of the input Wave-CAIPI images and outperformed BM4D and HDnGAN (λ=0) in image sharpness. The overall scores of HDnGAN (λ=10⁻³) were significantly higher than those of Wave-CAIPI, BM4D, and HDnGAN (λ=0), and did not differ significantly from those of the standard images.

Discussion and Conclusion

We achieved high-fidelity ultrafast volumetric MRI by combining Wave-CAIPI with HDnGAN. The hybrid architecture allowed HDnGAN to be trained well with limited empirical data. Adjusting the contribution of the adversarial loss also allowed control over the richness of the output image textures. Reader assessment demonstrated clinicians’ preference for images from HDnGAN (λ=10⁻³) over Wave-CAIPI and BM4D.

Acknowledgements

This work was supported by the Tsinghua University Initiative Scientific Research Program, National Institutes of Health (grant numbers P41-EB030006, K23-NS096056, R01-EB020613, R01-EB028797), a research grant from Siemens Healthineers, and the Massachusetts General Hospital Claflin Distinguished Scholar Award. B.B. has provided consulting services to Subtle Medical.

References

1. Bilgic B, Gagoski BA, Cauley SF, Fan AP, Polimeni JR, Grant PE, et al. Wave-CAIPI for highly accelerated 3D imaging. Magnetic Resonance in Medicine. 2015;73(6):2152-62.

2. Zhang K, Zuo W, Chen Y, Meng D, Zhang L. Beyond a Gaussian Denoiser: Residual Learning of Deep CNN for Image Denoising. IEEE Transactions on Image Processing. 2017;26(7):3142-55.

3. Kim J, Lee JK, Lee KM. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016. p. 1646-54.

4. Chen Y, Xie Y, Zhou Z, Shi F, Christodoulou AG, Li D, et al. Brain MRI Super Resolution Using 3D Deep Densely Connected Neural Networks. In: 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI); 2018. p. 739-42.

5. Yang G, Yu S, Dong H, Slabaugh G, Dragotti PL, Ye X, et al. DAGAN: Deep De-Aliasing Generative Adversarial Networks for Fast Compressed Sensing MRI Reconstruction. IEEE Transactions on Medical Imaging. 2018;37(6):1310-21.

6. Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI); 2015. Springer.

7. Ledig C, Theis L, Huszar F, Caballero J, Cunningham A, Acosta A, et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In: 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2017. p. 105-14.

8. Miyato T, Kataoka T, Koyama M, Yoshida Y. Spectral Normalization for Generative Adversarial Networks. arXiv preprint arXiv:1802.05957. 2018.

9. Maggioni M, Katkovnik V, Egiazarian K, Foi A. Nonlocal Transform-Domain Filter for Volumetric Data Denoising and Reconstruction. IEEE Transactions on Image Processing. 2012;22(1):119-33.

10. Dabov K, Foi A, Katkovnik V, Egiazarian K. Image Denoising by Sparse 3-D Transform-Domain Collaborative Filtering. IEEE Transactions on Image Processing. 2007;16(8):2080-95.

11. Simonyan K, Zisserman A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv preprint arXiv:1409.1556. 2014.

12. Wang X, Yu K, Wu S, Gu J, Liu Y, Dong C, et al. ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks. In: Leal-Taixé L, Roth S, editors. Computer Vision, ECCV 2018 Workshops; 2019. p. 63-79.

Figures