0387

Deep-Learning-Based Motion Correction for Quantitative Cardiac MRI

1 HeartVista, Inc., Los Altos, CA, United States

Synopsis

We developed a deep-learning-based approach for motion correction in quantitative cardiac MRI, including perfusion, T1 mapping, and T2 mapping. The approach consists of a segmentation network and a registration network. The segmentation network was fine-tuned on 2D short-axis images from these sequences, whereas a single registration network was shared by all three. The proposed approach was faster and more accurate than ANTs, a widely used conventional registration method. This work supports building a faster and more robust automated pipeline for generating CMR parametric maps.

Background

Motion correction is crucial for many quantitative cardiac magnetic resonance (CMR) techniques, such as T1 mapping, T2 mapping, and perfusion [1-3]. Conventional methods are based either on feature matching [4-7] or on the low-rank property of the spatial or temporal dimensions [8-10]. Deep learning has been used in medical image registration to improve registration speed [11], but its application to CMR has not been widely explored. In this work, we developed a deep-learning-based method for motion correction in quantitative CMR. Compared with a traditional registration method [6], the proposed method improved both accuracy and speed.

Methods

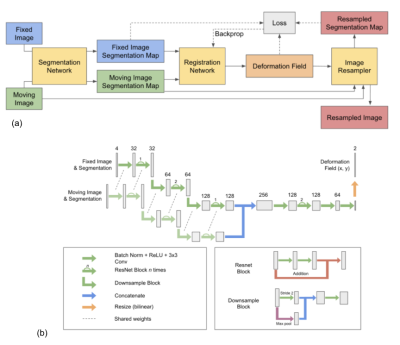

We first fine-tuned our previously published cardiac segmentation network [12] on 2D short-axis images from the perfusion, T1 mapping, and T2 mapping sequences. T1 and T2 mapping shared one segmentation network, and the perfusion sequence used its own. Each segmentation network outputs, for every pixel, the likelihood of belonging to the left ventricular lumen, myocardium, right ventricular lumen, or background.

The inputs to our registration network (Fig. 1) are the classification likelihoods of the reference image and those of the moving image. Using classification likelihoods is intended to improve registration accuracy within each heart compartment, especially the myocardium, where the quantitative analysis is performed. The registration network outputs a deformation field, which is applied to the moving image to yield the registered image. Network details are given in Fig. 1.

The registration network was trained on ~110,000 cardiac images from 490 patients, using the outputs of the perfusion segmentation network as inputs. During training, the network minimizes the loss function $L = \|P_x - P_y \circ T\|_2 + \alpha V(T)$, where $P_x$ denotes the classification likelihoods of the fixed (reference) image, $P_y$ denotes the classification likelihoods of the moving image, $T$ is the deformation field, $P_y \circ T$ denotes $P_y$ after applying the predicted deformation field $T$, $V$ is the total variation function, and $\alpha$ is a weighting factor. The same registration network was used for all three sequences.

The segmentation networks were evaluated on ~900 perfusion images from 19 patients, ~250 T1 mapping images from 53 patients, and ~200 T2 mapping images from 22 patients. Dice coefficients were calculated between manual labels and predicted ROIs.
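The training loss can be illustrated with a small pure-Python sketch. It rests on assumptions the abstract does not specify: the deformation field T is parameterized as a per-pixel displacement (dy, dx), warping uses bilinear interpolation with border clamping, V(T) is taken as the anisotropic total variation (sum of absolute forward differences of both displacement components), and the norm is the unsquared L2 norm as written. All function names are hypothetical; an actual training loop would compute this on tensors in a deep-learning framework so that it can be differentiated.

```python
import math


def bilinear_sample(img, y, x):
    """Sample a 2D grid (list of rows) at real-valued (y, x), clamping to the border."""
    h, w = len(img), len(img[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    top = (1 - wx) * img[y0][x0] + wx * img[y0][x1]
    bottom = (1 - wx) * img[y1][x0] + wx * img[y1][x1]
    return (1 - wy) * top + wy * bottom


def warp(channels, field):
    """Compute (P o T)(p) = P(p + T(p)) for every likelihood channel.

    channels: list of 2D likelihood maps (e.g. LV lumen, myocardium, RV lumen, background).
    field: field[i][j] = (dy, dx), an assumed per-pixel displacement form of T.
    """
    h, w = len(field), len(field[0])
    return [
        [[bilinear_sample(ch, i + field[i][j][0], j + field[i][j][1]) for j in range(w)]
         for i in range(h)]
        for ch in channels
    ]


def total_variation(field):
    """Anisotropic TV (assumed form of V): sum of |forward differences| of dy and dx."""
    h, w = len(field), len(field[0])
    tv = 0.0
    for i in range(h):
        for j in range(w):
            for c in range(2):
                if i + 1 < h:
                    tv += abs(field[i + 1][j][c] - field[i][j][c])
                if j + 1 < w:
                    tv += abs(field[i][j + 1][c] - field[i][j][c])
    return tv


def registration_loss(p_fixed, p_moving, field, alpha):
    """L = ||P_x - P_y o T||_2 + alpha * V(T), using the unsquared L2 norm."""
    warped = warp(p_moving, field)
    squared_error = sum(
        (a - b) ** 2
        for ch_f, ch_w in zip(p_fixed, warped)
        for row_f, row_w in zip(ch_f, ch_w)
        for a, b in zip(row_f, row_w)
    )
    return math.sqrt(squared_error) + alpha * total_variation(field)
```

For example, if the moving likelihood map is the fixed map shifted one pixel to the right, an all-zero field leaves the data term nonzero, while a uniform field of (0, 1) realigns the maps and, being constant, also contributes zero total variation. A squared norm is a common alternative for the data term; the sketch keeps the form written in the loss above.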

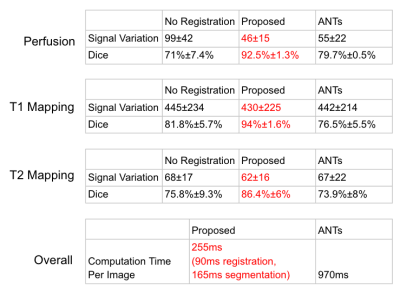

The signal variation, Dice coefficient, and computation time of the proposed registration method were compared with those of a widely used image registration method, ANTs [6] (https://github.com/stnava/LabelMyHeart). Dice coefficients were calculated between the predicted myocardium ROIs of the registered images and those of the reference images. Signal variation in the registered images was calculated as the standard deviation of the second derivative of the signal-time curve of each pixel within the myocardium ROI of the reference image. Computation time per image was measured by running the models on a commercially available laptop with a 2.6 GHz 6-core processor and 16 GB of memory; for the proposed method, it includes both segmentation and registration. The test datasets included ~4000 perfusion images from 19 patients, ~400 T1 mapping images from 20 patients, and ~100 T2 mapping images from 10 patients.
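The two image-based evaluation metrics can be sketched in pure Python as follows; the function names are hypothetical. The Dice coefficient follows its standard definition, 2|A ∩ B| / (|A| + |B|). For signal variation, the abstract does not state how per-pixel values are pooled, so this sketch assumes a central second difference with unit frame spacing, the population standard deviation of each pixel's second-difference curve, and the mean of those values over all pixels in the reference myocardium ROI.

```python
import statistics


def dice(mask_a, mask_b):
    """Dice coefficient 2|A & B| / (|A| + |B|) for binary masks given as lists of rows."""
    a = [bool(v) for row in mask_a for v in row]
    b = [bool(v) for row in mask_b for v in row]
    intersection = sum(1 for x, y in zip(a, b) if x and y)
    total = sum(a) + sum(b)
    # Convention (assumed): two empty masks count as perfect agreement.
    return 1.0 if total == 0 else 2.0 * intersection / total


def second_difference(curve):
    """Central second difference s[t+1] - 2*s[t] + s[t-1], assuming unit frame spacing."""
    return [curve[t + 1] - 2 * curve[t] + curve[t - 1] for t in range(1, len(curve) - 1)]


def signal_variation(frames, roi):
    """Mean over ROI pixels of the std of each pixel's second-difference time curve.

    frames: registered images in temporal order, each a list of rows.
    roi: binary myocardium mask of the reference image (same size as each frame).
    Requires at least 3 frames and at least one ROI pixel.
    """
    per_pixel = []
    for i, row in enumerate(roi):
        for j, inside in enumerate(row):
            if inside:
                curve = [frame[i][j] for frame in frames]
                per_pixel.append(statistics.pstdev(second_difference(curve)))
    return statistics.mean(per_pixel)
```

A smooth signal-time curve (for example, a linear ramp) has zero second difference and therefore zero signal variation, whereas a single-frame spike caused by residual motion produces a large value. This is why lower signal variation after registration is read as better motion correction.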

Results

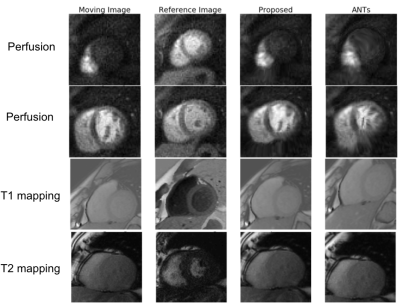

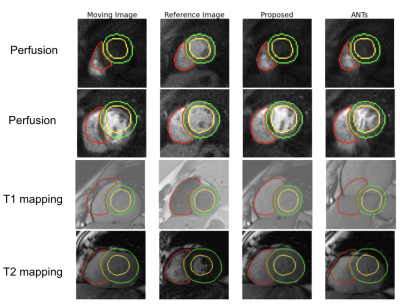

Our fine-tuned segmentation networks achieved mean Dice coefficients of 93%, 85%, and 87% for perfusion, T1 mapping, and T2 mapping, respectively. Large contrast changes between images are common in all three applications. Fig. 2 shows that the proposed method is robust to large contrast differences between the moving and reference images, whereas such differences tend to cause distortions in the ANTs results. To demonstrate the accuracy of the registration results in Fig. 2, Fig. 3 overlays the segmentation contours of the reference image on the reference image, the moving image, and the registration results. Fig. 4 summarizes the comparison between the proposed registration method and ANTs. Compared with ANTs, the proposed method achieved higher Dice coefficients, lower signal variation, and shorter computation time in all three applications. ANTs performed worse than no registration for T1 and T2 mapping images, probably because of the large changes in image contrast.

Conclusion

We developed a deep-learning-based approach for motion correction in quantitative CMR and validated it on perfusion, T1 mapping, and T2 mapping images. The proposed method achieved higher accuracy and shorter computation time than a traditional registration method. This work supports building a faster and more accurate automated pipeline for generating CMR parametric maps.

Acknowledgements

We thank Amos Cao, Arun Seetharaman, Eric Peterson, Haisam Islam, and Jason He for their help in proofreading.

References

1. Pontré B, et al. An open benchmark challenge for motion correction of myocardial perfusion MRI. IEEE J Biomed Health Inform. 2017;21(5):1315-1326. doi:10.1109/JBHI.2016.2597145

2. Xue H, Shah S, Greiser A, Guetter C, Littmann A, Jolly MP, Arai AE, Zuehlsdorff S, Guehring J, Kellman P. Motion correction for myocardial T1 mapping using image registration with synthetic image estimation. Magn Reson Med. 2012;67:1644-1655.

3. Giri S, Shah S, Xue H, Chung YC, Pennell ML, Guehring J, Zuehlsdorff S, Raman SV, Simonetti OP. Myocardial T2 mapping with respiratory navigator and automatic nonrigid motion correction. Magn Reson Med. 2012;68:1570-1578.

4. Med Image Comput Comput Assist Interv Int Conf Med Image Comput Comput Assist Interv. 2008;11(Pt 2):35-43.

5. Chefd'hotel C, Hermosillo G, Faugeras O. Flows of diffeomorphisms for multimodal image registration. In: Proceedings IEEE International Symposium on Biomedical Imaging. Washington, DC, USA; 2002:753-756. doi:10.1109/ISBI.2002.1029367

6. Avants BB, Epstein CL, Grossman M, Gee JC. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Med Image Anal. 2008;12(1):26-41. doi:10.1016/j.media.2007.06.004

7. Benovoy M, Jacobs M, Cheriet F, Dahdah N, Arai AE, Hsu LY. Robust universal nonrigid motion correction framework for first-pass cardiac MR perfusion imaging. J Magn Reson Imaging. 2017;46(4):1060-1072. doi:10.1002/jmri.25659

8. Melbourne A, Atkinson D, White M, Collins D, Leach M, Hawkes D. Registration of dynamic contrast-enhanced MRI using a progressive principal component registration (PPCR). Phys Med Biol. 2007;52:5147-5156.

9. Lingala SG, DiBella E, Jacob M. Deformation corrected compressed sensing (DC-CS): a novel framework for accelerated dynamic MRI. IEEE Trans Med Imaging. 2015;34(1):72-8

10. Scannell CM, Villa ADM, Lee J, Breeuwer M, Chiribiri A. Robust Non-Rigid Motion Compensation of Free-Breathing Myocardial Perfusion MRI Data. IEEE Trans Med Imaging. 2019;38(8):1812-1820. doi:10.1109/TMI.2019.2897044

11. Haskins G, Kruger U, Yan P. Deep learning in medical image registration: a survey. Mach Vis Appl. 2020;31:8.

12. De Goyeneche AS, Addy NO, Santos JM, Hu B. Automated cardiac magnetic resonance imaging. Circulation. 2019;140:A17153.
