0334

Bidirectional Translation Between Multi-Contrast Images and Multi-Parametric Maps Using Deep Learning

1Biomedical Imaging Research Institute, Cedars-Sinai Medical Center, Los Angeles, CA, United States, 2Department of Bioengineering, UCLA, Los Angeles, CA, United States

Synopsis

Multi-contrast MRI and multi-parametric maps provide complementary qualitative and quantitative information for disease diagnosis. However, due to limited scan time, a full array of images is often unavailable in practice. In this work, we propose bidirectional translation between conventional weighted images and parametric maps, so that both qualitative weighted images and quantitative maps can be obtained from a weighted-only or mapping-only acquisition. We developed a combined training strategy for two convolutional neural networks with a cycle consistency loss. Our preliminary results show that the proposed method can translate between contrast-weighted images and quantitative maps with high quality and fidelity.

Introduction

Quantitative MRI is an emerging technique for characterization of abnormal tissues, while conventional weighted imaging is still used for routine clinical diagnosis. Although a combination of the two can provide complementary information for clinical diagnosis and quantitative tissue characterization, acquiring both is often impractical because of the long scan time. Recently, neural networks have achieved promising results on image translation between different image contrasts or modalities.1 In this work, we propose a combined training of two convolutional neural networks (CNNs) using cycle consistency loss2 to perform bidirectional translation between multi-contrast weighted images and multi-parametric maps, so that both qualitative and quantitative information can be obtained from a weighted-only or mapping-only acquisition.

Methods

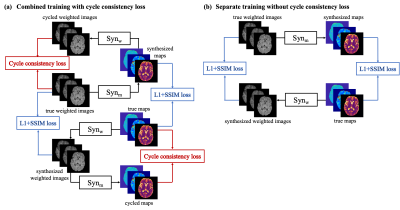

Network design

Our design includes two networks, $$$\operatorname{Syn}_{\mathrm{w}}$$$ and $$$\operatorname{Syn}_{\mathrm{m}}$$$, to transform between contrast-weighted images $$$\mathbf{W}$$$ and quantitative maps $$$\mathbf{M}$$$ in two directions (Figure 1(a)). In our implementation, both synthesis networks are CNNs based on the U-Net3 structure. Besides the losses calculated between the synthesized results and labels, for which we select a combination of L1 loss and structural-similarity-index (SSIM) loss, we introduce a cycle consistency loss inspired by CycleGAN2. This loss function enforces that source images can be re-synthesized from their projection onto the target domain. The loss functions are:

$$\begin{aligned} L = {} & L_{1}\left(\operatorname{Syn}_{\mathrm{w}}(\mathbf{M}), \mathbf{W}\right)+\lambda_{1} L_{1}\left(\operatorname{Syn}_{\mathrm{m}}(\mathbf{W}), \mathbf{M}\right)+\lambda_{2} L_{\mathrm{SSIM}}\left(\operatorname{Syn}_{\mathrm{w}}(\mathbf{M}), \mathbf{W}\right) \\ & +\lambda_{3} L_{\mathrm{SSIM}}\left(\operatorname{Syn}_{\mathrm{m}}(\mathbf{W}), \mathbf{M}\right)+\lambda_{4} L_{\mathrm{cyc}} \end{aligned}$$

$$L_{\mathrm{cyc}}=L_{1}\left(\operatorname{Syn}_{\mathrm{w}}\left(\operatorname{Syn}_{\mathrm{m}}(\mathbf{W})\right), \mathbf{W}\right)+L_{1}\left(\operatorname{Syn}_{\mathrm{m}}\left(\operatorname{Syn}_{\mathrm{w}}(\mathbf{M})\right), \mathbf{M}\right)$$

where $$$\lambda_i, i=1,2,3,4$$$ are weighting parameters, $$$L_{1}\left(\mathbf{A}, \mathbf{B}\right)$$$ is the L1 loss between $$$\mathbf{A}$$$ and $$$\mathbf{B}$$$, and $$$L_{\mathrm{SSIM}}\left(\mathbf{A}, \mathbf{B}\right)$$$ is the SSIM loss between $$$\mathbf{A}$$$ and $$$\mathbf{B}$$$.
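To make the structure of the combined loss concrete, the following is a minimal, dependency-free sketch in Python. It is not the authors' implementation: images are represented as flat lists of floats, the two networks are stand-in callables, the SSIM loss is assumed to be 1 minus a single-window (global) SSIM, and the weights in `lambdas` are placeholders, since the abstract does not report their values.

```python
def l1_loss(a, b):
    """Mean absolute difference between two equally sized flat lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def ssim_loss(a, b, data_range=1.0):
    """Assumed form of the SSIM loss: 1 - SSIM over one global window.

    Practical SSIM uses local sliding windows; the global version is a
    simplification for illustration only.
    """
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    n = len(a)
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((x - mu_a) * (x - mu_a) for x in a) / n
    var_b = sum((y - mu_b) * (y - mu_b) for y in b) / n
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(a, b)) / n
    ssim = ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a * mu_a + mu_b * mu_b + c1) * (var_a + var_b + c2)
    )
    return 1.0 - ssim


def combined_loss(syn_w, syn_m, W, M, lambdas=(1.0, 1.0, 1.0, 1.0)):
    """L = L1 and SSIM terms in both directions plus lambda_4 * L_cyc."""
    lam1, lam2, lam3, lam4 = lambdas  # placeholder weights (hypothetical)
    W_hat = syn_w(M)  # maps -> weighted images
    M_hat = syn_m(W)  # weighted images -> maps
    # Cycle term: W -> M_hat -> W and M -> W_hat -> M should return to the source.
    l_cyc = l1_loss(syn_w(M_hat), W) + l1_loss(syn_m(W_hat), M)
    return (
        l1_loss(W_hat, W)
        + lam1 * l1_loss(M_hat, M)
        + lam2 * ssim_loss(W_hat, W)
        + lam3 * ssim_loss(M_hat, M)
        + lam4 * l_cyc
    )


# Toy data: the "true" relation is W = 2 * M (purely illustrative).
M = [0.1, 0.2, 0.3]
W = [0.2, 0.4, 0.6]
perfect_w = lambda m: [2 * v for v in m]
perfect_m = lambda w: [v / 2 for v in w]
biased_m = lambda w: [v / 2 + 0.05 for v in w]

loss_perfect = combined_loss(perfect_w, perfect_m, W, M)
loss_biased = combined_loss(perfect_w, biased_m, W, M)
# Separate (single-direction) training corresponds to lambda_4 = 0.
loss_no_cycle = combined_loss(perfect_w, biased_m, W, M, lambdas=(1.0, 1.0, 1.0, 0.0))
```

In this toy setting a pair of mutually inverse networks gives a loss of essentially zero, while a biased map synthesizer is penalized both directly and through the cycle term. In practice the same expression would be evaluated on tensors inside a deep learning framework so that gradients flow to both networks.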

Data acquisition and preprocessing

We scanned 15 multiple sclerosis patients on a 3T Siemens scanner (12 for training and 3 for testing). The acquired contrast-weighted images included T1 MPRAGE, T1 GRE, and T2 FLAIR images. We used a T1-T2-T1$$$\rho$$$ Multitasking sequence4 to obtain T1 maps, T2 maps, and proton density maps (T1$$$\rho$$$ maps were ignored, as they did not have corresponding T1$$$\rho$$$-contrast-weighted images). All the images and quantitative maps were coregistered using ANTS5. Each slice of the weighted images was normalized by its 95th-percentile intensity. The paired weighted images and quantitative maps were used as multi-channel input and output for the networks.
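The slice-wise normalization step can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the percentile is taken over all voxels in a slice (the abstract does not say whether a brain mask was used), linear interpolation between order statistics is assumed, and the `eps` guard against empty slices is an addition.

```python
def percentile(values, q):
    """q-th percentile (0 to 100) using linear interpolation."""
    ordered = sorted(values)
    if not ordered:
        raise ValueError("percentile of an empty sequence")
    pos = (len(ordered) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    frac = pos - lo
    return ordered[lo] + (ordered[hi] - ordered[lo]) * frac


def normalize_slices(volume, q=95.0, eps=1e-8):
    """Divide every slice by its own q-th percentile intensity.

    volume: list of slices, each slice a list of rows of floats.
    eps avoids division by zero on all-zero slices (an added safeguard).
    """
    normalized = []
    for sl in volume:
        flat = [v for row in sl for v in row]
        scale = max(percentile(flat, q), eps)
        normalized.append([[v / scale for v in row] for row in sl])
    return normalized


# Two toy 2x2 slices with very different intensity scales.
volume = [
    [[0.0, 10.0], [20.0, 30.0]],
    [[0.0, 1.0], [2.0, 3.0]],
]
normalized = normalize_slices(volume)
```

After normalization, each slice has a 95th-percentile intensity of approximately 1, which puts the weighted contrasts on a comparable scale regardless of the arbitrary scanner intensity units.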

Evaluation

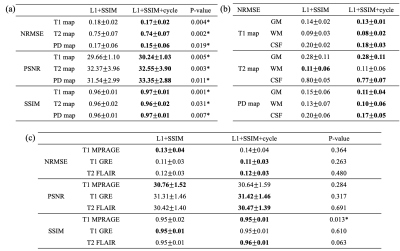

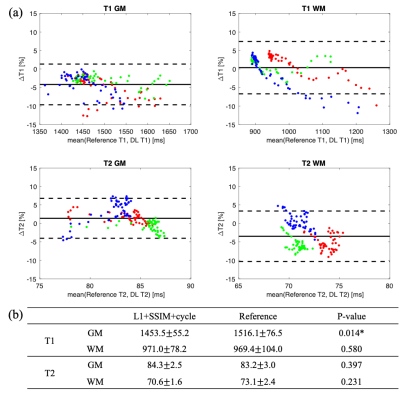

To demonstrate the benefits of the proposed combined training scheme, we also trained the networks separately without cycle consistency loss ($$$\lambda_4=0$$$; Figure 1(b)). To quantitatively evaluate the synthetic weighted images and quantitative maps, normalized root mean squared error (NRMSE), peak signal-to-noise ratio (PSNR), and SSIM were calculated. For the quantitative maps, we measured T1 and T2 values in gray matter (GM) and white matter (WM). Segmentations of GM, WM, and cerebrospinal fluid (CSF) were obtained using FAST6. Bland-Altman analysis was performed between the proposed method and the Multitasking reference. Two-way repeated measures ANOVAs were conducted for statistical analysis of the obtained quantitative metrics and T1/T2 values. The significance level was set at P < 0.05.
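For reference, the error metrics and the Bland-Altman summary can be computed along the following lines. This is a sketch, not the evaluation code used for the abstract: NRMSE is assumed to be normalized by the root mean square of the reference (other conventions divide by the range or the mean), PSNR is assumed to use the reference intensity range as the peak, the limits of agreement use the conventional 1.96 standard deviations, and the T1 values in the example are made up.

```python
import math
import statistics


def mse(pred, ref):
    """Mean squared error between two equally sized flat lists."""
    return sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref)


def nrmse(pred, ref):
    """RMSE divided by the RMS of the reference (assumed convention)."""
    ref_ms = sum(r * r for r in ref) / len(ref)
    return math.sqrt(mse(pred, ref) / ref_ms)


def psnr(pred, ref, data_range=None):
    """PSNR in dB; the peak defaults to the reference intensity range."""
    if data_range is None:
        data_range = max(ref) - min(ref)
    err = mse(pred, ref)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(data_range ** 2 / err)


def bland_altman(method, reference):
    """Return (bias, lower limit, upper limit) of agreement."""
    diffs = [m - r for m, r in zip(method, reference)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical per-subject mean T1 values in ms (illustrative only).
t1_synth = [1010.0, 990.0, 1020.0]
t1_ref = [1000.0, 1000.0, 1000.0]
bias, lower, upper = bland_altman(t1_synth, t1_ref)
percent_bias = 100.0 * bias / statistics.mean(t1_ref)
```

Expressing the bias as a percentage of the mean reference value, as in the last line, is one way to judge whether a statistically significant bias is also large in practical terms.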

Results

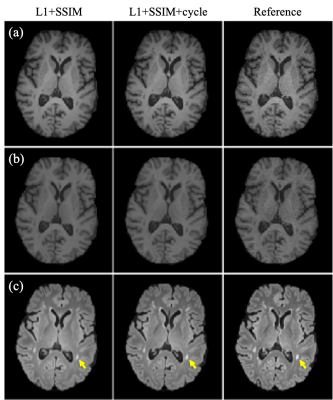

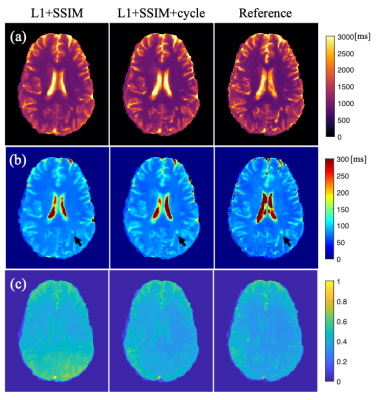

Examples of synthetic weighted images and quantitative maps are shown in Figure 2 and Figure 3, respectively. Table 1 shows a summary of the quantitative metrics. For contrast-weighted images, similar metrics were achieved for the two deep learning approaches (i.e., with or without cycle consistency loss), but adding the cycle consistency loss made the lesions on T2 FLAIR more visible (Figure 2). For quantitative maps, compared with separate single-direction training, the combined training using cycle consistency loss provided less blurry results (especially for T2 maps) and better delineation of lesions (Figure 3). As shown in Table 1(a), the proposed combined training approach achieved significantly lower NRMSE and significantly higher PSNR and SSIM than the method without cycle consistency loss. While the NRMSE of T2 maps for both methods was high, Table 1(b) shows that most errors came from the CSF regions. Figure 4 shows the T1/T2 measurements in gray matter and white matter. The T1 values in white matter and the T2 values in both tissues produced by our method agreed well with the reference, without significant differences. For T1 values in gray matter, although a statistically significant bias between the proposed method and the reference was observed, the Bland-Altman plots show that the bias was small (<5%).

Discussion

In this work, we used cycle consistency loss to train two neural networks jointly for bidirectional translation between conventional weighted images and quantitative maps. Our method generated synthetic weighted images with good contrast and provided T1 and T2 maps with reasonable values. While we observed large NRMSE for the synthetic T2 maps, separate evaluation on different tissues showed that most errors came from CSF. This was expected, since the CSF signal is nulled on T2 FLAIR and no other input contrast provided the T2 information. We observed a significant bias in the T1 values measured in gray matter between the proposed method and the reference. This may be related to the small size of the testing dataset (N=3). More data is required to further validate our method in future work.

Conclusion

We developed a deep learning-based method to perform a bidirectional synthesis of contrast-weighted images and quantitative maps. With the proposed method, both qualitative weighted images and quantitative parametric maps can be obtained using a weighted-only or mapping-only acquisition.

Acknowledgements

This work was supported by NIH R01EB028146.

References

1. Wang T, Lei Y, Fu Y, Curran WJ, Liu T, Yang X. Medical Imaging Synthesis using Deep Learning and its Clinical Applications: A Review. arXiv preprint arXiv:2004.10322. 2020.

2. Zhu JY, Park T, Isola P, Efros AA. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE international conference on computer vision. 2017; pp. 2223-2232.

3. Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention, Springer. 2015; pp. 234–241.

4. Ma S, Wang N, Fan Z, Kaisey M, Sicotte NL, Christodoulou AG, et al. Three-Dimensional Whole-Brain Simultaneous T1, T2, and T1ρ Quantification using MR Multitasking: Method and Initial Clinical Experience in Tissue Characterization of Multiple Sclerosis. Magn Reson Med. 2020, in press.

5. Avants BB, Tustison N, Song G. Advanced normalization tools (ANTS). Insight J. 2009;2(365):1-35.

6. Zhang Y, Brady M, Smith S. Segmentation of brain MR images through a hidden Markov random field model and the expectation-maximization algorithm. IEEE transactions on medical imaging. 2001;20(1):45-57.

Figures