0278

Anomaly-aware multi-contrast deep learning model for reduced gadolinium dose in contrast-enhanced brain MRI - a feasibility study

¹Subtle Medical Inc., Menlo Park, CA, United States

Synopsis

Deep learning (DL) has recently shown promise in addressing the safety concerns associated with gadolinium-based contrast agents (GBCAs). Recent studies have shown that DL-based algorithms can reconstruct contrast-enhanced MR images from acquisitions that use only a fraction of the standard dose. This work investigates the feasibility of improving the performance of such DL algorithms using multi-contrast MRI data combined with an attention mechanism based on unsupervised anomaly detection.

Introduction

Deep learning (DL) has gained major traction for its potential to mitigate the safety risks associated with GBCAs in MRI exams. Recent studies have shown that DL can reconstruct high-quality contrast-enhanced images from 3D T1w images acquired with only a fraction of the standard dose, in diverse clinical settings [1, 2]. However, because of differences in the timing between contrast injection and image acquisition, and the nature of certain slowly enhancing lesions, the low-dose image may lack enhancement signal in some cases under the previously proposed scan protocol and DL framework. This is a roadblock to wide clinical adoption. This work aims to address this issue using additional image contrasts (such as T2 and FLAIR) and an unsupervised attention mechanism for inherent anomaly detection.

Methods

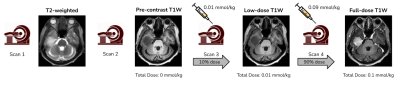

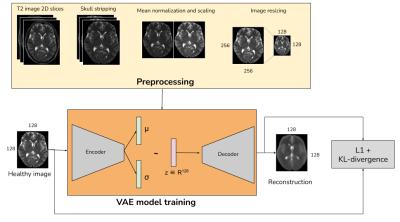

Data & Preprocessing

With IRB approval and informed patient consent, images from 28 patients (22 for training and 6 for validation) were retrospectively selected for training the dose-reduction DL model. The scan protocol for this in-house dataset is shown in Fig. 1: four images were obtained for each patient in a single imaging session, namely three 3D T1-weighted images (pre-contrast, 10%-dose contrast-enhanced at 0.01 mmol/kg, and full-dose contrast-enhanced at 0.1 mmol/kg) and one 2D T2-weighted image. As described in [2], the T1-weighted images were skull-stripped, co-registered, and intensity-normalized. The T2-weighted images were skull-stripped using the HD-BET tool [3] and registered to the pre-contrast T1-weighted image using the SimpleElastix library [4]. Relative scaling between the T1- and T2-weighted images was performed by normalizing each image by its mean value. The overall preprocessing workflow is shown in Fig. 2.
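The relative T1/T2 scaling step can be illustrated with a short NumPy sketch. It assumes that each volume is divided by its mean intensity computed inside a brain mask; the helper name `mean_normalize` and the use of the brain mask for the mean are assumptions for illustration, since the abstract only states that images were normalized by their respective mean values.

```python
import numpy as np


def mean_normalize(volume: np.ndarray, brain_mask: np.ndarray) -> np.ndarray:
    """Divide a volume by its mean intensity inside the brain mask.

    Hypothetical helper: restricting the mean to brain voxels is an
    assumption, not a detail stated in the abstract.
    """
    mean_value = volume[brain_mask > 0].mean()
    if mean_value == 0:
        raise ValueError("mean intensity inside the brain mask is zero")
    return volume / mean_value


# Toy skull-stripped volumes: T2 intensities on a different scale from T1.
rng = np.random.default_rng(0)
mask = np.zeros((4, 8, 8), dtype=bool)
mask[:, 2:6, 2:6] = True
t1 = rng.uniform(100.0, 200.0, size=mask.shape) * mask
t2 = rng.uniform(1000.0, 3000.0, size=mask.shape) * mask

t1_scaled = mean_normalize(t1, mask)
t2_scaled = mean_normalize(t2, mask)
# After scaling, both volumes have a brain mean of approximately 1.0.
print(round(float(t1_scaled[mask].mean()), 6), round(float(t2_scaled[mask].mean()), 6))
```

Putting both contrasts on a common mean-based scale keeps the T1 and T2 input channels in comparable intensity ranges without assuming anything about their absolute units.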

Unsupervised anomaly detection (UAD)

(1) Dataset & preprocessing

T2-weighted images were observed to have hyperintense regions in and around the site of a tumor or lesion. This work therefore used the unsupervised anomaly detection model proposed in [5], adapted to T2-weighted images. The anomaly detection model was trained on the IXI public dataset [6], which contains T2-weighted images from 578 cognitively normal individuals. The dataset was split into 405 training and 173 validation samples. The T2-weighted images were skull-stripped using the HD-BET tool [3], mean-normalized, and resampled to an image size of 128 × 128.
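The slice-level preprocessing for the anomaly detection model (mean normalization followed by resampling to 128 × 128) might look like the NumPy sketch below. The bilinear, corner-aligned interpolation and the choice to compute the mean over non-zero (brain) pixels are assumptions; the function names are hypothetical and not taken from [5] or from the authors' code.

```python
import numpy as np


def resize_bilinear(image: np.ndarray, out_shape: tuple[int, int]) -> np.ndarray:
    """Resize a 2D image with bilinear interpolation (corner-aligned grid)."""
    in_h, in_w = image.shape
    out_h, out_w = out_shape
    # Map every output row/column index to a fractional input coordinate.
    rows = np.linspace(0, in_h - 1, out_h)
    cols = np.linspace(0, in_w - 1, out_w)
    r0 = np.floor(rows).astype(int)
    c0 = np.floor(cols).astype(int)
    r1 = np.minimum(r0 + 1, in_h - 1)
    c1 = np.minimum(c0 + 1, in_w - 1)
    wr = (rows - r0)[:, None]  # vertical interpolation weights
    wc = (cols - c0)[None, :]  # horizontal interpolation weights
    top = image[np.ix_(r0, c0)] * (1 - wc) + image[np.ix_(r0, c1)] * wc
    bottom = image[np.ix_(r1, c0)] * (1 - wc) + image[np.ix_(r1, c1)] * wc
    return top * (1 - wr) + bottom * wr


def preprocess_slice(slice_2d: np.ndarray, size: int = 128) -> np.ndarray:
    """Mean-normalize a skull-stripped 2D slice, then resample it to size x size.

    Assumption: the mean is taken over non-zero pixels, since skull
    stripping sets the background to zero.
    """
    brain = slice_2d[slice_2d > 0]
    if brain.size == 0:
        return np.zeros((size, size))
    return resize_bilinear(slice_2d / brain.mean(), (size, size))


# Toy 256 x 256 slice with a uniform "brain" region of intensity 500.
toy = np.zeros((256, 256))
toy[64:192, 64:192] = 500.0
out = preprocess_slice(toy)
print(out.shape)  # (128, 128)
```

In practice a library resampler would typically be used; the hand-written version only makes the interpolation explicit.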

(2) VAE model

The VAE used the unified encoder and decoder network architecture described in [5], with a dense latent space of dimension 128. The VAE was trained on 2D image slices: each input slice was encoded into a learned mean and variance, from which a latent sample was drawn and decoded into a reconstruction. The model was trained with an L1 reconstruction loss combined with a weighted Kullback-Leibler (KL) divergence loss for 100 epochs, using the Adam optimizer with early stopping, on an NVIDIA Tesla V100 GPU.
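The sampling step and training objective described above can be written out as a framework-agnostic NumPy sketch: the reparameterization trick for drawing a latent sample, the closed-form KL divergence to a standard normal prior, and the L1-plus-weighted-KL loss. The KL weight value is a hypothetical placeholder (the abstract does not report it), and actual training would use an autodiff framework rather than NumPy.

```python
import numpy as np


def reparameterize(mu: np.ndarray, log_var: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Draw z = mu + sigma * eps with eps ~ N(0, I) (reparameterization trick)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def kl_divergence(mu: np.ndarray, log_var: np.ndarray) -> float:
    """KL(N(mu, sigma^2) || N(0, I)), summed over latent dims, averaged over the batch."""
    kl_per_sample = -0.5 * np.sum(1.0 + log_var - mu**2 - np.exp(log_var), axis=1)
    return float(kl_per_sample.mean())


def vae_loss(x, x_recon, mu, log_var, kl_weight=1e-3):
    """L1 reconstruction loss plus a weighted KL term.

    kl_weight is a hypothetical value; the abstract does not report it.
    """
    l1 = float(np.mean(np.abs(x - x_recon)))
    return l1 + kl_weight * kl_divergence(mu, log_var)


rng = np.random.default_rng(0)
batch, latent_dim = 4, 128  # latent dimension of 128, as stated in the abstract
mu = np.zeros((batch, latent_dim))
log_var = np.zeros((batch, latent_dim))
z = reparameterize(mu, log_var, rng)  # one latent sample per slice, shape (4, 128)
x = rng.random((batch, 128, 128))
# Perfect reconstruction and a posterior equal to the prior give zero loss.
print(vae_loss(x, x, mu, log_var))  # 0.0
```

Weighting the KL term below 1 trades latent-space regularity for sharper reconstructions, which matters here because the residual between input and reconstruction is what later reveals anomalies.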

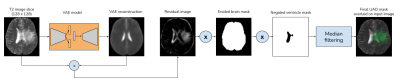

(3) Post-processing

Because anomalous samples lie outside the distribution encoded in the latent space, the model reconstructs them with high error. As a result, the pixel-wise residual (i.e., the absolute difference between the input and the VAE reconstruction) highlights the anomalous regions. The residual image was then thresholded at a prior quantile of the pixel values. An eroded brain mask was applied to remove the sharp hyperintense regions at the brain-mask boundary. Because the ventricles are hyperintense in T2-weighted images, they were removed using the VentMapp3r tool [8] to obtain the anomaly mask. Finally, the anomaly mask was resized to the dimensions of the input image to obtain the final UAD mask. The overall UAD mask generation steps are illustrated in Fig. 3.
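The post-processing chain above (residual, quantile threshold, eroded brain mask, ventricle removal, resize) can be sketched in pure NumPy as follows. Several details are assumptions for illustration: the "prior" threshold is taken as the 98th percentile of residuals on normal data, the erosion uses a 3 × 3 square element for three iterations, and the resize is nearest-neighbour so the mask stays binary. The ventricle mask is simply passed in; it stands in for the VentMapp3r output and is not produced by that tool here. All function names are hypothetical.

```python
import numpy as np


def binary_erode(mask: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Binary erosion with a 3x3 square structuring element (pure NumPy)."""
    out = mask.astype(bool)
    h, w = out.shape
    for _ in range(iterations):
        padded = np.pad(out, 1, mode="constant", constant_values=False)
        eroded = np.ones_like(out)
        # A pixel survives only if all 9 pixels in its 3x3 neighbourhood are set.
        for dr in range(3):
            for dc in range(3):
                eroded &= padded[dr:dr + h, dc:dc + w]
        out = eroded
    return out


def resize_nearest(mask: np.ndarray, out_shape: tuple[int, int]) -> np.ndarray:
    """Nearest-neighbour resize, which keeps a binary mask binary."""
    in_h, in_w = mask.shape
    rows = np.arange(out_shape[0]) * in_h // out_shape[0]
    cols = np.arange(out_shape[1]) * in_w // out_shape[1]
    return mask[np.ix_(rows, cols)]


def uad_mask(image, reconstruction, brain_mask, ventricle_mask, threshold,
             out_shape, erosion_iterations=3):
    """Residual -> threshold -> eroded brain mask -> ventricle removal -> resize."""
    residual = np.abs(image - reconstruction)                # pixel-wise residual
    anomaly = residual > threshold                           # prior-quantile threshold
    anomaly &= binary_erode(brain_mask, erosion_iterations)  # drop brain-rim artifacts
    anomaly &= ~ventricle_mask.astype(bool)                  # drop hyperintense ventricles
    return resize_nearest(anomaly, out_shape)                # back to input resolution


rng = np.random.default_rng(0)
# Hypothetical prior threshold: 98th percentile of residuals on normal data.
normal_residuals = np.abs(0.05 * rng.standard_normal(10_000))
threshold = np.quantile(normal_residuals, 0.98)

brain = np.zeros((128, 128), dtype=bool)
brain[16:112, 16:112] = True
ventricles = np.zeros_like(brain)
ventricles[56:72, 40:56] = True
reconstruction = brain.astype(float)  # toy VAE output: anomalies not reconstructed
image = reconstruction.copy()
image[60:70, 80:90] += 2.0            # toy lesion
image[ventricles] += 2.0              # bright ventricles
mask = uad_mask(image, reconstruction, brain, ventricles, threshold, (256, 256))
print(mask.shape, int(mask.sum()))  # (256, 256) 400
```

In this toy case only the lesion survives: the ventricles exceed the threshold but are masked out, and the 10 × 10 lesion becomes 20 × 20 pixels after upsampling.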

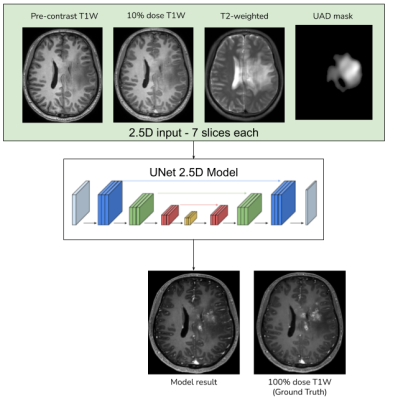

Multicontrast DL model with attention mechanism

Using the anomaly detection model described above, UAD masks were generated for the in-house T2-weighted images. The UNet architecture from [2] was used, with 2.5D slices of the pre-contrast T1W, low-dose T1W, and T2W images and the UAD mask as input channels, as shown in Fig. 4. The model was trained with the full-dose image as the ground truth, using the combination of L1, SSIM, perceptual, and adversarial losses described in [2]. In the original DL model, the L1 loss was weighted by the enhancement signal computed between the low-dose and zero-dose images. To give the model an anomaly-aware attention mechanism, the proposed model weights the L1 loss using the UAD mask.
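A minimal NumPy sketch of the two ingredients described above: assembling 2.5D multi-contrast input channels, and an L1 loss weighted by the UAD mask. The number of neighbouring slices, the edge clamping, applying the 2.5D stacking to the UAD mask as well, and the `1 + alpha * mask` weighting form are all assumptions for illustration; the abstract does not specify how the mask is converted into loss weights, and the function names are hypothetical.

```python
import numpy as np


def make_25d_input(pre_t1, low_t1, t2, uad, index, context=2):
    """Stack 2.5D neighbourhoods of four (slices, H, W) volumes as channels.

    `context` (a hypothetical value) neighbouring slices are taken on each
    side of `index`; indices are clamped at the volume edges.
    """
    n_slices = pre_t1.shape[0]
    idx = np.clip(np.arange(index - context, index + context + 1), 0, n_slices - 1)
    return np.concatenate([vol[idx] for vol in (pre_t1, low_t1, t2, uad)], axis=0)


def uad_weighted_l1(pred, target, uad_mask, alpha=1.0):
    """Mean absolute error with per-pixel weights 1 + alpha * UAD mask.

    The 1 + alpha * mask form and alpha are assumptions for illustration.
    """
    weights = 1.0 + alpha * uad_mask
    return float(np.mean(weights * np.abs(pred - target)))


rng = np.random.default_rng(0)
shape = (20, 64, 64)
pre, low, t2 = (rng.random(shape) for _ in range(3))
uad = np.zeros(shape)
uad[8:12, 20:30, 20:30] = 1.0
x = make_25d_input(pre, low, t2, uad, index=10)
print(x.shape)  # (20, 64, 64): 4 volumes x 5 slices

pred, target = np.zeros((64, 64)), np.ones((64, 64))
print(uad_weighted_l1(pred, target, uad[10]))  # 1.0244140625, i.e. (4096 + 100) / 4096
```

Adding the mask to a baseline weight of 1, rather than using the mask alone, keeps a non-zero gradient outside the detected anomalies while emphasizing errors inside them.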

Results

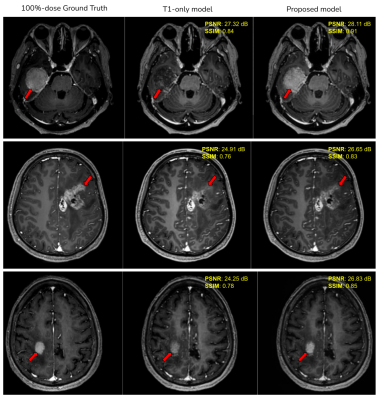

Fig. 5 shows examples of qualitative improvement in the enhancement patterns of the tumors, along with quantitative improvements in PSNR and SSIM. The average end-to-end processing time of the proposed model (including UAD mask generation) was 75 seconds on a single NVIDIA Tesla V100 GPU.

Discussion

The quantitative and qualitative results on the examples shown demonstrate the feasibility of combining multi-contrast images with unsupervised anomaly detection, which can provide complementary contrast information and therefore has the potential to improve existing GBCA dose-reduction DL models. The proposed approach can be applied to other contrasts such as FLAIR and DWI. Comprehensive clinical validation and reader studies are needed to evaluate the performance of the proposed model under diverse clinical settings.

Conclusion

We have investigated the feasibility of combining multi-contrast MRI images with an unsupervised anomaly-aware attention mechanism to improve the performance of existing GBCA dose-reduction DL algorithms.

Acknowledgements

We would like to acknowledge the grant support of NIH R44EB027560.

References

1. Gong, E., Pauly, J. M., Wintermark, M., Zaharchuk, G. Deep learning enables reduced gadolinium dose for contrast-enhanced brain MRI. J Magn Reson Imaging. 2018; 48(2):330-340.

2. Pasumarthi, S., Tamir, J., Gong, E., Zaharchuk, G., Zhang, T. Toward a site and scanner-generic deep learning model for reduced gadolinium dose in contrast-enhanced brain MRI. Proceedings of ISMRM 2020; 1286.

3. Isensee, F., Schell, M., Tursunova, I., Brugnara, G., Bonekamp, D., Neuberger, U., Wick, A., Schlemmer, H. P., Heiland, S., Wick, W., Bendszus, M., Maier-Hein, K. H., Kickingereder, P. Automated brain extraction of multi-sequence MRI using artificial neural networks. Hum Brain Mapp. 2019; 1-13. https://doi.org/10.1002/hbm.24750.

4. Marstal, K., Berendsen, F., Staring, M., Klein, S. SimpleElastix: A User-Friendly, Multilingual Library for Medical Image Registration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) 2016; 574-582.

5. Baur, C., Denner, S., Wiestler, B., Albarqouni, S., Navab, N. Autoencoders for Unsupervised Anomaly Segmentation in Brain MR Images: A Comparative Study. arXiv.

6. IXI brain development dataset - https://brain-development.org/ixi-dataset/

7. ADNI dataset - http://adni.loni.usc.edu/

8. VentMapp3r - https://github.com/mgoubran/VentMapp3r
