0063

Results of the 2020 fastMRI Brain Reconstruction Challenge

1NYU School of Medicine, New York, NY, United States, 2Facebook AI Research, New York, NY, United States, 3AIRS Medical, Seoul, Korea, Republic of, 4Yonsei University, Seoul, Korea, Republic of, 5Siemens Healthineers, Princeton, NJ, United States, 6Siemens Healthcare GmbH, Erlangen, Germany, 7CEA (NeuroSpin) & Inria Saclay (Parietal), Université Paris-Saclay, Gif-sur-Yvette, France, 8Département d’Astrophysique, CEA-Saclay, Gif-sur-Yvette, France, 9Radboud University Medical Center, Nijmegen, Netherlands, 10Amsterdam UMC, Amsterdam, Netherlands, 11Facebook AI Research, Menlo Park, CA, United States

Synopsis

The next round of the fastMRI reconstruction challenge took place, this time using anatomical brain data. Submissions were ranked by SSIM, and the resulting finalists were again ranked by 6 radiologists. Cases with clear SSIM separation also received the highest radiologists’ rankings, in particular the winning reconstructions. Most 4x track reconstructions exhibited desirable image quality, with some exceptions that showed anatomy-like hallucinations. Radiologist sentiment decreased for the 8x and Transfer tracks, indicating that these may require further investigation.

Introduction

The collection of raw data for research on machine-learning-based methods is difficult and expensive. Many research groups lack the organizational infrastructure for data collection at the necessary scale. The fastMRI project has attempted to advance community-based scientific synergy in MRI by i) releasing large datasets of raw k-space and DICOM images,1,2 available to almost any researcher, and ii) hosting public leaderboards and open competitions.3 The 2020 fastMRI Challenge continues the 2019 challenge3 with a few key differences, described below.

Methods

Hallmarks of this year’s challenge:

- The target anatomy is brain rather than knee.1

- Radiologists were asked to rate images based on quality of depiction of pathology, rather than general image quality impression.

- We added a designated track, called the Transfer track, where participants were asked to run model inference on a mix of data from vendors (GE and Philips) that were not represented in the training data (Siemens only).

- Subsampling masks were (pseudo-)equispaced, modified for exact 4-fold/8-fold sampling rates despite the fully-sampled center. The latter was kept for its utility for autocalibrating parallel imaging methods4,5,6 and compressed sensing7.
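
The mask design in the last item above can be illustrated with a minimal sketch. This is not the challenge's actual mask code: the function name `equispaced_mask`, the 320-column width, and the center fractions of 0.08 and 0.04 are assumptions chosen for illustration. The idea is to fix the total line budget at exactly `num_cols / acceleration`, spend part of it on the fully-sampled center, and spread the remainder as evenly as possible over the outer columns.

```python
def equispaced_mask(num_cols, acceleration, center_fraction):
    """Return a list of 0/1 flags, one per phase-encoding column.

    Illustrative sketch only; parameter names and values are assumptions.
    """
    num_center = round(num_cols * center_fraction)  # fully-sampled center lines
    num_total = round(num_cols / acceleration)      # exact sampling budget
    if num_center > num_total:
        raise ValueError("center block exceeds the sampling budget")

    # Place the fully-sampled block in the middle of k-space.
    start = (num_cols - num_center) // 2
    center = set(range(start, start + num_center))
    mask = [1 if c in center else 0 for c in range(num_cols)]

    # Spread the remaining lines evenly over the columns outside the center.
    outer = [c for c in range(num_cols) if c not in center]
    num_outer = num_total - num_center
    for i in range(num_outer):
        mask[outer[(i * len(outer)) // num_outer]] = 1
    return mask


mask_4x = equispaced_mask(320, 4, 0.08)
mask_8x = equispaced_mask(320, 8, 0.04)
print(sum(mask_4x), sum(mask_8x))  # 80 40
```

Retrospective subsampling then amounts to zeroing every k-space column whose flag is 0. Because the outer lines are spaced over what is left after the center block, the spacing is only approximately uniform, which is one way to read "(pseudo-)equispaced".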

Some features were identical to those of last year’s challenge3:

- Structural similarity8 (SSIM) to the ground truth was used to select the top 3 submissions. These finalist reconstructions and the ground truth were then sent to radiologists to determine the winners.

- Retrospective subsampling based on fully-sampled 2D raw k-space data.

- We kept the subsampling rates of 4-fold and 8-fold. The 2019 fastMRI challenge suggested these rates provide participants with a suitable obtainable target and a “reach” goal.

- The root sum-of-squares method was used as ground truth for quantitative evaluation.
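
As a rough illustration of the ground-truth computation in the last item above, the following sketch forms a root sum-of-squares image from fully-sampled multi-coil k-space. The array layout `(coils, rows, cols)`, the centered FFT convention, and the function name `rss_from_kspace` are assumptions made for this example, not details taken from the challenge code.

```python
import numpy as np


def rss_from_kspace(kspace):
    """Root sum-of-squares image from multi-coil k-space.

    kspace: complex array of shape (coils, rows, cols) with the k-space
    origin at the array center (an assumed convention).
    """
    # Centered 2D inverse FFT, applied to each coil separately.
    shifted = np.fft.ifftshift(kspace, axes=(-2, -1))
    coil_images = np.fft.ifft2(shifted, axes=(-2, -1), norm="ortho")
    coil_images = np.fft.fftshift(coil_images, axes=(-2, -1))
    # Combine coils: square root of the sum of squared magnitudes.
    return np.sqrt(np.sum(np.abs(coil_images) ** 2, axis=0))


# Two coils, each with a single sample at the k-space center, so each
# coil image is constant (3/4 and 4/4 for a 4x4 grid with "ortho" scaling).
kspace = np.zeros((2, 4, 4), dtype=complex)
kspace[0, 2, 2] = 3.0
kspace[1, 2, 2] = 4.0
print(rss_from_kspace(kspace)[0, 0])
```

The root sum-of-squares combination needs no coil sensitivity estimates, which makes it a simple reference against which SSIM can be computed.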

Dataset

The challenge data are part of the fastMRI dataset1 and comprise 6,970 clinical brain MRI scans (3,001 at 1.5 T, 3,969 at 3 T) collected on a range of Siemens scanners. The scans include 2D pre- and post-contrast T1, T2, and FLAIR sequences with variable reconstruction matrix sizes. Of these, 565 scans were withheld for evaluation in the challenge.

The Transfer track consisted of an additional 118 clinical scans from a 3 T GE Discovery MR750 and 212 scans collected on volunteers on Philips scanners at Philips Healthcare's North American clinical partner sites. One difficulty of the Transfer track was that the saved GE raw data did not contain frequency oversampling. Also, the Philips data, collected on volunteers, included no post-contrast imaging.

In addition to standard HIPAA-compliant deidentification, all scans were cropped at the level of the orbital rim to exclude identifiable facial features.

Evaluation Process

After the top 3 submissions were identified based on SSIM, six (two T1 post-contrast, two T2, and two FLAIR) cases from the challenge dataset were selected per track for the second phase. Cases were selected to represent a range of pathologies from intracranial tumors and strokes to normal and age-related changes.

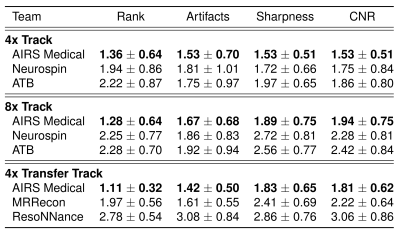

Six radiologists from different institutions ranked the reconstructions based on the quality of the depiction of the pathology. For volunteer cases in the Transfer track, small age-related imaging changes were used for ranking. Radiologists also scored each case in terms of artifacts, sharpness, and contrast-to-noise ratio (CNR) on a Likert-type scale, with 1 being the best (e.g., no artifacts) and 4 the worst (e.g., unacceptable artifacts).
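
One simple way to summarize such readings is the mean rank per finalist, sketched below. The team labels and rank values are hypothetical placeholders, not challenge results, and the function name `mean_ranks` is an assumption for illustration.

```python
from statistics import mean


def mean_ranks(rankings):
    """Average the rank of each team over all readings.

    rankings: list of dicts mapping a team label to its rank (1 = best),
    one dict per radiologist and case.
    """
    teams = rankings[0].keys()
    return {team: mean(r[team] for r in rankings) for team in teams}


# Hypothetical readings for three finalists (placeholder values only).
readings = [
    {"A": 1, "B": 2, "C": 3},
    {"A": 1, "B": 3, "C": 2},
    {"A": 2, "B": 1, "C": 3},
]
summary = mean_ranks(readings)
best = min(summary, key=summary.get)  # lowest mean rank wins
print(best)  # A
```

The same averaging applies to the Likert-type scores, where lower values are likewise better.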

Results

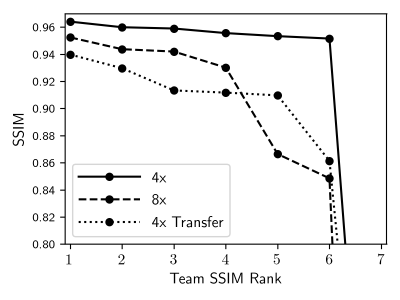

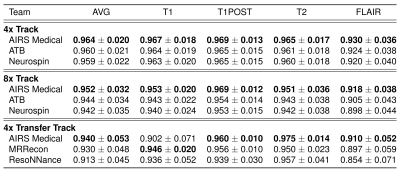

Each track saw seven submissions, which were ranked by mean SSIM as shown in Figure 1. The metrics of the top 3 finalists’ submissions are broken down in Table 1. One team consistently ranked the highest, with only one subsplit exception. The lowest SSIM scores were achieved in the Transfer track, where some models introduced artifacts in their reconstructions of GE data, such as the residual aliasing in the case shown.

Table 2 shows the results of the radiologists’ evaluations, confirming the top performer. In a case-wise breakdown, the best radiologist-determined reconstruction corresponded to the highest SSIM in 16 out of 18 cases. Aside from the winning team, differentiation among the other finalists was not strong.
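
For reference, the SSIM behind the ranking in Figure 1 follows the definition of Wang et al.8 For two image windows $x$ and $y$ with local means $\mu_x, \mu_y$, variances $\sigma_x^2, \sigma_y^2$, and covariance $\sigma_{xy}$, it reads:

$$
\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + c_1)\,(2\sigma_{xy} + c_2)}{(\mu_x^2 + \mu_y^2 + c_1)\,(\sigma_x^2 + \sigma_y^2 + c_2)}
$$

Here $c_1 = (k_1 L)^2$ and $c_2 = (k_2 L)^2$ are small stabilizing constants, $L$ is the dynamic range, and $k_1 = 0.01$, $k_2 = 0.03$ are the defaults suggested in that reference. The window and dynamic-range settings used for the challenge are not given in this abstract, so none are assumed here.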

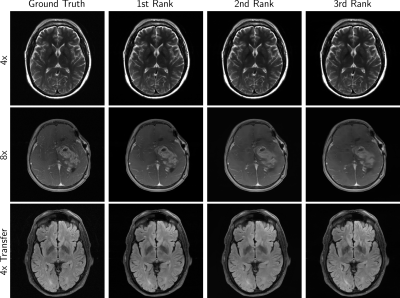

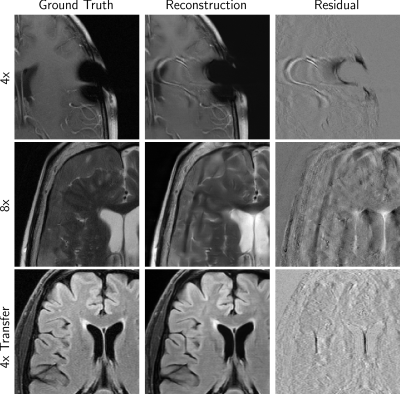

Figure 2 shows example cases from finalists of all tracks, while Figure 3 shows some undesirable results. Over-smoothing is readily apparent in the 8x example from all three of the top performers in Figure 2. Hallucination of anatomy-like features in conjunction with aliasing was apparent to a small degree in all tracks and is a potentially problematic artifact.

Discussion

One team clearly scored best in all evaluation phases, whether judged by mean radiologists’ rankings or by the respective SSIM scores. The winning model also received the best Likert-type ratings for artifacts, sharpness, and CNR.

Multiple radiologists found images in the 4x track to be similar in terms of depiction of the pathology. For the 8x and Transfer tracks, radiologist sentiment was occasionally negative. This was also affected by hallucinations such as those shown in Figure 3, which are not acceptable and are especially problematic if they mimic normal structures that are either not present or actually abnormal.

Conclusion

The competition provides insights into the current state of the art in machine learning for MR image reconstruction. The challenge confirmed areas in need of research, particularly evaluation metrics, error characterization, and AI-generated hallucinations.

Acknowledgements

Bruno Riemenschneider and Matthew Muckley contributed equally to this work.

We would like to thank all research groups that participated in the challenge. We thank the scientific advisors Jeff Fessler, Jon Tamir, and Daniel Rueckert for feedback during challenge organization. We thank the judges for the contribution of their clinical expertise and time. We would also like to thank Tullie Murrell, Mark Tygert, and C. Lawrence Zitnick for many valuable contributions to the launch and execution of this challenge. We give special thanks to Philips North America and their clinical partner sites for the data they contributed for use as part of the Transfer track.

Grant support: NIH R01EB024532 and P41EB017183.

References

1. J. Zbontar, F. Knoll, A. Sriram, M. J. Muckley, M. Bruno, A. Defazio, M. Parente, K. J. Geras, J. Katsnelson, H. Chandarana et al., “fastMRI: An open dataset and benchmarks for accelerated MRI,” arXiv preprint arXiv:1811.08839, 2018.

2. F. Knoll, J. Zbontar, A. Sriram, M. J. Muckley, M. Bruno, A. Defazio, M. Parente, K. J. Geras, J. Katsnelson, H. Chandarana et al., “fastMRI: A publicly available raw k-space and DICOM dataset of knee images for accelerated MR image reconstruction using machine learning,” Radiol.: Artificial Intell., vol. 2, no. 1, p. e190007, 2020.

3. F. Knoll, T. Murrell, A. Sriram, N. Yakubova, J. Zbontar, M. Rabbat, A. Defazio, M. J. Muckley, D. K. Sodickson, C. L. Zitnick et al., “Advancing machine learning for MR image reconstruction with an open competition: Overview of the 2019 fastMRI challenge,” Magn. Res. Med., 2020.

4. D. K. Sodickson and W. J. Manning, “Simultaneous acquisition of spatial harmonics (SMASH): fast imaging with radiofrequency coil arrays,” Magn. Res. Med., vol. 38, no. 4, pp. 591–603, 1997.

5. M. A. Griswold, P. M. Jakob, R. M. Heidemann, M. Nittka, V. Jellus, J. Wang, B. Kiefer, and A. Haase, “Generalized autocalibrating partially parallel acquisitions (GRAPPA),” Magn. Res. Med., vol. 47, no. 6, pp. 1202–1210, 2002.

6. M. Uecker, P. Lai, M. J. Murphy, P. Virtue, M. Elad, J. M. Pauly, S. S. Vasanawala, and M. Lustig, “ESPIRiT—an eigenvalue approach to autocalibrating parallel MRI: where SENSE meets GRAPPA,” Magn. Res. Med., vol. 71, no. 3, pp. 990–1001, 2014.

7. M. Lustig, D. L. Donoho, J. M. Santos, and J. M. Pauly, “Compressed sensing MRI,” IEEE Sig. Process. Mag., vol. 25, no. 2, pp. 72–82, 2008.

8. Z. Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli, “Image quality assessment: from error visibility to structural similarity,” IEEE Trans. Imag. Process., vol. 13, no. 4, pp. 600–612, 2004.

Figures