4629

Early prediction of neurodevelopmental deficits in very preterm infants using a multi-task deep transfer learning model

1The Perinatal Institute and Section of Neonatology, Perinatal and Pulmonary Biology, Cincinnati Children's Hospital Medical Center, Cincinnati, OH, United States, 2Department of Pediatrics, University of Cincinnati College of Medicine, Cincinnati, OH, United States, 3Imaging Research Center, Cincinnati Children's Hospital Medical Center, Cincinnati, OH, United States, 4Department of Radiology, University of Cincinnati College of Medicine, Cincinnati, OH, United States, 5Department of Electronic Engineering and Computing Science, University of Cincinnati, Cincinnati, OH, United States, 6Department of Radiology, Cincinnati Children's Hospital Medical Center, Cincinnati, OH, United States

Synopsis

We proposed a deep transfer learning model that fuses clinical data with brain functional connectome data obtained at term to predict neurodevelopmental deficits at two years corrected age in very preterm infants. The model was first trained in an unsupervised fashion on 884 subjects from the publicly available ABIDE repository, then fine-tuned and cross-validated on 33 very preterm infants. It achieved an AUC of 0.77, 0.63 and 0.74 for risk stratification of cognitive, language and motor deficits, respectively. Our findings demonstrate the feasibility of using deep transfer learning on connectome data to predict abnormal neurodevelopment.

INTRODUCTION

Survivors of very premature birth (i.e., ≤32 weeks gestational age) 1 remain at high risk for neurodevelopmental impairments, which in turn increases their risk for poor educational, health, and social outcomes. 2 Unfortunately, it may take 2-5 years from birth to accurately diagnose disabilities in these high-risk infants. Early, accurate identification soon after birth could pave the way for domain-specific, intensive early neuroprotective therapies during a critical window of optimal neuroplasticity. There is growing interest in developing artificial neural network approaches to predict neurodevelopmental and neurological deficits from connectome data. 3 Such models typically require training on large datasets, 4 but large neuroimaging datasets are either unavailable or expensive to obtain. Transfer learning offers a way to address this fundamental problem of insufficient training data in deep learning. 5 We developed a multi-task transfer learning enhanced deep neural network (TL-DNN) model to jointly predict multiple neurodevelopmental deficits in preterm infants, including cognitive, language, and motor deficits at two years corrected age.

METHODS

This study includes 884 subjects (age range = 7-64 years, median age = 14.7 years) from the publicly available Autism Brain Imaging Data Exchange (ABIDE) repository 6 and 33 very preterm infants (gestational age at birth 28.3 (2.4) weeks; postmenstrual age at scan 40.2 (0.5) weeks). The Nationwide Children's Hospital Institutional Review Board approved this study, and written parental informed consent was obtained for every subject. All preterm infants received the standardized Bayley Scales of Infant and Toddler Development III (Bayley-III) test at 2 years corrected age. The Bayley-III cognitive, language, and motor scores are on a scale of 40 to 160, with a mean of 100 and standard deviation (SD) of 15. Using a cutoff of 85, we grouped very preterm infants into those at high vs. low risk for moderate/severe neurodevelopmental deficits.

We employed our neonatal-optimized pipeline 7 for neonatal resting-state fMRI preprocessing using the FMRIB Software Library (FSL, Oxford University, UK), Statistical Parametric Mapping software (SPM, University College London, UK), and Artifact Detection Tools (ART, MIT, Cambridge, US). Ninety ROIs were defined based on a neonatal automated anatomical labeling (AAL) atlas. 8 Functional connectivity was defined as the temporal correlation of BOLD signals between spatially separate ROIs 9 and was calculated using the functional connectivity toolbox (CONN). 10
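As a rough illustration of the two definitions above (connectivity as the temporal correlation between ROI signals, and risk grouping by a Bayley-III cutoff of 85), the following NumPy sketch may help. It is not the CONN toolbox computation used in the study: the function names are hypothetical, the BOLD data are synthetic, and treating scores strictly below 85 as high risk is an assumption, since the text does not say how a score of exactly 85 is handled.

```python
import numpy as np

def connectivity_features(bold):
    """Vectorize ROI-to-ROI connectivity.

    bold: array of shape (n_timepoints, n_rois) with one BOLD time series per ROI.
    Returns the Pearson correlations of all unordered ROI pairs.
    """
    # Pearson correlation between every pair of ROI time series (columns).
    corr = np.corrcoef(bold, rowvar=False)
    # Keep each ROI pair once: strict upper triangle, diagonal excluded.
    rows, cols = np.triu_indices(corr.shape[0], k=1)
    return corr[rows, cols]

def risk_label(score, cutoff=85):
    """Return 1 for high risk, 0 for low risk.

    ASSUMPTION: scores strictly below the cutoff count as high risk.
    """
    return int(score < cutoff)

# Synthetic stand-in for one infant's preprocessed data: 200 volumes, 90 ROIs.
rng = np.random.default_rng(0)
bold = rng.standard_normal((200, 90))

features = connectivity_features(bold)
print(features.shape)  # (4005,)
```

With 90 ROIs there are 90 × 89 / 2 = 4005 unique ROI pairs, so under this vectorization each subject would contribute a 4005-dimensional connectome feature vector.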

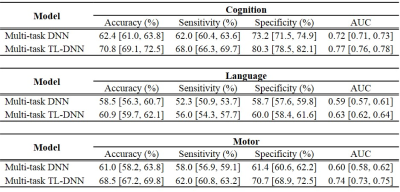

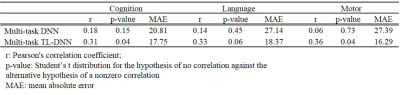

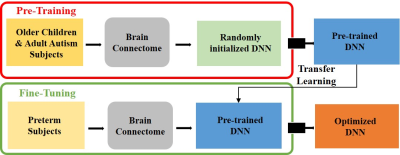

An overview of the multi-task deep transfer learning framework is shown in Figure 1. The proposed framework includes two modules: 1) pre-training (Figure 1, top red panel). We first pre-train a deep neural network (DNN) prototype in an unsupervised fashion using a stacked sparse autoencoder (SSAE) to learn a general representation of brain networks from 884 older children and adults. This DNN prototype comprises fully-connected layers and batch normalization layers. Batch normalization mitigates the internal covariate shift problem and thereby accelerates training; 2) fine-tuning (Figure 1, bottom green panel). We then adapt the pre-learned brain connectome knowledge from module 1 to better represent the neonatal connectome by fine-tuning the whole TL-DNN model using 33 very preterm subjects.

The detailed architecture of TL-DNN is illustrated in Figure 2. It contains two separate input channels, one for connectome data and one for clinical data. The two channels are later fused into a single channel for data integration. There are three parallel output channels for the cognitive, language and motor tasks, respectively. For neurodevelopmental risk classification, we used a softmax function in the output channels and cross-entropy as the loss function. For neurodevelopmental score regression, we applied a linear function in the output channels and mean-squared error as the loss function. Fine-tuning yields an optimized TL-DNN for joint neurodevelopmental outcome prediction.

We validated the proposed TL-DNN model using 5-fold cross-validation with accuracy, sensitivity, specificity, and area under the receiver operating characteristic curve (AUC) for risk stratification, and Pearson’s correlation coefficient and mean absolute error for prediction of cognitive scores.
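The two-channel, three-output layout described above can be sketched as a plain NumPy forward pass for the classification setting. This is only an illustration under stated assumptions, not the authors' implementation: the layer widths, the number of clinical variables, the ReLU activations, and the equal weighting of the three task losses are all hypothetical, and batch normalization, SSAE pre-training, and the training loop are omitted for brevity.

```python
import numpy as np

rng = np.random.default_rng(0)

def dense(n_in, n_out):
    # One fully connected layer: small random weights, zero bias.
    return rng.standard_normal((n_in, n_out)) * 0.01, np.zeros(n_out)

def relu(x):
    return np.maximum(x, 0.0)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

# HYPOTHETICAL sizes: 4005 connectome edges (90 ROIs), 5 clinical variables.
N_CONN, N_CLIN, H_CONN, H_CLIN, H_FUSED = 4005, 5, 64, 8, 32
TASKS = ("cognitive", "language", "motor")

params = {
    "conn": dense(N_CONN, H_CONN),           # connectome input channel
    "clin": dense(N_CLIN, H_CLIN),           # clinical input channel
    "fused": dense(H_CONN + H_CLIN, H_FUSED),
    "heads": {t: dense(H_FUSED, 2) for t in TASKS},  # high vs. low risk per task
}

def forward(x_conn, x_clin):
    h_conn = relu(x_conn @ params["conn"][0] + params["conn"][1])
    h_clin = relu(x_clin @ params["clin"][0] + params["clin"][1])
    # Fuse the two channels by concatenation, then one shared layer.
    h = np.concatenate([h_conn, h_clin], axis=1)
    h = relu(h @ params["fused"][0] + params["fused"][1])
    # Three parallel softmax outputs, one per neurodevelopmental domain.
    return {t: softmax(h @ w + b) for t, (w, b) in params["heads"].items()}

def multitask_cross_entropy(probs, labels):
    # Sum of per-task mean cross-entropies (ASSUMPTION: equal task weights).
    total = 0.0
    for t in TASKS:
        p_true = probs[t][np.arange(len(labels[t])), labels[t]]
        total += -np.mean(np.log(p_true + 1e-12))
    return total

# Synthetic batch of 4 subjects with random binary risk labels.
x_conn = rng.standard_normal((4, N_CONN))
x_clin = rng.standard_normal((4, N_CLIN))
labels = {t: rng.integers(0, 2, size=4) for t in TASKS}

probs = forward(x_conn, x_clin)
loss = multitask_cross_entropy(probs, labels)
```

For the regression setting described in the text, each head would instead emit a single linear output and the per-task term would be a mean-squared error; sharing the fused layer across heads is what lets the three tasks be learned jointly.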

RESULTS

We performed both risk stratification (i.e., classification; neurodevelopmental score cutoff=85) and score prediction (i.e., regression). Our TL-DNN model achieved an AUC of 0.77, 0.63 and 0.74 for risk stratification of cognitive, language and motor deficits, respectively. Compared with a DNN model without transfer learning, our model significantly improved risk stratification accuracy by 8.4% (p<0.001), 2.4% (p=0.034), and 7.5% (p<0.001) and improved the AUC by 0.05 (p<0.001), 0.04 (p<0.001) and 0.14 (p<0.001), respectively (Table 1). In addition, predicted cognitive and motor neurodevelopmental scores were significantly correlated with actual scores, with Pearson’s correlation coefficients of 0.31 (p=0.04) and 0.36 (p=0.04), respectively (Table 2).

DISCUSSIONS and CONCLUSIONS

Early and reliable identification of infants at high risk for later neurodevelopmental impairments would facilitate targeted early interventions during periods of maximal neuroplasticity to improve clinical outcomes. To mitigate concerns regarding insufficient data for training a deep learning model, we employed transfer learning in this work. We present a transfer learning enhanced deep learning model that fuses brain connectome and clinical data for early joint prediction of neurodevelopmental outcomes (cognitive, language and motor) at 2 years of age in very preterm infants. A larger study is needed to validate our approach.

Acknowledgements

This study was supported by the National Institutes of Health grants R21-HD094085, R01-NS094200, and R01-NS096037 and a Trustee grant from Cincinnati Children’s Hospital Medical Center. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. We thank the ABIDE project investigators for making their data publicly available.

References

1. Hamilton BE, Martin JA, Osterman MJ. Births: Preliminary Data for 2015. Natl Vital Stat Rep. 2016;65(3):1-15.

2. Jarjour IT. Neurodevelopmental Outcome After Extreme Prematurity: A Review of the Literature. Pediatr Neurol. 2015;52(2):143-152.

3. Barkhof F, Haller S, Rombouts SA. Resting-state functional MR imaging: a new window to the brain. Radiology. 2014;272(1):29-49.

4. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521(7553):436-444.

5. Shin HC, Roth HR, Gao M, et al. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans Med Imaging. 2016;35(5):1285-1298.

6. Di Martino A, Yan C-G, Li Q, et al. The autism brain imaging data exchange: towards a large-scale evaluation of the intrinsic brain architecture in autism. Mol Psychiatry. 2014;19(6):659.

7. He L, Parikh NA. Aberrant Executive and Frontoparietal Functional Connectivity in Very Preterm Infants With Diffuse White Matter Abnormalities. Pediatr Neurol. 2015;53(4):330-337.

8. Shi F, Yap PT, Wu G, et al. Infant brain atlases from neonates to 1- and 2-year-olds. PLoS One. 2011;6(4):e18746.

9. Betzel RF, Bassett DS. Multi-scale brain networks. Neuroimage. 2017;160:73-83.

10. Whitfield-Gabrieli S, Nieto-Castanon A. Conn: a functional connectivity toolbox for correlated and anticorrelated brain networks. Brain Connect. 2012;2(3):125-141.

Figures