4144

Physical Needle Localization using Mask R-CNN for MRI-Guided Percutaneous Interventions

1 Radiological Sciences, University of California, Los Angeles, Los Angeles, CA, United States
2 Bioengineering, University of California, Los Angeles, Los Angeles, CA, United States
3 Mechanical and Aerospace Engineering, University of California, Los Angeles, Los Angeles, CA, United States

Synopsis

A discrepancy between the physical needle location and the MRI passive needle feature can lead to needle localization errors in MRI-guided percutaneous interventions. Using physics-based simulations with different needle orientations and MR imaging parameters, we designed and trained a Mask Region-based Convolutional Neural Network (R-CNN) to automatically localize the physical needle tip and axis orientation from the MRI passive needle feature. The Mask R-CNN framework was tested on a separate set of actual phantom MR images and localized the physical needle with a median tip error of 0.74 mm and a median axis error of 0.95°.

Introduction

Needle localization is essential for the accuracy and efficiency of MRI-guided percutaneous interventions (1–4). With passive needle visualization (5), there is a discrepancy (e.g., 5–10 mm (6,7)) between the needle feature on the image and the physical needle position. Previous studies identified the sequence type, the frequency-encoding direction, and the needle orientation with respect to B0 as the main factors affecting this discrepancy, but did not propose an automated technique to estimate the physical needle position (6,7). A fast and accurate method to localize the physical needle could therefore benefit MRI-guided interventions (3,4). The objective of this study was to develop a deep-learning framework that automatically localizes the physical needle position from the passive needle feature on MRI.

Methods

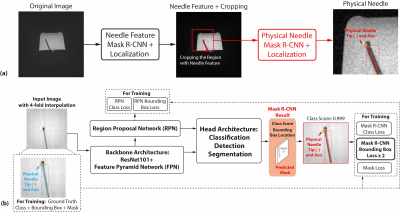

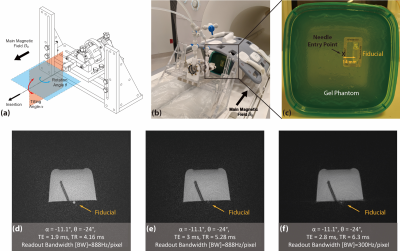

Overall Framework: We propose a framework consisting of two Mask Region-based Convolutional Neural Network (R-CNN) stages (Fig. 1). First, a previously trained “needle feature” Mask R-CNN segmented the needle feature on the input image (8). Next, the image was cropped to a patch containing the needle feature, and a newly trained “physical needle” Mask R-CNN used this patch to localize the physical needle position.

Phantom Experiments: We used a golden-angle (GA) ordered radial spoiled gradient-echo (GRE) sequence for real-time 3T MRI-guided needle insertion (20 gauge, 15 cm, Cook Medical) in gel phantoms (8,9). A needle actuator was used to control needle insertion (1,11) (Fig. 2a-c). Different imaging parameters and needle orientations (Fig. 2d-f) were used to create variations of the passive needle feature, yielding a total of 55 images.
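The hand-off between the two stages amounts to a crop around the first network's output. The following is a minimal NumPy sketch, assuming the needle-feature Mask R-CNN returns a binary mask; the function names and the 16-pixel `margin` are hypothetical choices, not the authors' settings. The returned offset is what would be needed to map the physical needle prediction from patch coordinates back to the full image.

```python
import numpy as np


def crop_needle_patch(image, feature_mask, margin=16):
    """Crop a patch around the segmented needle feature (hypothetical helper).

    image: 2D array (H, W). feature_mask: boolean array of the same shape,
    e.g. the mask from the first ("needle feature") network.
    margin: assumed number of context pixels added on every side.
    Returns the patch and the (row, col) offset of its top-left corner.
    """
    rows, cols = np.nonzero(feature_mask)
    if rows.size == 0:
        raise ValueError("empty needle-feature mask")
    # Bounding rows/cols of the feature, padded and clipped to the image.
    r0 = int(max(rows.min() - margin, 0))
    r1 = int(min(rows.max() + margin + 1, image.shape[0]))
    c0 = int(max(cols.min() - margin, 0))
    c1 = int(min(cols.max() + margin + 1, image.shape[1]))
    return image[r0:r1, c0:c1], (r0, c0)


def patch_to_image(point_rc, offset):
    """Map a (row, col) point predicted on the patch back to the full image."""
    return (point_rc[0] + offset[0], point_rc[1] + offset[1])
```

Clipping to the image bounds keeps the crop valid when the needle feature lies near the edge of the field of view.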

Simulation: Generating training data from actual MRI scans is expensive, time-consuming, and subject to measurement uncertainties. To overcome this, we simulated images based on the off-resonance effects of the sequence, accounting for needle rotation/tilting and radial sampling (12,13). Phantom data acquired with different needle orientations and imaging parameters were used to calibrate the susceptibility value of the needle material (190 ppm), ensuring that the simulations reflected the experimental conditions (Fig. 3).
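To illustrate why the needle feature is displaced, the off-resonance around the needle can be approximated with the textbook infinite-cylinder model. This is a simplified analytic stand-in, not the Fourier-based simulation of (12,13) used in this study; treating the calibrated 190 ppm value as the susceptibility difference between the needle and the surrounding gel is an assumption, and the function names are hypothetical. In a Cartesian acquisition, dividing the frequency offset by the readout bandwidth per pixel gives the apparent displacement along the frequency-encoding direction. With radial sampling the readout direction rotates from spoke to spoke, so the same offset produces direction-dependent displacement and blurring rather than a single shift.

```python
import numpy as np

# Proton gyromagnetic ratio divided by 2*pi, in Hz per tesla.
GAMMA_HZ_PER_T = 42.577e6


def cylinder_offresonance_hz(x, y, radius, delta_chi, theta_deg, b0_t=3.0):
    """Off-resonance frequency (Hz) around an infinitely long cylinder.

    Uses the analytic exterior field
        dBz / B0 = (delta_chi / 2) * sin^2(theta) * (a / r)^2 * cos(2 * phi),
    where theta is the angle between the cylinder axis and B0, r is the
    in-plane distance from the axis, and phi is the azimuth measured from the
    projection of B0 onto the cross-sectional plane (the x axis here).
    x, y and radius must share the same length unit. Points inside the
    cylinder return NaN because the needle itself produces no MR signal.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    r2 = x**2 + y**2
    phi = np.arctan2(y, x)
    sin2_theta = np.sin(np.deg2rad(theta_deg)) ** 2
    with np.errstate(divide="ignore", invalid="ignore"):
        dbz_rel = 0.5 * delta_chi * sin2_theta * radius**2 / r2 * np.cos(2 * phi)
    df_hz = GAMMA_HZ_PER_T * b0_t * dbz_rel
    return np.where(r2 >= radius**2, df_hz, np.nan)


def readout_shift_pixels(df_hz, bw_hz_per_pixel):
    """Apparent displacement along one readout direction, in pixels."""
    return np.asarray(df_hz) / bw_hz_per_pixel
```

For example, on the needle surface of a needle perpendicular to B0 (θ = 90°, φ = 0) with Δχ = 190 ppm, this model gives an offset of roughly 12 kHz at 3 T, or more than 13 pixels at 888 Hz/pixel. The (a/r)² fall-off means the shift decays quickly away from the needle, which is why the feature shape depends so strongly on orientation and bandwidth.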

Training: To train and deploy the physical needle Mask R-CNN, we used the corners of the output bounding box to define the physical needle tip and axis (Fig. 1b). Because accurate needle localization was the primary objective, the bounding box loss was weighted twice as heavily as the other losses during training. For training, 157 simulated images were created with the same imaging parameters as the phantom experiments, with needle orientation angles θ from -30° to 30° and α from 0° to -25°. Four-fold data augmentation by rescaling, translation, and additive Gaussian noise was then applied, for a total of 785 images.
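The corner-based decoding and the loss weighting can be sketched as follows. The exact corner convention is defined in Fig. 1b and is not stated in the text, so this sketch assumes the needle runs along one diagonal of the predicted box, with a caller-chosen `tip_corner`. The loss-term names (`"class"`, `"box"`, `"mask"`) are likewise hypothetical and would have to be mapped onto whichever Mask R-CNN implementation is used.

```python
import math


def box_to_tip_and_axis(box, tip_corner="bottom_right"):
    """Decode a needle tip and in-plane axis angle from a bounding box.

    box: (x1, y1, x2, y2) in image coordinates (x to the right, y downward).
    Assumption: the needle lies along one diagonal of the box; tip_corner
    names the corner holding the tip, and the opposite corner is the entry
    point. Returns (tip_xy, angle_deg), where angle_deg is the direction of
    the entry-to-tip vector measured from the +x axis.
    """
    x1, y1, x2, y2 = box
    corners = {
        "top_left": (x1, y1),
        "top_right": (x2, y1),
        "bottom_left": (x1, y2),
        "bottom_right": (x2, y2),
    }
    opposite = {
        "top_left": "bottom_right",
        "bottom_right": "top_left",
        "top_right": "bottom_left",
        "bottom_left": "top_right",
    }
    tip = corners[tip_corner]
    entry = corners[opposite[tip_corner]]
    angle_deg = math.degrees(math.atan2(tip[1] - entry[1], tip[0] - entry[0]))
    return tip, angle_deg


def weighted_total_loss(losses, box_key="box", box_weight=2.0):
    """Sum the loss terms, giving the bounding-box term twice the weight."""
    return sum(
        (box_weight if name == box_key else 1.0) * value
        for name, value in losses.items()
    )


# Training-set size: 157 base images plus 4 augmented copies of each.
N_TRAIN_TOTAL = 157 * (1 + 4)  # 785, matching the text
```

Because the angle comes from the box diagonal, a tight box directly encodes the axis orientation, and up-weighting the box term puts the training emphasis on exactly the quantities used for localization.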

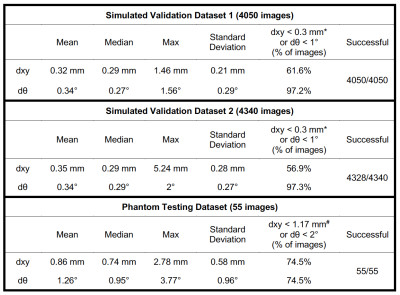

Validation: First, 810 simulated images with the same imaging parameters as the training data but different θ and α were created and augmented in the same way, to validate the network performance for different needle orientations (validation set 1; 4050 images in total). Second, 868 simulated images with different imaging parameters (TE = 2.5 ms, BW = 888 Hz/pixel; TE = 3.7 ms, BW = 300 Hz/pixel) and needle orientations different from the training data were created and augmented in the same way, to investigate the model performance for new imaging parameters (validation set 2; 4340 images in total). Localization accuracy was evaluated with two metrics: the Euclidean distance between the estimated and reference physical needle tip positions (dxy, in mm) and the absolute difference between the estimated and reference needle axis orientations (dθ, in degrees).
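The two accuracy metrics can be written down directly. This is a minimal NumPy sketch, assuming tip positions are given in pixel coordinates and converted with a known pixel spacing (the spacing is not stated in this abstract, so any value passed in is a placeholder); the function names are hypothetical, and the median summary mirrors how the phantom results are reported.

```python
import numpy as np


def tip_error_mm(tip_est_px, tip_ref_px, pixel_spacing_mm):
    """d_xy: Euclidean distance between estimated and reference tips, in mm.

    pixel_spacing_mm may be a scalar (isotropic pixels) or a (sx, sy) pair.
    """
    est = np.asarray(tip_est_px, dtype=float)
    ref = np.asarray(tip_ref_px, dtype=float)
    diff_mm = (est - ref) * np.asarray(pixel_spacing_mm, dtype=float)
    return float(np.hypot(diff_mm[0], diff_mm[1]))


def axis_error_deg(theta_est_deg, theta_ref_deg):
    """d_theta: absolute difference between needle axis orientations, in degrees."""
    return abs(float(theta_est_deg) - float(theta_ref_deg))


def summarize(errors):
    """Median and mean of a list of per-image errors."""
    errors = np.asarray(errors, dtype=float)
    return {"median": float(np.median(errors)), "mean": float(np.mean(errors))}
```

Because the needle orientations studied here span at most about ±30°, a plain absolute difference is sufficient for dθ; orientations near ±180° would need wrap-around handling.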

Testing: After validation, we retrained the physical needle Mask R-CNN using the training data together with validation set 1, and tested its performance on the set of 55 phantom images. The physical needle tip and orientation estimated by the Mask R-CNN were compared with the measured reference (Fig. 2) in terms of dxy and dθ.

Results

Example results for both validation sets showed accurate physical needle localization (Fig. 4a-b). In validation sets 1 and 2, the mean dxy was around 0.3 mm and the mean dθ around 0.3°. Example physical needle localization results on phantom data are shown in Fig. 4c-d. The proposed method accurately localized the physical needle position on images acquired with different parameters, achieving a median dxy of 0.74 mm and a median dθ of 0.95°, with a processing time of 200 ms. The results are summarized in Table 1.

Discussion

For GA radial GRE, the distance between the passive needle feature tip and the physical needle tip was around 5 mm (Fig. 3), which could lead to localization errors when targeting clinically relevant lesions of ~5 mm diameter (14). In addition, this discrepancy varies with needle orientation and imaging parameters, adding uncertainty during procedural manipulation. The experimental results show that our network can be trained with simulated data and still achieve accurate needle localization on phantom images. The median dxy and dθ (Table 1) were at the limits of the simulation model resolution and the image resolution, showing the potential of our framework to provide accurate needle position information in real time for MRI-guided interventions. Performance may be further improved by fine-tuning the networks on sets of experimental images for specific in vivo applications.

Conclusions

In this study, a deep-learning method for physical needle localization based on Mask R-CNN was developed and trained on a large set of simulated images. Phantom testing results demonstrate that the proposed framework can accurately localize the physical needle position in real time based on passive needle features on MRI.

Acknowledgements

This study was supported in part by Siemens Healthineers and UCLA Radiological Sciences. The simulation code implementation was assisted by Dr. Frank Zijlstra at UMC Utrecht.

References

1. Mikaiel S, Simonelli J, Li X, Lee Y, Lee YS, Lu D, Sung K, Wu HH. MRI-guided targeted needle placement during motion using hydrostatic actuators. Int. J. Med. Robot. Comput. Assist. Surg. 2019; (In press).

2. Tan N, Lin W-C, Khoshnoodi P, et al. In-bore 3-T MR-guided transrectal targeted prostate biopsy: prostate imaging reporting and data system version 2–based diagnostic performance for detection of prostate cancer. Radiology 2016; 283:130–139.

3. Renfrew M, Griswold M, Çavusoglu MC. Active localization and tracking of needle and target in robotic image-guided intervention systems. Auton. Robots 2018; 42:83–97.

4. Mehrtash A, Ghafoorian M, Pernelle G, et al. Automatic needle segmentation and localization in MRI with 3D convolutional neural networks: application to MRI-targeted prostate biopsy. IEEE Trans. Med. Imaging 2019; 38:1026–1036.

5. Ladd ME, Erhart P, Debatin JF, Romanowski BJ, Boesiger P, McKinnon GC. Biopsy needle susceptibility artifacts. Magn. Reson. Med. 1996; 36:646–651.

6. DiMaio SP, Kacher D, Ellis R, Fichtinger G, Hata N, Zientara G, Panych L, Kikinis R, Jolesz F. Needle artifact localization in 3T MR images. Stud. Health Technol. Inform. 2006; 119:120–125.

7. Song S-E, Cho NB, Iordachita II, Guion P, Fichtinger G, Whitcomb LL. A study of needle image artifact localization in confirmation imaging of MRI-guided robotic prostate biopsy. In: IEEE international conference on robotics and automation (ICRA) 2011; pp.4834–4839.

8. Li X, Raman SS, Lu D, Lee Y, Tsao T, Wu HH. Real-time needle detection and segmentation using Mask R-CNN for MRI-guided interventions. In: Proceedings of the ISMRM 27th annual meeting 2019; p.972.

9. Li X, Lee Y-H, Mikaiel S, Simonelli J, Tsao T-C, Wu HH. Respiratory motion prediction using fusion-based multi-rate Kalman filtering and real-time golden-angle radial MRI. IEEE Trans. Biomed. Eng. 2019; (In press).

10. Sørensen TS, Atkinson D, Schaeffter T, Hansen MS. Real-time reconstruction of sensitivity encoded radial magnetic resonance imaging using a graphics processing unit. IEEE Trans. Med. Imaging 2009; 28:1974–1985.

11. Simonelli J, Lee Y-H, Chen C-W, Li X, Lu D, Wu HH, Tsao T-C. Hydrostatic actuation for remote operations in MR environment. IEEE/ASME Trans. Mechatronics 2019; (In press).

12. Zijlstra F, Viergever MA, Seevinck PR. SMART tracking: simultaneous anatomical imaging and real-time passive device tracking for MR-guided interventions. Phys. Medica 2019; 64:252–260.

13. Zijlstra F, Bouwman JG, Braškutė I, Viergever MA, Seevinck PR. Fast Fourier-based simulation of off-resonance artifacts in steady-state gradient echo MRI applied to metal object localization. Magn. Reson. Med. 2016; 78:2035–2041.

14. Kim YK, Kim YK, Park HJ, Park MJ, Lee WJ, Choi D. Noncontrast MRI with diffusion-weighted imaging as the sole imaging modality for detecting liver malignancy in patients with high risk for hepatocellular carcinoma. Magn. Reson. Imaging 2014; 32:610–618.

15. Fessler JA. Michigan Image Reconstruction Toolbox. Available: https://web.eecs.umich.edu/~fessler/code/ Downloaded 2019.

Figures