4135

Scanner Control and Realtime Visualization via Wireless Augmented Reality

1Biomedical Engineering, Case Western Reserve University, Cleveland, OH, United States, 2Department of Radiology, School of Medicine, Case Western Reserve University, Cleveland, OH, United States

Synopsis

We implemented an augmented reality (AR) scanner interface to streamline the scanner console while allowing users to work natively in three dimensions. The Microsoft HoloLens was used to let users visualize and control the scanner wirelessly. A gesture-based interface was created, with emphasis on simplifying the scan process so that untrained, non-imaging personnel can interact with the scanner. The resulting system is simple to use and allows the operator to maintain direct sight of the patient throughout the scan.

Purpose

MRI acquisitions are inherently three-dimensional, but we control scanners and review images in two dimensions. For as long as the field has existed, slice planes, fields of view, and other acquisition parameters have been configured via menus and flat projections of slice intersections. This disconnect from three-dimensionality continues after reconstruction: slices are displayed out of context both from each other and from the patient, on a screen that often blocks the operator’s view of the subject.

Scanner control software has bloated over the years to enable highly granular control that emphasizes flexibility over intuitive use, creating barriers to use of the system by non-technicians (especially physicians without MR expertise) and introducing points for human error [1]. With the help of more autonomous protocols such as magnetic resonance fingerprinting (MRF) [2], we aim to develop a three-dimensional augmented reality (AR) interface that is both intuitive to use and capable of replacing the control and display functions of the scanner console.

Methods

We developed the control and visualization system in the Unity Engine and used a time-slice approach (Unity coroutines) for communication and user input in place of multithreading, in order to expand platform compatibility. Communication with the 3T clinical scanner (Skyra, Siemens Healthineers) was via the Access-I SDK, which enables granular remote control of the scanner over HTTP.

A gesture system was implemented to capture the user’s hand motions for interaction with the scanner. Although the gestures were developed for Windows Mixed Reality devices, the underlying interface is compatible with all Unity Engine build targets, including WebGL and mobile devices.
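The time-slice approach can be illustrated outside Unity. The following is a minimal Python sketch, not the authors' C# implementation: generators stand in for Unity coroutines, and each task runs until its next `yield` once per simulated frame, so communication and input handling interleave on a single thread. The task names `poll_scanner` and `handle_input` and the scheduler `run_frames` are hypothetical.

```python
from collections import deque
from typing import Deque, Generator, Iterable, List

Task = Generator[None, None, None]


def poll_scanner(log: List[str], polls: int) -> Task:
    """Hypothetical communication task: one status poll per frame."""
    for i in range(polls):
        log.append(f"poll {i}")
        yield  # hand control back to the scheduler until the next frame


def handle_input(log: List[str], events: Iterable[str]) -> Task:
    """Hypothetical input task: process one queued gesture per frame."""
    for event in events:
        log.append(f"input {event}")
        yield


def run_frames(tasks: Iterable[Task], max_frames: int = 1000) -> int:
    """Step every live task once per frame on a single thread.

    Returns the number of frames that were executed.
    """
    queue: Deque[Task] = deque(tasks)
    frames = 0
    while queue and frames < max_frames:
        for _ in range(len(queue)):
            task = queue.popleft()
            try:
                next(task)          # run the task up to its next yield
                queue.append(task)  # still alive: schedule it for the next frame
            except StopIteration:
                pass                # task finished: drop it
        frames += 1
    return frames


if __name__ == "__main__":
    log: List[str] = []
    run_frames([poll_scanner(log, 2), handle_input(log, ["tap", "drag"])])
    print(log)
```

Because no task is ever preempted, shared state needs no locks, which is one reason this pattern ports to targets without thread support.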

After the user dons the AR headset, an origin is established in world space via an AR marker (Vuforia) or a shared spatial anchor. This origin is then mapped to the scanner’s coordinate system, and virtual buttons are instantiated around the user for gesture- and gaze-based control. Bidirectional communication is established with the scanner via HTTP through a registration handshake that opens WebSockets for data transfer and establishes permissions for the client to control the scanner directly.
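Mapping the marker-defined origin to the scanner's coordinate system amounts to a rigid transform. The sketch below assumes the marker pose is available as a rotation matrix and a position in world space, and that the marker frame coincides with the scanner frame; both are illustrative assumptions, and all function names are hypothetical. It also ignores any handedness or axis-convention change between the rendering engine and the scanner, which a real implementation would need to handle.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def rotation_about_y(angle_deg: float) -> Mat3:
    """Rotation matrix for a turn of angle_deg about the vertical (y) axis."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def mat_vec(m: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(m[r][k] * v[k] for k in range(3)) for r in range(3))


def transpose(m: Mat3) -> Mat3:
    """Transpose a 3x3 matrix (the inverse, for a pure rotation)."""
    return [[m[c][r] for c in range(3)] for r in range(3)]


def world_to_scanner(p_world: Vec3, marker_rot: Mat3, marker_pos: Vec3) -> Vec3:
    """p_scanner = R^T (p_world - t), with R, t the marker pose in world space."""
    offset = tuple(p - t for p, t in zip(p_world, marker_pos))
    return mat_vec(transpose(marker_rot), offset)


def scanner_to_world(p_scanner: Vec3, marker_rot: Mat3, marker_pos: Vec3) -> Vec3:
    """p_world = R p_scanner + t, the inverse of world_to_scanner."""
    rotated = mat_vec(marker_rot, p_scanner)
    return tuple(r + t for r, t in zip(rotated, marker_pos))


if __name__ == "__main__":
    rot = rotation_about_y(90.0)
    pos = (1.0, 0.0, 2.0)
    print(world_to_scanner((1.0, 0.0, 3.0), rot, pos))
```

The forward direction places reconstructed slices in the user's world space, and the inverse converts gesture positions into scanner coordinates for slice prescription.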

To begin a scan, the user first selects a body region. Optionally, a 3-axis localizer acquisition can be run and rendered at the origin. This establishes the patient context for subsequent scans. The user may then select a specific acquisition to run (2D, 3D, etc.). Sequence-specific controls are then displayed to allow the user to dynamically add slice groups with unique numbers of slices, orientations, and center positions from within the UI. Each slice group is represented by blank placeholder geometry within the coordinate system.
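One way to represent a slice group and derive its placeholder positions is sketched below. This is an illustration under stated assumptions rather than the data model used by the system: the `SliceGroup` class and its fields are hypothetical, the orientation is reduced to a slice normal, and a uniform center-to-center spacing in millimeters is assumed.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SliceGroup:
    """Hypothetical slice group: slices stacked along a normal about a center."""
    center: Vec3        # group center in scanner coordinates (mm)
    normal: Vec3        # slice orientation, given as a (not necessarily unit) normal
    num_slices: int
    spacing_mm: float   # assumed uniform center-to-center slice spacing

    def slice_centers(self) -> List[Vec3]:
        """Centers of the placeholder slices, stacked symmetrically about center."""
        length = math.sqrt(sum(c * c for c in self.normal))
        if length == 0.0:
            raise ValueError("slice normal must be non-zero")
        unit = tuple(c / length for c in self.normal)
        centers: List[Vec3] = []
        for i in range(self.num_slices):
            # Offset of slice i from the group center along the normal.
            offset = (i - (self.num_slices - 1) / 2.0) * self.spacing_mm
            centers.append(tuple(p + offset * u for p, u in zip(self.center, unit)))
        return centers


if __name__ == "__main__":
    axial = SliceGroup(center=(0.0, 0.0, 0.0), normal=(0.0, 0.0, 2.0),
                       num_slices=3, spacing_mm=5.0)
    for c in axial.slice_centers():
        print(c)
```

Each returned center would correspond to one blank placeholder quad in the shared coordinate system.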

Once data has been collected and reconstructed, the images and associated transform data are sent by the Access-I plugin to the visualization device, where each image replaces the associated slice placeholder in the visualization. The user can choose whether only the most recent acquisitions are shown or new acquisitions are added to the existing set of visualized data, and can adjust scan parameters for the subsequent acquisition.
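The placeholder replacement and the two display modes can be sketched as a small bookkeeping class. The `SliceScene` class, its method names, and the use of string slice identifiers are all hypothetical; the sketch only models which slices are visible and which are still blank, not the rendering or the Access-I data format.

```python
from typing import Dict, Iterable, List, Optional


class SliceScene:
    """Hypothetical bookkeeping for visualized slices and their placeholders."""

    def __init__(self, accumulate: bool) -> None:
        # accumulate=True keeps earlier acquisitions; False shows only the latest.
        self.accumulate = accumulate
        # Maps slice id to pixel data, or None while it is still a blank placeholder.
        self.slots: Dict[str, Optional[bytes]] = {}

    def begin_acquisition(self, slice_ids: Iterable[str]) -> None:
        """Create blank placeholders for the slices about to be acquired."""
        if not self.accumulate:
            self.slots.clear()
        for slice_id in slice_ids:
            self.slots[slice_id] = None

    def receive_image(self, slice_id: str, pixels: bytes) -> None:
        """Replace the placeholder for slice_id with reconstructed image data."""
        if slice_id not in self.slots:
            raise KeyError(f"no placeholder for slice {slice_id!r}")
        self.slots[slice_id] = pixels

    def pending(self) -> List[str]:
        """Slice ids that are still shown as blank placeholders."""
        return [sid for sid, px in self.slots.items() if px is None]


if __name__ == "__main__":
    scene = SliceScene(accumulate=True)
    scene.begin_acquisition(["loc-1", "loc-2"])
    scene.receive_image("loc-1", b"\x00" * 4)
    print(scene.pending())
```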

Results

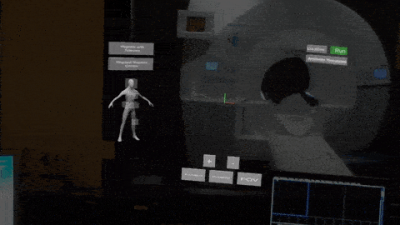

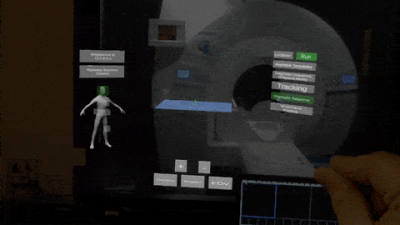

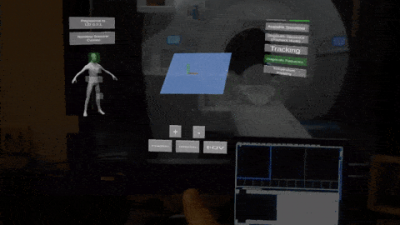

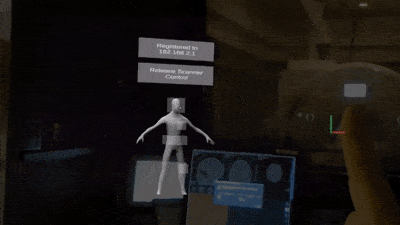

The system described above was implemented for the HoloLens in Unity 2019.1f1. Bidirectional communication between the scanner and a Microsoft HoloLens was established reliably over a wireless LAN. Latency between the completion of acquisition and display of the image in the visualization program averaged 0.27 s for a 256 × 256 matrix acquisition, though that figure includes the time needed for online reconstruction.

Figure 1 shows the software registering to control a simulated scan and loading the initial slice coordinates from the saved sequence. Figure 2 demonstrates the user interface for modifying a preset slice group position, then adding an additional slice group to the protocol. Figure 3 demonstrates the ability to add and modify multiple slice groups within a sequence template. Figure 4 demonstrates the interface in use at the scanner for an initial online test of the control system, and shows rendering of the imaging planes based on the metadata provided by the scanner.

Discussion

This system streamlines the acquisition and visualization process while retaining the three-dimensional nature of an MRI experiment. This allows clinicians and technologists to work in 3D throughout the acquisition and reading process. Native 3D control of scan targets and acquisition parameters is possible, and reconstructed data can be immediately co-registered and rendered without input from the user.

Bidirectional communication exposes most scanner functions for control from the AR interface, without the user needing to disengage from the data or lose sight of the patient. Although the interface is significantly pared down from the desktop interface, this simplification encourages interaction with the image data rather than the console controls. The streamlined acquisition process can be run with minimal understanding of the scanner’s interface, allowing physicians to take a more active role during interventional imaging. It should be noted that AR is not the ideal interface for in-depth scan modifications; such details should be pre-configured in a traditional interface before switching to AR during the acquisition.

Because the system operates in a world coordinate system, multiple clients could in the future connect to the scanner in local or telepresence mixed reality. This would allow for novel collaboration about a patient (and modification of scan parameters) while the patient is still in the scanner.

Acknowledgements

Siemens Healthcare, R01EB018108, NSF 1563805, R01DK098503, and R01HL094557.

References

[1] Automatic scan prescription for brain MRI. Magn Reson Med. 2001 Mar;45(3):486-94. https://www.ncbi.nlm.nih.gov/pubmed/11241708

[2] Ma, D., Gulani, V., Seiberlich, N., Liu, K., Sunshine, J., Duerk, J., and Griswold, M.A. Magnetic Resonance Fingerprinting. Nature. 2013 Mar 14;495(7440):187-192.

Figures