3953

Automatic Mouse Skull-Stripping in fMRI Research via Deep Learning Using 3D Attention U-net

1 Guangdong Provincial Key Laboratory of Medical Image Processing & Key Laboratory of Mental Health of the Ministry of Education, School of Biomedical Engineering, Southern Medical University, Guangzhou, China
2 School of Biomedical Engineering, Southern Medical University, Guangzhou, China
3 Department of Electrical and Electronic Engineering, Laboratory of Biomedical Imaging and Signal Processing, The University of Hong Kong, Hong Kong SAR, China

Synopsis

Skull-stripping is an important preprocessing step in functional magnetic resonance imaging (fMRI) research. In rodent fMRI research, skull-stripping is still performed manually, which is very time-consuming. To address this problem, a 3D attention U-net was trained to automatically extract the mouse brain from fMRI time series. The experimental results demonstrate that the proposed method extracts the mouse brain effectively and that the corresponding fMRI findings agree well with those obtained using manual skull-stripping.

Purpose

To develop a deep learning method for automatically extracting mouse brain from fMRI time series.

Introduction

Functional magnetic resonance imaging (fMRI) is widely used to study brain organization and information processing and to search for biomarkers of neurological disease[1-4]. In rodent fMRI research, skull-stripping is an important preprocessing step, but it is still performed manually and is therefore time-consuming[5,6]. Automatic brain-extraction methods such as BET[7], which are widely used in human fMRI research, cannot be applied to skull-stripping in animal fMRI studies.

With the development of deep learning, especially convolutional neural networks (CNNs), state-of-the-art performance has been achieved in medical image analysis tasks such as organ segmentation[8-10] and lesion detection[11,12]. U-net[13] is widely used in medical and biomedical image segmentation. Here, we propose an improved 3D attention U-net[14,15] for automatically extracting the mouse brain in fMRI research.

Method

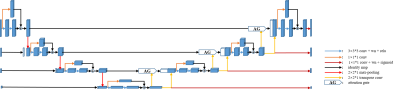

Network Architecture

Our proposed method employs a 3D attention U-net that learns an end-to-end mapping to segment the mouse brain from the original images at multiple sampling levels. Figure 1 shows the overall architecture of the proposed method, which is based on the popular U-net. In this work, we adopted a 3D architecture with an input image pyramid and deeply supervised output layers. Soft attention gates (AGs)[14] and residual layers were also used to improve representational power. The training loss combined focal loss[16] and active contour loss[17].
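The abstract names the two loss components but not their exact form or weighting. The NumPy sketch below shows one plausible way to combine them, assuming a binary brain mask and a voxel-wise foreground probability map. The focal-loss defaults (alpha = 0.25, gamma = 2) come from Lin et al., the extension of the active contour loss (length term plus region term with c1 = 1, c2 = 0, as in Chen et al.) from 2D to 3D is an assumption, and the hypothetical `acl_weight` and voxel-count normalization are illustrative choices, not the authors' settings.

```python
import numpy as np


def focal_loss(prob, target, alpha=0.25, gamma=2.0, eps=1e-7):
    """Binary focal loss, averaged over voxels.

    alpha and gamma are the common defaults from Lin et al., not values
    reported in this abstract.
    """
    p = np.clip(prob, eps, 1.0 - eps)
    # p_t: probability the network assigns to each voxel's true class
    p_t = np.where(target == 1, p, 1.0 - p)
    alpha_t = np.where(target == 1, alpha, 1.0 - alpha)
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))


def active_contour_loss(prob, target, lam=1.0, eps=1e-8):
    """Active contour loss (length + region terms), extended here to 3D.

    The 3D extension and lam = 1.0 are assumptions for illustration.
    """
    # Forward differences along the three axes, cropped to a common shape.
    dz = prob[1:, :-1, :-1] - prob[:-1, :-1, :-1]
    dy = prob[:-1, 1:, :-1] - prob[:-1, :-1, :-1]
    dx = prob[:-1, :-1, 1:] - prob[:-1, :-1, :-1]
    length = np.sum(np.sqrt(dx ** 2 + dy ** 2 + dz ** 2 + eps))
    # Region term with c1 = 1 (inside) and c2 = 0 (outside), as in Chen et al.
    region_in = np.abs(np.sum(prob * (target - 1.0) ** 2))
    region_out = np.abs(np.sum((1.0 - prob) * target ** 2))
    return float(length + lam * (region_in + region_out))


def combined_loss(prob, target, acl_weight=1.0):
    """Focal loss plus voxel-normalized active contour loss.

    Hypothetical weighting: dividing the ACL by the voxel count keeps it on
    a scale comparable to the mean focal loss; the abstract gives no weights.
    """
    acl = active_contour_loss(prob, target) / prob.size
    return focal_loss(prob, target) + acl_weight * acl
```

Because the region term compares the prediction against the ground truth, a prediction that matches the mask drives both the focal term and the region term towards zero, while the length term penalizes ragged brain boundaries.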

Training Details

We implemented the proposed model in TensorFlow 1.14.0. The dataset consisted of brain images from 55 normal-appearing mice with a 44/11 training/validation split; each mouse had T2-weighted RARE anatomical images (256×256×20) and functional BOLD images (64×64×20). Accordingly, we trained two separate networks: one for the T2-weighted images and one for the BOLD images. Convolution weights were randomly initialized with Xavier initialization[18], the networks were optimized with Adam[19], and weight normalization[20] was used. The Dice similarity coefficient (DSC), precision and sensitivity were calculated for quantitative evaluation.
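The three evaluation metrics follow directly from voxel counts of true positives (TP), false positives (FP) and false negatives (FN): DSC = 2TP / (2TP + FP + FN), precision = TP / (TP + FP), sensitivity = TP / (TP + FN). A minimal sketch, assuming the network output is binarized at a probability of 0.5 (the abstract does not state the threshold):

```python
import numpy as np


def _ratio(num, den):
    # Return NaN instead of dividing by zero (e.g. an empty mask).
    return float(num) / float(den) if den > 0 else float("nan")


def segmentation_metrics(prob, truth, threshold=0.5):
    """DSC, precision and sensitivity of a binarized prediction.

    prob: predicted brain probability map; truth: manual (ground-truth) mask.
    The 0.5 threshold is an assumption.
    """
    pred = np.asarray(prob) >= threshold
    truth = np.asarray(truth).astype(bool)
    tp = np.count_nonzero(pred & truth)   # brain voxels found
    fp = np.count_nonzero(pred & ~truth)  # non-brain voxels kept
    fn = np.count_nonzero(~pred & truth)  # brain voxels missed
    dsc = _ratio(2 * tp, 2 * tp + fp + fn)
    precision = _ratio(tp, tp + fp)
    sensitivity = _ratio(tp, tp + fn)
    return dsc, precision, sensitivity
```

For example, a prediction of [0.9, 0.8, 0.7, 0.1] against a manual mask of [1, 1, 0, 0] gives TP = 2, FP = 1 and FN = 0, so DSC = 0.8, precision = 2/3 and sensitivity = 1.0.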

Data Acquisition

Six male C57BL/55 mice weighing 25-30 g were used for fMRI studies on a 7T Bruker scanner with a cryogenic probe. All mice were lightly anesthetized with 0.04 mg/kg/h dexmedetomidine and 0.3% isoflurane, and their muscles were relaxed with 0.2 mg/kg pancuronium bromide. The animals were mechanically ventilated at 80 breaths/min. Rectal temperature was maintained at 37 ℃ using an MRI-compatible air heater (SA Instruments Inc., NY, USA). Heart rate (260-350 beats/min) and oxygen saturation (>95%) were continuously monitored.

Data Analysis

T2-weighted anatomical images (TR/TE = 2500/35 ms, FOV = 16×16 mm², matrix size = 256×256, 20 slices with a thickness of 0.5 mm) and functional BOLD images (450 repetitions, TR/TE = 750/15 ms, FOV = 16×16 mm², matrix size = 64×64, 20 slices with a thickness of 0.5 mm) were acquired for all animals. The fMRI data of the six mice were used to evaluate the segmentation performance. Other standard preprocessing steps were performed using SPM12 (www.fil.ion.ucl.ac.uk/spm) and in-house MATLAB code. Seed-based analysis was then performed using 4×4-voxel regions as seeds, and Pearson's correlation with the seed time course was computed for each voxel.
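With a 16 mm FOV sampled on a 64×64 BOLD matrix, the in-plane resolution is 16/64 = 0.25 mm, so a 4×4-voxel seed covers about 1×1 mm; 450 repetitions at TR = 750 ms give a 337.5 s run. The seed-based step can be sketched as below, assuming the BOLD data are stored as an (x, y, z, t) NumPy array and a boolean brain mask (for example from the skull-stripping network) is available. The function name, argument layout and seed placement are hypothetical; this is not the authors' MATLAB code.

```python
import numpy as np


def seed_correlation_map(bold, mask, seed_xy, slice_idx, size=4):
    """Voxel-wise Pearson correlation with a square in-plane seed.

    bold: 4D array (x, y, z, t); mask: 3D boolean brain mask (x, y, z).
    seed_xy: (x0, y0) corner of the size x size seed in slice slice_idx.
    Names and array layout are illustrative assumptions.
    """
    n_t = bold.shape[-1]
    x0, y0 = seed_xy
    # Average the seed voxels into a single reference time course.
    seed_ts = bold[x0:x0 + size, y0:y0 + size, slice_idx, :].reshape(-1, n_t).mean(axis=0)
    seed_z = (seed_ts - seed_ts.mean()) / seed_ts.std()
    # Z-score every in-mask voxel; zero-variance voxels (e.g. zeroed
    # non-brain voxels) would give NaN, so the mask should hold brain only.
    ts = bold[mask]  # shape (n_voxels, t)
    ts_z = (ts - ts.mean(axis=1, keepdims=True)) / ts.std(axis=1, keepdims=True)
    # The mean product of z-scores (population SD) equals Pearson's r.
    r = ts_z @ seed_z / n_t
    r_map = np.zeros(mask.shape)
    r_map[mask] = r
    return r_map
```

Z-scoring both signals turns the whole map into a single matrix-vector product, which avoids looping over voxels.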

Results

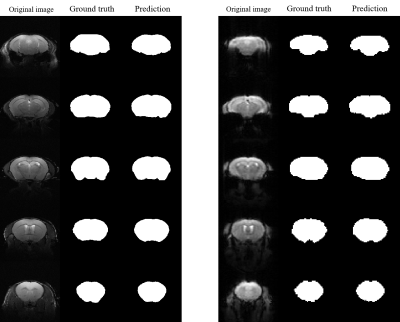

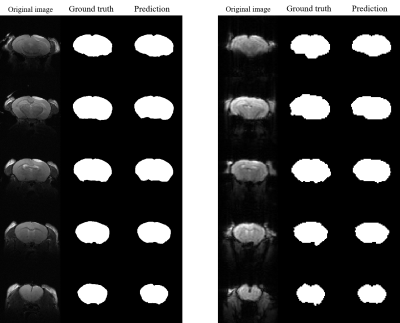

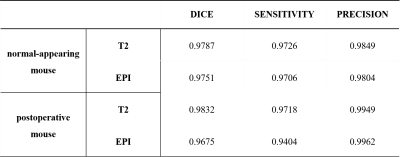

Figures 2 and 3 present the segmentation results on the T2-weighted (left) and corresponding functional BOLD images (right) of both a normal-appearing mouse and a mouse that had undergone craniotomy (postoperative mouse). The proposed method accurately extracts the mouse brain and achieves results close to the ground truth. Table 1 presents the quantitative evaluation of the proposed method on the validation dataset. The proposed method consistently achieves high DSC, sensitivity and precision values on both the T2 RARE images and the functional BOLD images of normal-appearing and postoperative mice.

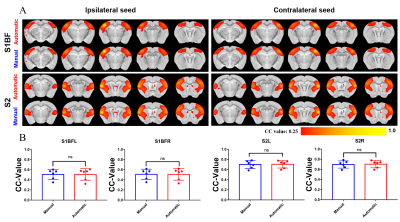

Figure 4 shows the fMRI results obtained with manual and automatic skull-stripping. There was no significant difference between the resulting interhemispheric functional connectivity measures (n = 6, paired t test).
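The comparison in Figure 4 uses a paired t test because each mouse is measured under both skull-stripping methods. A minimal NumPy sketch of the test statistic, assuming one interhemispheric connectivity value per mouse and method; with n = 6 mice there are 5 degrees of freedom, and the two-sided critical value at alpha = 0.05 is about 2.571. The function and constant names are hypothetical.

```python
import math

import numpy as np


def paired_t(values_a, values_b):
    """Paired t statistic and degrees of freedom for matched measurements.

    values_a / values_b: one connectivity value per mouse for each method.
    """
    d = np.asarray(values_a, dtype=float) - np.asarray(values_b, dtype=float)
    n = d.size
    # t = mean(d) / (sd(d) / sqrt(n)), using the sample SD (ddof=1)
    t = d.mean() / (d.std(ddof=1) / math.sqrt(n))
    return float(t), n - 1


# Two-sided critical value of Student's t for alpha = 0.05 and df = 5 (n = 6).
T_CRIT_DF5 = 2.571
```

A difference would be called significant only if |t| exceeded the critical value; the abstract reports that it did not.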

Discussion & Conclusion

This work presents a novel method that uses a 3D attention U-net as an end-to-end mapping that takes mouse brain images as input and outputs brain segmentation maps. The mean processing time for one mouse brain volume of 20 slices was 2.72 s for T2 RARE images and 1.00 s for functional BOLD images on a typical desktop computer. The performance on postoperative mice suggests that the model generalizes well. In conclusion, deep learning with a 3D attention U-net can automate skull-stripping for mouse fMRI research, which would substantially reduce the processing time and labor involved in analyzing mouse fMRI data.
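Once the network has produced a brain probability map, skull-stripping the fMRI time series amounts to applying one 3D mask to every volume. The sketch below assumes a single mask per run, predicted from a reference volume such as the temporal mean, and a 0.5 threshold; both are assumptions, and `predict_brain` in the commented workflow is a hypothetical stand-in for the trained model.

```python
import numpy as np


def skull_strip_series(bold, prob, threshold=0.5):
    """Apply one 3D brain mask to every volume of a 4D BOLD series.

    bold: (x, y, z, t) array; prob: (x, y, z) brain probability map, e.g.
    predicted from a reference volume such as the temporal mean (assumed).
    """
    mask = np.asarray(prob) >= threshold
    # Broadcast the mask over the time axis; non-brain voxels are set to 0.
    stripped = bold * mask[..., np.newaxis]
    return stripped, mask


# Hypothetical workflow: predict_brain stands in for the trained network.
# reference = bold.mean(axis=-1)
# prob = predict_brain(reference)
# stripped, mask = skull_strip_series(bold, prob)
```

Broadcasting the 3D mask with a trailing singleton axis avoids copying the mask for each of the 450 repetitions.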

References

1. Xuyun Wen, Han Zhang, Gang Li, Mingxia Liu, Weiyan Yin, Weili Lin, Jun Zhang, Dinggang Shen. First-year development of modules and hubs in infant brain functional networks. NeuroImage. 2019;185:222-235.

2. Nina E. Fultz, Giorgio Bonmassar, Kawin Setsompop, Robert A. Stickgold, Bruce R. Rosen, Jonathan R. Polimeni, Laura D. Lewis. Coupled electrophysiological, hemodynamic, and cerebrospinal fluid oscillations in human sleep. Science 2019; 366(6465):628-631.

3. Jin Liu, Mingrui Xia, Zhengjia Dai, Xiaoying Wang, Xuhong Liao, Yanchao Bi, Yong He. Intrinsic Brain Hub Connectivity Underlies Individual Differences in Spatial Working Memory. Cerebral Cortex 2017; 27(12):5496–5508.

4. Andrew T Drysdale, Logan Grosenick, Jonathan Downar, Katharine Dunlop, Farrokh Mansouri, Yue Meng, et al. Resting-state connectivity biomarkers define neurophysiological subtypes of depression. Nature Medicine 2017; 23:28–38.

5. Xifan Chen, Chuanjun Tong, Zhe Han, Kaiwei Zhang, Binshi Bo, Yanqiu Feng, Zhifeng Liang. Sensory evoked fMRI paradigms in awake mice. NeuroImage 2020; 204.

6. Russell W. Chan, Alex T. L. Leong, Leon C. Ho, Patrick P. Gao, Eddie C. Wong, Celia M. Dong, Xunda Wang, Jufang He, Ying-Shing Chan, Lee Wei Lim, and Ed X. Wu. Low-frequency hippocampal–cortical activity drives brain-wide resting-state functional MRI connectivity. PNAS 2017;114(33):6972-6981.

7. S.M. Smith. Fast robust automated brain extraction. Human Brain Mapping. 2002; 17(3):143-155.

8. Havaei M, Davy A, Warde-Farley D, et al. Brain tumor segmentation with Deep Neural Networks. Med Image Anal 2017;35:18-31.

9. Zongwei Zhou, Md Mahfuzur Rahman Siddiquee, Nima Tajbakhsh, Jianming Liang. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. arXiv:1807.10165 2018.

10. Avendi MR, Kheradvar A, Jafarkhani H. A combined deep-learning and deformable-model approach to fully automatic segmentation of the left ventricle in cardiac MRI. Med Image Anal 2016;30:108-119.

11. Moi Hoon Yap, Gerard Pons, Joan Martí, Sergi Ganau, Melcior Sentís, Reyer Zwiggelaar, Adrian K Davison, and Robert Martí. Automated breast ultrasound lesions detection using convolutional neural networks. IEEE Journal of Biomedical and Health Informatics. 2018; 22(4):1218–1226.

12. Noel CF Codella, David Gutman, M Emre Celebi, Brian Helba, Michael A Marchetti, Stephen W Dusza, Aadi Kalloo, Konstantinos Liopyris, Nabin Mishra, Harald Kittler, et al. Skin lesion analysis toward melanoma detection: A challenge at the 2017 International Symposium on Biomedical Imaging (ISBI), hosted by the International Skin Imaging Collaboration (ISIC). ISBI 2018; 168–172.

13. Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-Net: Convolutional Networks for Biomedical Image Segmentation. MICCAI 2015; 234-241.

14. Ozan Oktay, Jo Schlemper, Loic Le Folgoc, et al. Attention U-Net: Learning Where to Look for the Pancreas. arXiv:1804.03999v3 2018.

15. Nabila Abraham, Naimul Mefraz Khan. A Novel Focal Tversky Loss Function with Improved Attention U-net for Lesion Segmentation. arXiv:1810.07842 2018.

16. Tsung-Yi Lin, Priya Goyal, Ross Girshick, Kaiming He, Piotr Dollár. Focal Loss for Dense Object Detection. arXiv:1708.02002 2017.

17. Xu Chen, Bryan M. Williams, Srinivasa R. Vallabhaneni, Gabriela Czanner, Rachel Williams, and Yalin Zheng. Learning Active Contour Models for Medical Image Segmentation. CVPR 2019;11632-11640.

18. Xavier Glorot, Yoshua Bengio. Understanding the difficulty of training deep feedforward neural networks. Proceedings of Machine Learning Research (AISTATS) 2010; 9:249-256.

19. Diederik P. Kingma, Jimmy Ba. Adam: A Method for Stochastic Optimization. arXiv:1412.6980 2014.

20. Tim Salimans, Diederik P. Kingma. Weight Normalization: A Simple Reparameterization to Accelerate Training of Deep Neural Networks. arXiv:1602.07868 2016.

Figures

Figure 4. There is no significant difference between the two skull-stripping methods in interhemispheric resting-state functional connectivity. (A) rsfMRI connectivity maps of S1BF and S2, generated by correlation analysis of BOLD signals using a seed (4×4 voxels) defined in the ipsilateral and contralateral sides. (B) Quantification of the interhemispheric rsfMRI connectivity (n = 6, paired t test; error bars indicate ±SD).