3929

The role of hippocampus in central auditory processing – An optogenetic auditory fMRI study

1Laboratory of Biomedical Imaging and Signal Processing, The University of Hong Kong, Hong Kong, China, 2Department of Electrical and Electronic Engineering, The University of Hong Kong, Hong Kong, China, 3Neurology and Neurological Sciences, Stanford University, Stanford, CA, United States

Synopsis

Hearing is not just a sensory process: it plays pivotal roles in enabling communication, memory and learning. This suggests that auditory processing is not confined to the central auditory pathways and likely involves higher-order cognitive structures such as the hippocampus, whose contribution remains poorly understood. Here, we combined optogenetics and auditory fMRI in rats to investigate whether auditory processing can be influenced by hippocampal inputs. We reveal a specific role of the ventral hippocampus in influencing the processing of behaviorally relevant sounds across the entire central auditory pathway.

Purpose

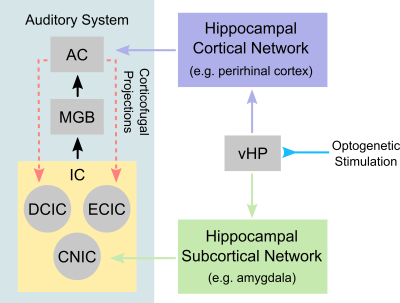

Traditionally, auditory functions are attributed to processes within the central auditory pathways, whereby sound information is routed from the ear to the auditory brainstem, thalamus and cortex. It is now recognized that hearing is more than a sensory process because of the pivotal roles it plays in enabling communication, memory and learning. This suggests that auditory processing is not confined to the central auditory pathways and likely involves numerous regions in higher-order cognitive systems, whose contributions remain poorly understood. One postulated region is the hippocampus, which is widely believed to mediate numerous cognitive functions associated with sensory processing1,2. Electrophysiology studies showed that hippocampal neurons responded only to sounds that were associated with a trained behavioral task3,4. Meanwhile, anatomical tracing studies showed that the hippocampus shares extensive reciprocal projections with both the auditory cortex and subcortical regions (i.e., brainstem and thalamus)5,6. However, these studies did not directly probe the role of the hippocampus in central auditory processing. Consequently, whether the hippocampus, a cognitive region, can influence auditory functions remains unresolved. Addressing this question requires presenting a behaviorally relevant sound (e.g., sounds used for communication) while simultaneously probing the hippocampus and monitoring whole-brain activity. In this study, we aim to investigate whether auditory processing can be influenced by hippocampal inputs through combined optogenetic and auditory fMRI in rats.
We present rodent vocalizations (i.e., innate communication calls with strong emotional context such as survival and pleasure7) as our behaviorally relevant sounds, while initiating hippocampal inputs through optogenetic stimulation of excitatory neurons in the ventral hippocampus (vHP), a region postulated to play a role in processing sensory inputs with an emotional context1,2. Additionally, we contrast these behaviorally relevant sounds with temporally reversed vocalizations (i.e., removing the context while preserving identical acoustic energy) and broadband noise.

Methods
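The rationale for the temporally reversed control can be checked numerically. The following is a minimal Python sketch, not the stimulus-generation code used in the study: `make_toy_call` produces a hypothetical decaying frequency sweep standing in for a vocalization, and all function names and parameter values are illustrative assumptions. It shows that reversing a waveform in time changes its temporal structure while leaving its total energy and magnitude spectrum unchanged.

```python
import cmath
import math


def make_toy_call(n=128, fs=1000.0, f0=20.0, f1=200.0):
    """Hypothetical stand-in for a vocalization: a decaying upward frequency sweep."""
    duration = n / fs
    sweep_rate = (f1 - f0) / duration
    samples = []
    for i in range(n):
        t = i / fs
        phase = 2.0 * math.pi * (f0 * t + 0.5 * sweep_rate * t * t)
        envelope = math.exp(-3.0 * t / duration)
        samples.append(envelope * math.sin(phase))
    return samples


def time_reverse(x):
    """Play the waveform backwards."""
    return x[::-1]


def energy(x):
    """Total energy: sum of squared samples."""
    return sum(s * s for s in x)


def dft_magnitudes(x):
    """Magnitude of the discrete Fourier transform (direct O(n^2) evaluation)."""
    n = len(x)
    mags = []
    for k in range(n):
        acc = 0j
        for t, s in enumerate(x):
            acc += s * cmath.exp(-2j * math.pi * k * t / n)
        mags.append(abs(acc))
    return mags


forward = make_toy_call()
reverse = time_reverse(forward)

energy_gap = abs(energy(forward) - energy(reverse))
spectrum_gap = max(
    abs(a - b) for a, b in zip(dft_magnitudes(forward), dft_magnitudes(reverse))
)

print(f"waveforms differ: {forward != reverse}")
print(f"energy difference: {energy_gap:.2e}")
print(f"largest magnitude-spectrum difference: {spectrum_gap:.2e}")
```

Because the two waveforms differ only in the ordering of their samples, any difference in the evoked response cannot be attributed to overall energy or spectral content.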

Animal preparation: 3 μl AAV5-CaMKIIα::ChR2(H134R)-mCherry was injected into the ventral dentate gyrus (vDG) of adult male SD rats (200–250 g). After 4 weeks, an opaque optical fiber cannula (d = 450 μm) was implanted at the injection site to deliver optogenetic stimulation (Figure 1A). All experiments were performed under 1.0–1.5% isoflurane.

Optogenetic and Auditory Stimulation: Auditory stimuli were delivered via a customized tube to the left ear. Three types of sounds were presented (aversive vocalization and relax vocalization: 22 kHz; broadband noise: 1–40 kHz; SPL = 83 dB) in a block-design paradigm (Figure 1D). Auditory fMRI trials with and without continuous 5 Hz optogenetic stimulation at vHP were interleaved.
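The stimulation scheme can be sketched in a few lines of Python. This is an illustrative outline only: the text gives the 5 Hz optogenetic rate and states that trials with and without optogenetic stimulation were interleaved, but the block count, block durations, trial count and trial ordering below are hypothetical placeholders (the actual timing is given in Figure 1D), as are all function names.

```python
OPTO_RATE_HZ = 5.0  # from the text: continuous 5 Hz optogenetic stimulation


def block_design(n_blocks, on_s, off_s, lead_in_s):
    """Return (onset, offset) times in seconds for each sound-on block."""
    blocks = []
    t = lead_in_s
    for _ in range(n_blocks):
        blocks.append((t, t + on_s))
        t += on_s + off_s
    return blocks


def pulse_onsets(rate_hz, duration_s):
    """Onset times (s) of a regular pulse train covering duration_s."""
    n_pulses = int(round(rate_hz * duration_s))
    return [i / rate_hz for i in range(n_pulses)]


def interleave_trials(n_trials):
    """Alternate auditory-only trials with auditory-plus-optogenetic trials."""
    return ["opto_on" if i % 2 else "opto_off" for i in range(n_trials)]


# Hypothetical timing: 4 sound blocks of 20 s, 40 s rest, 20 s lead-in.
sound_blocks = block_design(n_blocks=4, on_s=20.0, off_s=40.0, lead_in_s=20.0)
trial_duration_s = sound_blocks[-1][1] + 20.0  # hypothetical 20 s tail
trial_order = interleave_trials(6)  # hypothetical number of trials

for label in trial_order:
    # Optogenetic pulses run for the whole trial only on "opto_on" trials.
    pulses = pulse_onsets(OPTO_RATE_HZ, trial_duration_s) if label == "opto_on" else []
    print(label, "sound blocks:", sound_blocks, "light pulses:", len(pulses))
```

Keeping the sound blocks identical across the two trial types means that the only difference between conditions is the presence of the optogenetic pulse train.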

fMRI Acquisition and Analysis: fMRI data were acquired at 7T using GE-EPI (FOV = 32 × 32 mm², matrix = 64 × 64, α = 56°, TE/TR = 20/1000 ms, twelve 1 mm slices spaced 0.2 mm apart). After preprocessing and averaging, standard GLM analysis was applied to identify significant BOLD responses.
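As a rough illustration of what a GLM analysis involves for a single voxel, the Python sketch below builds a block regressor sampled at the 1 s TR, convolves it with a response kernel, and fits an intercept plus one task regressor by ordinary least squares. It is not the analysis pipeline used in the study: the block timing, the single-gamma kernel and its parameters, the synthetic voxel time course and all function names are assumptions made for illustration, and preprocessing and significance thresholding are not covered.

```python
import math
import random

TR_S = 1.0  # from the text: TR = 1000 ms


def boxcar(n_vols, blocks, tr_s=TR_S):
    """1.0 for volumes acquired during a sound block, else 0.0."""
    return [
        1.0 if any(on <= i * tr_s < off for on, off in blocks) else 0.0
        for i in range(n_vols)
    ]


def gamma_hrf(tr_s=TR_S, length_s=20.0, shape=4.0, scale=1.0):
    """Single-gamma response kernel; shape and scale are illustrative assumptions."""
    times = [i * tr_s for i in range(int(length_s / tr_s))]
    kernel = [(t ** (shape - 1.0)) * math.exp(-t / scale) for t in times]
    total = sum(kernel)
    return [v / total for v in kernel]


def convolve(x, h):
    """Causal convolution truncated to the length of x."""
    out = []
    for i in range(len(x)):
        out.append(sum(x[i - j] * h[j] for j in range(min(i + 1, len(h)))))
    return out


def fit_glm(y, regressor):
    """OLS fit of y = intercept + beta * regressor; returns beta, intercept, t."""
    n = len(y)
    mean_x = sum(regressor) / n
    mean_y = sum(y) / n
    sxx = sum((x - mean_x) ** 2 for x in regressor)
    sxy = sum((x - mean_x) * (v - mean_y) for x, v in zip(regressor, y))
    beta = sxy / sxx
    intercept = mean_y - beta * mean_x
    residuals = [v - (intercept + beta * x) for x, v in zip(regressor, y)]
    sigma2 = sum(r * r for r in residuals) / (n - 2)  # two fitted parameters
    t_stat = beta / math.sqrt(sigma2 / sxx)
    return beta, intercept, t_stat


# Synthetic voxel: baseline 100, true effect 2, Gaussian noise (all hypothetical).
rng = random.Random(0)
blocks = [(20.0, 40.0), (80.0, 100.0), (140.0, 160.0), (200.0, 220.0)]
regressor = convolve(boxcar(240, blocks), gamma_hrf())
voxel = [100.0 + 2.0 * r + rng.gauss(0.0, 0.5) for r in regressor]

beta, intercept, t_stat = fit_glm(voxel, regressor)
print(f"beta = {beta:.3f}, intercept = {intercept:.3f}, t = {t_stat:.1f}")
```

In a whole-brain analysis the same fit would be repeated for every voxel, and the resulting t-statistics thresholded to produce activation maps.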

Results

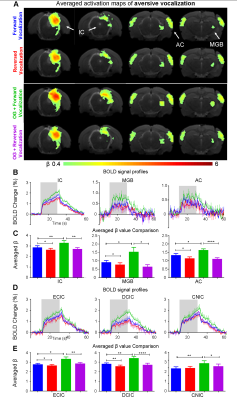

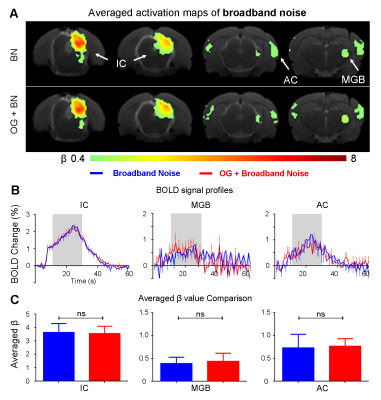

The vHP only modulates behaviorally relevant vocalization sounds

We first examined the downstream activations initiated by optogenetic stimulation of vHP. Robust positive BOLD responses were found in cortical, hippocampal formation and subcortical regions (Figure 1C). Next, we presented auditory stimuli with and without continuous optogenetic stimulation of vHP. Without optogenetic stimulation, both aversive (Figure 2) and relax (Figure 3) vocalizations, as well as broadband noise (Figure 4), evoked robust BOLD responses along the central auditory pathways, including the inferior colliculus (IC), medial geniculate body (MGB) and primary auditory cortex (AC)8,9. Moreover, selectivity for the vocalizations (forward vs temporally reversed) was present, corroborating our previous study8. Interestingly, when optogenetic stimulation was applied, only the responses to the aversive and relax vocalizations were enhanced, whereas the responses to temporally reversed vocalizations and broadband noise were not. This demonstrates that the vHP specifically influences the processing of behaviorally relevant sounds. The enhancement was found in IC, MGB and AC. Furthermore, IC subdivision analysis showed that the response enhancement to both aversive and relax vocalizations was most prominent in the external (ECIC) and dorsal (DCIC) cortices of IC, followed by the central nucleus of IC (CNIC).

Discussion & Conclusion

This study directly demonstrates for the first time the role of the hippocampus in central auditory processing. We showed that hippocampal inputs specifically influence the processing of behaviorally relevant sounds. Responses to both vocalizations were enhanced, but no such enhancement was observed when the temporally reversed vocalizations or broadband noise were presented at identical SPL. This indicates that the hippocampus may be involved in discriminating the nature of different auditory stimuli through three possible pathways: hippocampal-amygdala-IC/AC10,11; hippocampal-entorhinal-AC and auditory corticofugal projections12,13; and hippocampal-cortical1,4. Evoked BOLD responses to aversive and relax vocalizations were similarly enhanced throughout the central auditory pathway (i.e., IC, MGB and AC). The aversive vocalization used in the present study is related to fear and anxiety14, while the relax vocalization is related to sexual and social behavior15. The similarity of the response enhancement for aversive and relax vocalizations suggests that vHP modulation might be independent of the emotional context embedded in the sound. Taken together, our study demonstrates that central auditory processing is not confined to the traditional auditory pathways. Specifically, we present direct experimental evidence that ventral hippocampal inputs play a substantial role in auditory functions.

Acknowledgements

This study is supported in part by Hong Kong Research Grant Council (C7048-16G and HKU17115116 to E.X.W. and HKU17103819 to A.T.L.), Guangdong Key Technologies for Treatment of Brain Disorders (2018B030332001) and Guangdong Key Technologies for Alzheimer’s Disease Diagnosis and Treatment (2018B030336001) to E.X.W.

References

1. Fanselow, M. S., & Dong, H. W. (2010). Are the dorsal and ventral hippocampus functionally distinct structures? Neuron, 65(1), 7-19.

2. Strange, B. A., Witter, M. P., Lein, E. S., & Moser, E. I. (2014). Functional organization of the hippocampal longitudinal axis. Nature Reviews Neuroscience, 15(10), 655-669.

3. Xiao, C., Liu, Y., Xu, J., Gan, X., & Xiao, Z. (2018). Septal and Hippocampal Neurons Contribute to Auditory Relay and Fear Conditioning. Frontiers in Cellular Neuroscience, 12, 102.

4. Rothschild, G., Eban, E., & Frank, L. M. (2017). A cortical–hippocampal–cortical loop of information processing during memory consolidation. Nature Neuroscience, 20(2), 251.

5. Buzsáki, G. (1996). The hippocampo-neocortical dialogue. Cerebral Cortex, 6(2), 81-92.

6. Van Strien, N. M., Cappaert, N. L. M., & Witter, M. P. (2009). The anatomy of memory: an interactive overview of the parahippocampal–hippocampal network. Nature Reviews Neuroscience, 10(4), 272.

7. Blanchard, R. J., Blanchard, D. C., Agullana, R., & Weiss, S. M. (1991). Twenty-two kHz alarm cries to presentation of a predator, by laboratory rats living in visible burrow systems. Physiology & Behavior, 50(5), 967-972.

8. Gao, P. P., Zhang, J. W., Fan, S. J., Sanes, D. H., & Wu, E. X. (2015). Auditory midbrain processing is differentially modulated by auditory and visual cortices: An auditory fMRI study. Neuroimage, 123, 22-32.

9. Zhang, J. W., Lau, C., Cheng, J. S., Xing, K. K., Zhou, I. Y., Cheung, M. M., & Wu, E. X. (2013). Functional magnetic resonance imaging of sound pressure level encoding in the rat central auditory system. Neuroimage, 65, 119-126.

10. Marsh, R. A., Fuzessery, Z. M., Grose, C. D., & Wenstrup, J. J. (2002). Projection to the inferior colliculus from the basal nucleus of the amygdala. Journal of Neuroscience, 22(23), 10449-10460.

11. Janak, P. H., & Tye, K. M. (2015). From circuits to behaviour in the amygdala. Nature, 517(7534), 284-292.

12. Williamson, R. S., & Polley, D. B. (2019). Parallel pathways for sound processing and functional connectivity among layer 5 and 6 auditory corticofugal neurons. eLife, 8, e42974.

13. King, A. J., & Bajo, V. M. (2013). Cortical modulation of auditory processing in the midbrain. Frontiers in Neural Circuits, 6, 114.

14. Portfors, C. V. (2007). Types and functions of ultrasonic vocalizations in laboratory rats and mice. Journal of the American Association for Laboratory Animal Science, 46(1), 28-34.

15. Bialy, M., Podobinska, M., Barski, J., Bogacki‑Rychlik, W., & Sajdel‑Sulkowska, E. M. (2019). Distinct classes of low frequency ultrasonic vocalizations (lUSVs) in rats during sexual interactions relate to different emotional states. Acta Neurobiol Exp, 79, 1-12.
