3764

Learning how to Clean Fingerprints -- Deep Learning based Separated Artefact Reduction and Regression for MR Fingerprinting

¹Pattern Recognition Lab, Friedrich-Alexander-Universität Erlangen-Nürnberg, Erlangen, Germany; ²Magnetic Resonance, Siemens Healthcare, Erlangen, Germany

Synopsis

Various deep learning approaches have recently been introduced to enable faster MRF reconstruction than dictionary matching. However, artefacts caused by the strong undersampling during acquisition often impair the reconstruction results. In this work, we introduce a deep learning artefact reduction method that provides clean fingerprints for a subsequent regression network. With our artefact reduction step, the relative error is reduced by over 50% compared to a previously proposed deep learning regression model without prior artefact reduction.

Introduction

Magnetic Resonance Fingerprinting (MRF) is a quantitative imaging technique in which multiple highly undersampled images are acquired with varying acquisition parameters (e.g. TR or FA), resulting in a time series for every image pixel [1]. Quantitative maps of multiple parameters, e.g. the T1 and T2 relaxation times, can be reconstructed from these so-called fingerprints. The conventional dictionary-based method [1] uses a simulated set of possible fingerprints, compares every measured time series with each of them using a simple similarity measure such as the dot product, and assigns the parameters of the best-matching entry. This method is highly expensive in terms of computation time and memory consumption. Furthermore, its accuracy is limited by the resolution of the dictionary. Recently, various machine learning methods including deep learning (DL) [2] have been introduced to overcome these limitations [3-8]. It has been shown that, for fully sampled signals, dictionary-based reconstruction can easily be replaced by a simple DL network [3], whereas signals afflicted by undersampling artefacts make the task considerably more complex [4-8]. Artefact reduction based on iterative reconstruction prior to DL reconstruction has been applied, e.g., in [9]. To provide a fully automated approach, we propose a U-net architecture for artefact reduction that supplies clean signals to a subsequent neural-network-based regression, thereby improving map quality.

Methods

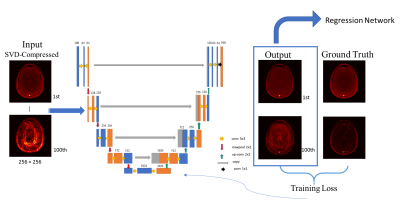

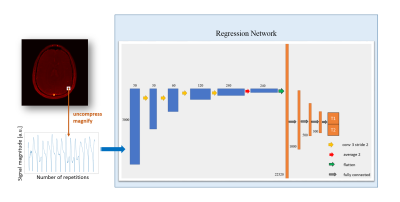

The prototype algorithm consists of two steps. In the first step, a multi-channel U-net architecture [10] was used to denoise and dealias the signals (Fig. 1). In the second step, T1 and T2 were estimated by regression using the convolutional neural network model proposed by Hoppe et al. [3] (Fig. 2).

Experiments

Data acquisition: 2D MRF data were acquired as axial brain slices in 10 volunteers (5 male, 5 female, age: 39.2 ± 14.3 years) on two 3T MR scanners (MAGNETOM Prisma and MAGNETOM Skyra, Siemens Healthcare, Erlangen, Germany) using a prototype FISP sequence with spiral readouts [11] and a prescan-based B1+ correction [12], with the following sequence parameters: FOV: 300 × 300 mm², spatial resolution: 1.17 × 1.17 × 5.0 mm³, variable TR (12-15 ms), FA (5-74°), number of repetitions: 3,000, undersampling factor: 48. No repeated acquisitions of the same slice were included in the data.

Training: 61 slices from 8 volunteers were used as the training dataset and 12 slices from one volunteer as the validation dataset. The trained models were tested on the remaining volunteer, so training, validation and test data do not overlap. Quantitative T1, T2 and B1+ maps obtained with the conventional dictionary-matching method and a finely resolved dictionary (Table 1) were used as ground truth. For training the artefact reduction network, the matched dictionary entry of each pixel was used as the ground-truth (clean) fingerprint. Both the measured signals and the dictionary fingerprints were compressed by SVD to their 50 main temporal components. For each component, the real and imaginary parts were fed to the network as 2 independent channels, so the network had 100 input and 100 output channels. An overview is shown in Fig. 1. The regression network was trained on 23,200 pixels randomly chosen from the 61 training slices.
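The SVD compression and 100-channel layout described in the Training paragraph can be sketched in Python with NumPy. This is a minimal sketch under two assumptions of ours: the temporal basis is computed from the dictionary (the text does not say which data the SVD was computed on), and the 50 real parts are stacked before the 50 imaginary parts (the channel order is not specified). The helper names `svd_basis`, `compress` and `to_channels` are hypothetical.

```python
import numpy as np

N_COMPONENTS = 50  # temporal SVD components kept, as stated in the text


def svd_basis(dictionary: np.ndarray, k: int = N_COMPONENTS) -> np.ndarray:
    """Return a (n_timepoints, k) basis with orthonormal columns.

    Assumption: the basis is formed by the k leading right-singular vectors
    of the complex dictionary of shape (n_entries, n_timepoints).
    """
    # Economy-size SVD: dictionary = u @ diag(s) @ vh
    _, _, vh = np.linalg.svd(dictionary, full_matrices=False)
    return vh[:k].conj().T


def compress(signals: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Project (..., n_timepoints) time series onto the basis -> (..., k)."""
    return signals @ basis


def to_channels(coeffs: np.ndarray) -> np.ndarray:
    """Turn complex (H, W, k) coefficients into a real (2k, H, W) network input.

    Assumed channel order: k real parts first, then k imaginary parts.
    """
    stacked = np.concatenate([coeffs.real, coeffs.imag], axis=-1)  # (H, W, 2k)
    return stacked.transpose(2, 0, 1)
```

For one slice of shape (H, W, 3000), `to_channels(compress(slice_signals, basis))` gives the (100, H, W) U-net input; applying the same functions to the matched dictionary entries gives the training target. Note that holding the full 691,497 × 3,000 complex dictionary in double precision alone takes about 33 GB, so a subset of entries or a randomized SVD would likely be needed in practice.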

Evaluation: The regression network was evaluated on signals both with and without our DL-based artefact reduction. For a direct comparison with the existing method [3], each cleaned signal was decompressed back to a 3,000-point time series, and only the magnitude of the complex-valued signal was fed to the model. The results of the two pipelines were then compared.
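The decompression and magnitude step can be sketched as the inverse of the SVD compression. This minimal NumPy sketch assumes a basis with orthonormal columns of shape (n_timepoints, k), e.g. the leading right-singular vectors used for compression, and a real-before-imaginary channel order; both are our assumptions, and `from_channels` and `decompress_magnitude` are hypothetical names.

```python
import numpy as np


def from_channels(channels: np.ndarray) -> np.ndarray:
    """Invert the real/imaginary stacking: real (2k, H, W) -> complex (H, W, k).

    Assumed channel order: k real parts first, then k imaginary parts.
    """
    k = channels.shape[0] // 2
    coeffs = channels[:k] + 1j * channels[k:]
    return coeffs.transpose(1, 2, 0)


def decompress_magnitude(channels: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Map (2k, H, W) U-net output to (H, W, n_timepoints) magnitude series.

    `basis` is a (n_timepoints, k) matrix with orthonormal columns.
    """
    coeffs = from_channels(channels)    # (H, W, k), complex
    series = coeffs @ basis.conj().T    # back-projection to the full time axis
    return np.abs(series)               # only the magnitude is passed on
```

Each pixel of the result is one 3,000-point magnitude fingerprint in the format expected by the regression network; the phase discarded here is the information the Discussion suggests keeping in future work.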

Results

Fig. 3 shows the reconstruction results for the test scan with and without the artefact reduction network, compared to the ground truth. The average relative errors of the T1/T2 predictions were 0.200 ± 0.580 / 0.580 ± 2.683 with the artefact reduction step and 0.420 ± 0.448 / 1.192 ± 2.628 for the non-processed signals.

Discussion

The results show that the relative errors of the T1/T2 relaxation times were substantially reduced (by 52% for T1 and 51% for T2) when predicted from the cleaned signals instead of the non-processed signals. The U-net-based model effectively removed a wide range of artefacts. In addition, it learned to handle the variety of signal patterns arising from different volunteers and scan settings, which enabled the subsequent regression network to generalize better on a diverse dataset. The artefact reduction step prevented the rapid overfitting and poor test performance that occurred when training directly on the non-processed signals. In general, T2 is more difficult to predict than T1, which is consistent with previous studies [4,5,8]. It should be noted that both the decompression of the cleaned signals and the restriction to magnitude data were employed only for the sake of a fair comparison. Since previous work [13] has shown that preserving both the real and imaginary parts of the complex signal enhances reconstruction performance, we believe that performance and efficiency can be further improved by omitting these two steps.

Conclusion

In this work, we showed that a DL-based artefact reduction step prior to DL-based regression substantially improves the accuracy of a plain CNN model on MRF signals from highly undersampled acquisitions. The models were evaluated on in-vivo human brain scans of different volunteers and generalized well to the unseen test volunteer. Future work will include training both networks on a larger dataset, applying a different regression network directly to the compressed cleaned data, and training the two-step model end-to-end.

References

[1] Ma, Dan, et al. "Magnetic resonance fingerprinting." Nature 495.7440 (2013): 187.

[2] Maier, Andreas, et al. "A gentle introduction to deep learning in medical image processing." Zeitschrift für Medizinische Physik 29.2 (2019): 86-101.

[3] Hoppe, Elisabeth, et al. "Deep Learning for Magnetic Resonance Fingerprinting: A New Approach for Predicting Quantitative Parameter Values from Time Series." GMDS. 2017.

[4] Hoppe, Elisabeth, et al. "Deep learning for magnetic resonance fingerprinting: Accelerating the reconstruction of quantitative relaxation maps." Proceedings of the 26th Annual Meeting of ISMRM, Paris, France. 2018.

[5] Hoppe, Elisabeth, et al. "RinQ Fingerprinting: Recurrence-Informed Quantile Networks for Magnetic Resonance Fingerprinting." International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, Cham, 2019.

[6] Cohen, Ouri, et al. "MR fingerprinting deep reconstruction network (DRONE)." Magnetic Resonance in Medicine 80.3 (2018): 885-894.

[7] Balsiger, Fabian, et al. "Magnetic resonance fingerprinting reconstruction via spatiotemporal convolutional neural networks." International Workshop on Machine Learning for Medical Image Reconstruction. Springer, Cham, 2018.

[8] Fang, Zhenghan, et al. "Deep Learning for Fast and Spatially-Constrained Tissue Quantification from Highly-Accelerated Data in Magnetic Resonance Fingerprinting." IEEE Transactions on Medical Imaging (2019).

[9] Golbabaee, Mohammad, et al. "Deep MR Fingerprinting with total-variation and low-rank subspace priors." Proceedings of the 27th Annual Meeting of ISMRM, Montreal, Canada. 2019. Abstract 807.

[10] Ronneberger, Olaf, et al. "U-net: Convolutional networks for biomedical image segmentation." International Conference on Medical Image Computing and Computer-Assisted Intervention. 2015.

[11] Jiang, Yun, et al. "MR fingerprinting using fast imaging with steady state precession (FISP) with spiral readout." Magnetic Resonance in Medicine 74.6 (2015): 1621-1631.

[12] Chung, Sohae, et al. "Rapid B1+ mapping using a preconditioning RF pulse with TurboFLASH readout." Magnetic Resonance in Medicine 64.2 (2010): 439-446.

[13] Virtue, Patrick, et al. "Better than real: Complex-valued neural nets for MRI fingerprinting." 2017 IEEE International Conference on Image Processing (ICIP). IEEE, 2017.

Figures

Figure 1: U-net architecture for the artefact reduction

As input for our U-net-based artefact reduction, we use the 50 main complex-valued SVD components of the fingerprints of each slice, resulting in 100 input channels (real and imaginary parts). The network outputs cleaned fingerprints of the same size as the input. For training, the mean squared error between the network outputs and the compressed signals of the matched dictionary entries is used as the loss.
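The training loss in this caption can be written directly on the stacked channel representation. In this minimal NumPy sketch (function names are hypothetical; no tissue mask is applied because none is mentioned), a second helper makes one property of the layout explicit: because real and imaginary parts are separate channels, the channel-wise MSE equals half the mean squared magnitude of the complex coefficient error.

```python
import numpy as np


def artefact_reduction_mse(output: np.ndarray, target: np.ndarray) -> float:
    """MSE between U-net output and compressed matched dictionary entries.

    Both arrays have shape (2k, H, W): k real channels followed by k
    imaginary channels of the SVD-compressed fingerprints (assumed order).
    """
    if output.shape != target.shape:
        raise ValueError(f"shape mismatch: {output.shape} vs {target.shape}")
    return float(np.mean((output - target) ** 2))


def complex_error_power(output: np.ndarray, target: np.ndarray) -> float:
    """Mean squared magnitude of the error in the complex coefficients."""
    k = output.shape[0] // 2
    diff = (output[:k] - target[:k]) + 1j * (output[k:] - target[k:])
    return float(np.mean(np.abs(diff) ** 2))
```

The factor of two only rescales the loss, so minimizing the channel-wise MSE is equivalent to minimizing the complex-valued error.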

Figure 2: Regression architecture for T1/T2 relaxation times

For the regression, the fingerprint of every pixel is used as input. The regression network consists of 4 convolutional and 4 fully connected layers and outputs T1 and T2. Two kinds of fingerprints are used: 1) non-processed fingerprints and 2) cleaned fingerprints from the U-net (Fig. 1), which are decompressed and reduced to their magnitude.
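To make the data flow of this figure concrete, the following NumPy sketch runs one 3,000-point magnitude fingerprint through 4 one-dimensional convolutional layers and 4 fully connected layers down to two outputs (T1, T2). All layer widths, kernel sizes, strides, the ReLU activations and the random weights are hypothetical placeholders chosen for illustration; they are not taken from [3], and the sketch covers only the forward pass, not training.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Hypothetical (out_channels, kernel_size, stride) of the 4 conv layers
CONV_SPECS = [(16, 15, 5), (32, 9, 3), (64, 5, 2), (64, 3, 2)]
# Hypothetical widths of the 4 fully connected layers; the last gives (T1, T2)
FC_SIZES = [256, 128, 64, 2]


def conv1d(x, w, b, stride):
    """'Valid' 1-D cross-correlation. x: (C_in, T), w: (C_out, C_in, K)."""
    windows = sliding_window_view(x, w.shape[2], axis=1)[:, ::stride]  # (C_in, T_out, K)
    return np.einsum("oik,itk->ot", w, windows) + b[:, None]


def init_params(n_timepoints, rng):
    """Random weights for the hypothetical layer configuration."""
    conv, c_in, length = [], 1, n_timepoints
    for c_out, k, s in CONV_SPECS:
        conv.append((0.1 * rng.standard_normal((c_out, c_in, k)), np.zeros(c_out), s))
        c_in, length = c_out, (length - k) // s + 1
    fc, n_in = [], c_in * length
    for n_out in FC_SIZES:
        fc.append((0.01 * rng.standard_normal((n_out, n_in)), np.zeros(n_out)))
        n_in = n_out
    return conv, fc


def forward(fingerprint, conv, fc):
    """Map one (n_timepoints,) magnitude fingerprint to a (2,) [T1, T2] estimate."""
    h = fingerprint[None, :]                     # single input channel
    for w, b, s in conv:
        h = np.maximum(conv1d(h, w, b, s), 0.0)  # convolution + ReLU
    h = h.reshape(-1)                            # flatten for the dense layers
    for i, (w, b) in enumerate(fc):
        h = w @ h + b
        if i < len(fc) - 1:                      # no activation on the output layer
            h = np.maximum(h, 0.0)
    return h
```

With these placeholder sizes, the conv stack shrinks the 3,000 time points to 48 positions × 64 channels before the dense layers; only the layer count (4 + 4) is taken from the caption.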

Table 1: Parameters of the dictionary used for ground truth

To create ground truth data for training, we used a finely resolved dictionary with the ranges and step sizes for T1, T2 and B1+ shown in the table, resulting in 691,497 parameter combinations overall.
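The ground truth itself comes from conventional dictionary matching, which the Introduction describes as comparing each measured time series with every simulated fingerprint via a dot product. Below is a minimal NumPy sketch, assuming a precomputed complex dictionary with one row per parameter combination; simulating the FISP fingerprints is outside its scope, and any ranges passed to the hypothetical `parameter_grid` helper are placeholders, not the Table 1 values.

```python
import numpy as np


def parameter_grid(t1_values, t2_values, b1_values):
    """All (T1, T2, B1+) combinations as an (n_entries, 3) array."""
    t1, t2, b1 = np.meshgrid(t1_values, t2_values, b1_values, indexing="ij")
    return np.stack([t1.ravel(), t2.ravel(), b1.ravel()], axis=1)


def dictionary_match(signals, dictionary, params):
    """Assign each pixel the parameters of its best-matching dictionary entry.

    signals:    (n_pixels, n_timepoints) complex measured time series
    dictionary: (n_entries, n_timepoints) complex simulated fingerprints
    params:     (n_entries, 3) parameter combination of each entry
    """
    # Normalise entries so the score does not depend on an entry's energy
    atoms = dictionary / np.linalg.norm(dictionary, axis=1, keepdims=True)
    # |<x, d>| for every pixel/entry pair; scaling a pixel's signal scales
    # all of its scores equally, so the signals need no normalisation
    scores = np.abs(signals @ atoms.conj().T)  # (n_pixels, n_entries)
    best = np.argmax(scores, axis=1)
    return params[best], best
```

The score matrix has one column per entry, so with 691,497 entries the pixels would have to be processed in chunks. This exhaustive search is the computational cost that the DL approaches aim to avoid, and its output is quantized to the grid spacing, which is the resolution limit mentioned in the Introduction.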

Figure 3: Quantitative maps from test scan

Row 1: ground truth maps. Row 2: maps reconstructed without prior artefact reduction, with relative error maps. Row 3: maps reconstructed from cleaned fingerprints, with relative error maps. A clear improvement in reconstruction quality can be observed with the prior DL-based artefact reduction.
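The relative error maps in Fig. 3 and the averages in the Results can be computed along the following lines. This NumPy sketch assumes the per-pixel definition |pred - gt| / gt, evaluated only where the ground truth is positive; the exact definition and mask are not stated in the abstract, and the helper names are hypothetical. The last two lines check the 52% (T1) and 51% (T2) reductions quoted in the Discussion against the reported mean errors.

```python
import numpy as np


def relative_error_map(pred, gt, mask=None):
    """Per-pixel relative error |pred - gt| / gt; NaN outside the evaluated region.

    Assumption: only pixels with gt > 0 (optionally intersected with a
    user-supplied boolean mask) are evaluated.
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    valid = (gt > 0) if mask is None else (np.asarray(mask, dtype=bool) & (gt > 0))
    err = np.full(gt.shape, np.nan)
    err[valid] = np.abs(pred[valid] - gt[valid]) / gt[valid]
    return err


def mean_std(err):
    """Mean and standard deviation over the evaluated (non-NaN) pixels."""
    return float(np.nanmean(err)), float(np.nanstd(err))


def relative_reduction(err_without, err_with):
    """Fractional error reduction achieved by the artefact reduction step."""
    return (err_without - err_with) / err_without


# Reported mean relative errors (without -> with artefact reduction)
print(f"T1: {relative_reduction(0.420, 0.200):.0%}")  # prints T1: 52%
print(f"T2: {relative_reduction(1.192, 0.580):.0%}")  # prints T2: 51%
```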