3619

Deep Simultaneous Optimization of Sampling and Reconstruction for Multi-contrast MRI

1 School of Information Technology and Electrical Engineering, the University of Queensland, Brisbane, Australia, 2 School of Information and Communication Technology, Griffith University, Brisbane, Australia

Synopsis

MRI images of the same subject in different contrasts contain shared information, such as the anatomical structure. Utilizing the redundant information amongst the contrasts to sub-sample and faithfully reconstruct multi-contrast images could greatly accelerate the imaging speed, improve image quality and shorten scanning protocols. We propose an algorithm that generates the optimized sampling pattern and reconstruction scheme of one contrast (e.g. T2-weighted image) when images with different contrast (e.g. T1-weighted image) have been acquired. The proposed algorithm achieves increased PSNR and SSIM with the resulting optimal sampling pattern compared to other acquisition patterns and single contrast methods.

Introduction

For diagnostic purposes, MRI protocols routinely involve acquiring images with different contrasts, such as T1-weighted images (T1WI) and T2-weighted images (T2WI). The prolonged sequential acquisition of multiple contrasts can lead to motion artifacts and patient discomfort. Since different contrast images of the same subject share similar anatomical structure, the complementary information from one contrast (e.g. T1WI) could be used as a reference to sub-sample and reconstruct images of the other contrast (e.g. T2WI), thereby accelerating the overall process. Previous studies[1] have shown the benefits of incorporating contrast information in sub-sampled reconstructions. However, most of these studies focus only on the reconstruction process, and few have investigated how the complementary information of the other contrasts could best be used in the acquisition process. Other studies[2-3] have shown that optimizing the sampling pattern leads to superior image quality. In this work, we use the reconstruction of sub-sampled T2WI to show that the acquisition pattern can be optimized with information provided by T1WI in order to achieve better reconstruction results.

Methods

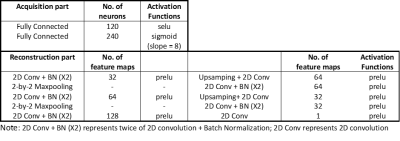

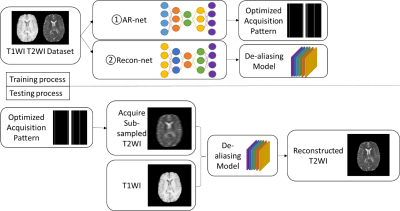

To learn the optimized sub-sampling pattern and reconstruction for multi-contrast images, we propose an integrated acquisition and reconstruction network, namely AR-net. The AR-net architecture contains two parts: the acquisition part, which is used to optimize the sampling pattern, and the reconstruction part, which is based on U-net[4] and reconstructs the fully-sampled images from the aliased images. The detailed configuration of the network is listed in Table 1.

The overall pipeline of the training and testing process is shown in Figure 1. In the training process, pairs of T1WI and T2WI are first fed into the AR-net to train for the optimized sampling pattern. The objective is to select the most important sampling locations in k-space, i.e. those that lead to the minimal mean-absolute-error (MAE) between the reconstructed images and the ground truth images during the learning process. The acquisition part outputs a probability for each k-space location, where a higher probability indicates a location that should be sampled. Inspired by [2-3], we use a sigmoid with a large slope as the activation function and an l1-norm sparsity constraint to approximate the discrete sampling process. The slope and the coefficient of the l1-norm in the loss function are used to tune the sampling rate. After thresholding, the optimized mask contains ones at sampled locations and zeros elsewhere. We show experiments with 1D sub-sampling pattern optimization; the extension to higher-dimensional patterns is straightforward.
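The relaxation described above can be illustrated with a minimal NumPy sketch. It shows only the forward computation of a 1D mask (one probability per k-space line), the l1 sparsity term, and the final thresholding; it is not the AR-net implementation. The names `soft_line_mask`, `sparsity_penalty`, `binarize`, and the values of `slope`, `weight` and `threshold` are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def soft_line_mask(logits, slope=10.0):
    """Relaxed 1D mask: one sampling probability per k-space line.
    A large slope pushes the probabilities towards 0 or 1 (assumed value)."""
    return sigmoid(slope * logits)

def sparsity_penalty(prob, weight=0.01):
    """l1 term added to the loss; a larger weight favours fewer sampled lines."""
    return weight * np.sum(np.abs(prob))

def binarize(prob, threshold=0.5):
    """Threshold the probabilities into a mask of ones (sampled) and zeros."""
    return (prob > threshold).astype(np.float32)

# Hypothetical learnable parameters, one per line of a 240-line k-space.
rng = np.random.default_rng(0)
logits = rng.normal(size=240)

prob = soft_line_mask(logits)       # values in [0, 1]
penalty = sparsity_penalty(prob)    # scalar regularization term
mask = binarize(prob)               # binary 1D sampling mask
```

In a trainable version, `logits` would be updated by gradient descent on the reconstruction MAE plus `penalty`, so raising `weight` would be expected to lower the resulting sampling rate.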

The second step in the training process is to generate the de-aliasing model that maps the zero-padded sub-sampled images to the reconstructed images using a multi-contrast reconstruction network, namely Recon-net. For simplicity, the configuration of the Recon-net is the same as the reconstruction part of AR-net in Table 1. We simulate the sub-sampled T2WI by applying the mask generated by AR-net to the ground truth images. To further exploit the complementary information from the other contrast in the second step, the T1WI is concatenated with the aliased T2WI in the input layer of the Recon-net. We use MAE as the loss function and the Adam optimizer with a learning rate of 0.0005 to train the models. In the testing process, we use the trained models to reconstruct the T2WI of the test dataset that are sub-sampled by the learned optimized masks.
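As a rough illustration of how the sub-sampled input could be simulated, the sketch below applies a 1D line mask in k-space, computes the zero-filled image, and stacks it with the T1WI as a two-channel input. The function names, the choice of the first array axis as the masked direction, and the random stand-in images are assumptions; the original text does not specify these details.

```python
import numpy as np

def undersample(image, line_mask):
    """Apply a 1D line mask in centred k-space and return the zero-filled image."""
    kspace = np.fft.fftshift(np.fft.fft2(image))
    # Assumption: the mask selects whole lines along the first axis.
    kspace = kspace * line_mask[:, None]
    return np.abs(np.fft.ifft2(np.fft.ifftshift(kspace)))

def recon_input(t1, t2_aliased):
    """Stack T1WI and aliased T2WI as channels for a de-aliasing network."""
    return np.stack([t1, t2_aliased], axis=0)

# Random stand-ins for a co-registered 240x240 T1WI/T2WI pair in [0, 1].
rng = np.random.default_rng(0)
t1 = rng.random((240, 240))
t2 = rng.random((240, 240))

# Placeholder mask with 22 central lines; a learned mask would be used instead.
line_mask = np.zeros(240)
line_mask[109:131] = 1.0

t2_aliased = undersample(t2, line_mask)
x = recon_input(t1, t2_aliased)   # shape (2, 240, 240)
```

A single-contrast baseline would feed only `t2_aliased` to the network, which is one way to isolate the contribution of the T1WI channel.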

Results and Discussion

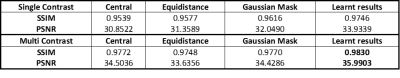

We used the brain tumor segmentation challenge 2019 (BraTS2019) dataset[5-8] to train and test the proposed algorithm. The data had been pre-processed by the organizer, and we further normalized the intensity to [0, 1]. We extracted 2D slices of size 240×240 from 208 subjects, of which 2,287 slices were randomly selected as the training dataset, 1,320 slices as the validation dataset and 2,165 slices as the testing dataset. The training and validation datasets were used in the training process, and the testing dataset was used only in the testing process to report the average results.

To demonstrate the superiority of the learnt sampling pattern over conventional sampling patterns, we trained de-aliasing models for different sampling patterns, including a low-resolution mask (centered sampling pattern), an equal-distance mask with 2/3 in the center and a Gaussian mask, all with the same sub-sampling rate and the same hyper-parameter settings. We sampled 22 of 240 lines, which corresponds to a sub-sampling factor of roughly 10 (240/22 ≈ 10.9). The masks and the corresponding zero-padded images are illustrated in Figure 2. To show the effectiveness of the T1WI, we also trained single-contrast de-aliasing models for T2WI and compared the results with the multi-contrast reconstruction models for different sampling patterns.
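For orientation, two of the conventional baseline masks can be sketched as follows for 22 sampled lines out of 240. This is an assumed construction, not the authors' code: the Gaussian width `sigma_frac` and the random seed are illustrative choices, and the equal-distance variant is left out because its exact layout is not given in the text.

```python
import numpy as np

N_LINES, N_SAMPLED = 240, 22

def low_res_mask(n=N_LINES, k=N_SAMPLED):
    """Centered block of k contiguous lines (low-resolution sampling)."""
    mask = np.zeros(n)
    start = (n - k) // 2
    mask[start:start + k] = 1.0
    return mask

def gaussian_mask(n=N_LINES, k=N_SAMPLED, sigma_frac=0.15, seed=0):
    """k distinct lines drawn with a Gaussian density centred on k-space.
    sigma_frac (width as a fraction of n) is an assumed value."""
    rng = np.random.default_rng(seed)
    pos = np.arange(n)
    weights = np.exp(-0.5 * ((pos - n / 2) / (sigma_frac * n)) ** 2)
    chosen = rng.choice(n, size=k, replace=False, p=weights / weights.sum())
    mask = np.zeros(n)
    mask[chosen] = 1.0
    return mask

low_res = low_res_mask()
gauss = gaussian_mask()
acceleration = N_LINES / N_SAMPLED   # 240 / 22, roughly 10.9
```

Keeping the number of sampled lines identical across masks is what makes the comparison between patterns a fair one.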

Peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) are used to evaluate the reconstruction results in the testing process. Figure 2 visualizes the reconstruction results for different sampling patterns and Table 2 shows the quantitative comparison. We can observe that the proposed method yields a reduced error map with increased SSIM and PSNR despite large reduction factors.
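As a reminder of how PSNR relates to the reconstruction error, a minimal sketch is given below. It assumes images normalized to [0, 1], so the peak value `data_range` is 1; the function name and the toy arrays are illustrative only, and SSIM is not reproduced here.

```python
import numpy as np

def psnr(recon, target, data_range=1.0):
    """Peak signal-to-noise ratio in dB for images with the given peak value."""
    mse = np.mean((recon - target) ** 2)
    if mse == 0:
        return float("inf")   # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))

# Toy example: a constant error of 0.1 gives an MSE of 0.01.
target = np.zeros((4, 4))
recon = np.full((4, 4), 0.1)
value = psnr(recon, target)   # approximately 20 dB
```

Under this definition, a PSNR above 35 dB on [0, 1] images corresponds to a root-mean-square error below about 0.018.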

Conclusion

We proposed a deep learning-based AR-net that generates the optimal sampling pattern for the contrast-of-interest images and the de-aliasing model. The proposed method achieves average PSNR over 35 dB and SSIM over 0.98 with acceleration rates up to 10.

Acknowledgements

No acknowledgement found.

References

[1] L. Xiang et al., "Deep Learning Based Multi-Modal Fusion for Fast MR Reconstruction," IEEE Transactions on Biomedical Engineering, 2018.

[2] C. D. Bahadir, A. V. Dalca, and M. R. Sabuncu, "Adaptive Compressed Sensing MRI with Unsupervised Learning," arXiv preprint arXiv:1907.11374, 2019.

[3] C. D. Bahadir, A. V. Dalca, and M. R. Sabuncu, "Learning-based Optimization of the Under-sampling Pattern in MRI," in International Conference on Information Processing in Medical Imaging, 2019: Springer, pp. 780-792.

[4] O. Ronneberger, P. Fischer, and T. Brox, "U-net: Convolutional networks for biomedical image segmentation," in International Conference on Medical image computing and computer-assisted intervention, 2015: Springer, pp. 234-241.

[5] B. H. Menze et al., "The multimodal brain tumor image segmentation benchmark (BRATS)," IEEE Transactions on Medical Imaging, vol. 34, no. 10, pp. 1993-2024, 2014.

[6] S. Bakas et al., "Advancing the cancer genome atlas glioma MRI collections with expert segmentation labels and radiomic features," Nature Scientific Data, vol. 4, p. 170117, 2017.

[7] S. Bakas et al., "Identifying the best machine learning algorithms for brain tumor segmentation, progression assessment, and overall survival prediction in the BRATS challenge," arXiv preprint arXiv:1811.02629, 2018.

[8] S. Bakas et al., "Segmentation labels and radiomic features for the pre-operative scans of the TCGA-GBM collection," The Cancer Imaging Archive, 2017, doi: 10.7937/K9/TCIA.2017.GJQ7R0EF.

[9] S. Bakas et al., "Segmentation labels and radiomic features for the pre-operative scans of the TCGA-LGG collection," The Cancer Imaging Archive, 2017, doi: 10.7937/K9/TCIA.2017.KLXWJJ1Q.

Figures