3611

Parallel Imaging with a Combination of SENSE and Generative Adversarial Networks (GAN)

¹Yantai University, Yantai, China, ²Fudan University, Shanghai, China

Synopsis

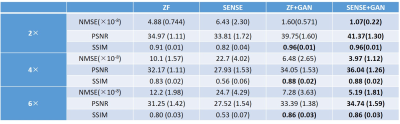

This study uses a GAN architecture to remove the g-factor artifacts in SENSE reconstructions. The proposed method outperforms both SENSE alone and zero-filling followed by a GAN (ZF+GAN) on the measured quality metrics (lower NMSE, higher PSNR and SSIM). It also preserves image details at under-sampling factors of up to 6-fold, which makes it promising for clinical applications.

Background

MRI is an advanced imaging method, but one of its major limitations is its low imaging speed. To accelerate MR image acquisition while maintaining high image quality, methods based on reconstruction from under-sampled k-space have been developed. For multi-channel coils and parallel imaging, the most commonly used reconstruction method is SENSE [1]. However, SENSE introduces g-factor artifacts at high under-sampling factors. Recently, many studies [2-4] have used convolutional neural networks (CNNs) for image reconstruction. The purpose of this work is to use a GAN architecture to remove the g-factor artifacts from the SENSE reconstruction and thus obtain artifact-free final images.

Materials and Methods
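The retrospective under-sampling described in this section (40 fully sampled centre lines plus uniform sampling elsewhere) can be sketched as follows. This is a minimal illustration, not the authors' code: the function names, the array layout `(coils, phase-encode lines, readout)`, the use of 320 phase-encode lines, and the choice to start the uniform pattern at line 0 are all assumptions.

```python
import numpy as np

def cartesian_mask(n_lines=320, accel=4, n_center=40):
    """Boolean mask over phase-encode lines (hypothetical helper).

    Every `accel`-th line is sampled, and a block of `n_center`
    lines around the k-space centre is fully sampled.
    """
    mask = np.zeros(n_lines, dtype=bool)
    mask[::accel] = True                      # uniform under-sampling
    start = (n_lines - n_center) // 2
    mask[start:start + n_center] = True       # fully sampled centre block
    return mask

def undersample(kspace, mask):
    """Zero out the unsampled phase-encode lines.

    kspace: complex array of shape (coils, n_lines, n_readout) (assumed layout).
    """
    return kspace * mask[None, :, None]

# Toy example: 8 coils, 320 phase-encode lines, 256 readout points.
mask = cartesian_mask(320, accel=4, n_center=40)
kspace = np.ones((8, 320, 256), dtype=complex)
under = undersample(kspace, mask)
```

Because the centre block is always fully sampled, the effective acceleration of such a mask is lower than the nominal factor.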

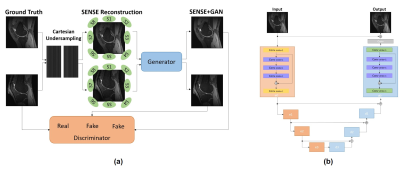
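As background for the SENSE step, the sketch below unfolds one group of aliased pixels by least squares and computes the corresponding g-factor, following the standard formulation in reference 1. It assumes an identity noise covariance and uses random synthetic coil sensitivities; the function names are hypothetical, and this is not the authors' implementation.

```python
import numpy as np

def sense_unfold(aliased, sens):
    """Unfold one group of R aliased pixels (hypothetical helper).

    aliased: (n_coils,) complex coil signals at one aliased location
    sens:    (n_coils, R) complex coil sensitivities at the R true locations
    Returns the least-squares estimate of the R true pixel values.
    """
    unfolded, *_ = np.linalg.lstsq(sens, aliased, rcond=None)
    return unfolded

def g_factor(sens):
    """g-factor of each unfolded pixel, assuming identity noise covariance:
    g_i = sqrt([(S^H S)^-1]_ii * [S^H S]_ii).
    """
    shs = sens.conj().T @ sens
    return np.sqrt(np.real(np.diag(np.linalg.inv(shs)) * np.diag(shs)))

# Synthetic example: 8 coils, 4-fold aliasing, noise-free signals.
rng = np.random.default_rng(0)
n_coils, R = 8, 4
sens = rng.standard_normal((n_coils, R)) + 1j * rng.standard_normal((n_coils, R))
truth = rng.standard_normal(R) + 1j * rng.standard_normal(R)
aliased = sens @ truth                 # each coil sees a weighted sum of R pixels
recovered = sense_unfold(aliased, sens)
g = g_factor(sens)
```

The g-factor is always at least 1 and grows as the coil sensitivities at the aliased locations become harder to tell apart, which is why noise amplification becomes more severe at higher acceleration factors.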

We call our method “SENSE+GAN”. The architecture of the proposed SENSE+GAN reconstruction method is shown in Figure 1(a). The multi-coil k-space data were reconstructed using SENSE before being fed into the GAN. The role of the generator is to remove the g-factor artifacts. Training can benefit more from a SENSE-reconstructed input than from a zero-filling (ZF) reconstruction. We adopted a residual U-Net architecture (shown in Figure 1(b)) as the generator, which consisted of an encoder, a decoder and symmetric skip connections between the encoder and decoder blocks.

We used a public knee database [5] containing 20 subjects to evaluate our method. The images were obtained on a GE 3.0T scanner (GE Healthcare, Milwaukee, WI, USA) using a 3D FSE CUBE sequence with proton density (PD) weighting (TE = 25 ms, TR = 1550 ms, FOV = 160 mm, matrix size = 320×320×256). All data were acquired with 8-channel knee coils. For each subject, the 100 central slices were used for training and testing. The data were retrospectively under-sampled in k-space using Cartesian masks with 2×, 4× and 6× acceleration. A total of 40 k-space center lines were fully sampled to estimate the sensitivity maps. The rest of k-space was uniformly sampled at the given acceleration rate. To avoid overfitting, the network was trained with standard augmentations, including random rotation, shearing and flipping. All the data were randomly split into two groups: 1600 images for training and 400 images for validation.

We compared the performance of SENSE+GAN to that of ZF+GAN at different acceleration factors (2×, 4×, 6×). The ground-truth images were computed as the square root of the sum of squares (SSOS) of the fully sampled multi-coil images. To assess the quality of the reconstructed images, we applied three quality metrics to all the images: NRMSE, PSNR and SSIM.

Results
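For reference, the SSOS ground truth and two of the three reported metrics can be sketched in NumPy as below. The normalisations are assumptions, since the abstract does not state them: here NRMSE is normalised by the Euclidean norm of the reference, and PSNR uses the reference maximum as the peak value. SSIM is omitted to keep the block dependency-free; scikit-image's `structural_similarity` is one commonly used implementation.

```python
import numpy as np

def ssos(coil_images):
    """Square root of the sum of squares over the coil axis (axis 0)."""
    return np.sqrt(np.sum(np.abs(coil_images) ** 2, axis=0))

def nrmse(ref, rec):
    """Root-mean-square error normalised by the norm of the reference
    (assumed normalisation)."""
    return np.linalg.norm(rec - ref) / np.linalg.norm(ref)

def psnr(ref, rec):
    """Peak signal-to-noise ratio in dB, with the reference maximum
    taken as the peak value (assumed convention)."""
    mse = np.mean((rec - ref) ** 2)
    return 10 * np.log10(ref.max() ** 2 / mse)

# Toy check: a uniform image reconstructed with a 10% intensity error.
ref = np.ones((4, 4))
rec = 1.1 * ref
err = nrmse(ref, rec)
quality_db = psnr(ref, rec)
combined = ssos(np.array([[3.0], [4.0]]))   # two "coils", one pixel
```

Lower NRMSE and higher PSNR indicate a reconstruction closer to the ground truth.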

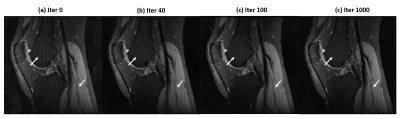

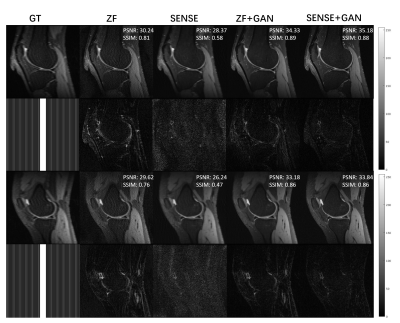

Figure 2 shows intermediate images from successive training iterations. The g-factor artifacts remaining in the SENSE-reconstructed image were removed gradually over the iterations. Figure 3 shows a representative example of a SENSE+GAN reconstruction. The zoomed-in images and the corresponding error maps show the advantages of the proposed method. SENSE+GAN preserves image details especially well compared to ZF+GAN. Two representative reconstruction examples with an acceleration factor of 6 are shown in Figure 4. The SENSE reconstruction is quite noisy at this high acceleration rate, but after applying the GAN the noise level is largely reduced and the image quality is clearly improved. The ZF images are so blurred that, even after applying the GAN, some fine details are lost, which is not acceptable for clinical applications. As can be seen in Figure 4, the proposed SENSE+GAN method produces a more faithful reconstruction than ZF+GAN. Overall, SENSE+GAN performs consistently better than the other methods in terms of pixel-wise error and the preservation of fine details. The proposed SENSE+GAN method performs best among all the methods on all measured quality metrics (highlighted in bold in Table 1).

Discussion and Conclusion

In conclusion, we have presented a novel framework for accelerated MRI reconstruction that combines SENSE reconstruction with a GAN. The results show that the proposed method is capable of producing faithful image reconstructions from highly under-sampled k-space data. The SENSE+GAN method consistently outperforms the SENSE and ZF+GAN approaches on all measured quality metrics. The improvement is more pronounced at higher under-sampling rates, which is promising for many clinical applications.

Acknowledgements

This work is supported by the National Natural Science Foundation of China (No. 61902338).

References

1. Pruessmann KP, Weiger M, Scheidegger MB, Boesiger P. SENSE: sensitivity encoding for fast MRI. Magnetic Resonance in Medicine 1999;42(5):952-962.

2. Schlemper J, Caballero J, Hajnal JV, et al. A Deep Cascade of Convolutional Neural Networks for Dynamic MR Image Reconstruction. IEEE Transactions on Medical Imaging 2017;37(2):491-503.

3. Wang S, Su Z, Ying L, et al. Accelerating magnetic resonance imaging via deep learning. IEEE International Symposium on Biomedical Imaging; 2016. p. 514-517.

4. Hammernik K, Klatzer T, Kobler E, et al. Learning a variational network for reconstruction of accelerated MRI data. Magnetic Resonance in Medicine 2018;79(6):3055-3071.

5. http://mridata.org/fullysampled/knees.

Figures