3588

Real-Time Cardiac Cine MRI with Residual Convolutional Recurrent Neural Network

1United Imaging Intelligence, Cambridge, MA, United States, 2UIH America, Inc., Houston, TX, United States

Synopsis

Real-time cardiac cine MRI does not require ECG gating during data acquisition and is more useful for patients who cannot hold their breath or who have abnormal heart rhythms. However, to achieve fast image acquisition, real-time cine commonly acquires highly undersampled data, which poses a significant challenge for MRI image reconstruction. We propose a residual convolutional RNN for real-time cardiac cine reconstruction. To the best of our knowledge, this is the first work applying a deep learning approach to Cartesian real-time cardiac cine reconstruction. Based on evaluation by radiologists, our deep learning model shows superior performance compared to compressed sensing.

Introduction

Unlike retrospectively gated cine (retro-cine), real-time cardiac cine MRI (RT-cine) requires neither ECG gating nor breath-holding, so it can be applied to a broader patient cohort and simplifies the scanning process 1. To achieve a high temporal resolution (<50 ms) and an acceptable spatial resolution (<2 mm), highly undersampled data (>10x acceleration) need to be collected for RT-cine, which poses a significant challenge for image reconstruction. Compressed sensing (CS) based approaches have been proposed for dynamic cardiac MR (CMR) 2-7 and have shown good reconstruction quality under high acceleration. The application of CS to RT-cine, however, is limited by slow reconstruction due to iterative algorithms and by difficult hyperparameter tuning. Deep learning based methods, on the other hand, can potentially achieve much faster reconstruction and have thus drawn considerable attention in recent years 8-12. Qin et al. 13 developed a convolutional recurrent neural network for dynamic CMR. While these studies show promising results, they have several limitations. First, simulated undersampled data from retro-cine, rather than real RT-cine data, are used for evaluation. Second, the acceleration rates are usually lower than 10x. Third, algorithms are tested on synthesized single-coil data rather than multi-coil data. Fourth, the reconstruction quality evaluations lack clinical assessment.

In this study, we propose a residual convolutional recurrent neural network (Res-CRNN) for RT-cine MRI. Our deep learning model is evaluated on highly accelerated (12x) multi-coil RT-cine data and compared to CS. To the best of our knowledge, this is the first work to apply a deep learning approach to real Cartesian RT-cine MRI and to have the results evaluated by radiologists.

Methods

Since it is almost impossible to obtain ground truth (i.e., fully sampled data) for RT-cine, we adopt the strategy of training the deep learning model on retro-cine data. The trained model is then applied directly to the undersampled multi-coil RT-cine data acquired on the scanner for image reconstruction.

Data collection: We collected retro-cine data from 51 patients (343 slices) with a bSSFP sequence on a clinical 3T scanner (uMR 790, United Imaging Healthcare, Shanghai, China) with the approval of the local IRB. We also collected RT-cine data of 27 slices from two patients, acquired using a bSSFP sequence with variable Latin hypercube undersampling 14. Imaging parameters were: imaging matrix = 192x180, TR/TE = 2.8/1.3 ms, spatial resolution = 1.82x1.82 mm², and temporal resolution = 34 ms and 42 ms for retro-cine and RT-cine, respectively. Both retro-cine and RT-cine data were acquired using phased-array coils.
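As a rough consistency check on these parameters, the sketch below relates the 12x acceleration to the reported RT-cine temporal resolution. It assumes that the 180-sample matrix dimension is the phase-encoding direction and that each frame costs one TR per acquired line; neither assumption is stated in the text, and the function names are illustrative only.

```python
def lines_per_frame(n_phase_encodes: int, acceleration: float) -> float:
    """Phase-encoding lines acquired per frame at a given acceleration."""
    return n_phase_encodes / acceleration


def frame_duration_ms(n_lines: float, tr_ms: float) -> float:
    """Acquisition time of one frame, assuming one TR per line."""
    return n_lines * tr_ms


# Assumed: 180 phase encodes, 12x acceleration, TR = 2.8 ms.
n_lines = lines_per_frame(180, 12)
rt_frame_ms = frame_duration_ms(n_lines, 2.8)
print(n_lines, round(rt_frame_ms, 1))  # 15.0 42.0
```

Under these assumptions, 15 lines per frame at TR = 2.8 ms gives 42 ms, which matches the reported RT-cine temporal resolution.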

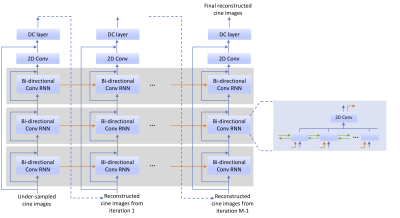

Res-CRNN: Figure 1 depicts the proposed Res-CRNN. The network includes three bi-directional convolutional RNN layers to model dynamic information 13, a data consistency layer 8, and two levels of residual connections, which encourage the network to learn high-frequency details. To reduce GPU memory consumption and speed up reconstruction, one extra 2DConv layer is added within each bi-directional ConvRNN layer to reduce the number of feature maps in the hidden states. All Conv kernels are 3x3, and the number of filters is k=48 for the bi-directional ConvRNN layers and k=2 for the other 2DConv layers.
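To illustrate what a data consistency layer does, here is a minimal NumPy sketch of the noiseless, hard-replacement form commonly used in cascaded reconstruction networks: wherever k-space was sampled, the network's prediction is overwritten with the acquired value. This is an assumption about the general technique, not the Res-CRNN implementation; the function name, array shapes, and random test data are hypothetical.

```python
import numpy as np


def data_consistency(image, k0, mask):
    """Hard data consistency for one coil.

    image: complex array (frames, ny, nx), the network output.
    k0:    acquired k-space, zero where not sampled.
    mask:  boolean array, True where k-space was sampled.
    """
    k_pred = np.fft.fft2(image, norm="ortho")  # FFT over the last two axes
    k_dc = np.where(mask, k0, k_pred)          # keep acquired samples
    return np.fft.ifft2(k_dc, norm="ortho")


# Toy data (random, for illustration only).
rng = np.random.default_rng(0)
shape = (4, 16, 16)
truth = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)
mask = rng.random(shape) < 0.25
k0 = np.fft.fft2(truth, norm="ortho") * mask

network_output = np.zeros_like(truth)  # stand-in for a CNN/RNN prediction
corrected = data_consistency(network_output, k0, mask)
```

After this step the k-space of `corrected` agrees with the acquired samples at every sampled location, so the network only has to fill in the unsampled locations.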

Model training: The retro-cine data were retrospectively undersampled using the same 12x acceleration sampling mask as in RT-cine and then fed into the neural network as input. A combination of MSE and SSIM 15 was used as the training loss. Images from each coil were reconstructed independently and then combined using the root-sum-of-squares method.
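The retrospective undersampling and coil combination steps can be sketched as follows. This is a simplified illustration: a uniformly random choice of phase-encoding lines per frame stands in for the variable Latin hypercube pattern, the k-space data are random numbers, and all names and array shapes are assumptions rather than details taken from the study.

```python
import numpy as np


def make_line_mask(n_frames, n_pe, n_ro, acceleration, rng):
    """Boolean Cartesian mask with n_pe // acceleration lines per frame.

    Random line selection is a placeholder for the actual sampling pattern.
    """
    lines_per_frame = n_pe // acceleration
    mask = np.zeros((n_frames, n_pe, n_ro), dtype=bool)
    for t in range(n_frames):
        chosen = rng.choice(n_pe, size=lines_per_frame, replace=False)
        mask[t, chosen, :] = True  # full readout for each chosen line
    return mask


def rss_combine(coil_images, coil_axis=0):
    """Root-sum-of-squares combination across the coil axis."""
    return np.sqrt(np.sum(np.abs(coil_images) ** 2, axis=coil_axis))


rng = np.random.default_rng(0)
n_coils, n_frames, n_pe, n_ro = 4, 5, 180, 192
shape = (n_coils, n_frames, n_pe, n_ro)
kspace = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

mask = make_line_mask(n_frames, n_pe, n_ro, 12, rng)
undersampled = kspace * mask                       # mask broadcasts over coils
zero_filled = np.fft.ifft2(undersampled, norm="ortho")
combined = rss_combine(zero_filled)                # shape (frames, n_pe, n_ro)
```

In the pipeline described above, the per-coil reconstruction by the network would take the place of the plain zero-filled inverse FFT before the root-sum-of-squares step.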

Evaluation: For comparison, the same RT-cine data were reconstructed by CS using BART 16. Temporal total variation and spatial wavelet regularization were adopted, and ADMM was used for optimization with a maximum of 100 iterations. CS hyperparameters were heuristically optimized on one representative dataset. Coil sensitivity maps were calculated using ESPIRiT 17 from temporally averaged data. Res-CRNN and CS were evaluated on a workstation (Intel Xeon E5-2630 CPU, 256 GB memory, and Nvidia Tesla V100 GPU).

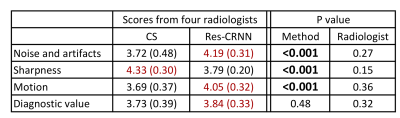

The reconstruction results were independently evaluated by four experienced radiologists in four categories (Figure 3), with a score assigned on a 1-5 scale (1 = unacceptable, 5 = perfect). Each pair of reconstruction videos from CS and Res-CRNN was presented to the radiologists side by side in a randomized and blinded fashion. Statistical significance was assessed by repeated measures ANOVA.
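For readers unfamiliar with the test, the sketch below computes the F statistic of a one-way repeated measures ANOVA with NumPy. The exact factor design used in the study is not specified here, so this single-factor version is only an assumption, and the scores in the example are made-up toy values, not study data.

```python
import numpy as np


def rm_anova_oneway(scores):
    """One-way repeated measures ANOVA.

    scores: array of shape (n_subjects, n_conditions); each row holds the
    scores of one subject (e.g. one slice) under every condition.
    Returns (F statistic, df_conditions, df_error).
    """
    scores = np.asarray(scores, dtype=float)
    n_subjects, n_conditions = scores.shape
    grand_mean = scores.mean()

    ss_total = np.sum((scores - grand_mean) ** 2)
    ss_cond = n_subjects * np.sum((scores.mean(axis=0) - grand_mean) ** 2)
    ss_subj = n_conditions * np.sum((scores.mean(axis=1) - grand_mean) ** 2)
    ss_error = ss_total - ss_cond - ss_subj  # residual after removing both

    df_cond = n_conditions - 1
    df_error = (n_subjects - 1) * (n_conditions - 1)
    f_stat = (ss_cond / df_cond) / (ss_error / df_error)
    return f_stat, df_cond, df_error


# Toy scores (hypothetical): 4 subjects, 2 conditions.
toy_scores = [[3, 4], [4, 5], [3, 5], [5, 5]]
f_stat, df_cond, df_error = rm_anova_oneway(toy_scores)
```

With only two conditions, this F statistic equals the square of the paired t statistic, which gives a convenient sanity check; a P value would then follow from the F distribution with `df_cond` and `df_error` degrees of freedom.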

Results

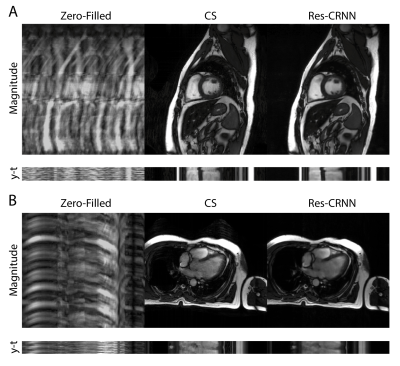

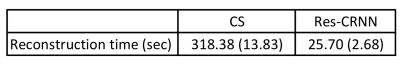

Figure 2 shows examples of RT-cine reconstruction results. Res-CRNN shows less noise and fewer aliasing artifacts than CS. Figure 3 shows the evaluation by the four radiologists. Compared with CS, Res-CRNN receives significantly better scores for noise and artifacts as well as for motion, but lower scores for sharpness (all P<0.001). There is no significant difference in diagnostic value between CS and Res-CRNN (P=0.48). No significant difference among the four radiologists was observed (all P>0.1). Res-CRNN reconstruction is more than ten times faster than CS (25.7 s vs. 318.3 s on average).

Discussion and Conclusion

Unlike other studies using synthetic data from retro-cine 8,13,18 or processed coil-combined data from RT-cine 11, we propose a deep learning model, Res-CRNN, for real multi-coil RT-cine MRI reconstruction. The deep learning method was rated higher than CS by the radiologists for noise/artifact reduction and motion depiction. Moreover, its reconstruction is more than ten times faster than CS, making it suitable for daily clinical use.

Acknowledgements

No acknowledgement found.

References

1. Qing Yuan, Leon Axel, Eduardo H Hernandez, Lawrence Dougherty, James J Pilla, Craig H Scott, Victor A Ferrari, and Aaron S Blom. Cardiac-respiratory gating method for magnetic resonance imaging of the heart. Magnetic Resonance in Medicine, 43(2):314–318, 2000.

2. Chen Chen, Yeqing Li, Leon Axel, and Junzhou Huang. Real time dynamic MRI with dynamic total variation. International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 138–145. Springer, 2014.

3. Tomoyuki Kido, Teruhito Kido, Masashi Nakamura, Kouki Watanabe, Michaela Schmidt, Christoph Forman, and Teruhito Mochizuki. Compressed sensing real-time cine cardiovascular magnetic resonance: accurate assessment of left ventricular function in a single-breath-hold. Journal of Cardiovascular Magnetic Resonance, 18(1):50, 2016.

4. Michael S Hansen, Thomas S Sørensen, Andrew E Arai, and Peter Kellman. Retrospective reconstruction of high temporal resolution cine images from real-time MRI using iterative motion correction. Magnetic Resonance in Medicine, 68(3):741–750, 2012.

5. Peter Kellman, Christophe Chefd’hotel, Christine H Lorenz, Christine Mancini, Andrew E Arai, and Elliot R McVeigh. High spatial and temporal resolution cardiac cine MRI from retrospective reconstruction of data acquired in real time using motion correction and resorting. Magnetic Resonance in Medicine, 62(6):1557–1564, 2009.

6. Hong Jung, Kyunghyun Sung, Krishna S Nayak, Eung Yeop Kim, and Jong Chul Ye. k-t FOCUSS: a general compressed sensing framework for high resolution dynamic MRI. Magnetic Resonance in Medicine, 61(1):103–116, 2009.

7. Urs Gamper, Peter Boesiger, and Sebastian Kozerke. Compressed sensing in dynamic MRI. Magnetic Resonance in Medicine, 59(2):365–373, 2008.

8. Jo Schlemper, Jose Caballero, Joseph V Hajnal, Anthony N Price, and Daniel Rueckert. A deep cascade of convolutional neural networks for dynamic MR image reconstruction. IEEE Transactions on Medical Imaging, 37(2):491–503, 2017.

9. Kerstin Hammernik, Teresa Klatzer, Erich Kobler, Michael P Recht, Daniel K Sodickson, Thomas Pock, and Florian Knoll. Learning a variational network for reconstruction of accelerated MRI data. Magnetic Resonance in Medicine, 79(6):3055–3071, 2018.

10. Yoseob Han, Leonard Sunwoo, and Jong Chul Ye. k-space deep learning for accelerated MRI. IEEE Transactions on Medical Imaging, 2019.

11. Andreas Hauptmann, Simon Arridge, Felix Lucka, Vivek Muthurangu, and Jennifer A Steeden. Real-time cardiovascular MR with spatio-temporal artifact suppression using deep learning – proof of concept in congenital heart disease. Magnetic Resonance in Medicine, 81(2):1143–1156, 2019.

12. Kyong Hwan Jin, Harshit Gupta, Jerome Yerly, Matthias Stuber, and Michael Unser. Time-dependent deep image prior for dynamic MRI. arXiv:1910.01684, 2019.

13. Chen Qin, Jo Schlemper, Jose Caballero, Anthony N Price, Joseph V Hajnal, and Daniel Rueckert. Convolutional recurrent neural networks for dynamic MR image reconstruction. IEEE Transactions on Medical Imaging, 38(1):280–290, 2018.

14. Jingyuan Lyu, Yu Ding, Jiali Zhong, Zhongqi Zhang, Lele Zhao, Jian Xu, Qi Liu, Ruchen Peng, and Weiguo Zhang. Toward single breath-hold whole-heart coverage compressed sensing MRI using variable spatial-temporal Latin hypercube and echo-sharing (VALAS). ISMRM, 2019.

15. Hang Zhao, Orazio Gallo, Iuri Frosio, and Jan Kautz. Loss functions for neural networks for image processing. arXiv:1511.08861, 2015.

16. Jonathan I Tamir, Frank Ong, Joseph Y Cheng, Martin Uecker, and Michael Lustig. Generalized magnetic resonance image reconstruction using the Berkeley Advanced Reconstruction Toolbox.

17. Martin Uecker, Peng Lai, Mark J Murphy, Patrick Virtue, Michael Elad, John M Pauly, Shreyas S Vasanawala, and Michael Lustig. ESPIRiT – an eigenvalue approach to autocalibrating parallel MRI: where SENSE meets GRAPPA. Magnetic Resonance in Medicine, 71(3):990–1001, 2014.

18. Christopher Sandino, Peng Lai, Shreyas Vasanawala, and Joseph Cheng. DL-ESPIRiT: Improving robustness to SENSE model errors in deep learning-based reconstruction. ISMRM, 2019.

Figures