3573

Dictionary-based convolutional neural network (CNN) for MR Fingerprinting with highly undersampled data

Yong Chen1,2, Zhenghan Fang3, and Weili Lin1,2

1Radiology, University of North Carolina at Chapel Hill, Chapel Hill, NC, United States, 2Biomedical Research Imaging Center, University of North Carolina at Chapel Hill, Chapel Hill, NC, United States, 3Biomedical Engineering, University of North Carolina at Chapel Hill, Chapel Hill, NC, United States

Synopsis

In this study, we propose a framework for generating a simulated training dataset to train a convolutional neural network, which can then be applied to highly undersampled MR Fingerprinting images to extract quantitative tissue properties. This eliminates the need to acquire a training dataset from multiple subjects and has the potential to enable wide application of deep learning techniques in quantitative imaging using MR Fingerprinting.

Introduction

MR Fingerprinting (MRF) is an imaging framework for efficient and simultaneous quantification of multiple tissue parameters (1). Recent studies have shown that integrating deep learning into MRF can help extract more useful features from the acquired MRF data to improve tissue characterization and reduce scan time (2, 3). For example, Cohen et al. developed a 4-layer neural network to extract tissue properties (2). The network was trained on MRF dictionary entries and can be applied to fully-sampled MRF datasets. Fang et al. proposed a deep learning model that can be applied to highly undersampled MRF acquisitions and is compatible with most existing MRF methods (3). However, this network was trained on MRF datasets acquired from multiple subjects, which takes extra time and does not take advantage of the existing MRF dictionary. In this study, we aimed to develop a new deep learning method that 1) is trained with a simulated dataset generated from the MRF dictionary, and 2) can be directly applied to highly undersampled MRF images.

Methods

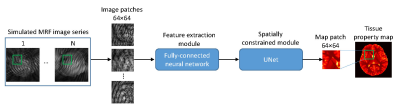

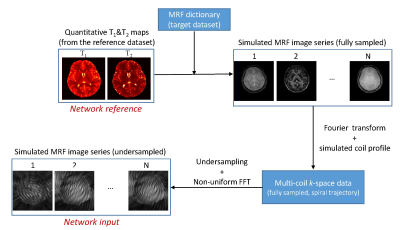

The highly undersampled MRF dataset, named the target dataset, was acquired using a FISP-based MRF sequence with a total of 2300 time frames (4). Only one spiral arm was acquired for each MRF image, so each image is highly aliased. As in the original MRF method, an MRF dictionary containing all possible signal evolutions was generated using Bloch equation simulations (1). Template matching was then applied to extract the ground-truth tissue maps using all the acquired time frames. In this study, we aimed to leverage deep learning techniques to accelerate the MRF acquisition by using only the first 25% of the time frames (~570 of 2300) for tissue characterization.

The same CNN structure as developed in (3) was used in this study. The network contains two modules, the feature extraction module and the spatially constrained module (Fig. 1). Image patches with a size of 64×64 were used during the training process. Prior studies have shown that training in this manner can utilize the information from neighboring pixels to improve tissue characterization (3).

To generate a training dataset containing such neighboring information, quantitative T1 and T2 maps obtained in another study (named the reference dataset) were used (5). The dataset includes ~60 pairs of axial T1 and T2 maps acquired from five normal subjects using MRF. The differences between the MRF methods used to acquire the reference and target datasets are summarized in Fig. 2. It is worth noting that only the quantitative maps from the reference dataset were utilized, and any other dataset containing co-registered T1 and T2 maps could be used for this purpose. The workflow to generate the simulated training dataset based on the MRF dictionary is illustrated in Fig. 3. In brief, each pair of T1 and T2 maps was combined with the MRF dictionary for the target dataset to generate a series of fully-sampled MRF images (~570 images). Based on simulated coil maps, multi-coil fully-sampled k-space data with a spiral readout were computed. The same spiral undersampling pattern used in the MRF acquisitions was then applied to generate the simulated undersampled MRF image series. Repeating the process for all pairs of T1 and T2 maps in the reference dataset created a set of training data, including both the network input and the reference maps (Fig. 3). The trained network was then applied to the highly undersampled MRF images in the target dataset to extract T1 and T2 values, and the results were compared to the ground-truth maps obtained using all 2300 time points.
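The first step of this workflow, turning a pair of T1 and T2 maps into a fully-sampled MRF image series by dictionary lookup, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the nearest-entry lookup with a relative distance, the background convention (T1 <= 0), and the toy dictionary values are all hypothetical, and the coil-sensitivity and spiral undersampling steps are omitted.

```python
def nearest_entry(t1, t2, dict_t1t2):
    """Index of the dictionary entry closest to (t1, t2) in relative distance.
    The distance measure is an assumption made for this sketch."""
    best, best_d = 0, float("inf")
    for i, (d_t1, d_t2) in enumerate(dict_t1t2):
        d = ((t1 - d_t1) / d_t1) ** 2 + ((t2 - d_t2) / d_t2) ** 2
        if d < best_d:
            best, best_d = i, d
    return best


def simulate_series(t1_map, t2_map, dict_t1t2, dict_signals, n_frames):
    """Build a fully-sampled image series, indexed as series[frame][row][col],
    by copying the dictionary signal evolution of each pixel's (T1, T2) pair."""
    rows, cols = len(t1_map), len(t1_map[0])
    series = [[[0.0] * cols for _ in range(rows)] for _ in range(n_frames)]
    for r in range(rows):
        for c in range(cols):
            if t1_map[r][c] <= 0:  # assumed background convention
                continue
            k = nearest_entry(t1_map[r][c], t2_map[r][c], dict_t1t2)
            for f in range(n_frames):
                series[f][r][c] = dict_signals[k][f]
    return series


# Toy dictionary: two entries with made-up signal evolutions (not Bloch simulated).
dict_t1t2 = [(800.0, 60.0), (1200.0, 90.0)]          # (T1, T2) in ms
dict_signals = [[0.1, 0.2, 0.3, 0.4], [0.4, 0.3, 0.2, 0.1]]

# Toy 2x2 reference maps; the zero pixel is background.
t1_map = [[0.0, 820.0], [1150.0, 1200.0]]
t2_map = [[0.0, 58.0], [95.0, 90.0]]

series = simulate_series(t1_map, t2_map, dict_t1t2, dict_signals, n_frames=3)
```

In a real pipeline the dictionary signals would come from the Bloch simulation for the target sequence, and the resulting series would then pass through the multi-coil spiral sampling step described above to produce the aliased network input.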

As a comparison, a 4-layer fully-connected network trained with MRF dictionary entries was also implemented in the study (2). The quantitative maps were extracted using the first 570 time points in the target dataset.
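For context, the template matching used to produce the ground-truth maps can be sketched as a search for the dictionary entry with the largest normalized inner product with the measured signal. The sketch below is a generic illustration, not the code used in this study: the function names, the optional `n_frames` truncation argument, and the toy signals are assumptions made for the example.

```python
import math


def normalize(signal):
    """Scale a (possibly complex) signal evolution to unit Euclidean norm."""
    norm = math.sqrt(sum(abs(x) ** 2 for x in signal))
    return [x / norm for x in signal]


def template_match(signal, dict_signals, dict_t1t2, n_frames=None):
    """Return the (T1, T2) pair of the dictionary entry whose normalized signal
    has the largest absolute inner product with the measured signal.
    If n_frames is given, only the first n_frames time points are used."""
    n = len(signal) if n_frames is None else n_frames
    s = normalize(signal[:n])
    best, best_score = 0, -1.0
    for i, entry in enumerate(dict_signals):
        d = normalize(entry[:n])
        score = abs(sum(a.conjugate() * b for a, b in zip(d, s)))
        if score > best_score:
            best, best_score = i, score
    return dict_t1t2[best]


# Toy dictionary with made-up signal evolutions (not Bloch simulated).
dict_t1t2 = [(800.0, 60.0), (1200.0, 90.0)]          # (T1, T2) in ms
dict_signals = [[1.0, 0.8, 0.6, 0.4], [1.0, 0.5, 0.25, 0.125]]

# A scaled, slightly perturbed copy of the second entry.
measured = [2.0, 1.02, 0.48, 0.26]

full_match = template_match(measured, dict_signals, dict_t1t2)
short_match = template_match(measured, dict_signals, dict_t1t2, n_frames=2)
```

Because both signals are normalized before comparison, the match is insensitive to the overall scaling of the measured signal.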

Results

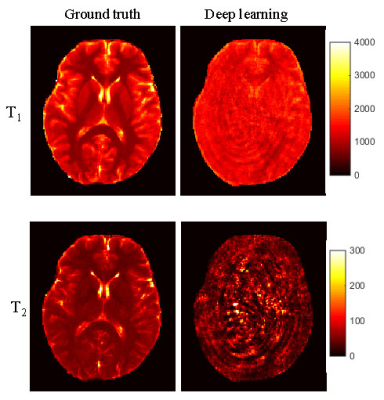

Fig. 4 shows the results obtained using the fully-connected network trained directly with the MRF dictionary entries. Significant aliasing artifacts were observed in both T1 and T2 maps, which suggests that this method is not applicable to undersampled MRF images. Fig. 5 shows the results obtained using the network trained with the simulated dataset. A significant improvement in map quality was noted compared to the results shown in Fig. 4. Compared to the results obtained with the CNN trained with acquired data, slightly higher NRMSE values were observed, which leaves room for further improvement.

Discussion and Conclusion

Prior studies have demonstrated that deep learning methods trained with acquired datasets can replace template matching in MRF to extract quantitative tissue properties and achieve similar performance with fewer time points (3). However, a drawback of this approach is that even a small change in either the MRF acquisition parameters or the geometry settings requires a new training dataset to be acquired from many subjects, which is time-consuming and makes it inefficient to evaluate the technique for various applications. In this study, a framework to generate a simulated training dataset based on the MRF dictionary was proposed. The dictionary is calculated using Bloch equation simulations and can be easily regenerated to reflect changes in the MRF design. This has the potential to allow wide application of deep learning in MRF. Future work will focus on further improving the quality of the maps obtained using the proposed method.

Acknowledgements

No acknowledgement found.

References

1. Ma D, et al. Nature, 2013; 187-192.

2. Cohen O, et al. MRM, 2018; 885-894.

3. Fang Z, et al. IEEE TMI, 2019; 2364-2374.

4. Jiang Y, et al. MRM, 2015; 1621-1631.

5. Fang Z, et al. ISMRM, 2019; p1109.

Figures

Figure 1.

Network structure consisting of a feature extraction module and a spatially constrained module. Image patches with a size of 64×64 were used to train the network.

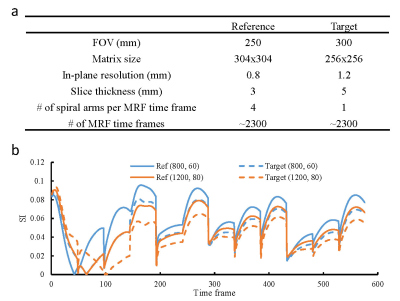

Figure 2.

Comparison of the MRF methods used to acquire the reference and target datasets for this study. (a) Comparison of key acquisition parameters in MRF. (b) Comparison of MRF signal evolutions in the dictionaries associated with the two datasets. Representative curves for two tissues with different T1 and T2 values are presented. These results demonstrate that substantial differences exist between the two MRF acquisitions.

Figure 3.

Workflow to generate the training dataset based on the MRF dictionary.

Figure 4.

Representative T1 and T2 maps obtained using the fully-connected network trained directly with the MRF dictionary entries. The results were obtained using the first 570 time points as the input data. Significant aliasing artifacts were observed when the network was applied to highly undersampled MRF images.

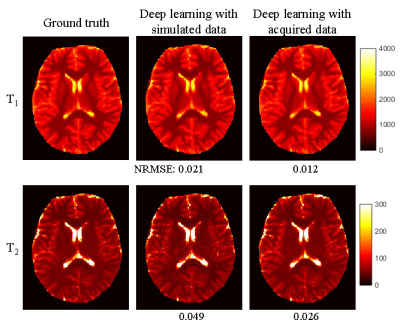

Figure 5.

Representative T1 and T2 maps obtained using the network trained with the simulated dataset. The results obtained using the network trained with acquired data are also plotted for comparison. Both results were obtained using ~570 time points. The normalized root-mean-square error (NRMSE) was calculated relative to the ground-truth results obtained using all 2300 time points.
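The caption does not state how the error is normalized. As one possible reading, the sketch below divides the root-mean-square error by the root-mean-square of the ground-truth map over a region of interest. The normalization choice, the `mask` argument, and the toy values are assumptions made for illustration and may differ from what was used in this study.

```python
import math


def nrmse(estimate, reference, mask=None):
    """RMSE between two 2D maps divided by the RMS of the reference.
    Only pixels where mask is truthy are included (all pixels if mask is None).
    The normalization by the reference RMS is an assumption of this sketch."""
    num = den = 0.0
    for r, ref_row in enumerate(reference):
        for c, ref_val in enumerate(ref_row):
            if mask is not None and not mask[r][c]:
                continue
            num += (estimate[r][c] - ref_val) ** 2
            den += ref_val ** 2
    return math.sqrt(num / den)


# Toy T1 maps in ms; the zero pixel is background and excluded by the mask.
reference = [[1000.0, 1200.0], [0.0, 800.0]]
estimate = [[1100.0, 1200.0], [0.0, 800.0]]
mask = [[v > 0 for v in row] for row in reference]

error = nrmse(estimate, reference, mask)
```

Other common conventions normalize by the mean or the range of the reference instead, so reported values are only comparable when the same definition is used.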