3567

Oriented Object Detection Convolutional Neural Network for Automated Prescription of Oblique MRI Acquisitions

¹Radiology and Biomedical Imaging, University of California San Francisco, San Francisco, CA, United States

Synopsis

High-quality scan prescription that optimally covers the area of interest with scan planes aligned to relevant anatomical structures is crucial for error-free radiologic interpretation. The goal of this project was to develop a machine learning pipeline for oblique scan prescription that could be trained on localizer images and metadata from previously acquired MR exams. To achieve that, we developed a novel multislice rotational region-based convolutional neural network (MS-R2CNN) architecture and evaluated it on a dataset of knee MRI exams.

Introduction

High-quality scan prescription that optimally covers the area of interest with scan planes aligned to relevant anatomical structures is crucial for error-free radiologic interpretation. Consistency of prescription is especially important for evaluating disease progression in serial imaging studies. Manual prescription quality varies significantly depending on the operator's skill and training. Automated scan planning has previously been developed for applications such as MR spectroscopic imaging¹⁻³ and knee MRI⁴. These techniques relied on computationally intensive iterative optimization and atlas registration algorithms, which made them difficult to incorporate into clinical protocols. More recently, a machine learning approach has been proposed for automated slice planning in the brain based on the locations of several anatomical landmarks⁵. However, it required manual pixel-level annotation of a large number of localizer images and was limited to brain anatomy.

Object detection convolutional neural network (CNN) architectures, such as Faster R-CNN⁶, have been widely used for automated bounding box placement in natural images. Specialized architectures have been developed for oriented object detection in applications such as text detection and satellite image analysis⁷⁻⁹. However, their application to MR image analysis has been limited, since these architectures expect single 2D images as input, whereas MR localizers are acquired as multislice stacks.

The goal of this project was to develop a machine learning pipeline for oblique scan prescription that could be trained on localizer images and metadata from previously acquired MR exams of any anatomical region, without the need for pixel-level annotation or manual feature engineering. To achieve that, we developed a novel multislice, rotational, region-based convolutional neural network (MS-R2CNN) architecture and evaluated it on a dataset of knee MRI exams.

Methods

For this project, we used a dataset of 1133 knee MRI exams collected in two previous studies conducted on 3T scanners (GE Healthcare, Waukesha, WI). The cohort included patients with and without osteoarthritis, patients after anterior cruciate ligament (ACL) injury, and patients at follow-up after ACL reconstruction. The dataset was shuffled and split into training and validation sets in a 70:30 ratio. Random horizontal flipping was used for data augmentation.

Geometric parameters (center coordinates, field of view, and three tilt angles) were extracted from the DICOM headers of 3D fast spin-echo (FSE) CUBE acquisitions. The localizer images immediately preceding each CUBE acquisition were sorted by orientation and stored, together with the extracted prescription parameters, in TFRecord files.
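The shuffled 70:30 split and the horizontal-flip augmentation described above can be sketched as follows. This is a minimal, stdlib-only illustration and not the authors' pipeline. It assumes that the split is done per exam and that each prescription has already been projected onto a localizer as a 2D inclined box `(cx, cy, w, h, theta_deg)` in pixel coordinates, with the angle measured from the image x-axis. All function names are hypothetical. The point it illustrates is that a flipped image needs a flipped box: the center is mirrored and the angle is negated.

```python
import random


def split_exams(exam_ids, train_fraction=0.7, seed=0):
    """Shuffle exam IDs and split them into training and validation lists."""
    ids = list(exam_ids)
    random.Random(seed).shuffle(ids)  # seeded so the split is reproducible
    n_train = round(train_fraction * len(ids))
    return ids[:n_train], ids[n_train:]


def flip_image_horizontally(image):
    """Mirror a 2D image, given as a list of pixel rows, left to right."""
    return [row[::-1] for row in image]


def flip_box_horizontally(box, image_width):
    """Mirror an inclined box (cx, cy, w, h, theta_deg) about the vertical image axis.

    Assumes pixel-center coordinates in [0, image_width - 1]. Mirroring maps a
    box orientation theta to 180 - theta, which for an undirected box axis is
    the same orientation as -theta, so the angle is simply negated.
    """
    cx, cy, w, h, theta = box
    return (image_width - 1 - cx, cy, w, h, -theta)


def random_horizontal_flip(image, box, rng, p=0.5):
    """With probability p, flip the image and its box together."""
    if rng.random() < p:
        return flip_image_horizontally(image), flip_box_horizontally(box, len(image[0]))
    return image, box


# Usage: 1133 exams split 70:30 into training and validation sets.
train_ids, val_ids = split_exams(range(1133))
print(len(train_ids), len(val_ids))
```

Applying the same random decision to the image and its box keeps the training target consistent with the augmented pixels.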

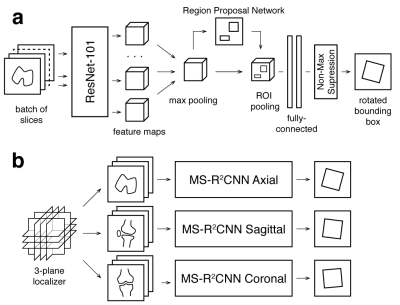

The MS-R2CNN architecture (Fig. 1) was based on the R2CNN oriented object detection architecture⁸, as implemented by Xue Yang et al.¹⁰ The network was implemented in Python with the TensorFlow framework. Whereas R2CNN performed oriented detection on single images, MS-R2CNN accepted as input a stack of localizer slices in one of three orientations (axial, sagittal, or coronal).

Since relevant image features could be found in any of the slices, the ten central slices of each stack were combined into a batch (10×256×256×3) and passed through a ResNet feature extractor, pre-trained on the ImageNet dataset, to generate a batch of feature maps (10×16×16×1024). These feature maps were combined using a 1D max-pooling operation across the slice dimension, producing a single set of feature maps (16×16×1024) that pooled features from all slices of the stack. These feature maps served as input to the subsequent layers of the R2CNN network, and the output of the network was the set of geometric parameters of an inclined (rotated) box.
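The slice-fusion step can be illustrated with NumPy. This is a sketch rather than the authors' TensorFlow code: the ResNet output is replaced by random stand-in feature maps, and `central_slices` and `fuse_slice_features` are hypothetical helpers. It assumes the 1D max-pooling spans the whole slice axis, so each output element keeps the strongest response of a feature channel at a given location across all ten slices. In TensorFlow, `tf.reduce_max(features, axis=0)` performs the same reduction.

```python
import numpy as np


def central_slices(stack, n=10):
    """Return the n central slices of a (num_slices, H, W, C) localizer stack."""
    if stack.shape[0] < n:
        raise ValueError(f"stack has {stack.shape[0]} slices, need at least {n}")
    start = (stack.shape[0] - n) // 2
    return stack[start:start + n]


def fuse_slice_features(feature_maps):
    """Max-pool per-slice feature maps over the slice axis.

    (num_slices, 16, 16, 1024) -> (16, 16, 1024)
    """
    return feature_maps.max(axis=0)


rng = np.random.default_rng(0)
stack = rng.random((15, 256, 256, 3), dtype=np.float32)      # hypothetical 15-slice localizer
batch = central_slices(stack)                                 # (10, 256, 256, 3)
features = rng.random((10, 16, 16, 1024), dtype=np.float32)  # stand-in for ResNet feature maps
fused = fuse_slice_features(features)                        # (16, 16, 1024)
print(batch.shape, fused.shape)
```

Because the maximum is order-independent, the fused map does not depend on slice order, and a feature that appears strongly in any single slice survives fusion.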

The R2CNN network layers were initialized from a model pre-trained on the DOTA satellite imaging dataset¹¹. Three separate models were trained on a Titan Xp GPU (NVIDIA, Santa Clara, CA) using axial, sagittal, and coronal localizer slices, respectively, with the corresponding inclined boxes from the 3D FSE CUBE acquisitions as targets, until the models began to overfit.
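The abstract does not state how the onset of overfitting was detected. One common criterion, shown here only as an assumed example, is to track the validation loss at regular evaluations and keep the checkpoint with the lowest loss once the loss has failed to improve for `patience` consecutive evaluations. The function name, the `patience` parameter, and the loss values are hypothetical.

```python
def best_checkpoint(val_losses, patience=3):
    """Return the index of the evaluation with the lowest validation loss.

    Scanning stops early once the loss has not improved for `patience`
    consecutive evaluations, i.e. once the model appears to be overfitting.
    """
    if not val_losses:
        raise ValueError("no validation losses given")
    best_idx, best_loss, since_best = 0, float("inf"), 0
    for i, loss in enumerate(val_losses):
        if loss < best_loss:
            best_idx, best_loss, since_best = i, loss, 0
        else:
            since_best += 1
            if since_best >= patience:
                break  # validation loss has stalled or is rising
    return best_idx


# Hypothetical validation losses recorded every N training iterations.
losses = [1.00, 0.80, 0.60, 0.65, 0.70, 0.72, 0.50]
print(best_checkpoint(losses))              # 2: stops after three non-improving evaluations
print(best_checkpoint(losses, patience=5))  # 6: a larger patience reaches the later minimum
```

The choice of `patience` trades training time against the risk of stopping at a temporary plateau.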

For validation, the intersection over union (IOU) and the differences in center position, box size, and tilt angle between the detected and ground truth boxes were quantified over the entire validation dataset.
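For inclined boxes, IOU cannot be computed from axis-aligned min/max coordinates, because the overlap of two rotated rectangles is a general convex polygon. The stdlib-only sketch below is a standard approach and not the authors' validation code. It converts each box `(cx, cy, w, h, theta_deg)` to its corners, clips one rectangle against the other with the Sutherland-Hodgman algorithm, and measures areas with the shoelace formula. It also reports per-box center, size, and angle differences. The box parameterization, the 180° angle period, and all function names are assumptions; the size comparison further assumes that predicted and ground truth boxes share the same width/height convention.

```python
import math


def box_corners(cx, cy, w, h, theta_deg):
    """Corners of an inclined box in counterclockwise order."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    local = ((-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2))
    return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in local]


def polygon_area(poly):
    """Absolute polygon area via the shoelace formula."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def clip_polygon(subject, clip, eps=1e-9):
    """Sutherland-Hodgman clipping of `subject` by the convex, counterclockwise `clip`."""

    def inside(p, a, b):
        # Left of (or on) the directed edge a->b, with a small tolerance.
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= -eps

    def intersection(p, q, a, b):
        # Point where segment p-q crosses the infinite line through a and b.
        den = (p[0] - q[0]) * (a[1] - b[1]) - (p[1] - q[1]) * (a[0] - b[0])
        t = ((p[0] - a[0]) * (a[1] - b[1]) - (p[1] - a[1]) * (a[0] - b[0])) / den
        return (p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1]))

    output = list(subject)
    for a, b in zip(clip, clip[1:] + clip[:1]):
        points, output = output, []
        if not points:
            break  # boxes do not overlap
        prev = points[-1]
        for cur in points:
            if inside(cur, a, b):
                if not inside(prev, a, b):
                    output.append(intersection(prev, cur, a, b))
                output.append(cur)
            elif inside(prev, a, b):
                output.append(intersection(prev, cur, a, b))
            prev = cur
    return output


def rotated_iou(box_a, box_b):
    """Intersection over union of two inclined boxes."""
    pa, pb = box_corners(*box_a), box_corners(*box_b)
    overlap = clip_polygon(pa, pb)
    inter = polygon_area(overlap) if len(overlap) >= 3 else 0.0
    union = polygon_area(pa) + polygon_area(pb) - inter
    return inter / union if union > 0 else 0.0


def angle_difference(theta_a, theta_b, period=180.0):
    """Smallest absolute angle difference, treating box angles modulo `period` degrees."""
    d = (theta_a - theta_b) % period
    return min(d, period - d)


def box_errors(pred, gt):
    """Per-box validation metrics for a predicted and a ground truth box."""
    return {
        "iou": rotated_iou(pred, gt),
        "center": math.hypot(pred[0] - gt[0], pred[1] - gt[1]),
        "width": abs(pred[2] - gt[2]),
        "height": abs(pred[3] - gt[3]),
        "angle": angle_difference(pred[4], gt[4]),
    }


print(box_errors((1, 0, 2, 2, 0), (0, 0, 2, 2, 0)))
```

Means and standard deviations over a validation set could then be taken per metric, for example with `statistics.mean` and `statistics.stdev`.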

Results

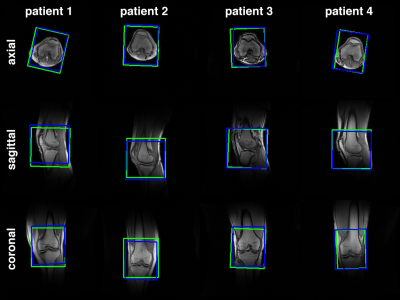

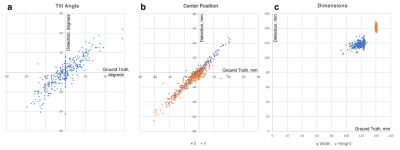

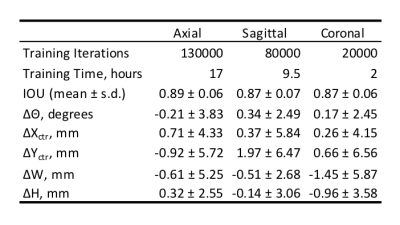

Table 1 shows the number of iterations and the training time for the axial, sagittal, and coronal models. The axial model trained longer before overfitting due to the higher variability of rotation angles in its training dataset. Inference time was 0.12 s per stack of slices. Table 1 also shows the means and standard deviations of the IOU and of the differences in rotation angle, center position, and FOV size between the generated and ground truth boxes. Figure 2 shows examples of generated and ground truth boxes overlaid on the localizer images, and Figure 3 plots the generated rotation angles, center coordinates, and box sizes against the ground truth values.

Discussion

Our results showed that the MS-R2CNN oriented object detection convolutional neural network achieved high accuracy in replicating the prescriptions of a skilled operator. Compared to previous approaches, our network required very short computation time (0.12 s per stack) and no additional images to generate a high-quality prescription. For training, our technique required no data beyond the localizer images and the prescription metadata already embedded in the image headers. This will make it straightforward to adapt the technique to other anatomical regions and acquisition types.

In conclusion, this study demonstrates the feasibility of using oriented object detection convolutional neural networks for automated prescription of oblique MRI acquisitions. This will reduce prescription errors and yield more consistent, easier-to-interpret imaging studies.

Acknowledgements

The authors thank Francesco Caliva and Claudia Iriondo for help with model implementation and acknowledge support from NIH P50AR060752, NIH R01AR046905, and GE Healthcare.

References

1. Ozhinsky E, Vigneron DB, Chang SM, Nelson SJ. Automated prescription of oblique brain 3D magnetic resonance spectroscopic imaging. Magnetic Resonance in Medicine. 2013;69(4):920-930. doi:10.1002/mrm.24339.

2. Yung KT, Zheng W, Zhao C, Ramón MM, van der Kouwe A, Posse S. Atlas‐based automated positioning of outer volume suppression slices in short‐echo time 3D MR spectroscopic imaging of the human brain. Magnetic Resonance in Medicine. 2011;66(4):911-922. doi:10.1002/mrm.22887.

3. Bian W, Li Y, Crane JC, Nelson SJ. Fully automated atlas‐based method for prescribing 3D PRESS MR spectroscopic imaging: Toward robust and reproducible metabolite measurements in human brain. Magnetic Resonance in Medicine. 2018;79(2):636-642. doi:10.1002/mrm.26718.

4. Goldenstein J, Schooler J, Crane JC, et al. Prospective image registration for automated scan prescription of follow-up knee images in quantitative studies. Magnetic Resonance Imaging. 2011;29(5):693-700. doi:10.1016/j.mri.2011.02.023.

5. Shanbhag DD, Bhushan C, de Alm Maximo A, et al. A generalized deep learning framework for multi-landmark intelligent slice placement using standard tri-planar 2D localizers. Proc Intl Soc Mag Reson Med. 2019.

6. Ren S, He K, Girshick R, Sun J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. Advances in Neural Information Processing Systems. 2015:91-99.

7. Ma J, Shao W, Ye H, et al. Arbitrary-Oriented Scene Text Detection via Rotation Proposals. IEEE Trans Multimedia. 2018;20(11):3111-3122. doi:10.1109/TMM.2018.2818020.

8. Jiang Y, Zhu X, Wang X, et al. R2CNN: Rotational Region CNN for Orientation Robust Scene Text Detection. arXiv preprint arXiv:1706.09579. June 2017.

9. Yang X, Sun H, Sun X, Yan M, Guo Z, Fu K. Position Detection and Direction Prediction for Arbitrary-Oriented Ships via Multitask Rotation Region Convolutional Neural Network. IEEE Access. 2018;6:50839-50849. doi:10.1109/ACCESS.2018.2869884.

10. Yang X, et al. R2CNN_Faster-RCNN_Tensorflow. GitHub repository. https://github.com/DetectionTeamUCAS/R2CNN_Faster-RCNN_Tensorflow

11. Xia G-S, Bai X, Ding J, et al. DOTA: A Large-Scale Dataset for Object Detection in Aerial Images. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2018:3974-3983.

Figures