3551

Fetal pose estimation from volumetric MRI using generative adversarial network

1Department of Electrical Engineering & Computer Science, MIT, Cambridge, MA, United States, 2Fetal-Neonatal Neuroimaging and Developmental Science Center, Boston Children’s Hospital, Boston, MA, United States, 3Computer Science and Artificial Intelligence Laboratory (CSAIL), MIT, Cambridge, MA, United States, 4Harvard Medical School, Boston, MA, United States, 5Institute for Medical Engineering and Science, MIT, Cambridge, MA, United States

Synopsis

Estimating fetal pose from 3D MRI has a wide range of applications, including fetal motion tracking and prospective motion correction. Fetal pose estimation is challenging because fetuses can take different orientations and body configurations in utero. In this work, we propose a method for fetal pose estimation from low-resolution 3D EPI MRI using a generative adversarial network. Results show that the proposed method produces a more robust estimate of fetal pose and achieves higher accuracy than a conventional convolutional neural network.

Introduction

Fetal magnetic resonance imaging (MRI) is increasingly used in clinical practice for diagnostic imaging in pregnancy. However, motion of the fetus during the MR scan is a major problem that severely degrades image quality. Estimating the pose of the fetus from volumetric time-series MRI has a wide range of applications, including motion tracking for evaluation of fetal movements per se, as well as “self-driving” imaging protocols with automatic slice prescription schemes for prospective mitigation of artifacts during MR imaging. In this abstract, we propose a deep learning method for fetal pose estimation from volumetric MR images using a generative adversarial network (GAN) and evaluate its performance.

Methods

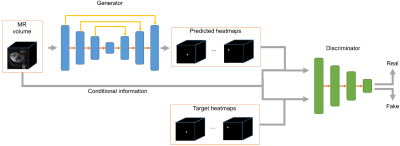

Multi-slice EPI data (matrix size = 120 × 120 × 80, resolution = 3 mm × 3 mm × 3 mm, TR = 3.5 s, scan duration between 10 and 30 minutes) of the pregnant abdomen were acquired for subjects at gestational ages between 25 and 35 weeks, resulting in a dataset of 77 series and 19,816 volumes. Fifteen keypoints (ankles, knees, hips, bladder, shoulders, elbows, wrists and eyes) were labeled as landmarks to define the pose of the fetus [1]. The dataset was divided into 49 series (12,332 frames) for training, 14 series (3,402 frames) for validation, and 14 series (4,082 frames) for testing.

The proposed deep learning method for fetal pose estimation is shown in Figure 1. It consists of two networks, a generator G and a discriminator D. The generator is based on a 3D U-Net architecture [2] and estimates heatmaps from input MR volumes. Each heatmap represents the spatial distribution of one keypoint, and the pose is inferred from the heatmaps with the argmax operation. The discriminator adopts an encoder structure and is responsible for distinguishing between fake heatmaps produced by the generator and real heatmaps derived from the labels.
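The argmax step that turns heatmaps into a pose can be sketched as follows. This is a minimal illustration, not the authors' implementation: the Gaussian form of the label heatmaps, the `sigma` value, the toy volume size and the function names are all assumptions made for the example.

```python
import numpy as np

def gaussian_heatmap(shape, center, sigma=2.0):
    """Build a 3D heatmap peaked at `center` (voxel coordinates).

    The Gaussian shape and sigma are assumptions for illustration only.
    """
    grids = np.meshgrid(*[np.arange(s) for s in shape], indexing="ij")
    sq_dist = sum((g - c) ** 2 for g, c in zip(grids, center))
    return np.exp(-sq_dist / (2.0 * sigma ** 2))

def keypoints_from_heatmaps(heatmaps):
    """Return the voxel index of the maximum of each heatmap channel.

    heatmaps: array of shape (K, X, Y, Z), one channel per keypoint.
    Returns an integer array of shape (K, 3).
    """
    k = heatmaps.shape[0]
    flat_idx = heatmaps.reshape(k, -1).argmax(axis=1)
    coords = np.unravel_index(flat_idx, heatmaps.shape[1:])
    return np.stack(coords, axis=1)

# Two toy keypoints in a small volume (not the 120 x 120 x 80 matrix of the data).
centers = [(10, 20, 5), (3, 7, 12)]
heatmaps = np.stack([gaussian_heatmap((32, 32, 16), c) for c in centers])
pose = keypoints_from_heatmaps(heatmaps)
```

In this sketch `pose` holds one row of voxel coordinates per keypoint; multiplying by the voxel size would give positions in millimetres.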

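The loss function of the generator consists of two terms: the mean squared error between the estimated heatmaps and the ground truth, and an adversarial loss that rewards fooling the discriminator. Similar to least squares GAN [3], we use an L2 loss for the adversarial term. Here $I$ denotes the input MR volume, $H$ the ground-truth heatmaps, and $\lambda$ the weight of the adversarial term.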

$$L_G=||G(I)-H||_2^2+\lambda ||D([G(I), I]) - 1||_2^2$$
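As a rough numerical sketch of the generator loss, the two terms can be written with NumPy as below. The function name, the default `lam` value and the toy inputs are hypothetical; the abstract does not report the value of the adversarial weight, and the discriminator score is simply passed in as an array here.

```python
import numpy as np

def generator_loss(pred_heatmaps, true_heatmaps, d_score_fake, lam=0.1):
    """L_G = ||G(I) - H||^2 + lam * ||D([G(I), I]) - 1||^2.

    pred_heatmaps: generator output G(I).
    true_heatmaps: ground-truth heatmaps H.
    d_score_fake:  discriminator output for the generated heatmaps.
    lam:           hypothetical weight; its value is not given in the source.
    """
    recon = np.sum((pred_heatmaps - true_heatmaps) ** 2)  # heatmap regression term
    adv = np.sum((d_score_fake - 1.0) ** 2)               # push D's score toward "real"
    return recon + lam * adv

# Toy inputs: 8 heatmap voxels, each off by 0.5, and a discriminator score of 0.
H = np.ones((1, 2, 2, 2))
G_out = 0.5 * np.ones((1, 2, 2, 2))
loss_g = generator_loss(G_out, H, d_score_fake=np.array([0.0]), lam=0.1)
```

With these toy inputs the regression term is 8 × 0.25 = 2.0 and the adversarial term is 1.0, so the loss is 2.0 + 0.1 × 1.0 = 2.1.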

For the discriminator, the loss is the classification error on real and fake inputs.

$$L_D=||D([H,I])-1||_2^2+||D([G(I), I])-0||_2^2$$
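The discriminator loss can be sketched in the same way. Reading $[\cdot,\cdot]$ as channel-wise concatenation of heatmaps and image is an assumption, as are the helper names and the toy scores; the discriminator network itself is not modeled here.

```python
import numpy as np

def discriminator_input(heatmaps, image):
    """Stack heatmaps (K, X, Y, Z) and image (1, X, Y, Z) along the channel axis.

    Treating [., .] as channel concatenation is an assumption of this sketch.
    """
    return np.concatenate([heatmaps, image], axis=0)

def discriminator_loss(d_score_real, d_score_fake):
    """L_D = ||D([H, I]) - 1||^2 + ||D([G(I), I]) - 0||^2."""
    real_term = np.sum((d_score_real - 1.0) ** 2)  # real pairs should score 1
    fake_term = np.sum((d_score_fake - 0.0) ** 2)  # generated pairs should score 0
    return real_term + fake_term

# 15 keypoint channels plus 1 image channel give a 16-channel input.
d_in = discriminator_input(np.zeros((15, 4, 4, 4)), np.zeros((1, 4, 4, 4)))
loss_d = discriminator_loss(np.array([0.9]), np.array([0.2]))
```

For the toy scores above the loss is (0.9 − 1)² + (0.2 − 0)² = 0.05.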

By classifying real and fake heatmaps, the discriminator learns the features of plausible fetal poses from the dataset. The generator, in competing with the discriminator, learns to generate realistic fetal poses.

Results

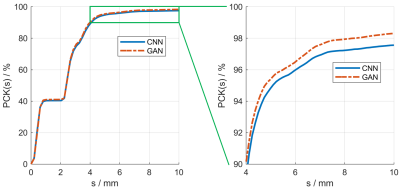

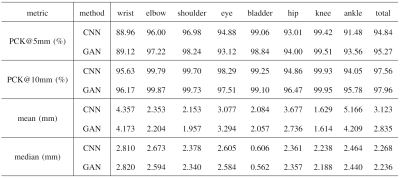

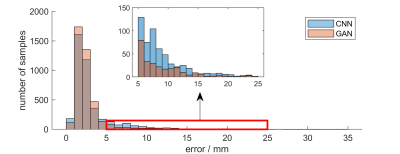

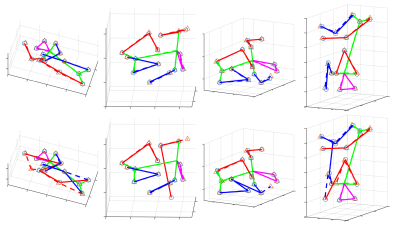

We compared the proposed GAN method with a standard CNN method, i.e., one using only the generator network. Several metrics are used for evaluation, including the percentage of correct keypoints (PCK) [4] and the mean and median error, as shown in Figures 2 and 3. Results show that the proposed GAN method achieves lower error and higher accuracy in fetal pose estimation. To further investigate how the GAN improves network performance, we plot the error distributions of the models with and without GAN in Figure 4. Figure 5 shows examples of pose estimation results from the two models.

Discussion

The zoom-in view in Figure 4 shows that the model with GAN has a lighter-tailed error distribution. This indicates that the performance gain from the GAN is mainly due to a reduction in outlier cases with large error, which are usually caused by misconfiguration of keypoints. The quantitative metrics in Figure 3 are consistent with this: the proposed method reduced the mean error but not the median error.

Examples of estimated fetal pose are displayed in Figure 5. The poses produced by the model without GAN (bottom row) may have wrong configurations and look unrealistic. By competing with the discriminator, however, the generator is able to produce poses that are close to the subspace of plausible fetal poses (top row). Another way to interpret the GAN is to treat the adversarial loss parameterized by the discriminator as a learnable loss function. By distinguishing between ground-truth heatmaps and generated ones, the discriminator learns the features of the target heatmaps, and the corresponding adversarial loss implicitly models the constraints on plausible fetal pose.
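The evaluation metrics, and the reason a lighter error tail lowers the mean but not the median, can be illustrated with a small sketch. The numbers below are made up for illustration and are not results from this work; the fixed 10 mm PCK threshold and the function names are likewise assumptions, since the abstract does not state the threshold used.

```python
import numpy as np

def keypoint_errors(pred, truth, voxel_mm=3.0):
    """Euclidean error per keypoint in mm; pred and truth are (N, K, 3) voxel coords."""
    return np.linalg.norm((pred - truth) * voxel_mm, axis=-1)

def pck(errors, threshold_mm):
    """Fraction of keypoints whose error is below the threshold."""
    return float(np.mean(errors < threshold_mm))

# Synthetic example: five frames, one keypoint, ground truth at the origin.
truth = np.zeros((5, 1, 3))
pred_with_outlier = truth.copy()
pred_with_outlier[:, 0, 0] = [1, 1, 1, 1, 20]   # one gross failure (20 voxels off)
pred_without_outlier = truth.copy()
pred_without_outlier[:, 0, 0] = [1, 1, 1, 1, 2]  # same frames, failure corrected

err_a = keypoint_errors(pred_with_outlier, truth)     # 3, 3, 3, 3, 60 mm
err_b = keypoint_errors(pred_without_outlier, truth)  # 3, 3, 3, 3, 6 mm

summary = {
    "mean": (err_a.mean(), err_b.mean()),
    "median": (np.median(err_a), np.median(err_b)),
    "pck@10mm": (pck(err_a, 10.0), pck(err_b, 10.0)),
}
```

In this toy case, removing the single outlier lowers the mean error from 14.4 mm to 3.6 mm and raises PCK at 10 mm from 0.8 to 1.0, while the median stays at 3 mm.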

Conclusion

In this work, we propose a generative adversarial network for fetal pose estimation from 3D MRI. The discriminator implicitly models the constraints on plausible fetal pose configurations. By competing with the discriminator, the generator therefore produces realistic fetal poses more robustly. Evaluation shows that the proposed method improves accuracy in fetal pose estimation, with particular benefit for the outlier cases in which a conventional CNN produces large errors.

Acknowledgements

This research was supported by NIH U01HD087211, NIH R01EB01733 and NIH NIBIB NAC P41EB015902. Nvidia.

References

1. Xu, J., Zhang, M., Turk, E. A., Zhang, L., Grant, P. E., Ying, K., Golland, P., & Adalsteinsson, E. (2019, October). Fetal Pose Estimation in Volumetric MRI Using a 3D Convolution Neural Network. In International Conference on Medical Image Computing and Computer-Assisted Intervention (pp. 403-410). Springer, Cham.

2. Çiçek, Ö., Abdulkadir, A., Lienkamp, S. S., Brox, T., & Ronneberger, O. (2016, October). 3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation. In International Conference on Medical Image Computing and Computer-Assisted Intervention (pp. 424-432). Springer, Cham.

3. Mao, X., Li, Q., Xie, H., Lau, R. Y., Wang, Z., & Paul Smolley, S. (2017). Least squares generative adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision (pp. 2794-2802).

4. Andriluka, M., Pishchulin, L., Gehler, P., & Schiele, B. (2014). 2D human pose estimation: New benchmark and state of the art analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 3686-3693).

Figures