3535

Informed deep convolutional neural networks

1Applications and Workflow, GE Healthcare, Calgary, AB, Canada, 2Applications and Workflow, GE Healthcare, New York, NY, United States

Synopsis

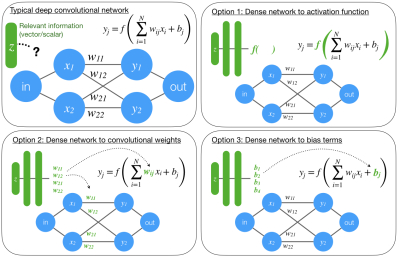

Convolutional neural networks (CNNs) are an emerging tool in medical imaging. Conventional CNNs accept an image as input and return a task-specific output (e.g., a filtered image or a disease probability). They can struggle to generalize, and they perform poorly when image data alone is insufficient to solve the problem. We propose three ways to incorporate relevant scan information into a CNN. The value of this approach is demonstrated on rSOS image denoising, a previously unstable problem.

Introduction

Deep convolutional neural networks (CNNs) have become instrumental in reconstructing [1], processing, and analyzing MR images. These networks involve a cascade of convolutional layers. Each layer contains a set of feature maps, typically created by convolving the previous layer with a set of learnt weights, adding biases, then applying a non-linear activation. The weights, the bias terms, and possibly even the activation functions are trained to solve specific problems. CNNs typically receive images as inputs and return a task-specific output.

Conventional CNNs can perform extremely well under ideal circumstances. However, CNNs may fail to generalize to different input data. For example, segmentation or classification performance may deteriorate on images with different resolutions or contrast weightings, and reconstruction networks may not generalize to new sub-sampling patterns. Additionally, some tasks are simply ill-posed based on imaging data alone: parameter mapping without sequence timings or flip angles, or denoising a root sum-of-squares (rSOS) image without knowing the number of coils.

In medical imaging, there is typically relevant information available to assist in the image processing task being performed. In MRI, this may include TR, TE, flip angle(s), scan plane, anatomy, noise correlation matrix, resolution, etc. Standard CNNs accept only image input, and it is not obvious how to leverage non-image-based information. In this work, we present simple yet effective options for incorporating arbitrary information into a CNN.

Methods

We propose three mutually compatible options for incorporating scan data into CNNs (Figure 1). All options involve flattening the relevant information into a vector, then using one or more dense networks to transform this data into parameters that a CNN can use. In the first option, parametric activation functions, like a parametric ReLU or those in [1], are modulated by the output of the dense network. In the second option, the outputs of the dense network are reshaped into the kernel weights used during convolution. The third option involves using output from the dense network as the bias terms. In all cases, the activations, weights, and/or biases are learned to solve the task, but the network adapts based on the additional information that is provided.

We demonstrate the value of informed networks in a simple test case: denoising images created with an rSOS coil combination. The Rician noise distribution [2] makes it difficult to distinguish low-amplitude signals from the baseline skew without knowledge of the number of coils. Two denoising CNNs, both based on a 24-layer RED architecture [3], were trained using pristine data with channel counts ranging from 1 to 48. Noise was added to each channel of the pristine data, and the channels were then combined via rSOS. The first CNN was blind to both the noise power and the number of channels; the second was blind to the noise power, but the number of channels was used to learn the bias terms of every second layer (Figure 1, option #3).
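As a rough illustration of option #3, the following NumPy sketch shows how a small dense network could turn a scan-information vector (here just a normalized channel count) into the bias terms of one convolutional layer. It is not the implementation used in this work: the function names (`dense_bias`, `conv2d_same`, `informed_conv_layer`), the single hidden layer, the layer sizes, and the normalization by 48 are all assumptions made for the example, and the weights are random rather than trained.

```python
import numpy as np

def dense_bias(scan_info, w1, b1, w2, b2):
    """Hypothetical two-layer dense network: scan-info vector -> one bias per feature map."""
    hidden = np.maximum(0.0, scan_info @ w1 + b1)  # ReLU hidden layer
    return hidden @ w2 + b2

def conv2d_same(image, kernels):
    """Naive zero-padded 2D cross-correlation.

    image: (H, W, Cin); kernels: (k, k, Cin, Cout) with odd k; returns (H, W, Cout).
    """
    k = kernels.shape[0]
    pad = k // 2
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)))
    h, w, _ = image.shape
    out = np.zeros((h, w, kernels.shape[-1]))
    for i in range(k):
        for j in range(k):
            patch = padded[i:i + h, j:j + w, :]   # shifted view, (H, W, Cin)
            out += patch @ kernels[i, j]          # contract over Cin -> (H, W, Cout)
    return out

def informed_conv_layer(image, kernels, scan_info, dense_params):
    """Convolution whose biases are produced from scan_info instead of being fixed."""
    bias = dense_bias(scan_info, *dense_params)   # shape (Cout,)
    return np.maximum(0.0, conv2d_same(image, kernels) + bias)

# Usage with random (untrained) parameters, for shape checking only.
rng = np.random.default_rng(0)
image = rng.normal(size=(8, 8, 1))
kernels = 0.1 * rng.normal(size=(3, 3, 1, 4))
dense_params = (rng.normal(size=(1, 16)), np.zeros(16),
                rng.normal(size=(16, 4)), np.zeros(4))
n_coils = 8
scan_info = np.array([n_coils / 48.0])            # assumed normalization
features = informed_conv_layer(image, kernels, scan_info, dense_params)
print(features.shape)
```

In a trained network, the dense parameters would be optimized jointly with the kernels, so the same layer applies a different offset for each channel count. Options #1 and #2 could follow the same pattern, with the dense output feeding the activation parameters or being reshaped into `kernels`.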

Results

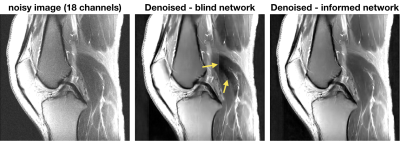

The validation loss (mean squared error) of the informed CNN was 42% lower than that of the standard CNN. The informed CNN had 2% more trainable parameters than the standard CNN, due to the small dense network used to learn the biases.

The standard CNN is unable to consistently distinguish noise from low-amplitude signals, resulting in regions of failed denoising (Figure 2). The yellow arrows indicate regions where low signal levels were misattributed to baseline noise and erroneously attenuated. The informed network performed consistent and uniform denoising.

Discussion

Standard image processing routinely makes use of known acquisition parameters, so it is not surprising that this information improves the performance and generalizability of CNNs. In our test case, a network trained with a fixed number of channels performs well for that number of channels but fails to generalize to other channel counts. A network trained with a variable number of channels performs poorly everywhere. Providing the number of channels via a small dense network provides adaptability and improves performance.

We have presented three simple yet effective options for incorporating arbitrary information into a CNN. These options are mutually compatible, but the appropriate combination is application dependent. Our denoising example used only informed bias terms since this problem consists mostly of ambiguous noise offsets. Kernel weights that are informed by anatomy or scan plane may be beneficial for segmentation.
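The ambiguous noise offsets mentioned above can be illustrated numerically. The sketch below assumes uncorrelated complex Gaussian noise with equal standard deviation `sigma` in every channel (an assumption for this example; the noise model used for training is not specified beyond per-channel noise followed by rSOS). Under that assumption, the rSOS value in a signal-free region follows a scaled chi distribution with `2 * n_coils` degrees of freedom, so the baseline grows with the channel count. The function names are hypothetical.

```python
import math
import numpy as np

def simulated_rsos_floor(n_coils, sigma=1.0, n_samples=20000, seed=0):
    """Mean rSOS value of pure noise, estimated by Monte Carlo simulation."""
    rng = np.random.default_rng(seed)
    # Last axis holds the real and imaginary noise components of each channel.
    noise = rng.normal(scale=sigma, size=(n_samples, n_coils, 2))
    rsos = np.sqrt((noise ** 2).sum(axis=(1, 2)))
    return rsos.mean()

def analytic_rsos_floor(n_coils, sigma=1.0):
    """Mean of a chi distribution with 2 * n_coils degrees of freedom, scaled by sigma."""
    log_ratio = math.lgamma(n_coils + 0.5) - math.lgamma(n_coils)
    return sigma * math.sqrt(2.0) * math.exp(log_ratio)

for n in (1, 8, 48):
    print(n, simulated_rsos_floor(n), analytic_rsos_floor(n))
```

Because the baseline offset depends on both the unknown noise power and the channel count, a network that sees only the image cannot tell a low-amplitude signal in a few-channel image from the noise floor of a many-channel image. Supplying the channel count removes part of that ambiguity, which is consistent with the choice of informed bias terms for this problem.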

Conclusion

Informed networks improve both accuracy and generalizability of CNNs by providing relevant information to the network. We have demonstrated how scalar and vector information can be easily included in a network. This was demonstrated with a controlled example, where basic information is needed for viable performance.

Acknowledgements

No acknowledgement found.

References

[1] Hammernik K, Klatzer T, Kobler E, Recht MP, Sodickson DK, Pock T, Knoll F. Learning a variational network for reconstruction of accelerated MRI data. Magn Reson Med. 2018 Jun;79(6):3055-3071.

[2] Gudbjartsson H, Patz S. The Rician distribution of noisy MRI data. Magn Reson Med. 1995 Dec;34(6):910-4. Erratum in: Magn Reson Med 1996 Aug;36(2):332.

[3] Mao XJ, Shen C, Yang YB. Image Restoration Using Convolutional Auto-encoders with Symmetric Skip Connections. arXiv. 2016. Eprint: 1606.08921.

Figures