3528

A Deep-Learning Based 3D Liver Motion Prediction for MR-guided-Radiotherapy

1Medical Physics & Research Department, Hong Kong Sanatorium & Hospital, Hong Kong, China

Synopsis

Respiratory-induced organ motion reduces the accuracy of radiation delivery in radiotherapy of the thorax and abdomen. MR-guided radiotherapy (MRgRT) is capable of continuous MRI acquisition during treatment. However, the latency due to MRI acquisition and reconstruction, the detection of tumor position change, and the interaction with the multileaf collimator (MLC) have been identified as the major challenges for real-time MRgRT. In this study, we propose a deep-learning based 3D motion prediction technique to predict liver motion from volumetric dynamic MR images. Our algorithm showed promising results (< 0.3 cm prediction error on average), suggesting its potential for real-time motion tracking in future MRgRT.

Purpose:

Respiratory-induced organ motion reduces the accuracy of radiation delivery in radiotherapy of the thorax and abdomen. MR-guided radiotherapy (MRgRT), such as MR-Linac, is capable of continuous MRI acquisition during treatment. However, the latency due to MRI acquisition and reconstruction, the detection of tumor position change, and the interaction with the multileaf collimator (MLC) have been identified as the major challenges for real-time MRgRT. Motion prediction is therefore essential to compensate for this latency in real-time motion tracking. Recently, model-less deep-learning techniques have been shown to outperform the traditional respiratory motion models [1-7] in motion prediction [8-15]. In this study, we propose a deep-learning based 3D motion prediction technique to predict liver motion from volumetric dynamic MR images. The proposed method employs a nonlinear autoregressive neural network with exogenous input (NarxNet) [16] that selectively memorizes the subject's historical motion profile and combines it with the latest acquired organ position and the subject's respiratory profile to predict a future position.

Material and Methods:

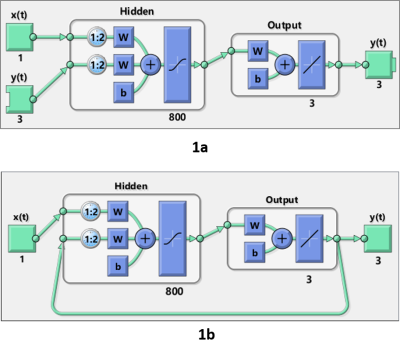

Eight healthy volunteers (34.33±5.77 years) each underwent five free-breathing 4D-MRI scans on a 1.5T MR simulator (Aera, Siemens Healthineers, Erlangen, Germany). A CAIPIRINHA-VIBE 3D spoiled-gradient-echo sequence was applied (transversal, FOV=350×262.5 mm, thickness=4 mm, matrix size=128×128×56, TE/TR=0.6/1.7 ms, flip angle=6°, RBW=1250 Hz/voxel, CAIPIRINHA factor=4, partial Fourier factor=6/8, volumetric temporal resolution=1 frame per second, 144 time frames). During each acquisition, the respiratory curve was logged and its time stamps were matched to the acquired images. The liver was manually delineated on the second frame, which served as the reference. The subsequent frames were rigidly registered to this reference to calculate the displacements, which served as the ground-truth motion profiles.

A nonlinear autoregressive neural network with exogenous input (NarxNet) was implemented in MATLAB 2019b. NarxNet selectively memorizes previous positions $$$y(t - 2),...,y(t - {n_y})$$$ and, in combination with the latest acquired position $$$y(t - 1)$$$ and the subject's recorded respiratory profile $$$x(t),x(t - 1),...,x(t - {n_x})$$$, produces a future position $$$y(t)$$$. Mathematically, this can be expressed as \[y(t) = f(y(t - 1),y(t - 2),...,y(t - {n_y}),x(t),x(t - 1),...,x(t - {n_x})).\] In the training phase, the actual output of the nonlinear dynamic system (the measured liver position) was available, so a series-parallel design was employed, in which the predicted position in the feedback path was replaced by the actual position (Figure 1a). After the training phase, the series-parallel configuration was transformed into a parallel architecture, in which predictions are fed back as inputs, to obtain multistep-ahead prediction (Figure 1b). The network contained 800 hidden-layer neurons followed by a fully connected layer. The Levenberg-Marquardt (LM) algorithm was used for network training, with 10000 epochs and a dropout of 0.125.
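As a rough illustration of the two configurations described above, the sketch below contrasts series-parallel (open-loop) and parallel (closed-loop) prediction for the NARX form y(t) = f(y(t-1),...,y(t-n_y), x(t),...,x(t-n_x)). It is written in Python rather than the MATLAB used in the study. The function names, the linear stand-in `toy_f`, the lag orders and the sample values are all hypothetical placeholders; the trained 800-neuron network is not reproduced here.

```python
from typing import Callable, List, Sequence, Tuple

# f maps ([y(t-1)..y(t-n_y)], [x(t)..x(t-n_x)]) to a predicted y(t).
Predictor = Callable[[Sequence[float], Sequence[float]], float]


def regressors(y: Sequence[float], x: Sequence[float], t: int,
               n_y: int, n_x: int) -> Tuple[List[float], List[float]]:
    """Collect the lagged positions and respiratory samples for time index t."""
    past_y = [y[t - k] for k in range(1, n_y + 1)]
    exo_x = [x[t - k] for k in range(0, n_x + 1)]
    return past_y, exo_x


def predict_series_parallel(f: Predictor, y_measured: Sequence[float],
                            x: Sequence[float], n_y: int, n_x: int) -> List[float]:
    """Open loop: each one-step prediction uses measured past positions."""
    start = max(n_y, n_x)
    return [f(*regressors(y_measured, x, t, n_y, n_x))
            for t in range(start, len(x))]


def predict_parallel(f: Predictor, y_seed: Sequence[float],
                     x: Sequence[float], n_y: int, n_x: int) -> List[float]:
    """Closed loop: predictions are fed back in place of measurements."""
    start = max(n_y, n_x)
    y = list(y_seed[:start])  # only the seed values are measured
    for t in range(start, len(x)):
        y.append(f(*regressors(y, x, t, n_y, n_x)))
    return y[start:]


def toy_f(past_y: Sequence[float], exo_x: Sequence[float]) -> float:
    """Hypothetical linear stand-in for the trained network."""
    return 0.5 * past_y[0] + 0.2 * past_y[1] + 0.3 * exo_x[0]


# Made-up respiratory signal and positions, for illustration only.
x = [0.0, 1.0, 0.5, -0.5, -1.0, 0.0, 1.0]
y_measured = [0.1, 0.2, 0.7, 0.4, 0.1, -0.3, 0.2]

one_step = predict_series_parallel(toy_f, y_measured, x, n_y=2, n_x=1)
multi_step = predict_parallel(toy_f, y_measured[:2], x, n_y=2, n_x=1)
```

The two outputs agree on the first predicted sample, where both rely on measured history only, and diverge afterwards because the closed-loop version accumulates its own prediction errors. This is the reason multistep-ahead prediction is generally harder than one-step prediction.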
The ground-truth motion profiles from scan sessions 1-4, together with the corresponding respiratory profiles, were used for training, and scan session 5 was used for validation of the motion prediction. A common network structure was used for all data, but the network was trained individually for each subject. A five-fold cross-validation was conducted so that every session was used once for testing, with the remaining four sessions used for training. Prediction accuracy was evaluated by the root-mean-squared error (RMSE) between the acquired and predicted liver positions. One-way ANOVA and paired t-tests were used to assess the difference between the predicted and acquired motion profiles, with a significance level of 0.05.

Results
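The evaluation protocol described in Material and Methods, in which each of the five sessions is held out once and scored by RMSE, can be sketched in Python as follows. This is a minimal illustration, not the study's MATLAB code. The helper names and the sample displacement values are hypothetical, and the ANOVA and t-test steps are not covered.

```python
import math
from typing import List, Sequence, Tuple


def leave_one_session_out(session_ids: Sequence[int]) -> List[Tuple[List[int], int]]:
    """Return (training sessions, held-out test session) for every session."""
    return [([s for s in session_ids if s != test], test)
            for test in session_ids]


def rmse(predicted: Sequence[float], acquired: Sequence[float]) -> float:
    """Root-mean-squared error between two equally long position traces."""
    if len(predicted) != len(acquired) or not predicted:
        raise ValueError("traces must be non-empty and of equal length")
    squared = [(p - a) ** 2 for p, a in zip(predicted, acquired)]
    return math.sqrt(sum(squared) / len(squared))


# Five scan sessions per subject give five train/test splits.
folds = leave_one_session_out([1, 2, 3, 4, 5])

# Hypothetical displacements along one axis in cm, for illustration only.
acquired_si = [0.0, 0.5, 1.0, 0.5]
predicted_si = [0.1, 0.4, 1.2, 0.5]
error_si = rmse(predicted_si, acquired_si)
```

In practice the same `rmse` call would be repeated per axis and per fold, and the per-fold values then summarized.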

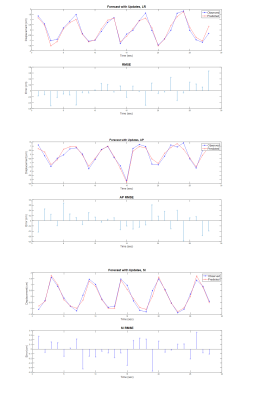

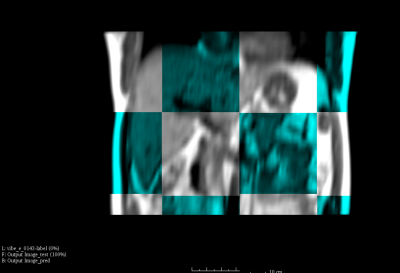

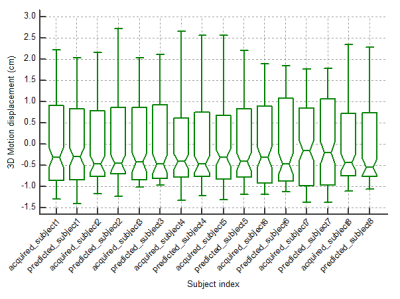

Figure 2 shows one representative predicted liver motion profile compared with the ground truth. Overall, the predicted liver motion achieved a mean RMSE of 0.27, 0.35 and 0.21 cm (range -1.53 to 1.75, -3.33 to 1.29 and -1.27 to 1.75 cm; SD 0.16, 0.28 and 0.18 cm) in the left-right (LR), anterior-posterior (AP) and superior-inferior (SI) directions, respectively, indicating good prediction accuracy. One representative slice of the predicted image fused with the corresponding acquired image is shown in Figure 3. Figure 4 shows box plots of the 3D motion vectors (sum of squares of the displacements along LR, AP and SI). No significant difference was observed between the acquired and predicted motion profiles in any subject (p>0.05).

Discussion and Conclusion

In this study, we developed a deep-learning based 3D liver motion prediction technique and evaluated its performance on 8 healthy volunteers. In conjunction with a fast time-resolved volumetric MRI acquisition [17], our algorithm showed promising results (< 0.3 cm prediction error on average) for motion prediction, suggesting its potential for treatment margin reduction and real-time motion tracking in future MRgRT. The main limitation of this study is that only a small number of healthy volunteers were recruited. Motion profiles were extracted using rigid registration, which neglects the deformation of the liver during motion. The motion profiles of real patients might differ substantially from those of healthy volunteers. Respiratory irregularity and its influence on organ motion prediction should be investigated further.

Acknowledgements

This study was approved by the Institutional Research Ethics Committee (REC-2019-09).

References

[1]. Adler JR, Chang S, Murphy M. The Cyberknife: a frameless robotic system for radiosurgery. Stereo Funct Neurosurg. 1997;69:124–128.

[2]. Sharp GC, Jiang SB, Shimizu S, Shirato H. Prediction of respiratory tumor motion for real-time image-guided radiotherapy. Phys Med Biol. 2004;49:425–440.

[3]. Ren Q, Nishioka S, Shirato H, Berbeco RI. Adaptive prediction of respiratory motion for motion compensation radiotherapy. Phys Med Biol. 2007;52:6651–6661.

[4]. Riaz N, Shanker P, Wiersma R, et al. Predicting respiratory tumor motion with multi-dimensional adaptive filters and support vector regression. Phys Med Biol. 2009;54:5735–5748.

[5]. Vedam SS, Keall P, Docef A, et al. Predicting respiratory motion for four-dimensional radiotherapy. Med Phys. 2004;31:2274–2283.

[6]. Murphy MJ, Jalden J, Isaksson M. Adaptive filtering to predict lung tumor breathing motion during image-guided radiation therapy. In: Proc. 16th Int. Congress on Computer-assisted Radiology Surgery (CARS 2002); 2002:539–544.

[7]. Putra D, Haas OCL, Mills JA, Burnham KJ. Prediction of tumor motion using interacting multiple model filter. In: Proc. 3rd IET Int’l Conf. Medical Signal and Information Processing (MEDSIP), 2006, CP520; 2006:1–4.

[8]. Isaksson M, Jalden J, Murphy MJ. On using an adaptive neural network to predict lung tumor motion during respiration for radiotherapy applications. Med Phys. 2005;32:3801–3812.

[9]. Murphy MJ. Using neural networks to predict breathing motion. In: Proc. 7th Int’l Conf. Machine Learning and Applications. IEEE Press; 2008:528–532.

[10]. Murphy MJ, Pokhrel D. Optimization of an adaptive neural network to predict breathing. Med Phys. 2009;36:40–47.

[11]. Goodband JH, Haas OC, Mills JA. A comparison of neural network approaches for online prediction in IGRT. Med Phys. 2008;35:1113–1122.

[12]. Rottmann J, Berbeco R. Using an external surrogate for predictor model training in real-time motion management of lung tumors. Med Phys. 2014;41:121706.

[13]. Murphy MJ, Dieterich S. Comparative performance of linear and nonlinear neural networks to predict irregular breathing. Phys Med Biol. 2006;51:5903–5910.

[14]. Krauss A, Nill S, Oelfke U. The comparative performance of four respiratory motion predictors for real-time tumor tracking. Phys Med Biol. 2011;56:5303–5317.

[15]. Yun J, Rathee S, Fallone BG. A deep-learning based 3D tumor motion prediction algorithm for non-invasive intra-fractional tumor-tracked radiotherapy (nifteRT) on Linac-MR. Int J Radiat Oncol Biol Phys. 2019;105(1):S28.

[16]. Boussaada Z, Curea O, Remaci A, Camblong H, Mrabet Bellaaj N. A nonlinear autoregressive exogenous (NARX) neural network model for the prediction of the daily direct solar radiation. Energies. 2018;11(3):620.

[17]. Yuan J, Wong OL, Zhou Y, Chueng KY, Yu SK. A fast volumetric 4D-MRI with sub-second frame rate for abdominal motion monitoring and characterization in MRI-guided radiotherapy. Quant Imaging Med Surg. 2019;9(7):1303–1314.

Figures