3526

Differentiation of Breast Cancer Molecular Subtypes on DCE-MRI by Using Convolutional Neural Network with Transfer Learning

1Department of Radiological Science, University of California, Irvine, CA, United States, 2Department of Radiology, First Affiliated Hospital of Wenzhou Medical University, Wenzhou, China, 3Department of Medical Imaging, Taichung Tzu-Chi Hospital, Taichung, Taiwan, 4Department of Radiology, E-Da Hospital and I-Shou University, Kaohsiung, Taiwan

Synopsis

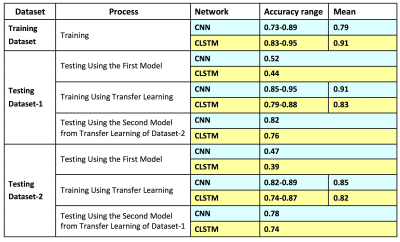

A total of 244 patients were analyzed: 99 in Training, 83 in Testing-1, and 62 in Testing-2. Patients were classified into three molecular subtypes: TN, HER2+, and HR+/HER2-. Deep learning was implemented using a conventional CNN and a convolutional long short-term memory (CLSTM) network. In the Training dataset, the mean accuracy evaluated using 10-fold cross-validation was higher using CLSTM (0.91) than CNN (0.79). When the developed models were applied directly to the testing datasets, the accuracy was very low (0.4-0.5). When transfer learning was applied to re-tune the model using one testing dataset, accuracy in the other testing dataset improved greatly, from 0.4-0.5 to 0.8-0.9.

Introduction

Determination of the molecular subtype in newly diagnosed breast cancer is very important for choosing the most appropriate treatment strategy. While the relevant markers can be evaluated from tissue obtained by biopsy or surgery, this evaluation is subject to tissue sampling bias. For patients electing to receive neoadjuvant chemotherapy, the tumor can shrink substantially or even regress completely to achieve pathological complete response, making it very difficult to perform thorough molecular subtyping for choosing additional therapies after surgery. For patients with hormonal receptor positive cancer, long-term hormonal therapy is needed, which is known to be very effective in reducing the risk of recurrence and metastasis. Breast MR images contain rich information that may be used to differentiate molecular subtypes, and images acquired at the time of diagnosis, before any treatment, allow a thorough assessment of the entire tumor. The goal of this study is to apply deep learning using two different convolutional neural networks to differentiate three molecular subtypes of breast cancer: triple negative (TN), HER2 positive (HER2+), and hormonal receptor positive and HER2 negative (HR+/HER2-). One training dataset and two testing datasets were used. In addition to direct testing, we also applied transfer learning to investigate how it can be used to improve the accuracy.

Methods

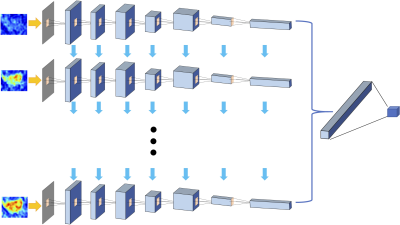

The Training Dataset was obtained from one hospital, with imaging performed on a Siemens 1.5T system, and included a total of 99 patients (65 HR+/HER2-, 24 HER2+, 10 TN). Independent testing was done using cases collected from a different hospital, with imaging performed on a GE 3T system. Testing Dataset-1 was collected from Jan 2017 to May 2018, with a total of 83 patients (54 HR+/HER2-, 19 HER2+, 10 TN), and Testing Dataset-2 included newer cases collected from June to Dec 2018, with a total of 62 patients (37 HR+/HER2-, 15 HER2+, 10 TN). Tumors were segmented on T1w contrast-enhanced maps using the fuzzy-C-means (FCM) clustering algorithm [1]. In a recent study we reported that including a small amount of peri-tumor tissue in the analysis can achieve a higher diagnostic accuracy than using the tumor ROI alone [2], so in this study we adopted the same method. The ROIs obtained on all slices of one lesion were projected together, and the smallest bounding box covering them was used as the input for deep learning [3]. Only the DCE images were analyzed, which included one set of pre-contrast and four sets of post-contrast images. Since a time series of DCE-MRI was acquired, a recurrent neural network could be implemented to consider the change of signal intensity over time [4]. Figure 1 shows the conventional convolutional neural network [5-8], which uses all 5 sets of images as inputs. Figure 2 shows the convolutional long short-term memory (CLSTM) network [9], in which the DCE images were fed into the network one by one. To avoid overfitting, the dataset was augmented by random affine transformation. In the training dataset, the evaluation was done using 10-fold cross-validation. The developed model was directly applied to the testing datasets to evaluate the accuracy. Then, the first model developed from training was used as the basis for transfer learning in Testing Dataset-1 to re-tune the model.
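
The bounding-box step described above (projecting the ROIs from all slices of a lesion together and taking the smallest box that covers them) can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function names, the nested-list mask format, and the `margin` padding parameter are assumptions made only for illustration, and the abstract does not state how much peri-tumor tissue was included.

```python
from typing import List, Sequence, Tuple

Mask = Sequence[Sequence[int]]  # one 2D binary ROI mask (rows x cols) per slice
Box = Tuple[int, int, int, int]  # (row_min, row_max, col_min, col_max), inclusive


def projected_bounding_box(masks: Sequence[Mask], margin: int = 0) -> Box:
    """Smallest box covering the union (projection) of all per-slice ROI masks.

    `margin` is a hypothetical padding in pixels, clipped to the image size.
    """
    n_rows, n_cols = len(masks[0]), len(masks[0][0])
    # Rows and columns that contain at least one ROI pixel on any slice.
    rows = [r for m in masks for r in range(n_rows) if any(m[r])]
    cols = [c for m in masks for c in range(n_cols)
            if any(m[r][c] for r in range(n_rows))]
    if not rows:
        raise ValueError("ROI is empty on every slice")
    return (max(min(rows) - margin, 0), min(max(rows) + margin, n_rows - 1),
            max(min(cols) - margin, 0), min(max(cols) + margin, n_cols - 1))


def crop(image: Sequence[Sequence[float]], box: Box) -> List[List[float]]:
    """Crop one 2D image to the (inclusive) bounding box."""
    r0, r1, c0, c1 = box
    return [list(row[c0:c1 + 1]) for row in image[r0:r1 + 1]]


# Toy lesion: the ROI sits in a different place on each of two slices.
slice_a = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
slice_b = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 0],
    [0, 0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]
box = projected_bounding_box([slice_a, slice_b])        # (1, 3, 1, 4)
padded = projected_bounding_box([slice_a, slice_b], 1)  # (0, 4, 0, 5)

# Toy image whose pixel value encodes its position (row * 10 + col).
image = [[r * 10 + c for c in range(6)] for r in range(5)]
patch = crop(image, box)
```

In a pipeline of this kind, one box per lesion would presumably be applied to the pre-contrast and all post-contrast images so that every time frame covers the same region; that reuse is an assumption here, not a detail given in the abstract.
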
The second model, developed from transfer learning in Testing Dataset-1, was then applied to Testing Dataset-2 for evaluation. The process was repeated using Testing Dataset-2 for transfer learning and Testing Dataset-1 for evaluation.

Results

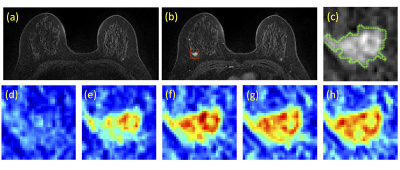

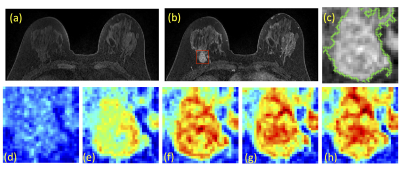

Figures 3 and 4 show two case examples, with the smallest bounding box used in deep learning. All results are summarized in Table 1. The accuracy obtained in the training process was evaluated using 10-fold cross-validation, and the range and mean values were reported. When using the conventional CNN, the mean accuracy was 0.79 in the Training dataset, but when the model was applied to Testing-1 and Testing-2, the accuracy was very low, only 0.52 and 0.47, respectively. When transfer learning was applied, the mean accuracy in Testing-1 reached 0.91, and the new model improved accuracy in Testing-2 from 0.47 to 0.78. When using the recurrent network with CLSTM, the mean accuracy was 0.91 in the Training dataset, higher than the 0.79 obtained using CNN. When the model was applied to Testing-1 and Testing-2, the accuracy was also very low, only 0.44 and 0.39, respectively. When transfer learning was applied, the mean accuracy reached 0.83 in Testing-1, and accuracy in Testing-2 improved from 0.39 to 0.74. The results were similar when using Testing Dataset-2 for transfer learning, which also greatly improved the accuracy in Testing Dataset-1.

Discussion

In standard practice for breast cancer, hormonal receptor and HER2 receptor status must be evaluated so that the patient can receive targeted therapies that are known to be effective, including hormonal therapy using tamoxifen and aromatase inhibitors, and HER2-targeting therapy using trastuzumab and pertuzumab. Therefore, an accurate diagnosis of the subtype is very important. Imaging may provide a complementary approach, especially for patients who receive neoadjuvant chemotherapy and whose residual tissue is not sufficient for analysis. The training and testing datasets in this study were acquired using different systems (Siemens 1.5T vs. GE 3T) with different protocols (non-fat-sat vs. fat-sat), which might explain the very low accuracy when the model developed from Training was directly applied to the Testing datasets. We further showed that transfer learning was an efficient method to re-tune the model for a different dataset. After transfer learning using one testing dataset, the accuracy was greatly improved in the second testing dataset. Lastly, we compared the results obtained using CNN and CLSTM, and showed that the recurrent network was a better architecture for analyzing DCE-MRI images acquired in a time series, a finding that has been reported by several other studies as well. In conclusion, our results show that deep learning provides an efficient method to extract subtle information from images to improve prediction of breast cancer molecular subtypes.

Acknowledgements

This work was supported in part by NIH R01 CA127929 and R21 CA208938.

References

[1] Nie K, Chen JH, Yu HJ, Chu Y, Nalcioglu O, Su MY. Quantitative analysis of lesion morphology and texture features for diagnostic prediction in breast MRI. Acad Radiol. 2008;15(12):1513-1525.

[2] Zhou J, Zhang Y, Chang KT, et al. Diagnosis of Benign and Malignant Breast Lesions on DCE-MRI by Using Radiomics and Deep Learning With Consideration of Peritumor Tissue. J Magn Reson Imaging. 2019 Nov 1. doi: 10.1002/jmri.26981. [Epub ahead of print]

[3] Shi L, Zhang Y, Nie K, et al. Machine learning for prediction of chemoradiation therapy response in rectal cancer using pre-treatment and mid-radiation multi-parametric MRI. Magn Reson Imaging 2019;61:33–40.

[4] Lang N, Zhang Y, Zhang E, et al. Differentiation of spinal metastases originated from lung and other cancers using radiomics and deep learning based on DCE-MRI. Magn Reson Imaging. 2019 Feb 28. pii: S0730-725X(18)30672-6. doi: 10.1016/j.mri.2019.02.013. [Epub ahead of print]

[5] LeCun Y, Bengio Y. Convolutional networks for images, speech, and time series. The handbook of brain theory and neural networks. 1995;3361(10):1995.

[6] Kingma D, Ba J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980. 2014 Dec 22.

[7] Nair V, Hinton GE. Rectified linear units improve restricted boltzmann machines. In Proceedings of the 27th international conference on machine learning (ICML-10) 2010 (pp. 807-814).

[8] Srivastava N, Hinton GE, Krizhevsky A, Sutskever I, Salakhutdinov R. Dropout: a simple way to prevent neural networks from overfitting. Journal of machine learning research. 2014 Jan 1;15(1):1929-58.

[9] Shi X, Chen Z, Wang H, Yeung DY, Wong WK, Woo WC. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. In Advances in neural information processing systems 2015 (pp. 802-810).

Figures