3523

A 3D attention model based Recurrent Neural Network for Alzheimer’s Disease Diagnosis

Jie Zhang1,2, Xiaojing Long1, Xin Feng2, and Dong Liang1

1Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China, 2Chongqing University of Technology, Chongqing, China

Synopsis

Early diagnosis of Alzheimer’s disease (AD) is important for patient care and disease management, but it remains challenging. In this work, we propose a 3D attention model based densely connected convolutional neural network that learns multilevel features of MR brain images for AD classification and prediction. The network was constructed with an emphasis on interior resource utilization and introduces the attention mechanism into AD classification for the first time. Our results show that the proposed model is effective for AD classification.

Introduction

Alzheimer’s disease (AD) is a progressive and irreversible degenerative brain disorder. Mild cognitive impairment (MCI) is the prodromal stage of the disease and carries a high risk of progressing to AD. However, early diagnosis of AD is still challenging, especially predicting the conversion from MCI to AD. In recent years, machine learning and deep learning methods have been widely used to analyze multi-modality neuroimages for quantitative evaluation and computer-aided diagnosis (CAD) of AD. In this study, we propose a new network based on a 3D attention model for AD diagnosis and prediction.

Dataset and Method

The data used in this work were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database. We retrieved the T1-weighted MR imaging data from the baseline visits of 968 participants: 280 AD subjects, 162 MCI subjects who converted to AD within 18 months (cMCI), 251 MCI subjects who did not convert within 5 years (sMCI), and 275 normal controls (NC).

We propose a 3D attention model based densely connected convolutional neural network (CWAN) to learn the multilevel features of MR brain images for AD classification. First, we divided the entire brain image into 64 local areas with the same size, each of which was 96×120×96. A connection-wise attention model based densely connected convolutional neural network was then built to hierarchically transform the MR image into more compact high-level features by adding a threshold value to each earlier layer. The features of each layer were interconnected with the features of all previous layers, and the last layer was combined with a fully connected layer followed by softmax for AD classification. The proposed method automatically learned general multilevel features for classification and was, to some extent, robust to scale and rotation variations.

Our network consisted of four types of layers. The first was the input layer, which accepted a 3D MR image of fixed size (96×120×96 voxels in this work). The second was the convolutional layer, which convolved the learned filters with the input image and produced a feature map for each filter. The CWAN was built with 8 convolutional layers, with every two convolutional layers followed by a max pooling layer. The size of the convolution filters was 3×3×3, and the number of filters was set to 35. The third was the pooling layer, which down-sampled the input feature map along the spatial dimensions by replacing each non-overlapping block with its maximum. Max pooling was applied to each 2×2×2 region, and Tanh was adopted as the activation function in these layers because of its good performance for CNNs. The fourth was the fully connected layer, which consisted of a number of input and output neurons.
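To make the layer arithmetic above concrete, the following minimal sketch traces the spatial size of the feature maps through 8 convolutional layers with a 2×2×2 max pooling after every second one. It is an illustration only, not the authors' implementation. The text does not state the padding scheme or how odd dimensions are handled, so the sketch assumes size-preserving ("same") padding and round-down pooling. The function name `trace_shapes` is hypothetical, and the channel count shown is the per-layer filter count, ignoring the dense concatenation of earlier features.

```python
# Hypothetical shape trace for the layer layout described in the text.
# Assumptions (not stated in the source): 'same' padding for the 3x3x3
# convolutions and floor division when pooling an odd-sized axis.
INPUT_SHAPE = (96, 120, 96)   # voxels, from the text
NUM_CONV_LAYERS = 8           # from the text
CONVS_PER_POOL = 2            # one max pooling layer after every two conv layers
NUM_FILTERS = 35              # from the text
POOL = 2                      # 2x2x2 non-overlapping max pooling


def trace_shapes(input_shape=INPUT_SHAPE):
    """Return a list of (stage_name, spatial_shape, channels) tuples."""
    shape = tuple(input_shape)
    stages = [("input", shape, 1)]
    for layer in range(1, NUM_CONV_LAYERS + 1):
        # Assumed 'same' padding: the convolution keeps the spatial size.
        stages.append((f"conv{layer}", shape, NUM_FILTERS))
        if layer % CONVS_PER_POOL == 0:
            # Non-overlapping pooling halves each axis (odd sizes round down).
            shape = tuple(s // POOL for s in shape)
            stages.append((f"pool{layer // CONVS_PER_POOL}", shape, NUM_FILTERS))
    return stages


if __name__ == "__main__":
    for name, shape, channels in trace_shapes():
        print(f"{name:>6}: {shape} x {channels} channels")
```

Under these assumptions, the four pooling stages reduce the 96×120×96 input to 6×7×6 per feature map before the fully connected layer. A different padding or rounding choice would change these numbers.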

Result

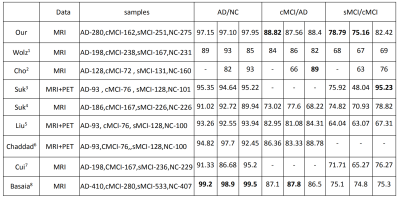

The proposed method showed good classification power, achieving accuracies of 97.15%, 88.82%, and 78.79% in differentiating mild AD, cMCI, and sMCI subjects, respectively, from normal controls. Compared with three other popular networks (AlexNet, ResNet, and DenseNet), our method achieved higher accuracy than all of them in classifying AD vs NC, cMCI vs NC, and sMCI vs cMCI (Table 1). AlexNet performed worst in group classification, with accuracies of 85.07% and 79.32% for AD vs NC and cMCI vs NC, and 72.19% for cMCI vs sMCI. Compared with state-of-the-art methods, our accuracy was the highest among all compared algorithms in classifying cMCI vs NC and sMCI vs cMCI (Table 2); in particular, it was almost 3% higher than that of the second-best model in classifying sMCI vs cMCI.

Conclusion

In this paper, we have proposed a new design of a multimodal 3D CNN for Alzheimer’s disease diagnostics, inspired by DenseNet, which has shown efficiency on 2D datasets. The proposed network is constructed with an emphasis on interior resource utilization. The classification of sMCI versus cMCI can be used to judge whether MCI will convert to AD and can support doctors' follow-up treatment. The results showed that the proposed method obtained superior classification accuracy compared with other networks and methods reported in the literature. A possible reason is that, in our method, each 3D CNN layer selectively combined low-level feature maps to generate higher-level features, which may make the network more robust to translation and rotation variations in the image.

Acknowledgements

Data collection and sharing for this project was funded by the Alzheimer’s Disease Neuroimaging Initiative (ADNI) (National Institutes of Health Grant U01 AG024904) and DOD ADNI (Department of Defense award number W81XWH-12-2-0012). This research was supported by Ostrogradsky scholarship grant 2017 established by French Embassy in Russia and TOUBKAL French-Morocco research grant Alclass. We thank Dr. Pierrick Coupe from LABRI UMR 5800 University of Bordeaux/CNRS/Bordeaux-IPN who provided insight and expertise that greatly assisted the research.

References

- Robin W, Valtteri J, Juha K, et al. Multi-Method Analysis of MRI Images in Early Diagnostics of Alzheimer's Disease[J]. PLoS ONE, 2011, 6(10): e25446.

- Cho Y, Seong J K, Jeong Y, et al. Individual subject classification for Alzheimer's disease based on incremental learning using a spatial frequency representation of cortical thickness data[J]. NeuroImage, 2012, 59(3): 2217-2230.

- Suk H I, Lee S W, Shen D. Hierarchical feature representation and multimodal fusion with deep learning for AD/MCI diagnosis[J]. NeuroImage, 2014, 101: 569-582.

- Suk H I, Lee S W, Shen D. Deep ensemble learning of sparse regression models for brain disease diagnosis[J]. Medical Image Analysis, 2017, 37: 101-113.

- Liu M, Cheng D, Wang K, et al. Multi-Modality Cascaded Convolutional Neural Networks for Alzheimer’s Disease Diagnosis[J]. Neuroinformatics, 2018.

- Basaia S, Agosta F, Wagner L, et al. Automated classification of Alzheimer's disease and mild cognitive impairment using a single MRI and deep neural networks[J]. NeuroImage: Clinical, 2019, 21: 101645.

- Chaddad A, Desrosiers C, Niazi T. Deep radiomic analysis of MRI related to Alzheimer’s disease[J]. IEEE Access, 2018, 6: 58213-58221.

- Cui R, Liu M, Alzheimer's Disease Neuroimaging Initiative. RNN-based longitudinal analysis for diagnosis of Alzheimer’s disease[J]. Computerized Medical Imaging and Graphics, 2019, 73: 1-10.