3519

Simulated CMR images can improve the performance and generalization capability of deep learning-based segmentation algorithms

1Biomedical Engineering Department, Eindhoven University of Technology, Eindhoven, Netherlands, 2Philips Research Laboratories, Hamburg, Germany, 3Philips Healthcare, MR R&D - Clinical Science, Best, Netherlands

Synopsis

The generalization capability of deep learning-based segmentation algorithms across different sites and vendors, as well as MRI data with high variance in contrast, is limited. This affects the usability of such automated segmentation algorithms in clinical settings. The lack of freely accessible medical datasets additionally limits the development of stable models. In this work, we explore the benefits of adding a simulated dataset, containing realistic contrast variance, into the training procedure of the neural network for one of the most clinically important segmentation tasks, the CMR ventricular cavity segmentation.

Introduction

Cardiovascular magnetic resonance (CMR) imaging is often used for clinical evaluation of cardiac function, where accurate segmentation of multiple cardiac tissues in both the end-diastolic (ED) and end-systolic (ES) phases is one of the primary tasks [1]. Recent advances in medical image processing largely focus on achieving fully automated segmentation, with deep learning-based methods showing promising results [2, 3]. However, such results are achievable only for a narrow range of applications, mainly defined by the type of training data used. MRI acquisition can have a substantial impact on tissue segmentation performance, as the abundance of acquisition protocols and parameters results in a wide range of image appearances and influences image quality. Even state-of-the-art models show degraded accuracy when tested on data from MR imaging sequences or scanners that do not match those of the training data [4, 5]. A straightforward solution is to train the algorithms with enough data to cover the overall range of variability. This poses a particular challenge in the medical domain, where data is limited and mostly confidential, and obtaining accurate ground truth annotations is costly [6]. To tackle this problem, we propose to incorporate simulated CMR images, obtained from a virtual population of realistic anatomical masks, into the training procedure of a 2D U-Net with the aim of segmenting the heart ventricular cavity in short-axis cine CMR images.

Methods

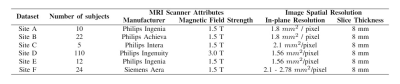

Short-axis cine MR images were obtained from six different sites, with highly heterogeneous contrasts (as seen in figure 1) due to differences in scanner vendors and models, as well as variable scanner parameters, as summarized in table 1. The segmentation ground truth is provided for both ED and ES phases and contains expert manual segmentations of the right ventricular blood pool (RV), left ventricular blood pool (LV) and left ventricular myocardium (LVM).

To account for this variance, we propose adding simulated CMR images to the training set. The images are obtained using the XCAT phantom as a human anatomical model, where several parameters were varied to provide realistic variance in the heart's LV function, orientation and position inside the torso. By setting the sequence parameters to the real ones and scaling the simulated signal intensity, we match the contrast of the simulated images to that of their real counterparts. Samples of simulated images are shown in figure 2.

The experiments performed in this study are designed to evaluate whether the addition of simulated data into the training set improves the overall segmentation performance (table 2), as well as how it affects the generalization capability of neural networks to data coming from unseen sites (table 3).
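
Segmentation performance is reported in the Results as Dice and intersection over union (IoU) scores. As a minimal sketch of how these two overlap metrics can be computed for one structure, the snippet below uses plain Python on flattened label maps. The label coding (`RV`, `LVM`, `LV`), the toy label lists and the convention of returning 1.0 when a structure is absent from both maps are illustrative assumptions, not details taken from this study.

```python
def dice_and_iou(pred, truth, label):
    """Return (Dice, IoU) for one label over two equally sized flat label lists."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must contain the same number of pixels")
    p = [v == label for v in pred]    # binary mask of the prediction
    t = [v == label for v in truth]   # binary mask of the ground truth
    intersection = sum(a and b for a, b in zip(p, t))
    size_sum = sum(p) + sum(t)
    union = size_sum - intersection
    if size_sum == 0:
        # Assumed convention: structure absent in both maps counts as a perfect match.
        return 1.0, 1.0
    return 2.0 * intersection / size_sum, intersection / union


# Hypothetical label coding and toy data, for illustration only.
BACKGROUND, RV, LVM, LV = 0, 1, 2, 3
truth = [0, 1, 1, 2, 2, 3, 3, 3]
pred = [0, 1, 0, 2, 2, 3, 3, 2]

for name, label in (("RV", RV), ("LVM", LVM), ("LV", LV)):
    dice, iou = dice_and_iou(pred, truth, label)
    print(f"{name}: Dice={dice:.3f}, IoU={iou:.3f}")
```

For a single structure the two scores are related by Dice = 2·IoU / (1 + IoU), so they always rank two segmentations of that structure in the same order.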

We adopt a 2D U-Net architecture to perform a multi-structure cardiac segmentation task, following the recommendations of the nnU-Net framework [7, 8]. We choose the 2D U-Net over a 3D U-Net primarily due to the anisotropic nature of the multi-site data. Initially, all data is pre-processed by normalizing to zero mean and unit standard deviation and by resampling to a fixed voxel spacing of 1.5 mm × 1.5 mm × 1.5 mm. Data augmentation is applied on the fly to increase the variety of the training set and avoid over-fitting, using elastic deformations, random scaling and random rotations.
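
The two pre-processing steps can be sketched as follows. This is a minimal illustration in plain Python, not the pipeline used in the study: `zscore` and `resampled_shape` are hypothetical helper names, the example volume size and spacing are invented, and only the target grid size is computed here (the interpolation itself would normally be delegated to an image-processing library).

```python
import math


def zscore(values):
    """Normalize a flat list of intensities to zero mean and unit standard deviation."""
    n = len(values)
    mean = sum(values) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    if std == 0:
        return [0.0] * n  # constant image: nothing to scale
    return [(v - mean) / std for v in values]


def resampled_shape(shape, spacing_mm, target_mm=1.5):
    """Grid size after resampling each axis to the target voxel spacing.

    The physical extent (voxels * spacing) is kept, so the new voxel count
    along an axis is extent / target spacing, rounded to an integer.
    """
    return tuple(max(1, round(n * s / target_mm)) for n, s in zip(shape, spacing_mm))


# Hypothetical short-axis stack: 256 x 256 pixels in-plane, 10 slices.
shape = (256, 256, 10)
spacing_mm = (1.25, 1.25, 9.0)  # assumed in-plane resolution and slice spacing
print(resampled_shape(shape, spacing_mm))

normalized = zscore([100.0, 200.0, 300.0, 400.0])
print([round(v, 3) for v in normalized])
```

The example also illustrates the anisotropy mentioned above: with a slice spacing several times larger than the in-plane resolution, most through-plane voxels on a 1.5 mm grid are interpolated rather than measured.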

During training, a random batch of 32 2D short-axis slices was fed into the network at each iteration. To monitor over-fitting, we hold out a subset of the training data as a validation set. The network structure is similar to the one proposed in the original paper [9], with the addition of batch normalization and leaky ReLU activation functions, as well as dropout regularization (dropout rate of 0.4). We use the sum of cross-entropy and Dice loss as the loss function, optimized with the Adam optimizer using an initial learning rate of 0.001 and a weight decay of 5 × 10⁻⁵.
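
To make the combined loss concrete, the sketch below evaluates the sum of a cross-entropy term and a Dice term for a handful of pixels in plain Python. The exact Dice formulation is not specified here, so the snippet assumes a soft Dice averaged over classes with equal weighting of the two terms; `ce_plus_dice_loss` and the smoothing constant `eps` are illustrative choices rather than the implementation used in the study.

```python
import math


def softmax(logits):
    """Numerically stable softmax over the class scores of one pixel."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ce_plus_dice_loss(logits, targets, eps=1e-6):
    """Cross-entropy plus (1 - mean soft Dice) over a list of pixels.

    logits:  one list of class scores per pixel
    targets: one integer class index per pixel
    """
    probs = [softmax(px) for px in logits]
    n_classes = len(logits[0])

    # Mean cross-entropy of the true class.
    ce = -sum(math.log(p[t] + eps) for p, t in zip(probs, targets)) / len(targets)

    # Soft Dice per class, using probabilities instead of hard labels.
    dice_per_class = []
    for c in range(n_classes):
        intersection = sum(p[c] for p, t in zip(probs, targets) if t == c)
        denominator = sum(p[c] for p in probs) + sum(1 for t in targets if t == c)
        dice_per_class.append((2.0 * intersection + eps) / (denominator + eps))
    dice_loss = 1.0 - sum(dice_per_class) / n_classes

    return ce + dice_loss


# Three pixels, three classes: confident scores for classes 0, 1 and 2.
logits = [[10.0, 0.0, 0.0], [0.0, 10.0, 0.0], [0.0, 0.0, 10.0]]
print(ce_plus_dice_loss(logits, [0, 1, 2]))  # matching targets: small loss
print(ce_plus_dice_loss(logits, [1, 2, 0]))  # mismatched targets: large loss
```

The cross-entropy term penalizes each pixel independently, while the Dice term measures region overlap per class, which makes the sum less sensitive to the large background area in short-axis slices.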

Results

Table 2 compares the performance of the networks trained with and without simulated data, where we systematically increase the number of simulated images in the training set. In all cases, both the Dice score and the intersection over union (IoU) score increase as more simulated data is included. Table 3 shows the segmentation performance of the network on images from different sites. Since the majority of the simulated data is designed to match the contrast variance in site F, the improvements in segmentation are largest for that particular site.

Discussion and Conclusion

The results obtained in this study indicate a promising way to address the limited data availability and generalization capability of neural networks in medical image segmentation tasks, both of which affect the use of DL-based methods in clinical settings. This is achieved even without highly realistic simulations, which we hypothesize is mainly due to the availability of highly accurate “ground-truth” and the inclusion of high contrast variance. Future work involves understanding how much realism is needed for networks to achieve clinically acceptable performance across larger and more varied datasets and tasks, such as in large multi-center studies. Moreover, the proposed method can serve as a better and more realistic data augmentation strategy compared to existing methods, as well as improve transfer learning and adaptation methods.

Acknowledgements

This research is a part of the OpenGTN project, supported by the European Union in the Marie Curie Innovative Training Networks (ITN) fellowship program under project No. 764465.

References

1. Weinsaft JW, Klem I, Judd RM. MRI for the assessment of myocardial viability. Magnetic resonance imaging clinics of North America. 2007 Nov 1;15(4):505-25.

2. Işın A, Direkoğlu C, Şah M. Review of MRI-based brain tumor image segmentation using deep learning methods. Procedia Computer Science. 2016 Jan 1;102:317-24.

3. Litjens G, Ciompi F, Wolterink JM, de Vos BD, Leiner T, Teuwen J, Išgum I. State-of-the-Art Deep Learning in Cardiovascular Image Analysis. JACC: Cardiovascular Imaging. 2019 Aug 1;12(8):1549-65.

4. Bai W, Sinclair M, Tarroni G, Oktay O, Rajchl M, Vaillant G, Lee AM, Aung N, Lukaschuk E, Sanghvi MM, Zemrak F. Automated cardiovascular magnetic resonance image analysis with fully convolutional networks. Journal of Cardiovascular Magnetic Resonance. 2018 Dec;20(1):65.

5. Petitjean C, Dacher JN. A review of segmentation methods in short axis cardiac MR images. Medical image analysis. 2011 Apr 1;15(2):169-84.

6. Chen C, Bai W, Davies RH, Bhuva AN, Manisty C, Moon JC, Aung N, Lee AM, Sanghvi MM, Fung K, Paiva JM. Improving the generalizability of convolutional neural network-based segmentation on CMR images. arXiv preprint arXiv:1907.01268. 2019 Jul 2.

7. Isensee F, Petersen J, Klein A, Zimmerer D, Jaeger PF, Kohl S, Wasserthal J, Koehler G, Norajitra T, Wirkert S, Maier-Hein KH. nnU-Net: Self-adapting framework for U-Net-based medical image segmentation. arXiv preprint arXiv:1809.10486. 2018 Sep 27.

8. Isensee F, Petersen J, Kohl SA, Jäger PF, Maier-Hein KH. nnU-Net: Breaking the Spell on Successful Medical Image Segmentation. arXiv preprint arXiv:1904.08128. 2019 Apr 17.

9. Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention 2015 Oct 5 (pp. 234-241). Springer, Cham.

Figures