3514

Multi-scale Entity Encoder-decoder Network Learning for Stroke Lesion Segmentation

1Paul C. Lauterbur Research Center for Biomedical Imaging, Shenzhen Inst. of Advanced Technology, Shenzhen, China, 2Case Western Reserve University, Cleveland, OH, United States

Synopsis

Encoder-decoder structures have demonstrated encouraging progress in biomedical image segmentation. Nevertheless, the segmentation of stroke lesions still poses many challenges, including diverse lesion locations, variations in lesion scale, and fuzzy lesion boundaries. To address these challenges, this paper proposes a deep neural network architecture, the Multi-Scale Deep Fusion Network (MSDF-Net), which uses Atrous Spatial Pyramid Pooling (ASPP) for feature extraction at different scales and capsules to handle complicated relative positions between entities. Experimental results show that the proposed model achieves higher evaluation scores than five competing models.

INTRODUCTION

The development of brain image segmentation technology has undergone several stages. Medical image segmentation was previously performed manually, with well-trained experts delineating stroke lesions by hand 1. However, this manual process was time-consuming and relied heavily on subjective judgment. Thus, research into automatic segmentation of T1-weighted (T1W) images is critical 2. Several challenges must be overcome to segment these images precisely 3. First, T1 scans exhibit many shapes and intensities similar to those of stroke lesions. Second, the location and size of lesions vary widely. Third, the ambiguity and complex appearance of strokes result in blurred boundaries between lesions and non-lesions. Classical encoder-decoder architectures are also limited: fixed convolutions degrade their ability to capture global context, and they have difficulty handling complex information (e.g. the relative position between entities).

Based on the above observations, we propose a Multi-Scale Deep Fusion Network (MSDF-Net) model for stroke lesion segmentation. Specifically, we designed a multi-scale deep fusion scheme that combines the merits of Atrous Spatial Pyramid Pooling (ASPP) 4 for multi-scale prediction with a capsule network 5, in order to make full use of the global context and reduce the influence of blurred lesion edges on lesion segmentation.

METHODS
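To illustrate how atrous (dilated) convolution widens the context seen by a fixed-size kernel, the following minimal sketch applies one 3-tap kernel at several dilation rates to a 1D signal and stacks the outputs, which is the core idea behind ASPP. It is a toy illustration and not the MSDF-Net implementation: the function names, the 1D setting, the summing kernel, and the rates (1, 2, 4) are all assumptions chosen for readability.

```python
def atrous_conv1d(signal, kernel, rate):
    """Zero-padded 'same' 1D cross-correlation with dilation `rate`.

    Assumes an odd-length kernel so the output aligns with the input.
    """
    k = len(kernel)
    half = (k // 2) * rate  # padding needed on each side
    padded = [0.0] * half + list(signal) + [0.0] * half
    out = []
    for i in range(len(signal)):
        # Taps are spaced `rate` samples apart, so the kernel spans
        # (k - 1) * rate + 1 input samples without adding any weights.
        out.append(sum(kernel[j] * padded[i + j * rate] for j in range(k)))
    return out


def receptive_field(kernel_size, rate):
    """Number of input samples spanned by one dilated kernel."""
    return (kernel_size - 1) * rate + 1


def aspp_like(signal, kernel, rates=(1, 2, 4)):
    """Run the same kernel at several rates and stack per-position features."""
    branches = [atrous_conv1d(signal, kernel, r) for r in rates]
    # Each position receives one feature per rate (a multi-scale descriptor).
    return [list(feats) for feats in zip(*branches)]


signal = [0, 0, 0, 1, 1, 1, 1, 0, 0, 0]  # toy "lesion" of width 4
kernel = [1.0, 1.0, 1.0]                  # hypothetical summing kernel
features = aspp_like(signal, kernel)

for rate in (1, 2, 4):
    print(f"rate={rate}: receptive field = {receptive_field(3, rate)} samples")
print("multi-scale features at position 4:", features[4])
```

With the same three weights, the branch at rate 4 spans nine samples instead of three, so a single position can respond to both small and large structures.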

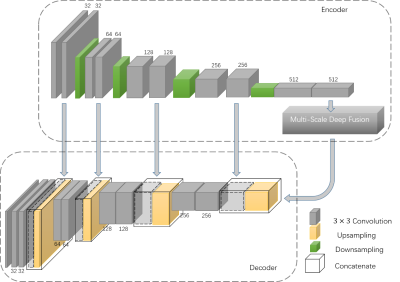

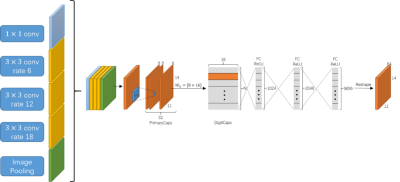

Figure 1 shows the architecture of the proposed MSDF-Net. This architecture consists of three sub-models, denoted as the 'encoder', 'multi-scale fusion' and 'decoder', respectively. The encoder extracts features from the MR image through several convolution and pooling layers, and the multi-scale deep fusion module subsequently extracts multi-scale complex information from them. These features are then fed into the decoder, which recovers rich and accurate information through convolution and up-sampling layers. However, pooling layers tend to reduce resolution, leading to a loss of detail in the recovered image. Therefore, we introduce several connections between the encoder and decoder to transfer detail information, shown in gray in Figure 1. The multi-scale deep feature fusion process, shown in Figure 2, is composed of three components: the ASPP 4, capsule routing and reconstruction.

EXPERIMENT
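One practical consequence of repeated pooling is that encoder and decoder feature maps only line up at the connections between them when each spatial dimension is divisible by 2 raised to the number of pooling layers. The sketch below traces sizes through a hypothetical encoder with four 2 × 2 pooling layers for a 233 × 197 slice and for a 224 × 176 slice, the two image sizes used in this work. The depth of four is an assumption, since the abstract does not state the number of pooling layers, and the function names are illustrative.

```python
def encoder_sizes(size, num_pools):
    """Spatial size after each 2x2, stride-2 pooling (floor division)."""
    sizes = [size]
    for _ in range(num_pools):
        sizes.append(sizes[-1] // 2)
    return sizes


def decoder_sizes(bottleneck, num_pools):
    """Spatial size after each 2x up-sampling step."""
    sizes = [bottleneck]
    for _ in range(num_pools):
        sizes.append(sizes[-1] * 2)
    return sizes


def skip_shapes_match(size, num_pools=4):
    """True if every decoder stage matches the encoder stage it connects to."""
    enc = encoder_sizes(size, num_pools)
    dec = decoder_sizes(enc[-1], num_pools)
    # Decoder stages are visited in reverse depth order, so reverse to compare.
    return enc == dec[::-1]


for height, width in [(233, 197), (224, 176)]:
    ok = skip_shapes_match(height) and skip_shapes_match(width)
    print(f"{height} x {width}: encoder/decoder sizes align = {ok}")
```

Under this assumption, 224 and 176 are both multiples of 16, so every up-sampled map matches its encoder counterpart, whereas 233 and 197 would require padding or cropping at each connection.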

We adopted a subset of the open-source ATLAS dataset 2 containing 229 subjects. We randomly selected 160 subjects for training and 69 for testing. In addition, we cropped the images from 233 × 197 to 224 × 176 to match the input size of the network. We compared our approach with several well-established methods, including U-Net 6, SegNet 7, DeepLabv3+ 4, PSPNet 8, and FCN-8s 9. All of these methods were configured according to their original articles. The training settings for our approach were as follows: weights were initialized from a Gaussian distribution, Dice loss was used as the loss function, weight decay was applied, and the Adam optimizer was used for gradient optimization. The learning rate was set to 0.0001.

RESULTS
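The Dice loss used for training and the overlap metrics used for evaluation (Dice score, sensitivity (SE) and Volumetric Overlap Error (VOE)) are all functions of the same voxel counts. The following minimal sketch uses the standard definitions, Dice = 2TP / (2TP + FP + FN), SE = TP / (TP + FN) and VOE = 1 - TP / (TP + FP + FN), on flat binary masks. The function names, the handling of empty masks, and the smoothing constant are assumptions; the abstract does not state which exact variants were used.

```python
def confusion_counts(pred, target):
    """Count true positives, false positives and false negatives."""
    tp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, target) if p == 0 and t == 1)
    return tp, fp, fn


def dice_score(pred, target):
    tp, fp, fn = confusion_counts(pred, target)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom  # empty vs. empty counts as perfect


def sensitivity(pred, target):
    tp, _, fn = confusion_counts(pred, target)
    return 1.0 if tp + fn == 0 else tp / (tp + fn)


def voe(pred, target):
    tp, fp, fn = confusion_counts(pred, target)
    union = tp + fp + fn
    return 0.0 if union == 0 else 1.0 - tp / union  # lower is better


def soft_dice_loss(probs, target, eps=1e-6):
    """Dice loss on probabilities in [0, 1]; `eps` avoids division by zero."""
    intersection = sum(p * t for p, t in zip(probs, target))
    total = sum(probs) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (total + eps)


target = [0, 1, 1, 1, 0, 0]  # toy ground-truth mask
pred = [0, 1, 1, 0, 1, 0]    # toy prediction: TP=2, FP=1, FN=1

print("Dice:", dice_score(pred, target))
print("SE:  ", sensitivity(pred, target))
print("VOE: ", voe(pred, target))
print("loss:", soft_dice_loss([float(p) for p in pred], target))
```

Note that a higher Dice score and SE indicate better overlap, while a lower VOE does, so an "improvement" in VOE corresponds to a decrease.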

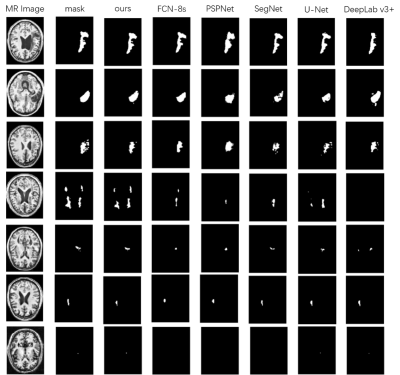

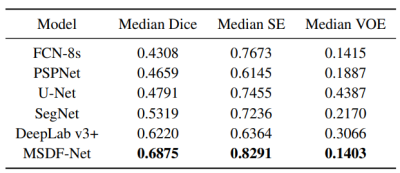

The predictions of each model are shown in Figure 3. For difficult samples with small lesions, our method showed a stronger capability to identify and segment them, and for large lesions, our model recovered more detailed boundary information. This demonstrates the effectiveness of the proposed method in improving segmentation accuracy. In Table 1, performance is evaluated using the Dice score, sensitivity (SE) and Volumetric Overlap Error (VOE). Our proposed method performs better than all five comparison methods, with improvements of 0.0655, 0.0618 and 0.0012 in the median Dice score, SE and VOE, respectively, over the best result achieved by the other models. The experiment demonstrates the strong generalization capability and promising effectiveness of the proposed MSDF-Net model.

CONCLUSION

We propose MSDF-Net, a new model that automatically segments stroke lesions. The network is equipped with multi-scale feature extraction to exploit complementary information across scales. We use capsules in place of the commonly used convolution operation and generate features with complex biomedical properties through the agreement of active capsules. We compare the proposed method with several widely used frameworks, and our model achieves the highest Dice score on the ATLAS dataset, which confirms the validity of the proposed method. However, because of the dynamic routing and feature reconstruction between vectors, the computational cost and parameter count of the module are large. Future work will focus on improving the design of the module to reduce computation.

Acknowledgements

This research was partially supported by the National Natural Science Foundation of China (61601450, 61871371, 81830056), Science and Technology Planning Project of Guangdong Province (2017B020227012, 2018B010109009), Youth Innovation Promotion Association Program of Chinese Academy of Sciences (2019351), and the Basic Research Program of Shenzhen (JCYJ20180507182400762).

References

1. J. A. Fiez, H. Damasio et al. Lesion segmentation and manual warping to a reference brain: Intra- and interobserver reliability. Human Brain Mapping. 2000;9(4):192-211.

2. S. L. Liew, J. M. Anglin et al. A large, open source dataset of stroke anatomical brain images and manual lesion segmentations. Scientific Data. 2018;5:180011.

3. D. Duncan, G. Barisano et al. Analytic Tools for Post-traumatic Epileptogenesis Biomarker Search in Multimodal Dataset of an Animal Model and Human Patients. Frontiers in neuroinformatics. 2018;12.

4. L. C. Chen, Y. Zhu et al. Encoder-decoder with atrous separable convolution for semantic image segmentation. Proc. ECCV. 2018;801-818.

5. S. Sabour, N. Frosst, and G. E. Hinton. Dynamic routing between capsules. Proc. NIPS. 2017;3856-3866.

6. O. Ronneberger, P. Fischer et al. U-net: Convolutional networks for biomedical image segmentation. Proc. MICCAI. 2015;234-241.

7. V. Badrinarayanan, A. Kendall et al. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Analysis and Machine Intelligence. 2017;39(12):2481-2495.

8. H. Zhao, J. Shi et al. Pyramid scene parsing network. Proc. CVPR. 2017;2881-2890.

9. J. Long, E. Shelhamer et al. Fully convolutional networks for semantic segmentation. Proc. CVPR. 2015;3431-3440.
