3510

A deep neural network with convolutional LSTM for brain tumor segmentation in multi-contrast volumetric MRI

¹Korea Advanced Institute of Science and Technology, Daejeon, Republic of Korea

Synopsis

Medical image segmentation is a key step in the contouring performed for radiotherapy planning and has been widely studied. In this work, we propose a method that uses inter-slice context to delineate small objects, such as tumor tissues, in 3D volumetric MR images by adding recurrent neural network layers to an existing 2D convolutional neural network. Because 3D volumetric data can be regarded as a sequence of 2D slices, we apply convolutional long short-term memory (ConvLSTM) layers. Our analysis showed that both the correlation between neighboring segmentation maps and the overall segmentation performance improved.

Introduction

Accurate medical image segmentation is essential in clinical practice. Automatic approaches have therefore been studied for a long time as a replacement for manual delineation, which is tedious and time-consuming. Their performance has improved considerably with the advent of deep neural networks, but there is still room for further development. A limitation of 2D convolutional neural networks (CNNs) is that they cannot exploit inter-slice context, because each slice is segmented independently. In this abstract, we propose a compact and efficient network that combines a 2D CNN with ConvLSTM layers so that inter-slice information can also be used.

Methods

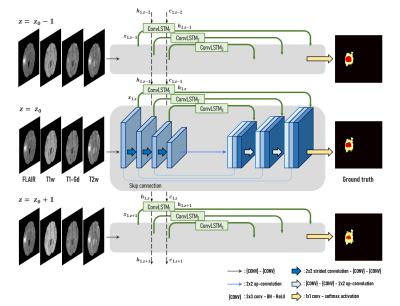

We used the multimodal Brain Tumor Segmentation Challenge (BraTS) 2018 dataset, which provides four MRI contrasts per subject: T1-weighted (T1w), contrast-enhanced T1 (T1-Gd), T2-weighted (T2w), and FLAIR volumes.1 The tumors are manually annotated into three sub-regions: gadolinium-enhancing tumor (ET), peritumoral edema (ED), and necrotic and non-enhancing tumor core (NCR/NET). We divide the multiclass segmentation task into three binary segmentation tasks by cascading three modules, each focusing on one tumor sub-region (Fig. 1-(a)). The three modules sequentially segment the whole tumor (WT), the tumor core (TC), and the enhancing tumor (ET). In the second and third modules, the input volume is multiplied by the output probability map of the previous module so that the network attends more to tumor tissue. Each module consists of two neural networks that read the entire volume in the top-down and bottom-up directions, respectively, and a merging block combines the feature maps from both networks (Fig. 1-(b)).

The proposed architecture combines ConvLSTM layers with a 2D U-Net.2,3 A ConvLSTM layer is a recurrent layer that passes feature maps extracted from previous slices forward through its internal cell state $$${c_{k,z}}$$$, which makes it well suited to image sequences such as video or 3D volumetric data. In the proposed architecture, a ConvLSTM layer is added at each level $$$k$$$ of the 2D U-Net so that the network learns dependencies at different resolutions, as shown in Fig. 2. The ConvLSTM layers memorize key features extracted while segmenting the previous slices and thereby exploit information along the z-axis.

This study used 159 multimodal MRI scans of glioblastoma (GBM/HGG) patients: 100 for training, 25 for validation, and 34 for testing.
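A ConvLSTM layer carries a cell state from one slice to the next, and each module reads the volume in both directions. The NumPy sketch below illustrates that mechanism under simplifying assumptions that are not taken from the abstract: a single ConvLSTM layer per direction applied directly to the input contrasts (rather than at every U-Net level $$$k$$$), the gate equations of Shi et al.2 without peephole terms, random untrained weights, and channel concatenation as a stand-in for the merging block, whose design is not specified here. The names `conv2d_same`, `ConvLSTMCell`, `scan`, and `bidirectional` are hypothetical.

```python
import numpy as np


def conv2d_same(x, w, b):
    """Zero-padded ('same') 2D cross-correlation.

    x: (C_in, H, W); w: (C_out, C_in, kH, kW) with odd kH, kW; b: (C_out,).
    Returns an array of shape (C_out, H, W).
    """
    c_out, _, kh, kw = w.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((0, 0), (ph, ph), (pw, pw)))
    h, wd = x.shape[1:]
    out = np.zeros((c_out, h, wd))
    for i in range(kh):
        for j in range(kw):
            # Add the contribution of kernel tap (i, j), summed over input channels.
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out + b[:, None, None]


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class ConvLSTMCell:
    """Minimal ConvLSTM cell without peephole connections (hypothetical sketch)."""

    def __init__(self, c_in, c_hid, k=3, seed=0):
        rng = np.random.default_rng(seed)
        # One stacked kernel maps [x_z, h_{z-1}] to the input, forget, output and candidate gates.
        self.w = rng.normal(0.0, 0.1, size=(4 * c_hid, c_in + c_hid, k, k))
        self.b = np.zeros(4 * c_hid)
        self.c_hid = c_hid

    def step(self, x, h, c):
        gates = conv2d_same(np.concatenate([x, h], axis=0), self.w, self.b)
        i, f, o, g = np.split(gates, 4, axis=0)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)  # cell state: memory carried across slices
        h = sigmoid(o) * np.tanh(c)                    # hidden feature map for the current slice
        return h, c


def scan(cell, volume):
    """Run the cell over slices in order. volume: (Z, C_in, H, W) -> (Z, C_hid, H, W)."""
    _, _, height, width = volume.shape
    h = np.zeros((cell.c_hid, height, width))
    c = np.zeros_like(h)
    outputs = []
    for x in volume:
        h, c = cell.step(x, h, c)
        outputs.append(h)
    return np.stack(outputs)


def bidirectional(volume, top_down_cell, bottom_up_cell):
    """Top-down and bottom-up passes, merged by channel concatenation (assumed merge)."""
    top_down = scan(top_down_cell, volume)
    bottom_up = scan(bottom_up_cell, volume[::-1])[::-1]  # re-align to the original slice order
    return np.concatenate([top_down, bottom_up], axis=1)


# Example: 8 slices of 4 contrasts (T1w, T1-Gd, T2w, FLAIR), 16x16 pixels, random data.
vol = np.random.default_rng(1).normal(size=(8, 4, 16, 16))
feats = bidirectional(vol, ConvLSTMCell(4, 6, seed=2), ConvLSTMCell(4, 6, seed=3))
print(feats.shape)  # (8, 12, 16, 16)
```

Because the top-down pass has seen only slices up to $$$z$$$ when it produces the features of slice $$$z$$$, the bottom-up pass is what supplies context from the slices on the other side.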
Because the data were acquired at multiple institutions, we reduced the variation in their intensity distributions by applying Gaussian (zero-mean, unit-variance) normalization as preprocessing. The loss function is the sum of the Dice loss and the cross-entropy loss, each computed by comparing the label with the probability map produced by the final activation. The Dice coefficient, a standard metric in many medical image segmentation challenges, measures the overlap between prediction and ground truth: $$DICE(X,Y) = \frac{2\big|X\cap Y\big|}{\big|X\big|+\big|Y\big|} \qquad (X:\ \text{prediction},\ Y:\ \text{ground truth})$$ The Dice loss is set to $$$1-DICE(X,Y)$$$, so that training maximizes the Dice index.

Results

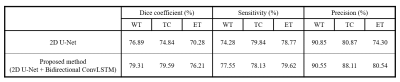
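The BraTS ranking used in this section scores three nested sub-regions with the Dice coefficient defined in the Methods. The sketch below derives the WT, TC, and ET masks from a multiclass label map and scores each one. It assumes the standard BraTS label convention (0 background, 1 NCR/NET, 2 ED, 4 ET), which the abstract does not state, and it scores an empty-versus-empty pair as 1.0, which is only one of several conventions in use. `REGIONS`, `region_mask`, `dice`, and `evaluate` are hypothetical names.

```python
import numpy as np

# Assumed BraTS label convention: 0 background, 1 NCR/NET, 2 ED, 4 ET.
REGIONS = {
    "WT": (1, 2, 4),  # whole tumor: every tumor label
    "TC": (1, 4),     # tumor core: whole tumor minus edema
    "ET": (4,),       # enhancing tumor only
}


def region_mask(labels, region):
    """Binary mask of one nested sub-region taken from a multiclass label map."""
    return np.isin(labels, REGIONS[region])


def dice(pred, gt):
    """DICE(X, Y) = 2 * |X intersect Y| / (|X| + |Y|) on boolean masks."""
    inter = np.logical_and(pred, gt).sum()
    denom = pred.sum() + gt.sum()
    return 1.0 if denom == 0 else 2.0 * inter / denom


def evaluate(pred_labels, gt_labels):
    """Dice score per sub-region, as used for the BraTS ranking."""
    return {r: float(dice(region_mask(pred_labels, r), region_mask(gt_labels, r)))
            for r in REGIONS}


gt = np.array([[0, 2, 2],
               [0, 1, 4],
               [0, 0, 4]])
pred = np.array([[0, 2, 2],
                 [0, 4, 4],
                 [0, 0, 0]])
print(evaluate(pred, gt))  # WT ~ 0.889, TC = 0.8, ET = 0.5
```

Because the regions are nested, a single mislabeled voxel (here the NCR/NET voxel predicted as ET) leaves WT and TC untouched but lowers the ET score, which is why the three scores are reported separately.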

We evaluated the 2D U-Net and the proposed method using the Dice coefficient, sensitivity, and precision (Table 1). The BraTS 2018 challenge ranked participants by the Dice scores of the whole tumor (WT), tumor core (TC), and enhancing tumor (ET). WT is the entire glioma region, and TC comprises the necrotic/non-enhancing tumor and the enhancing tumor, excluding edema. The proposed method improved performance on every sub-region compared with the 2D CNN, indicating that information along the z-axis is useful. As expected, the proposed method did not show the typical problem of 2D segmentation, in which the segmentation maps of adjacent slices differ markedly because each slice is segmented independently, as shown in Fig. 4.

Discussion & Conclusion
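The two error types discussed in this section can be made concrete with voxel counts: sensitivity is TP / (TP + FN) and precision is TP / (TP + FP). The sketch below computes both and, as one hypothetical way to quantify agreement between neighboring segmentation maps, the mean Dice between consecutive slices of a predicted mask. The abstract does not say how that correlation was measured, so `adjacent_slice_dice` is an illustrative assumption, not the authors' metric.

```python
import numpy as np


def sensitivity(pred, gt):
    """TP / (TP + FN): low values mean many missed tumor voxels."""
    pred, gt = np.asarray(pred, bool), np.asarray(gt, bool)
    tp = np.logical_and(pred, gt).sum()
    fn = np.logical_and(~pred, gt).sum()
    return float(tp / (tp + fn)) if tp + fn else float("nan")


def precision(pred, gt):
    """TP / (TP + FP): low values mean leakage into non-tumor voxels."""
    pred, gt = np.asarray(pred, bool), np.asarray(gt, bool)
    tp = np.logical_and(pred, gt).sum()
    fp = np.logical_and(pred, ~gt).sum()
    return float(tp / (tp + fp)) if tp + fp else float("nan")


def adjacent_slice_dice(mask):
    """Mean Dice between slices z and z+1 of a (Z, H, W) mask (hypothetical consistency proxy).

    Pairs in which both slices are empty are skipped.
    """
    mask = np.asarray(mask, bool)
    scores = []
    for a, b in zip(mask[:-1], mask[1:]):
        denom = a.sum() + b.sum()
        if denom:
            scores.append(2.0 * np.logical_and(a, b).sum() / denom)
    return float(np.mean(scores)) if scores else float("nan")


# Under-segmentation example: the prediction covers 6 of 10 tumor voxels and nothing else.
gt = np.zeros((4, 5), bool)
gt[:2, :] = True
pred = np.zeros_like(gt)
pred[0, :] = True
pred[1, 0] = True
print(sensitivity(pred, gt), precision(pred, gt))  # 0.6 1.0
```

In this toy case precision exceeds sensitivity because every error is a missed voxel and none is a leak, the same pattern used in this section to interpret Table 1.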

In the proposed method, recurrent layers preserve the features extracted from previous slices, and these features in turn inform the segmentation map of the slice currently being processed. This addresses the main limitation of 2D CNNs, which segment each slice in isolation. By leveraging the memory in the ConvLSTM cell state, the correlation between adjacent segmentation maps increased substantially. Sensitivity and precision are statistical measures of the performance of a binary classifier: low sensitivity means that the classifier misses many positive voxels, whereas low precision means that it over-segments, labeling too many voxels as positive. Because precision is higher than sensitivity in Table 1, we infer that missed voxels are more common than leakage. In conclusion, we proposed a 2D U-Net with additional ConvLSTM layers, used in a cascade that divides the multiclass segmentation task into several binary segmentation tasks. We showed that 3D volumetric MR images can also be processed via recurrent layers.

Acknowledgements

This research was supported by an Institute for Information & communications Technology Promotion (IITP) grant funded by the Korea government (MSIT) (No. 2017-0-01778) and by the Technology Innovation Program (#10076675) funded by the Ministry of Trade, Industry & Energy (MOTIE, Korea).

References

1. Menze, B. H., Jakab, A., Bauer, S., Kalpathy-Cramer, J., Farahani, K., Kirby, J., ... & Lanczi, L. (2014). The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Transactions on Medical Imaging, 34(10), 1993-2024.

2. Shi, X., Chen, Z., Wang, H., Yeung, D. Y., Wong, W. K., & Woo, W. C. (2015). Convolutional LSTM network: A machine learning approach for precipitation nowcasting. In Advances in Neural Information Processing Systems (pp. 802-810).

3. Ronneberger, O., Fischer, P., & Brox, T. (2015, October). U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention (pp. 234-241). Springer, Cham.

4. Çiçek, Ö., Abdulkadir, A., Lienkamp, S. S., Brox, T., & Ronneberger, O. (2016, October). 3D U-Net: learning dense volumetric segmentation from sparse annotation. In International Conference on Medical Image Computing and Computer-Assisted Intervention (pp. 424-432). Springer, Cham.

5. Salvador, A., Bellver, M., Campos, V., Baradad, M., Marques, F., Torres, J., & Giro-i-Nieto, X. (2017). Recurrent neural networks for semantic instance segmentation. arXiv preprint arXiv:1712.00617.

6. Zhang, D., Icke, I., Dogdas, B., Parimal, S., Sampath, S., Forbes, J., ... & Chen, A. (2018, April). A multi-level convolutional LSTM model for the segmentation of left ventricle myocardium in infarcted porcine cine MR images. In 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018) (pp. 470-473). IEEE.

7. Nabavi, S. S., Rochan, M., & Wang, Y. (2018, July). Future semantic segmentation with convolutional LSTM. In BMVC.

8. Azad, R., Asadi-Aghbolaghi, M., Fathy, M., & Escalera, S. (2019). Bi-Directional ConvLSTM U-Net with Densley Connected Convolutions. In Proceedings of the IEEE International Conference on Computer Vision Workshops.

9. Alom, M. Z., Hasan, M., Yakopcic, C., Taha, T. M., & Asari, V. K. (2018). Recurrent residual convolutional neural network based on U-Net (R2U-Net) for medical image segmentation. arXiv preprint arXiv:1802.06955.

10. Visin, F., Ciccone, M., Romero, A., Kastner, K., Cho, K., Bengio, Y., ... & Courville, A. (2016). ReSeg: A recurrent neural network-based model for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (pp. 41-48).

11. Li, R., Li, K., Kuo, Y. C., Shu, M., Qi, X., Shen, X., & Jia, J. (2018). Referring image segmentation via recurrent refinement networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 5745-5753).

12. Gao, Y., Phillips, J. M., Zheng, Y., Min, R., Fletcher, P. T., & Gerig, G. (2018, April). Fully convolutional structured LSTM networks for joint 4D medical image segmentation. In 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018) (pp. 1104-1108). IEEE.

13. Chen, J., Yang, L., Zhang, Y., Alber, M., & Chen, D. Z. (2016). Combining fully convolutional and recurrent neural networks for 3D biomedical image segmentation. In Advances in Neural Information Processing Systems (pp. 3036-3044).

Figures