3484

Domain adaptation for prostate lesion segmentation on VERDICT-MRI

1Centre of Medical Imaging Computing, University College London, London, United Kingdom, 2Department of Computer Science, University College London, London, United Kingdom, 3Department of Radiology, UCLH NHS Foundation Trust, University College London, London, United Kingdom, 4Division of Surgery & Interventional Science, University College London, London, United Kingdom, 5Centre for Medical Imaging, Division of Medicine, University College London, London, United Kingdom

Synopsis

The successful adoption of convolutional neural networks (CNNs) for improved diagnosis can be hindered for pathologies and clinical settings where the amount of labelled training data is limited. In such cases, domain adaptation provides a viable alternative. In this work we propose domain adaptation to enhance the performance of prostate lesion segmentation on VERDICT-MRI utilising diffusion weighted (DW)-MRI data from multi-parametric (mp)-MRI acquisitions. Experimental results show that domain adaptation significantly improves the segmentation performance on VERDICT-MRI.

Introduction

Convolutional neural networks (CNNs) have brought advances in many recognition tasks in medical imaging. However, their performance relies on two conditions: i) a significant amount of labelled training data is available, and ii) the training and test data belong to the same image domain. Satisfying both conditions is challenging in biomedical applications because of the cost of the human labour and expertise required to produce ground truth labels, and because of variations in image acquisition. In this work we tackle this problem for prostate cancer diagnosis with an advanced diffusion-weighted (DW) magnetic resonance imaging (MRI) method called VERDICT. VERDICT-MRI is a non-invasive imaging technique for cancer microstructure characterisation [1, 2, 3]. The method has recently been in clinical trial to supplement standard multi-parametric (mp)-MRI for prostate cancer diagnosis [4]. Compared to the naive DW-MRI from mp-MRI, VERDICT-MRI has a richer protocol to probe the underlying microstructure and reveal changes in tissue features similar to histology. However, the limited amount of available labelled training data does not allow the training of robust deep neural networks that could directly exploit the information in the raw VERDICT-MRI. When labelled training data are limited, domain adaptation (DA) provides a viable solution. In this work, we investigate the use of DA for lesion segmentation on raw VERDICT-MRI data. Specifically, we use residual adapters (RAs) [5] and DW-MRI from mp-MRI to train a robust network for lesion segmentation on VERDICT-MRI.

Methods

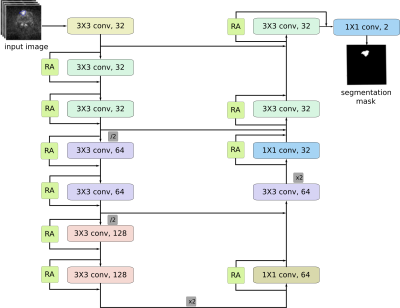

Segmentation architecture

The main component of our framework is an encoder-decoder network for lesion segmentation [6, 7]. Given an input image $$$\mathbf{X}\in\mathbb R^{H\times W\times C}$$$, where $$$C$$$ is the number of input channels, the network provides a segmentation mask $$$\widehat{\mathbf{Y}}\in\mathcal Y^{H\times W}$$$, where $$$\mathcal{Y}=\{0, 1\}$$$, indicating the class of each pixel in the image. We train the network using as objective function a soft generalisation of the Dice score proposed in [8], expressed as

$$\mathcal{L}_{DSC} = \frac{2\sum_{k=1}^{K} p_k g_k}{\sum_{k=1}^{K} p_k^2 + \sum_{k=1}^{K} g_k^2},$$ where $$$K = H\times W$$$ is the number of voxels in the input image, $$$p_k\in[0,1]$$$ is the probability of voxel $$$k$$$ belonging to class $$$1$$$ and $$$g_k\in\{0,1\}$$$ is the ground truth label of voxel $$$k$$$.
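As an illustration of the soft Dice expression above, the following is a minimal NumPy sketch rather than the implementation used in this work. The function name `soft_dice` and the small constant `eps` (added to avoid division by zero on empty masks) are assumptions introduced here.

```python
import numpy as np

def soft_dice(p, g, eps=1e-8):
    """Soft Dice score between probabilities p in [0, 1] and binary labels g.

    Both inputs are flattened, so any (H, W) array works.
    `eps` is an assumption of this sketch, not part of the formula.
    """
    p = np.asarray(p, dtype=float).ravel()
    g = np.asarray(g, dtype=float).ravel()
    numerator = 2.0 * np.sum(p * g)
    denominator = np.sum(p ** 2) + np.sum(g ** 2)
    return numerator / (denominator + eps)

# Toy 2x2 example: the prediction agrees with the ground truth.
prediction = np.array([[1.0, 0.0], [0.0, 1.0]])
ground_truth = np.array([[1, 0], [0, 1]])
score = soft_dice(prediction, ground_truth)
```

The score approaches 1 for perfect overlap and 0 for no overlap. With a gradient-based optimiser that minimises, one common choice is to minimise `1 - soft_dice(p, g)`; whether that form was used here is not stated.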

Residual adapters

Let $$$\phi_l$$$ be a convolutional layer in the source domain network and $$$\mathbf F_l\in\mathbb R^{k\times k\times C_i\times C_o}$$$ be the set of filters for that layer, where $$$k\times k$$$ is the kernel size and $$$C_i$$$, $$$C_o$$$ are the numbers of input and output feature channels, respectively. Let also $$$\mathbf G_l\in\mathbb R^{1\times1 \times C_i \times C_o}$$$ be a set of residual adapter filters installed in parallel with the existing set of filters $$$\mathbf F_l$$$. Given an input tensor $$$\mathbf x_l \in \mathbb R^{H \times W \times C_i}$$$, the output $$$\mathbf y_l \in \mathbb R^{H\times W\times C_o}$$$ of layer $$$\phi_l$$$ is given by $$\mathbf{y}_l= \mathbf{F}_l*\mathbf{x}_l+\mathbf{G}_l*\mathbf{x}_l.$$

Introducing RAs in the pre-trained network keeps most parameters unchanged, while the new unit introduces a small modification that can effectively compensate for changes in the feature statistics.
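The parallel adapter can be sketched in a few lines of NumPy. This is an illustrative forward pass only, under assumptions not stated in the abstract: stride 1, zero padding, odd kernel size, no bias, and adapter filters initialised to zero. The helper names `conv2d_same` and `adapted_layer` are hypothetical.

```python
import numpy as np

def conv2d_same(x, filters):
    """Stride-1, zero-padded 2D convolution (cross-correlation, as in CNNs).

    x: (H, W, C_i); filters: (k, k, C_i, C_o) with odd k. Returns (H, W, C_o).
    """
    k = filters.shape[0]
    pad = k // 2
    height, width, _ = x.shape
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    y = np.zeros((height, width, filters.shape[3]))
    for i in range(k):
        for j in range(k):
            # Each kernel tap is a shifted view of the input times a C_i x C_o matrix.
            y += xp[i:i + height, j:j + width, :] @ filters[i, j]
    return y

def adapted_layer(x, F, G):
    """y_l = F_l * x_l + G_l * x_l, with G a 1x1 residual adapter."""
    return conv2d_same(x, F) + conv2d_same(x, G)

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 8, 4))       # H = W = 8, C_i = 4 (toy sizes)
F = rng.normal(size=(3, 3, 4, 6))    # stand-in for pre-trained 3x3 filters
G = np.zeros((1, 1, 4, 6))           # adapter initialised at zero (assumption)
y = adapted_layer(x, F, G)
```

With `G` at zero the layer reproduces the pre-trained output exactly, so adaptation starts from the source-domain network. Under the assumptions above, the adapted layer is also equivalent to a single convolution in which `G` is added to the centre tap of `F`.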

Materials

VERDICT-MRI

We use VERDICT-MRI data from $$$60$$$ men. We acquire VERDICT-MRI images with a pulsed-gradient spin-echo (PGSE) sequence using an optimised imaging protocol for VERDICT prostate characterisation with $$$5$$$ b-values ($$$90-3000\ \rm{s/mm^2}$$$), in $$$3$$$ orthogonal directions, on a $$$3$$$T scanner [10]. We also acquire images with $$$b=0\ \rm{s/mm^2}$$$ before each b-value acquisition. The VERDICT-MRI data were registered using rigid registration [11]. A dedicated radiologist contoured the lesions (Likert score of $$$3$$$ and higher) on VERDICT-MRI using mp-MRI for guidance.

DW-MRI from mp-MRI acquisitions

We use DW-MRI data from the ProstateX challenge dataset, whose training set consists of mp-MRI data acquired from $$$204$$$ patients [12]. The DW-MRI data were acquired with a single-shot echo planar imaging sequence with diffusion encoding gradients in three directions. Three b-values were acquired; the apparent diffusion coefficient (ADC) map and a b-value image at $$$b=1400\ \rm{s/mm^2}$$$ were subsequently calculated by the scanner software. We use DW-MRI data from $$$114$$$ of these patients. Since the ProstateX dataset provides only the position of each lesion, a radiologist manually annotated the lesions on the ADC map using the provided position as reference.
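The scanner software's exact computation is not described here. As a hedged illustration, a common way to obtain an ADC value and a calculated high-b image from a few b-values is a log-linear fit of the mono-exponential model $$$S(b) = S_0 e^{-b\,\mathrm{ADC}}$$$. The sketch below assumes this model; the b-values and signal values are hypothetical and chosen only for the example.

```python
import numpy as np

def fit_adc(b_values, signals):
    """Least-squares fit of ln S(b) = ln S0 - b * ADC for a single voxel.

    Returns (adc, s0). Assumes strictly positive signals.
    """
    b = np.asarray(b_values, dtype=float)
    log_s = np.log(np.asarray(signals, dtype=float))
    slope, intercept = np.polyfit(b, log_s, 1)  # highest degree first
    return -slope, np.exp(intercept)

def calculated_signal(s0, adc, b):
    """Extrapolate the mono-exponential model to a b-value that was not acquired."""
    return s0 * np.exp(-b * adc)

# Hypothetical voxel: three acquired b-values (s/mm^2) and noise-free signals.
b_acquired = [50.0, 400.0, 800.0]
true_s0, true_adc = 1000.0, 1.0e-3  # ADC in mm^2/s
signals = [true_s0 * np.exp(-b * true_adc) for b in b_acquired]

adc, s0 = fit_adc(b_acquired, signals)
s_b1400 = calculated_signal(s0, adc, 1400.0)
```

Applied voxel by voxel, this gives an ADC map and a calculated $$$b=1400\ \rm{s/mm^2}$$$ image of the kind used as source-domain input.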

Results

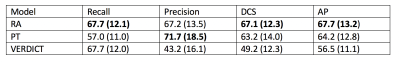

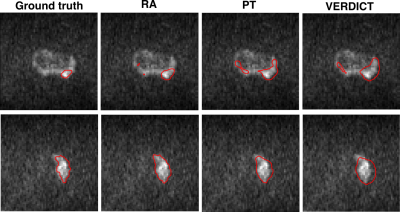

We evaluate the performance of RAs against two baselines. The experiments we perform are described below.

- Residual adapters (RA): We introduce the RAs in the pre-trained network and update only the RAs using VERDICT-MRI data.

- Pre-trained network (PT): We perform standard fine-tuning of the pre-trained network using VERDICT-MRI data.

- VERDICT-MRI data (VERDICT): We train the encoder-decoder network from scratch in the target domain.
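To make the difference between the three regimes concrete, the sketch below counts how many convolutional weights each regime updates. It is illustrative only: the layer shapes are hypothetical, biases and normalisation parameters are ignored, and the function name `count_trainable` is introduced for this example.

```python
def count_trainable(layers, regime):
    """Count convolutional weights updated on VERDICT-MRI data.

    layers: list of (k, c_in, c_out) tuples, one per convolutional layer.
    regime: "RA" (only 1x1 adapters), "PT" (fine-tune all pre-trained filters)
            or "VERDICT" (train all filters from scratch).
    """
    total = 0
    for k, c_in, c_out in layers:
        base_filters = k * k * c_in * c_out   # F_l
        adapter_filters = c_in * c_out        # G_l (1x1)
        if regime == "RA":
            total += adapter_filters
        elif regime in ("PT", "VERDICT"):
            total += base_filters
        else:
            raise ValueError(f"unknown regime: {regime}")
    return total

# Hypothetical two-layer example with 3x3 kernels.
layers = [(3, 64, 64), (3, 64, 128)]
n_ra = count_trainable(layers, "RA")
n_pt = count_trainable(layers, "PT")
```

For $$$3\times3$$$ kernels the RA regime updates one ninth as many filter weights as PT or VERDICT. PT and VERDICT update the same number of weights and differ only in initialisation (pre-trained versus from scratch).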

Conclusion

In this work we employed DA to leverage labelled DW-MRI data from mp-MRI acquisitions to improve prostate lesion segmentation on VERDICT-MRI. Experimental results indicate that introducing RAs in a network pre-trained in the source domain significantly improves the performance in the target domain.

Acknowledgements

This research is funded by EPSRC grant EP/N021967/1. The Titan Xp used for this research was donated by the NVIDIA Corporation.

References

1. Panagiotaki, E., et al.: Noninvasive quantification of solid tumor microstructure using VERDICT-MRI. Cancer Research 74, 7, 2014.

2. Panagiotaki, E., et al.: Microstructural characterization of normal and malignant human prostate tissue with vascular, extracellular, and restricted diffusion for cytometry in tumours magnetic resonance imaging. Investigative Radiology 50, 4, 2015.

3. Johnston, E.W., et al.: VERDICT MRI for prostate cancer: Intracellular volume fraction versus apparent diffusion coefficient. Radiology 291, 2, 2019.

4. Johnston, E.W., et al.: INNOVATE: A prospective cohort study combining serum and urinary biomarkers with novel diffusion-weighted magnetic resonance imaging for the prediction and characterization of prostate cancer. BMC Cancer 16, 816, 2016.

5. Rebuffi, S.A., et al.: Efficient parametrization of multi-domain deep neural networks. In: CVPR, 2018.

6. Badrinarayanan, V., et al.: Segnet: A deep convolutional encoder-decoder architecture for image segmentation. TPAMI, 2017.

7. Chen, L.C., et al.: Encoder-decoder with atrous separable convolution for semantic image segmentation. In: ECCV, 2018.

8. Milletari, F., et al.: V-net: Fully convolutional neural networks for volumetric medical image segmentation. In: 3DV, 2016.

9. He, K., et al.: Deep residual learning for image recognition. In: CVPR, 2016.

10. Panagiotaki, E., et al.: Optimised VERDICT MRI protocol for prostate cancer characterisation. In: ISMRM, 2015.

11. Ourselin, S., et al.: Reconstructing a 3D structure from serial histological sections. IVC 19, 1, 2001.

12. Litjens, G., et al.: Computer-aided detection of prostate cancer in MRI. TMI 33, 5, 2014.

13. Paszke, A., et al.: Automatic differentiation in pytorch. In: Autodiff Workshop, NIPS, 2017.

14. Srivastava, N., et al.: Dropout: A simple way to prevent neural networks from overfitting. JMLR 15, 2014.
