3480

Retrospective Artifact Correction of Pediatric MRI via Disentangled Cycle-Consistency Adversarial Networks

¹Biomedical Research Imaging Center, University of North Carolina at Chapel Hill, Chapel Hill, NC, United States, ²University of North Carolina at Chapel Hill, Chapel Hill, NC, United States

Synopsis

Retrospective artifact removal using supervised learning requires explicitly generating artifact-corrupted images, which is impractical given the wide variety of potential artifacts. Using unsupervised learning, we show that artifacts can be disentangled effectively from artifact-corrupted images to recover their artifact-free counterparts, without requiring explicit artifact generation.

Purpose

Structural magnetic resonance imaging (sMRI) is highly susceptible to motion artifacts, which can be difficult to avoid, especially in pediatric subjects¹. Deep learning retrospective artifact correction (RAC)² can be employed to improve image quality. However, acquiring a large amount of paired data, i.e., images with and without artifacts, for supervised training is impractical since generating data for a wide range of artifacts is challenging. In this abstract, we demonstrate that RAC can be carried out effectively via a cycle-consistency adversarial network that is trained with unpaired artifact-free and artifact-corrupted images.

Method

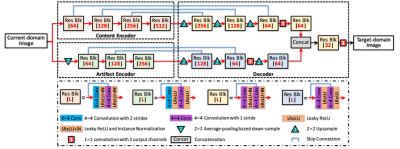

A. Data Preparation: From T1- and T2-weighted MR volumes of pediatric subjects from birth to age six, 20 artifact-free and 20 artifact-corrupted volumes were selected for training and 5 artifact-corrupted volumes were selected for testing. For the T1-weighted data, 1620, 1600, and 425 axial slices were extracted, respectively, from the 20 artifact-free, 20 artifact-corrupted, and 5 artifact-corrupted volumes. For the T2-weighted data, 1520, 1550, and 425 axial slices were extracted, respectively, from the 20 artifact-free, 20 artifact-corrupted, and 5 artifact-corrupted volumes.

B. Network Architecture: We consider two image domains: artifact-free and artifact-corrupted. To learn these domains from the data, similar to CycleGAN³, we employ two auto-encoders to learn a cycle translation (Fig. 1) that translates images forward and backward between the two domains. During training, two PatchGAN⁴ discriminators are used to distinguish between translated and real images in each domain. Each auto-encoder consists of two encoders to disentangle content and artifact information (Fig. 2). For complete disentanglement of content and artifact, we propose a content-swapping mechanism where the translated image in each domain is constructed with the content information of the opposite domain. We also enforce that when the input image is artifact-free, the output is unaltered, with no removal of image details.
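The content-swapping data flow described in B can be illustrated with a toy sketch. This is not the network from the abstract: the "images" are short 1-D lists, the artifact is assumed to be an alternating stripe, and the function names (`encode_content`, `encode_artifact`, `decode`, `cycle_with_content_swap`) and the exact wiring of encoders to decoders are hypothetical choices made for illustration only.

```python
from typing import List, Optional, Tuple

Image = List[float]  # toy 1-D "image"; assumed to have an even number of pixels


def encode_content(x: Image) -> Image:
    """Toy content encoder (assumption): replace each pixel pair by its mean."""
    out: Image = []
    for i in range(0, len(x), 2):
        mean = (x[i] + x[i + 1]) / 2.0
        out.extend([mean, mean])
    return out


def encode_artifact(x: Image) -> Image:
    """Toy artifact encoder (assumption): the residual the content code misses."""
    return [xi - ci for xi, ci in zip(x, encode_content(x))]


def decode(content: Image, artifact: Optional[Image] = None) -> Image:
    """Toy decoder: content alone yields an artifact-free image;
    content plus an artifact code yields an artifact-corrupted image."""
    if artifact is None:
        return list(content)
    return [c + a for c, a in zip(content, artifact)]


def cycle_with_content_swap(
    x_cor: Image, x_free: Image
) -> Tuple[Image, Image, Image, Image]:
    """Forward and backward translation with swapped content codes."""
    content_cor = encode_content(x_cor)
    artifact_cor = encode_artifact(x_cor)
    content_free = encode_content(x_free)

    # Forward: each translated image uses the content of the OPPOSITE domain.
    fake_free = decode(content_cor)                # artifact-free domain
    fake_cor = decode(content_free, artifact_cor)  # artifact-corrupted domain

    # Backward: swap content again to reconstruct the original inputs.
    rec_cor = decode(encode_content(fake_free), encode_artifact(fake_cor))
    rec_free = decode(encode_content(fake_cor))
    return fake_free, fake_cor, rec_cor, rec_free


# Unpaired toy inputs: different content, only one of them carries an artifact.
x_free = [1.0, 1.0, 3.0, 3.0]
x_cor = [2.5, 1.5, 4.5, 3.5]  # content [2, 2, 4, 4] plus stripe [+0.5, -0.5, ...]

fake_free, fake_cor, rec_cor, rec_free = cycle_with_content_swap(x_cor, x_free)
print(fake_free)  # [2.0, 2.0, 4.0, 4.0]
print(fake_cor)   # [1.5, 0.5, 3.5, 2.5]
```

In this toy setting the cycle is exact (`rec_cor == x_cor`, `rec_free == x_free`), and passing an artifact-free image through `decode(encode_content(...))` leaves it unchanged, which mirrors the identity constraint in B. In a trained network these properties would hold only approximately and would be encouraged by the losses rather than guaranteed by construction.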

C. Loss Functions: In addition to pixel and perceptual cycle-consistency losses, we propose a multi-scale content consistency loss based on pixel-level, low-level, and high-level content features between images. For adversarial learning, we employ two least-squares adversarial losses. To maintain the quality of artifact-free inputs, we use a pixel-wise consistency loss to enforce identity translation mappings.
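As a rough illustration of the loss terms listed in C, the sketch below writes them out on flat lists of numbers. It makes several assumptions that the abstract does not state: the pixel-wise terms use a mean absolute (L1) difference, the least-squares adversarial loss uses the common 0.5-scaled form with target labels 1 (real) and 0 (translated), and the per-scale `weights` are placeholders. The perceptual cycle-consistency loss is omitted because it depends on a pretrained feature extractor. All function names are hypothetical.

```python
from typing import Sequence


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two equally sized flat arrays."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def lsgan_discriminator_loss(
    d_real: Sequence[float], d_fake: Sequence[float]
) -> float:
    """Least-squares loss: push scores of real patches to 1 and fake to 0."""
    real_term = sum((s - 1.0) ** 2 for s in d_real) / len(d_real)
    fake_term = sum(s ** 2 for s in d_fake) / len(d_fake)
    return 0.5 * (real_term + fake_term)


def lsgan_generator_loss(d_fake: Sequence[float]) -> float:
    """Least-squares loss: the generator wants fake patches scored as 1."""
    return 0.5 * sum((s - 1.0) ** 2 for s in d_fake) / len(d_fake)


def multiscale_content_loss(
    feats_a: Sequence[Sequence[float]],
    feats_b: Sequence[Sequence[float]],
    weights: Sequence[float] = (1.0, 1.0, 1.0),  # assumed, not from the abstract
) -> float:
    """Weighted sum of L1 distances over (pixel, low-level, high-level) features."""
    return sum(w * l1(fa, fb) for w, fa, fb in zip(weights, feats_a, feats_b))


def identity_loss(x_free: Sequence[float], output: Sequence[float]) -> float:
    """Pixel-wise consistency: an artifact-free input should come out unchanged."""
    return l1(output, x_free)


def cycle_pixel_loss(x: Sequence[float], reconstructed: Sequence[float]) -> float:
    """Pixel cycle-consistency between an input and its cycle reconstruction."""
    return l1(reconstructed, x)
```

A training objective would typically be a weighted sum of these terms evaluated for both translation directions, with one discriminator loss per domain; the relative weighting is a design choice that the abstract does not specify.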

Results

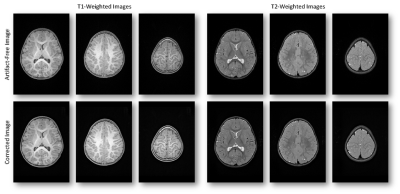

The correction results for T1- and T2-weighted images with different levels of artifacts are shown in Figs. 3 and 4, respectively. It can be observed that the artifacts are removed without introducing new artifacts. The quality-maintenance results for T1- and T2-weighted images are summarized in Fig. 5, from which we can observe that the corrected images are highly consistent with the input artifact-free images.

Conclusions

We have demonstrated that our disentangled cycle-consistency adversarial network achieves remarkable efficacy in artifact correction and yields high-quality corrected images. Thanks to the unsupervised nature of our method, the artifacts do not need to be explicitly specified.

Summary of Main Findings

Our network reduces artifacts in MR images without specifying the nature of the artifacts. This potentially allows image imperfections such as noise, streaking, and ghosting to be removed without explicitly generating them for supervised training.

Acknowledgements

This work was supported in part by NIH grants (EB006733 and 1U01MH110274).

References

1. Zhuo, J. & Gullapalli, R. P. MR artifacts, safety, and quality control. RadioGraphics 26, 275–297 (2006).

2. Zaitsev, M., Maclaren, J. & Herbst, M. Motion artifacts in MRI: A complex problem with many partial solutions. Journal of Magnetic Resonance Imaging 42, 887–901 (2015).

3. Zhu, J.-Y., Park, T., Isola, P. & Efros, A. A. Unpaired image-to-image translation using cycle-consistent adversarial networks. IEEE International Conference on Computer Vision (ICCV) (2017).

4. Isola, P., Zhu, J.-Y., Zhou, T. & Efros, A. A. Image-to-image translation with conditional adversarial networks. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017).