3364

A deep network for continuous motion detection during MRI scanning

¹GE Global Research Center, Niskayuna, NY, United States; ²GE Global Research Center, Herzliya, Israel

Synopsis

We introduce ISHMAPS, a method for detecting and adapting to patient motion in real time during an MR scan. The method uses a neural network trained on motion-corrupted data to detect and score motion using as little as 6% of k-space. Once motion is detected, multiple separate complex sub-images from different motion states can be reconstructed and combined into a motion-free image, or the scan can adaptively re-acquire sections of k-space taken before motion occurred.

Purpose

Patient motion is one of the biggest sources of inefficiency in clinical MRI, often requiring re-scans or even second visits by the patient [1]. Most current approaches to motion correction require either dedicated hardware for monitoring the motion (adding to cost and patient setup time) or navigator sequences (which take time away from the imaging sequence). We created a scoring system to train a convolutional network that detects motion in sub-images reconstructed from partial k-space, giving both the timing and the severity of motion while a scan is in progress. The ISHMAPS (Inter-Shot Motion Artifact Predictive Scoring) network was able to detect motion within a window of as little as 6% of k-space.

Methods

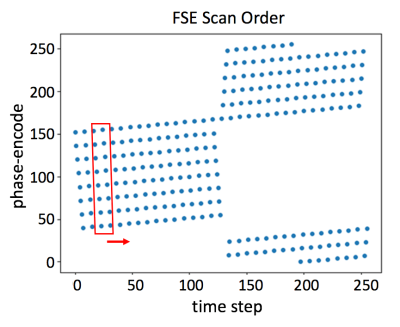

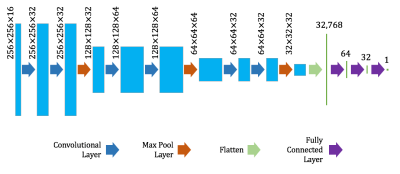

The ISHMAPS neural network was trained to continuously identify and score motion severity during an MRI scan. Various acquisition protocols were considered, including multi-shot fast spin echo (FSE) with echo-train length (ETL) ranging from 8 to 23, as exemplified in Fig. 1. Two neighboring shots, each comprising ETL k-space lines, were fed into the neural network to predict whether motion had occurred between the shots; the window was then slid by one shot to assess the next pairing.

The network was trained on a dataset of simulated in-plane rigid-body motion, generated from a series of motion-free head images. For each image, a second image was created by randomly translating (by up to 10 pixels in any direction) and/or rotating (by up to ±10 degrees about a center at the back of the head) the initial image. Both original and moved images were multiplied by coil sensitivity maps and Fourier transformed into k-space. K-space lines from the first shot of a pair were filled with pre-motion data, and those from the second shot with post-motion data. Zero filling the remaining lines and Fourier transforming back into the image domain created a multi-coil complex “sub-image” with motion artifacts (Fig. 2).

For each sub-image, the corresponding motion-free sub-image was created, and a motion-corruption score was calculated from the entropy of the difference between the two. This metric takes the normalized difference images, multiplies them by the log of the difference, and averages the result over all pixels and coils. The convolutional neural network shown in Fig. 3 was then trained to predict the motion-corruption score, with the cost function comparing the prediction to the labeled score. The ISHMAPS network was trained on 1713 datasets using random translations and rotations, all combinations of adjacent shot pairings, and a variety of ETLs. The network was validated and tested on 151 and 183 datasets, respectively.
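The sub-image simulation and scoring described above can be sketched in a few lines of NumPy. This is only an illustrative sketch under several assumptions that the abstract does not specify: the interleaved shot ordering in `shot_lines`, the normalization and sign convention in `motion_score`, the toy rectangular phantom, and the two synthetic coil maps are all hypothetical. Rotation is omitted, and the motion is a whole-pixel translation via `np.roll`, so that the example needs no interpolation.

```python
import numpy as np

AXES = (-2, -1)  # (phase-encode, readout) axes

def fft2c(x):
    """Centered 2D FFT over the last two axes (image -> k-space)."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(x, axes=AXES), axes=AXES), axes=AXES)

def ifft2c(k):
    """Centered 2D inverse FFT over the last two axes (k-space -> image)."""
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(k, axes=AXES), axes=AXES), axes=AXES)

def shot_lines(n_pe, etl, shot):
    """Assumed interleaved ordering: shot s samples lines s, s + n_shots, ..."""
    n_shots = n_pe // etl
    return np.arange(shot, n_pe, n_shots)[:etl]

def sub_image(img_first, img_second, coil_maps, etl, shot):
    """Multi-coil complex sub-image built from two neighboring shots.

    Lines of `shot` come from img_first (pre-motion), lines of `shot + 1`
    from img_second (post-motion); all other k-space lines are zero filled.
    """
    n_pe = img_first.shape[0]
    k_first = fft2c(coil_maps * img_first)    # shape: (n_coils, n_pe, n_ro)
    k_second = fft2c(coil_maps * img_second)
    k = np.zeros_like(k_first)
    rows_a = shot_lines(n_pe, etl, shot)
    rows_b = shot_lines(n_pe, etl, shot + 1)
    k[:, rows_a, :] = k_first[:, rows_a, :]
    k[:, rows_b, :] = k_second[:, rows_b, :]
    return ifft2c(k)

def motion_score(corrupted, clean, eps=1e-12):
    """Entropy-of-the-difference score, averaged over all pixels and coils.

    Assumption: the difference magnitude is scaled into [0, 1] by the sum of
    the two peak magnitudes, and the score is mean(d * log(1 / d)), so that
    identical sub-images score exactly zero.
    """
    diff = np.abs(corrupted - clean)
    scale = np.abs(corrupted).max() + np.abs(clean).max() + eps
    d = diff / scale
    safe = np.where(d > 0, d, 1.0)            # avoids log(0); those terms are 0
    return float(np.mean(d * np.log(1.0 / safe)))

# Toy example: rectangular "head", two smooth synthetic coil maps.
n = 64
img = np.zeros((n, n))
img[18:47, 24:40] = 1.0
yy, xx = np.mgrid[0:n, 0:n] / n
coil_maps = np.stack([1.0 - 0.5 * xx, 0.5 + 0.5 * yy])
moved = np.roll(img, shift=(5, 3), axis=(0, 1))   # in-plane translation

clean = sub_image(img, img, coil_maps, etl=8, shot=0)
corrupted = sub_image(img, moved, coil_maps, etl=8, shot=0)
print(motion_score(clean, clean), motion_score(corrupted, clean))
```

Sliding the window by one shot, as in the text, would amount to calling `sub_image` with `shot=1`, `shot=2`, and so on.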
The network was then used to generate continuous motion scores from a subject moving his head in specified patterns during scanning. Relatively artifact-free images were reconstructed using an iterative reconstruction algorithm similar to [2], with the motion model constrained using the motion timing information generated by ISHMAPS. In other cases, adaptive scanning was performed by selectively reacquiring regions of k-space after detecting motion events.

Results

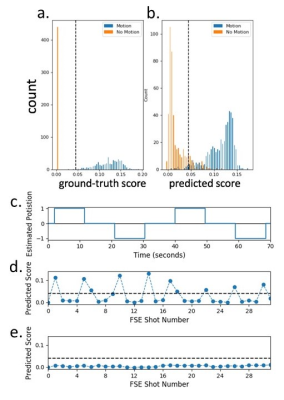

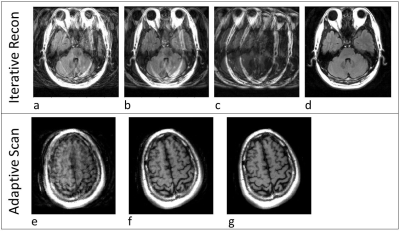

Figures 4a and 4b show the network predictions plotted against the ground-truth scores. An ROC curve generated for motion detection by this method had an area under the curve of 0.98. With an appropriately chosen threshold (dashed line, Fig. 4), the network prediction becomes a classifier that determines whether or not significant motion occurred in the sub-image. An example of volunteer head motion is shown in Fig. 4c, with the resulting continuous output of the network shown in Fig. 4d, and a motionless scan in Fig. 4e. Figure 5a-d shows an example of a motion-free image reconstructed using motion timings derived from the network. Figure 5e-g shows an example of adaptive scanning based on network detection of motion events during scanning.

Discussion
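To make the thresholding step concrete, the sketch below shows one way a stream of per-pair scores could be turned into detected motion events and a list of shots to reacquire, along with a rank-based estimate of the ROC area. It is an illustration only: the function names, the score values, the threshold of 0.2, and the policy of reacquiring every shot taken before the last detected event are hypothetical and are not taken from the ISHMAPS implementation.

```python
import numpy as np

def roc_auc(scores, labels):
    """Area under the ROC curve via the rank (Mann-Whitney) formulation:
    the fraction of (motion, no-motion) pairs ranked correctly, ties = 0.5."""
    s = np.asarray(scores, dtype=float)
    y = np.asarray(labels, dtype=bool)
    pos, neg = s[y], s[~y]
    diff = pos[:, None] - neg[None, :]
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size)

def detect_motion_events(pair_scores, threshold):
    """Entry i scores the pairing of shot i and shot i + 1; an entry above
    the threshold flags motion between those two shots."""
    scores = np.asarray(pair_scores, dtype=float)
    return np.flatnonzero(scores > threshold).tolist()

def shots_to_reacquire(events):
    """Hypothetical policy: reacquire every shot taken before the last
    detected motion event, so all data match the final head position."""
    if not events:
        return []
    return list(range(max(events) + 1))

# Illustrative scores for six shots (five sliding-window pairings).
pair_scores = [0.02, 0.03, 0.41, 0.05, 0.04]
events = detect_motion_events(pair_scores, threshold=0.2)
print(events, shots_to_reacquire(events))
```

A score-based output also leaves room for a second, higher threshold that would trigger a full restart instead of a partial reacquisition.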

Training a network to predict a motion score, rather than just to classify motion, enables a more nuanced approach to ameliorating the effects of motion. Because the network simultaneously determines if and when motion has occurred and how severe the resulting artifacts are, downstream algorithms can use this information to choose appropriate steps to reduce or remove the artifacts: reacquiring portions of k-space to compensate; combining multiple motion-free sub-images, created from regions acquired between detected motion events, using a neural network or similar reconstruction; or, in the case of severe motion, restarting the scan after instructing or sedating the patient.

Conclusion

We created a scoring system to train a convolutional network that detects motion artifacts in sub-images reconstructed from partial k-space, giving both the timing and the severity of motion while a scan is in progress. Using a score based on the entropy of the difference between a motion-corrupted and a ground-truth sub-image allows the network to predict the degree of motion corruption. The network was able to predict motion from a sub-image within 8 phase-encodes (3%) of the motion occurring.

References

1. J Andre, et al. Toward quantifying the prevalence, severity, and cost associated with patient motion during clinical MR examinations. JACR, 2015;12:689-695.

2. L Cordero-Grande, et al. Sensitivity encoding for aligned multishot magnetic resonance reconstruction. IEEE Trans Comput Imaging, 2016;2(3):266-280.
